Intel Gets the Jitters: Plans, Then Nixes, PCIe 4.0 Support on Comet Lake

One step forward, one step back.

The transition to 10nm doesn't appear to be Intel's only problem: According to multiple sources we spoke to at CES 2020, many upcoming Socket 1200 motherboards were designed to support PCIe 4.0, but Intel encountered problems implementing the feature with the Comet Lake chipset, so the boards will only support PCIe 3.0 signaling rates when they come to market.

Intel's difficulty transitioning to the 10nm manufacturing process has hindered its ability to move to newer architectures, but it has also led to slower transitions to other new technologies, like PCIe 4.0. That's proven to be a liability as AMD has plowed forward with a lineup of chips spanning from desktops to the data center that support the new interface, giving it an uncontested leadership position in I/O connectivity with twice the available bandwidth for attached devices.

Intel, on the other hand, remains mired in 14nm chips and a long string of Skylake derivatives. And while we've assumed the company wouldn't move to PCIe 4.0 until it adopted a new microarchitecture, several independent sources, who requested anonymity, told us that Intel intended to add support for the interface with the Comet Lake platform. As a result, most iterations of Socket 1200 motherboards currently have the necessary componentry, like redrivers and external clock generators, to enable the feature.

The PCIe 4.0 interface offers twice the bandwidth of PCIe 3.0, but it also comes with tighter signal integrity requirements. Unfortunately, Intel reportedly ran into untenable amounts of jitter with the chipset (we're told the Comet Lake processors themselves are fine), thus requiring cost-adding external clock generators to bring the interface into compliance. Either way, the issues reportedly led Intel to cancel PCIe 4.0 support on the Comet Lake platform.
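To put the "twice the bandwidth" figure in concrete terms, here is a rough back-of-the-envelope sketch in Python. It uses the published per-lane signaling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding both generations share. The function name is our own, and the result ignores packet and protocol overhead, so real-world throughput will land somewhat lower.

```python
# Per-lane signaling rates in GT/s (giga-transfers per second).
GT_PER_SEC = {"3.0": 8.0, "4.0": 16.0}

# Both generations use 128b/130b encoding: 128 payload bits per 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_per_s(gen: str, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s (protocol overhead ignored)."""
    bits_per_second = GT_PER_SEC[gen] * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9


if __name__ == "__main__":
    for lanes in (4, 16):  # x4 is typical for an NVMe SSD, x16 for a GPU
        for gen in ("3.0", "4.0"):
            bandwidth = link_bandwidth_gb_per_s(gen, lanes)
            print(f"PCIe {gen} x{lanes}: {bandwidth:.2f} GB/s")
```

By this estimate an x4 link tops out just under 4 GB/s on PCIe 3.0 and just under 8 GB/s on PCIe 4.0, which is why doubling the signaling rate matters most for devices that already push against the older ceiling.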

We're told that some motherboards could still come to market with the PCIe 4.0-enabling components in the hopes that Intel will allow the next-gen Rocket Lake processors, which will drop into the same socket, to support the feature on motherboards with the chipset. However, given that Intel isn't known for allowing full backward compatibility with previous-gen chipsets, that's up in the air.

Socket 1200 motherboards are already in the final stages of development, but it is possible that some vendors could nix support for the interface entirely to reduce costs, or adjust the value-centric portions of their product stack to remove the expensive components.

As expected, Comet Lake processors will come with up to ten cores and are largely a scaled-up version of Coffee Lake, but Intel also increased the recommended Tau duration (the amount of time the chip spends in PL2 boost states) from 28 seconds to 56 seconds. The chips also have a 127W PL1 (power limit 1) and a 250W PL2, which necessitate beefier power delivery subsystems that will result in higher-priced motherboards, particularly on the low end. You'll also have to pay for the PCIe 4.0 componentry on high-end motherboards, even though it doesn't appear the interface will actually be supported, at least in this generation.
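For a rough sense of what the longer Tau means for power delivery, the sketch below takes the simplified description above at face value: the chip holds PL2 for the full Tau window, then falls back to PL1. That is an assumption for illustration only. Actual boost behavior depends on a running power average, the workload, and the motherboard's settings, and the function name here is our own.

```python
PL1_WATTS = 127.0  # sustained power limit reported by our sources
PL2_WATTS = 250.0  # boost power limit reported by our sources


def boost_energy_joules(tau_seconds: float,
                        pl1: float = PL1_WATTS,
                        pl2: float = PL2_WATTS) -> float:
    """Extra energy drawn above PL1 if the chip holds PL2 for the whole Tau window."""
    return (pl2 - pl1) * tau_seconds


if __name__ == "__main__":
    for tau in (28, 56):
        extra = boost_energy_joules(tau)
        total = PL2_WATTS * tau
        print(f"Tau = {tau} s: {extra:.0f} J above PL1, {total:.0f} J total during boost")
```

Under this simplified model, doubling Tau doubles the energy the voltage regulators must deliver (and the cooler must dissipate) above the sustained limit during each boost window, which is the reasoning behind the expectation of pricier power delivery components.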

Due to Intel's standard policy of allowing motherboard vendors to adjust the Tau duration, we could see some lower-end Z490 motherboards adjusted to the previous 28-second duration in a bid to reduce the cost of power delivery components. And naturally, some lower-end boards could come without support for the PCIe 4.0 interface as an additional cost-saving measure. However, motherboard vendors are already in the final stages of development and have other projects inbound, like AMD's B550 motherboards that we're told will release at Computex 2020. So we'll likely see the circuitry for PCIe 4.0 support remain on the majority of Socket 1200 motherboards, meaning you'll have to pay extra regardless of whether you'll ever be able to buy a CPU that you can drop in to support the extra bandwidth.

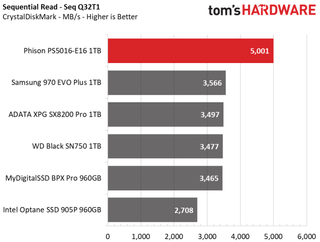

For now, Intel's challenges with the PCIe 4.0 interface appear unsolved, which unfortunately leads to slower industry adoption and development of the supporting components, like the Phison PCIe 4.0 SSD in the chart above. Next-gen SSDs that will saturate the interface with 7,000 MBps of throughput are already on the way to market, but Intel's customers won't have access to those types of speeds, not to mention the full benefits of the faster interface with PCIe 4.0 GPUs.

According to our sources, the Comet Lake platform supports DDR4-2933 and Thermal Velocity Boost, and it comes with UHD 630 graphics (though we could see a rebrand to UHD 730). Apparently the difficulties with the PCIe interface, among other unspecified challenges, led Intel to delay Comet Lake from its original planned launch at CES 2020. Comet Lake is now scheduled to launch in mid-April, so we'll have to wait until then to see the impact of the changes.

Rocket Lake is currently projected to land at CES 2021.

Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

jimmysmitty Reply

I get the craze for more raw bandwidth especially with SSDs but can we also focus on IOPS? We need higher and more consistent IOPS much like Intel's Optane 905P offers for a smoother experience. You can throw as much raw bandwidth with PCIe 4.0 x16 as you can but file sizes haven't changed and IOPS seem to be steady but still drop at certain QDs drastically.

That said, for anything Intel it is best to wait. If you have anything Skylake variant don't waste the money on most anything unless the boost is worth it overall for the work you plan to do. -

DieselDrax Reply

jimmysmitty said: I get the craze for more raw bandwidth especially with SSDs but can we also focus on IOPS? We need higher and more consistent IOPS much like Intel's Optane 905P offers for a smoother experience. You can throw as much raw bandwidth with PCIe 4.0 x16 as you can but file sizes haven't changed and IOPS seem to be steady but still drop at certain QDs drastically.

That said, for anything Intel it is best to wait. If you have anything Skylake variant don't waste the money on most anything unless the boost is worth it overall for the work you plan to do.

Miss this one?

https://www.tomshardware.com/news/adata-sage-ssd-unveiled -

jimmysmitty Reply

DieselDrax said: Miss this one?

https://www.tomshardware.com/news/adata-sage-ssd-unveiled

I did. However, the question is whether that is peak theoretical IOPS under certain tests (most likely sequential), and whether it will hold up or drop like most others.

IOPS can be pushed higher, but in a lot of cases those IOPS are only reached in very specific tasks. I just want to see something more like the Optane 905P, where everything is more consistent even if it's not the highest speed. -

DieselDrax Reply

jimmysmitty said: I did. However, the question is whether that is peak theoretical IOPS under certain tests (most likely sequential), and whether it will hold up or drop like most others.

IOPS can be pushed higher, but in a lot of cases those IOPS are only reached in very specific tasks. I just want to see something more like the Optane 905P, where everything is more consistent even if it's not the highest speed.

There is a whooooole lot at play, but sequential vs random IOPS and throughput can vary widely due to no fault of the SSD or its controller. To say that high, sustained IOPS are only possible with sequential I/O is a generalization that isn't exactly true and you'll find many folks that will prove it wrong.

This is where application and system/kernel optimization play a huge part. You may think "Jeez, this SSD in my system is SO SLOW when writing data. It's awful!" and the problem isn't even the SSD at all but rather the I/O scheduler used isn't ideal for the storage, the application doing the writes is very inefficient when doing write ops, etc.

Sure, there are some SSDs that do better than others with certain tasks, but in my experience that was largely with early, smaller SSDs. Recent improvements in SSDs, controllers, and OS/kernel-level efficiency when using SSDs have resulted in faster, more consistent performance in all aspects.

Are your writes page-aligned? Are your partitions aligned with page boundaries? Are you using an I/O scheduler that doesn't waste cycles trying to order writes before sending them to a storage controller that doesn't care (Think Linux CFQ vs deadline or noop)? Is your application the bottleneck due to bad/inefficient code when writing data?

Throughput and IOPS are great for comparing drives in general/generic ways, but unless your application and OS are extremely efficient and properly aligned to the storage you won't see the full potential of the SSD and it won't be the SSD's fault. Random vs sequential IOPS are largely inconsequential when it comes to actual SSD performance, IMO. Seeing a large difference in performance between random and sequential with a modern SSD raises some red flags and I would call the application and OS into question as incorrect/inefficient system setup could be triggering GC on the SSD more often than it should and if the SSD's GC isn't great then bad writes can expose that. You could argue that poor GC causing poor SSD performance is the fault of the SSD but someone else could just as easily argue that the app/system are set up incorrectly and the SSD is incorrectly being blamed as the problem.

Just my $0.02. -
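Two of the checks raised in the comment above, which I/O scheduler is active and whether a partition starts on an aligned boundary, can be sketched in a few lines of Python. On Linux, the scheduler list is exposed in `/sys/block/<device>/queue/scheduler` with the active entry in square brackets, and a partition's starting offset is exposed in `/sys/block/<device>/<partition>/start` in 512-byte units. The helper names and the 1 MiB default boundary are our own assumptions for illustration, and the sample strings stand in for real sysfs contents.

```python
import re


def active_scheduler(sysfs_text: str) -> str:
    """Return the bracketed (active) entry from a queue/scheduler listing."""
    match = re.search(r"\[([^\]]+)\]", sysfs_text)
    if match is None:
        raise ValueError(f"no active scheduler marked in: {sysfs_text!r}")
    return match.group(1)


def is_aligned(start_sector: int,
               boundary_bytes: int = 1024 * 1024,
               sector_bytes: int = 512) -> bool:
    """Check whether a partition's byte offset is a multiple of the boundary."""
    return (start_sector * sector_bytes) % boundary_bytes == 0


if __name__ == "__main__":
    # Sample listing in the format the kernel uses; not read from a real system.
    print(active_scheduler("noop deadline [cfq]"))
    # Sector 2048 is a common modern default; sector 63 is the legacy MS-DOS offset.
    print(is_aligned(2048))
    print(is_aligned(63))
```

The 1 MiB boundary is a conservative choice because it is a multiple of the page and erase-block sizes of most drives; pass a smaller `boundary_bytes` to test against a specific page size.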

jimmysmitty Reply

DieselDrax said: There is a whooooole lot at play, but sequential vs random IOPS and throughput can vary widely due to no fault of the SSD or its controller. To say that high, sustained IOPS are only possible with sequential I/O is a generalization that isn't exactly true and you'll find many folks that will prove it wrong.

This is where application and system/kernel optimization play a huge part. You may think "Jeez, this SSD in my system is SO SLOW when writing data. It's awful!" and the problem isn't even the SSD at all but rather the I/O scheduler used isn't ideal for the storage, the application doing the writes is very inefficient when doing write ops, etc.

Sure, there are some SSDs that do better than others with certain tasks, but in my experience that was largely with early, smaller SSDs. Recent improvements in SSDs, controllers, and OS/kernel-level efficiency when using SSDs have resulted in faster, more consistent performance in all aspects.

Are your writes page-aligned? Are your partitions aligned with page boundaries? Are you using an I/O scheduler that doesn't waste cycles trying to order writes before sending them to a storage controller that doesn't care (Think Linux CFQ vs deadline or noop)? Is your application the bottleneck due to bad/inefficient code when writing data?

Throughput and IOPS are great for comparing drives in general/generic ways, but unless your application and OS are extremely efficient and properly aligned to the storage you won't see the full potential of the SSD and it won't be the SSD's fault. Random vs sequential IOPS are largely inconsequential when it comes to actual SSD performance, IMO. Seeing a large difference in performance between random and sequential with a modern SSD raises some red flags and I would call the application and OS into question as incorrect/inefficient system setup could be triggering GC on the SSD more often than it should and if the SSD's GC isn't great then bad writes can expose that. You could argue that poor GC causing poor SSD performance is the fault of the SSD but someone else could just as easily argue that the app/system are set up incorrectly and the SSD is incorrectly being blamed as the problem.

Just my $0.02.

I don't disagree that there are more factors at play. OS, software and driver levels all can change performance.

Articles focus on MB/s, and gains there have taken storage from a massive bottleneck to merely one of the slowest parts of a system. I just want to see how a drive performs under different loads, not just some top-end number. -

alextheblue Reply

jimmysmitty said: I don't disagree that there are more factors at play. OS, software and driver levels all can change performance.

Articles focus on MB/s, and gains there have taken storage from a massive bottleneck to merely one of the slowest parts of a system. I just want to see how a drive performs under different loads, not just some top-end number.

That's why we have reviews. The PCIe 4.0 models out now have already seen improvements in IOPS as well as sequential speeds over older models. We'll have to see some reviews to know how much that affects real performance. When the next gen units hit (like the Sage), we also need testing to see if they suffer a performance hit in real-world applications when limited to PCIe 3.0.

That aside, this delay is really embarrassing for Intel. When Samsung releases a PCIe 4.0 SSD or two, a lot of people buying that hot new Intel platform will start grumbling. -

InvalidError Reply

jimmysmitty said: I did. However, the question is whether that is peak theoretical IOPS under certain tests (most likely sequential), and whether it will hold up or drop like most others.

Unless you are reading and writing from/to a virgin SSD, there really isn't such a thing as a truly sequential workload since the SSD's wear-leveling and caching algorithms will randomize the physical space over time. After a while, your neatly sequential user-space workload might just as well be small-medium size random IOs as far as the SSD's controller is concerned. That's why SSDs that have been in use and getting slower for a while can regain much of their original performance with a full-erase to reset the memory map.

If you want to know an SSD's worst-case sustainable IOPS, you'd have to look for benchmarks that show IOPS after something like one drive worth of random 4k writes and erasures after initially filling it most of the way. -

JayNor Reply

I see from other articles that the need for retimers on pcie4 motherboards is expected. I also recall AMD dropping support for existing motherboards when their pcie4 support was announced. Also, pcie4 is not supported on the Renoir chips. Was the need for expensive retimers the underlying issue in all these cases?

https://wccftech.com/amd-radeon-vega-20-gpu-xgmi-pcie-4-interconnect-linux-patch/ -

InvalidError Reply

JayNor said: I see from other articles that the need for retimers on pcie4 motherboards is expected. I also recall AMD dropping support for existing motherboards when their pcie4 support was announced.

PCIe4 worked fine on most 300-400 series motherboards with hard-wired PCIe/NVMe slots (no PCIe switches for x16/x8x8/x8x4x4 support) until AMD decided to kill it with an AGESA update. As for retimers/buffers, those are only necessary for slots that are further away from the CPU/chipset, whichever they originate from. -

bit_user Reply

jimmysmitty said: I get the craze for more raw bandwidth especially with SSDs but can we also focus on IOPS? We need higher and more consistent IOPS much like Intel's Optane 905P offers for a smoother experience. You can throw as much raw bandwidth with PCIe 4.0 x16 as you can but file sizes haven't changed and IOPS seem to be steady but still drop at certain QDs drastically.

Not enough attention is paid to low-queue depth performance, and this is where Optane is king. Everyone always touts these QD32 numbers, but those aren't relevant for the majority of desktop users.
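The link between queue depth, latency, and IOPS can be sketched with Little's law: sustained throughput is roughly the number of outstanding requests divided by the time each one takes. The latencies below are hypothetical round numbers chosen for illustration, not measurements of any drive, and the function name is our own.

```python
def iops_from_latency(queue_depth: int, latency_us: float) -> float:
    """Little's law: IOPS = outstanding requests / per-request latency."""
    return queue_depth * 1e6 / latency_us


if __name__ == "__main__":
    # Hypothetical per-request latencies in microseconds.
    drives = {"low-latency drive": 10.0, "typical NAND drive": 80.0}
    for name, latency_us in drives.items():
        qd1 = iops_from_latency(1, latency_us)
        print(f"{name}: about {qd1:,.0f} IOPS at QD1")
```

At a queue depth of 1, latency alone sets the ceiling, so a drive with lower per-request latency pulls far ahead no matter how much interface bandwidth is on offer. Real drives do not scale this linearly at high queue depths, since latency rises as the controller saturates.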
