Samsung to Manufacture 260 TOPS Baidu Kunlun

Baidu enters the AI fray with its own custom silicon

Baidu is entering the crowded AI accelerator market. The company announced that it has completed development of its Kunlun chip, which offers up to 260 TOPS at 150W. The chip will go into production early next year on Samsung's 14nm process and uses 2.5D packaging to integrate HBM memory.

Kunlun is based on Baidu's home-grown XPU architecture for neural network processors. In addition to its 260 TOPS of compute, the chip offers 512GB/s of memory bandwidth. Baidu says Kunlun is meant for both edge and cloud computing, although the company did not provide specifications for an edge variant, which would typically need a much lower TDP than 150W.

Baidu claims Kunlun is 3x faster at inference on a natural language processing model than conventional FPGA/GPU systems, though it did not specify which systems it compared against. The company says the chip also supports a wide variety of other AI workloads, but it did not say whether Kunlun is capable of, or intended for, training.

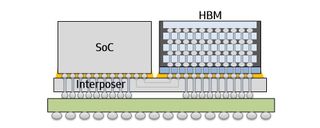

Samsung Foundry will fab the chip for Baidu on its 14nm process, with production slated for early next year. Samsung will use its I-Cube interposer-based 2.5D packaging to integrate 32GB of HBM2 memory.

For Samsung, the chip helps expand its foundry business into data center applications, according to Ryan Lee, vice president of Foundry Marketing at Samsung Electronics:

“Baidu KUNLUN is an important milestone for Samsung Foundry as we’re expanding our business area beyond mobile to datacenter applications by developing and mass-producing AI chips. Samsung will provide comprehensive foundry solutions from design support to cutting-edge manufacturing technologies, such as 5LPE, 4LPE, as well as 2.5D packaging.”

With Kunlun, Baidu joins a growing list of companies with data center AI inference chips, including Google's TPUs, Qualcomm's Cloud AI 100, Nvidia's T4, and Intel's Nervana NNP-I, as well as the Goya chip Intel gained through its recent acquisition of Habana Labs. At the edge, companies such as Huawei, Intel, Nvidia, Apple, Qualcomm, and Samsung already offer integrated or discrete neural network accelerators.
