Nvidia GeForce GTX 1080 Graphics Card Roundup

Gigabyte GTX 1080 Xtreme Gaming

It took a while for us to get our hands on Gigabyte's GeForce GTX 1080 Xtreme Gaming, but without giving too much away, the wait was worthwhile.

Gigabyte advertises this card's extended feature set, so you know we have to pay particular attention to its value-adds. Upon opening the over-sized box, we discover loads of useful extras.

While wrist protection and a new mouse pad are nice to have (even if they're more Gigabyte swag than essentials), the VR bracket does deserve special mention. That's because the GeForce GTX 1080 Xtreme Gaming comes with a front panel bracket that exposes USB 3.0 I/O and HDMI outputs for easy-to-reach connectivity.

Of course we'll cover the stacked fans and heat pipes, but let's start with a word on the 1080 Xtreme Gaming's overall performance and how we tested it.

We try to benchmark every card as it ships, right out of the box. That means we typically avoid installing vendor-specific software and intentionally use default firmware settings. Many utilities are only available for Windows, and direct comparisons are complicated by the sheer number of options available for each card. Nevertheless, in this case we're including measurements for Gigabyte's optional OC mode.

The company's four-year warranty is exemplary, even if it requires registering the 1080 Xtreme Gaming online.

Technical Specifications


Exterior & Interfaces

The cooler cover is made out of a light alloy, with silver highlights on dark anthracite. Diagonal LED-lit struts cross above the center fan. You can control the lighting using Gigabyte's Xtreme Engine software. Because the three fans are stacked above each other in two planes, the 47oz (1330g) 1080 Xtreme Gaming is still significantly shorter than many of its competitors.

Measuring approximately 11 inches (28cm) long, five inches (13cm) tall, and almost two inches (5cm) wide, excluding the backplate, this card is anything but compact. But it's still small enough to squeeze into cases only able to accommodate 12-inch expansion cards.

Gigabyte covers the 1080 Xtreme Gaming's rear with a single-piece backplate that does nothing for cooling performance, but also doesn't hurt thanks to openings for ventilation and sufficient spacing.

Plan to accommodate an additional one-fifth of an inch (5mm) in depth beyond the plate, which may become relevant in multi-GPU configurations, particularly if your motherboard's PCIe slots are two spaces apart. Of course, it's possible to use the card without its backplate, though removing it also requires disassembling the cooler. That'll likely void Gigabyte's warranty.

The top of the card is dominated by a centered Gigabyte logo and an LED indicator for the card's silent mode. Again, the color and lighting effects are controlled via software. Two eight-pin power connectors are turned 180° and positioned at the end of the card. As with so many board partner designs, Gigabyte's style is a matter of taste.

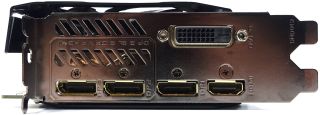

At its end the card is completely closed off, which makes sense, since the fins are positioned vertically and don't allow any airflow through the front or back anyway. The output bracket sports the same five display connectors as Nvidia's reference design, and four of them can be used simultaneously in a multi-monitor configuration.

In addition to one dual-link DVI-D connector (be aware that there is no analog signal), the bracket also exposes one HDMI 2.0b and three DisplayPort 1.4-ready outputs. The rest of the plate is mostly solid, with several openings cut into it that look like they're supposed to improve airflow, but don't actually do anything.

There's a real surprise on the other end, though. Gigabyte installs two additional HDMI outputs back there, which it means for you to connect to the front-panel accessory mentioned earlier. The company even gives you cables to make the hook-up. Alternatively, you can unscrew the front-panel jacks and install them into an included expansion bracket for extra HDMI connectivity on the back of your PC.

This type of connection sacrifices two of the rear DisplayPort outputs to enable what Gigabyte calls its Xtreme VR Link. As an option for owners of VR HMDs, we think the accessory is ingeniously simple. It addresses the hassle of hooking up HDMI and USB ports to the back of your PC with much more accessible I/O.

Board & Components

The card uses GDDR5X memory modules from Micron, which are sold along with Nvidia's GPU to board partners. Eight memory chips (MT58K256M32JA-100) transferring at 10 Gb/s per pin are attached to a 256-bit interface, allowing for a theoretical bandwidth of 320 GB/s.
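The 320 GB/s figure follows directly from the bus width and the per-pin data rate. The short Python sketch below reproduces that arithmetic; the function name is purely illustrative, and the 32-bit width per chip is implied by eight chips sharing a 256-bit interface.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, rate_gbit_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gb/s) / 8."""
    return bus_width_bits * rate_gbit_per_pin / 8


chips = 8            # memory packages on the board
bits_per_chip = 32   # 256-bit interface divided across eight chips
bus_width = chips * bits_per_chip

print(bus_width, "bit bus ->", peak_bandwidth_gb_per_s(bus_width, 10.0), "GB/s")
```

This is a theoretical ceiling only; real-world throughput depends on access patterns and memory controller efficiency.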

Although Gigabyte advertises 12+2 power phases, the uP9511P PWM controller, manufactured by uPI Semiconductor, is strictly a 6+2-phase model. Each of the six GPU phases is doubled by splitting it across two separate converter circuits. First, this spreads the hot-spots farther apart, and second, it halves the current each converter has to carry.
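To put the effect of doubling into numbers, here is a minimal Python sketch. It assumes the load is shared evenly across all converters, and the 180 A total is a hypothetical round figure chosen for illustration, not a measurement from this card.

```python
def current_per_converter(total_current_a: float, converters: int) -> float:
    """Current carried by each converter, assuming perfectly even sharing."""
    if converters <= 0:
        raise ValueError("converters must be positive")
    return total_current_a / converters


# Hypothetical GPU rail current in amperes (illustrative, not measured).
total_current_a = 180.0

six_phase = current_per_converter(total_current_a, 6)   # controller phases only
doubled = current_per_converter(total_current_a, 12)    # each phase split in two

print(f"6 converters:  {six_phase:.1f} A each")
print(f"12 converters: {doubled:.1f} A each")
```

Because resistive losses scale with the square of the current, halving the current per converter reduces the heat generated in each one by more than half, which is part of why the spread-out layout helps with hot-spots.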

Gigabyte uses a total of 12 Fairchild FDMF6823Cs, which are highly integrated DrMOS modules that combine a driver IC, two power MOSFETs, and a bootstrap Schottky diode into a thermally enhanced, 6x6mm package. The memory is controlled separately through a uP1666 and utilizes two separate phases, each of which is connected to a Fairchild FDMD3604AS with PowerTrench technology. The coils are well-known magic chokes produced by Lenovo; they're more or less middle-of-the-road, quality-wise.

Two capacitors are installed right below the GPU to absorb and equalize peaks in voltage. The board layout seems pretty loaded, but it's well-organized and reflects careful component placement.

Power Results

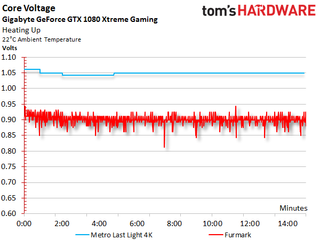

Before we look at power consumption, we should talk about the correlation between GPU Boost frequency and core voltage. We also need to revisit the subject of software configuration since Gigabyte's default settings are quite conservative and really don't do the card justice. With a little bit of manual work in the company's bundled utility, it's possible to keep the 1080 Xtreme Gaming above a 2 GHz GPU Boost frequency (or 2.1 GHz+, in our case).

Full disclosure: to achieve the settings shown in the screenshot below, we enlisted the help of a hose piping cold air from an air conditioning unit. As you might imagine, this card might be a good candidate for liquid cooling, too.

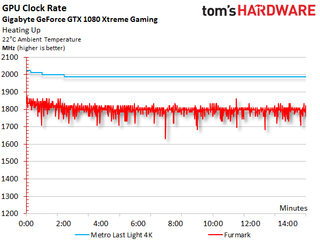

In Gaming mode, the GPU frequency remains relatively stable, even through the challenging Metro Last Light loop at 3840x2160. Setting the software to OC mode should effortlessly push it past 2 GHz; after all, it's already almost there in Gaming mode.

After warm-up, the GPU Boost frequency falls to 1987 MHz at times under load. During our stress test, it drops to 1800 MHz on average. The corresponding voltage readings fall to 1.05V and 0.9V, respectively.

Summing up measured voltages and currents, we then arrive at a total consumption figure we can easily confirm with our test equipment by monitoring the card's power connectors.
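The summation described here is simply voltage times current per supply rail, added up. The Python sketch below illustrates it; the rail names and the sample readings are hypothetical placeholders, not values from our measurements.

```python
from typing import Iterable, NamedTuple


class RailSample(NamedTuple):
    name: str
    volts: float
    amps: float


def total_power_w(samples: Iterable[RailSample]) -> float:
    """Sum V x I over all supply rails to get total board power in watts."""
    return sum(s.volts * s.amps for s in samples)


# Hypothetical instantaneous readings (illustrative only).
samples = [
    RailSample("PCIe slot 12V", 12.0, 3.0),
    RailSample("PCIe slot 3.3V", 3.3, 1.0),
    RailSample("8-pin connector 1", 12.0, 7.0),
    RailSample("8-pin connector 2", 12.0, 7.0),
]

for s in samples:
    print(f"{s.name}: {s.volts * s.amps:.1f} W")
print(f"Total: {total_power_w(samples):.1f} W")
```

In practice the readings fluctuate quickly, so a figure like this would be averaged over many samples rather than taken from a single snapshot.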

As a result of Nvidia's restrictions, manufacturers sacrifice the lowest possible frequency bin in order to gain an extra GPU Boost step. So, Gigabyte's power consumption is disproportionately high when idle. In all fairness, the company manages this behavior relatively well compared to some of its competition. Its lowest clock rate hovers at 291 MHz.

During our manual overclock, we increased the voltage by a maximum of 100mV and set the power target to 150 percent. We hit the ceiling at exactly 230W, and the card couldn't be provoked into going any higher.

Our complete power measurements are as follows:

| Workload | Power Consumption |
|---|---|
| Idle | 11W |
| Idle Multi-Monitor | 12W |
| Blu-ray | 12W |
| Browser Games | 117-138W |
| Gaming (Metro Last Light at 4K) | 213W |
| Torture (FurMark) | 217W |
| Gaming (Metro Last Light at 4K) @ 2114 MHz | 230W |
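To put the overclocked result in perspective, the following Python sketch computes the relative increase over the stock gaming figure, using only the two gaming values from the table above. The helper name is illustrative.

```python
def percent_increase(baseline: float, value: float) -> float:
    """Relative increase of value over baseline, in percent."""
    return (value - baseline) / baseline * 100.0


stock_gaming_w = 213.0        # Metro Last Light at 4K, default settings
overclocked_gaming_w = 230.0  # same loop at 2114 MHz

delta = percent_increase(stock_gaming_w, overclocked_gaming_w)
print(f"Overclock raises gaming power by {delta:.1f} percent")
```

That works out to roughly an eight percent increase in power for the manual overclock.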

Now let's take a more detailed look at power consumption when the card is idle, when it's gaming at 4K, and during our stress test. The graphs show the distribution of load between each voltage and supply rail, providing a bird's eye view of variations and peaks:

Temperature Results

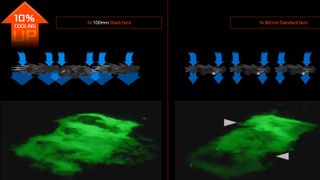

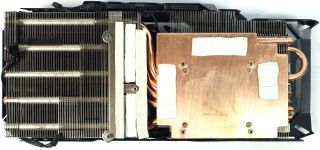

Building a thermal solution with three 10cm fans onto a card that measures less than 30cm long required some clever work on Gigabyte's part. The company calls this a stack setup, and it requires overlapping the fans somewhat; the center fan is actually mounted lower than the other two and given unique blade geometry.

While fans mounted side by side may leave dead zones without ample airflow, a stacked setup purportedly mixes air more thoroughly. We're told this should be quantifiable in our temperature measurements, particularly when it comes time to scan for hot-spots.

We strive to avoid parroting marketing material, but the following diagram from Gigabyte does do a good job explaining what's going on.

The massive copper plate is meant to cool both the GPU and memory. A VRM heat sink is placed a little farther back, integrated directly into the cooler. This makes a lot of sense; the hot MOSFETs don't heat the memory modules through the copper plate thanks to a bit of strategic separation.

The backplate is attached with four screws and it's completely aesthetic. At best, it may help with the card's structural integrity.

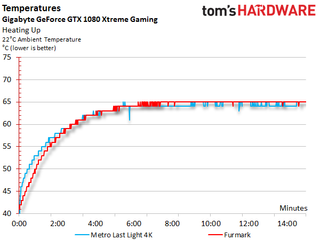

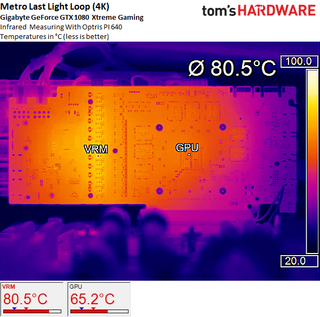

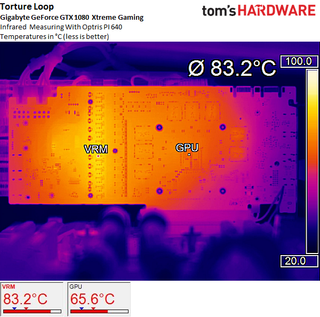

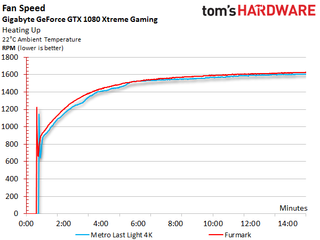

The thermal solution and its fan curve deliver downright chilly temperatures using Gigabyte's default settings. We measured 65°C (67°C inside a chassis) under the influence of fast-spinning fans.

A peak value of 81°C on some spots below the MOSFETs is quite commendable. To be sure, Gigabyte's card stays cooler than much of its competition, even after long periods of heavy use.

Even during our stress test, the results don't change much. If any part of this card's design deserves special recognition, it's the broadly distributed DrMOS and efficient VRM sink, which dissipates waste heat right into the pipes running over it.

Sound Results

Gigabyte knows how to nail exemplary hysteresis. The activation of its fans is short and crisp, and they continue to run at an almost inaudible 670 RPM after that.
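For readers unfamiliar with the term, hysteresis means the fans start at one temperature and stop at a lower one, which prevents rapid on/off cycling near a single threshold. The Python sketch below shows the idea; the 60°C and 50°C thresholds are assumptions picked for illustration, not Gigabyte's actual firmware values.

```python
class SemiPassiveFan:
    """Fan start/stop logic with hysteresis (thresholds are hypothetical)."""

    def __init__(self, start_temp_c: float = 60.0, stop_temp_c: float = 50.0):
        if stop_temp_c >= start_temp_c:
            raise ValueError("stop threshold must be below start threshold")
        self.start_temp_c = start_temp_c
        self.stop_temp_c = stop_temp_c
        self.spinning = False

    def update(self, temp_c: float) -> bool:
        """Feed in a temperature reading; returns True if the fans should spin."""
        if not self.spinning and temp_c >= self.start_temp_c:
            self.spinning = True
        elif self.spinning and temp_c <= self.stop_temp_c:
            self.spinning = False
        return self.spinning


fan = SemiPassiveFan()
readings = [45, 59, 61, 58, 52, 49, 55]
states = [fan.update(t) for t in readings]
print(list(zip(readings, states)))
```

Note how the fans stay on at 58°C and 52°C after starting at 61°C, and stay off at 55°C after stopping at 49°C. The gap between the two thresholds is what keeps the transitions clean.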

In that light, the high default maximum fan speed is difficult to understand. While it does ensure relatively low temperatures, it also has a negative (though not necessarily dramatic) impact on the card's noise levels.

When the card is idle, its semi-passive mode ensures silence. Naturally, there's no reason to take measurements in that state.

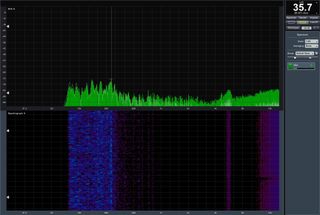

Running under full load raises the noise level to almost 36 dB(A), which is an acceptable value. It just doesn't exploit the card's full potential. Install Gigabyte's software, though, and you can complement the various overclocking modes with predefined fan profiles.

Setting the card to "silent mode" drops its noise output to just over 34 dB(A), which you might call excellent given the sound's unobtrusive character, even at higher speeds.
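Because decibels are logarithmic, a difference of two dB is larger than it looks. The Python sketch below converts the gap between the two readings into a sound power ratio using the standard relation of 10 dB per factor of ten; the 36 and 34 dB(A) inputs are rounded from the "almost 36" and "just over 34" figures above, so the result is approximate.

```python
def sound_power_ratio(level_a_db: float, level_b_db: float) -> float:
    """Ratio of sound power between two levels given in decibels."""
    return 10 ** ((level_a_db - level_b_db) / 10)


default_profile_db = 36.0  # rounded full-load reading, default fan profile
silent_mode_db = 34.0      # rounded reading in silent mode

ratio = sound_power_ratio(default_profile_db, silent_mode_db)
print(f"Default profile emits about {ratio:.2f}x the sound power of silent mode")
```

Perceived loudness does not scale linearly with sound power, so this ratio is best read as a physical comparison rather than a statement about how much louder the card seems.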

Unlike the fans used by Galax, Zotac, and Palit, Gigabyte's show no narrowband emissions in the low-frequency range that might be transferred to the housing and cause resonance.

It'll probably remain Gigabyte's secret why the 1080 Xtreme Gaming employs such an aggressive profile by default, only to hit record-low temperatures. We can say that this configuration isn't ideal, except for those who want to take full advantage of the company's maximum power target and higher voltage. Otherwise, you almost need to use the Xtreme Engine software to get this card's behavior tuned optimally.


ledhead11 Love the article!

I'm really happy with my 2 xtreme's. Last month I cranked our A/C to 64f, closed all vents in the house except the one over my case and set the fans to 100%. I was able to game with the 2-2.1ghz speed all day at 4k. It was interesting to see the GPU usage drop a couple % while fps gained a few @ 4k and able to keep the temps below 60c.

After it was all said and done though, the noise wasn't really worth it. Stock settings are just barely louder than my case fans and I only lose 1-3fps @ 4k over that experience. Temps almost never go above 60c in a room around 70-74f. My mobo has the 3 spacing setup which I believe gives the cards a little more breathing room.

The zotac's were actually my first choice but gigabyte made it so easy on amazon and all the extra stuff was pretty cool.

I ended up recycling one of the sli bridges for my old 970's since my board needed the longer one from nvida. All in all a great value in my opinion.

One bad thing I forgot to mention and its in many customer reviews and videos and a fair amount of images-bent fins on a corner of the card. The foam packaging slightly bends one of the corners on the cards. You see it right when you open the box. Very easily fixed and happened on both of mine. To me, not a big deal, but again worth mentioning.

redgarl The EVGA FTW is a piece of garbage! The video signal is dropping randomly and make my PC crash on Windows 10. Not only that, but my first card blow up after 40 days. I am on my second one and I am getting rid of it as soon as Vega is released. EVGA drop the ball hard time on this card. Their engineering design and quality assurance is as worst as Gigabyte. This card VRAM literally burn overtime. My only hope is waiting a year and RMA the damn thing so I can get another model. The only good thing is the customer support... they take care of you.

Nuckles_56 What I would have liked to have seen was a list of the maximum overclocks each card got for core and memory and the temperatures achieved by each cooler

Hupiscratch It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway.

Nuckles_56 Quoting Hupiscratch: "It would be good if they get rid of the DVI connector. It blocks a lot of airflow on a card that's already critical on cooling. Almost nobody that's buying this card will use the DVI anyway."

Two things here, most of the cards don't vent air out through the rear bracket anyway due to the direction of the cooling fins on the cards. Plus, there are going to be plenty of people out there who bought the cheap Korean 1440p monitors which only have DVI inputs on them who'll be using these cards

ern88 I have the Gigabyte GTX 1080 G1 and I think it's a really good card. Can't go wrong with buying it.

The best card out of box is eVGA FTW. I am running two of them in SLI under Windows 7, and they run freaking cool. No heat issue whatsoever.

Mike_297 I agree with 'THESILVERSKY'; Why no Asus cards? According to various reviews their Strixx line are some of the quietest cards going!

trinori LOL you didnt include the ASUS STRIX OC ?!?

well you just voided the legitimacy of your own comparison/breakdown post didnt you...

"hey guys, here's a cool comparison of all the best 1080's by price and performance so that you can see which is the best card, except for some reason we didnt include arguably the best performing card available, have fun!"

lol please..