EVGA GeForce GTX 1080 Ti FTW3 Gaming Review


Board & Power Supply

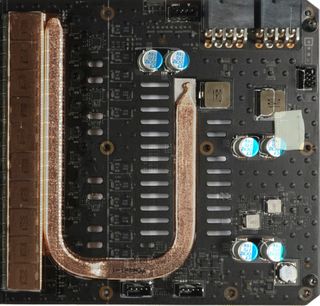

In our opinion, the most interesting part of EVGA's board is the GPU's power supply and its five doubled phases.

But before we go into more detail on that, check out the PCA's sparsely populated right side. Aside from six half-height polymer solid capacitors, two chokes for smoothing currents on the input side, and a handful of connectors, there aren't any other large components over there. That might explain cut-outs in the cooling plate between the heat sink and board.

Indeed, overlaying the heat pipe and copper sink over our bare board shot shows how these pieces fit together. Clearly, EVGA's thermal solution helps cool the chokes as well, just as we proposed during our review of the company's GeForce GTX 1080 FTW2.

The surface area available for cooling is much greater now. That's appreciated, since the coils do dissipate lots of waste heat.

GPU Power Supply

A quick glance at the components EVGA picked for its five (doubled) phases might suggest a power supply that's wildly overkill. But this configuration isn't built for extreme overclocking. Rather, there's real math behind EVGA's design.

The ON Semiconductor NCP81274 that EVGA uses is a multi-phase synchronous buck controller able to drive up to eight phases. Thanks to a power-saving interface, it can operate in one of three modes: all phases on, dynamic phase shedding under average loads, or a lower (fixed) phase count for situations when the entire power supply isn't needed. This matters for distributing loads and hot-spots intelligently across the card.

Since EVGA deemed eight real phases insufficient, the company struck a compromise with five phases plus doubling, yielding 10 control circuits. This is achieved using ON Semiconductor NCP81162 current-balancing phase doublers, which monitor two phases and determine which one should receive the next PWM pulse output sent by the controller. So, when a phase receives a 40A load, the doubler splits it into two 20A loads.
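To make the doubling idea concrete, here is a minimal Python sketch of current-balanced pulse routing. It is a simplified illustration, not the NCP81162's actual logic: the function name `route_pulses` and the idea of tracking delivered charge as a running sum are assumptions made for this example.

```python
def route_pulses(pulse_widths):
    """Route each incoming PWM pulse to one of two output phases.

    Hypothetical model: the doubler sends the next pulse to whichever
    output has delivered less so far, which keeps the two balanced.
    Returns the routing decisions and the totals per output.
    """
    delivered = [0.0, 0.0]  # running total per output phase (arbitrary units)
    routing = []
    for width in pulse_widths:
        # Pick the output that has carried less; ties go to output 0.
        target = 0 if delivered[0] <= delivered[1] else 1
        delivered[target] += width
        routing.append(target)
    return routing, delivered


if __name__ == "__main__":
    routing, delivered = route_pulses([1.0, 1.0, 1.0, 1.0])
    print(routing, delivered)
```

With identical pulses, the outputs simply alternate, so each one ends up carrying half of the work. That is the sense in which a 40A load becomes two 20A loads.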

One ON Semiconductor NCP81158D dual MOSFET gate driver per phase drives a pair of Alpha & Omega Semiconductor AOE6930 dual N-channel MOSFETs, which combine the high- and low-side FETs in one convenient package. The decision to employ two of those AlphaMOS chips per circuit (totaling 20 of them) isn't necessarily related to EVGA's pursuit of overclocking; there are other technical reasons to go this route...

Let's get back to our 40A load example, split into two 20A loads via phase doubling. Through the use of two dual MOSFETs in parallel, this number then drops to just 10A per package, facilitating a much more even distribution of hot-spots across the board, reducing the parallel circuits' internal resistance, and cutting down on power loss converted to waste heat. The high number of control circuits also cuts the switching frequency, further alleviating thermal load.
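A simplified way to see why paralleling cuts losses, considering conduction losses only and assuming two identical packages that share current evenly (both are assumptions for illustration, not measurements). Here $R$ is a hypothetical on-resistance of one package and $I$ is the current through one control circuit:

$$
P_{\text{single}} = I^2 R, \qquad
P_{\text{parallel}} = 2\left(\frac{I}{2}\right)^2 R = \frac{I^2 R}{2}
$$

For $I = 20\,\text{A}$, each package carries 10A and dissipates a quarter of what a lone package would, so the pair together produces half the conduction loss, spread over twice the area.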

The AOE6930s operate efficiently at temperatures up to 75 to 80°C and at currents up to about 20A. Beyond that, you'd be looking at worrying thermal loads. But even within those limits, the 20 packages can supply up to 400A in the NCP81274's all-on mode, which should be sufficient for any imaginable operating condition. EVGA's real motivation here was clearly to make those control circuits work as effectively as possible. Without spoiling our environmental measurements, the concept turns out well.
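The arithmetic behind these figures can be sketched in a few lines of Python. The constants come from the text above; the function and constant names are made up for this example, and the model assumes perfectly even current sharing.

```python
CONTROLLER_PHASES = 5        # phases driven by the PWM controller
DOUBLING_FACTOR = 2          # each phase is split in two by a doubler
PACKAGES_PER_CIRCUIT = 2     # dual-MOSFET packages in parallel per circuit
EFFICIENT_LIMIT_A = 20.0     # approximate efficient current per package


def per_package_current(phase_current_a):
    """Current per MOSFET package for a given per-phase load (even sharing assumed)."""
    return phase_current_a / DOUBLING_FACTOR / PACKAGES_PER_CIRCUIT


def total_packages():
    """Number of dual-MOSFET packages in the GPU power supply."""
    return CONTROLLER_PHASES * DOUBLING_FACTOR * PACKAGES_PER_CIRCUIT


def efficient_capacity_a():
    """Total current if every package runs at its efficient limit."""
    return total_packages() * EFFICIENT_LIMIT_A


if __name__ == "__main__":
    print(per_package_current(40.0))  # 40A phase load -> 10.0 A per package
    print(total_packages())           # 20 packages
    print(efficient_capacity_a())     # 400.0 A
```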

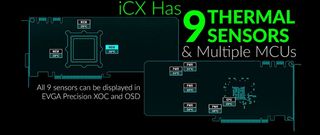

Thermal Sensors

EVGA uses an 8-bit flash-type controller made by Sonix that does a good job of capturing data almost in real-time. A total of nine small thermal sensors are positioned above and below possible hot-spots on the board. These feed information to the Sonix chip, which controls the iCX cooler's three fans in an asynchronous manner.

Due to the way those AOE6930 MOSFETs change the control circuits, however, EVGA should have moved the VRM's thermal sensors accordingly. Because the company didn't, you end up with temperature readings that sometimes don't match the measurements taken directly below the components. Still, the differences we saw are quite acceptable. The sensor readings do approximately resemble the true values.

EVGA's Precision XOC software tool displays the output of all nine sensors, going so far as to enable logging. The software also lets you fine-tune the fan controls to help reduce specific hot-spots.

If you'd like to know more about iCX, check out Testing EVGA's GeForce GTX 1080 FTW2 With New iCX Cooler.

Memory Power Supply

The GeForce GTX 1080 Ti FTW3 Gaming employs half-height ferrite core chokes that may be of average quality, but are sufficient for this application. Nvidia even uses them. You'll find these coils in the GPU and memory power supplies.

A total of 11 Micron MT58K256M321JA-110 GDDR5X ICs are organized around the GP102 processor. They operate at 11 Gb/s data rates, which helps compensate for the missing 32-bit memory controller compared to Titan Xp. We asked Micron to speculate why Nvidia didn't use the 12 Gb/s MT58K256M321JA-120 modules advertised in its datasheet, and the company mentioned they aren't widely available yet, despite appearing in its catalog. Because Nvidia sells its GPU and the memory in a bundle, EVGA has very little room to innovate in this regard.
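As a rough check on what the memory configuration delivers, the peak bandwidth can be estimated from the IC count and data rate. This sketch assumes each GDDR5X IC sits on its own 32-bit channel (consistent with the one missing 32-bit controller mentioned above); the function name is hypothetical.

```python
def memory_bandwidth_gbs(num_ics, bits_per_ic, data_rate_gbps):
    """Theoretical peak bandwidth in GB/s.

    num_ics:        number of memory ICs
    bits_per_ic:    interface width per IC in bits (assumed 32 here)
    data_rate_gbps: per-pin data rate in Gb/s
    """
    bus_width_bits = num_ics * bits_per_ic
    return bus_width_bits * data_rate_gbps / 8  # bits -> bytes


if __name__ == "__main__":
    # 11 ICs x 32 bits = 352-bit bus at 11 Gb/s
    print(memory_bandwidth_gbs(11, 32, 11.0))  # 484.0
```

The result is a theoretical peak, so real-world throughput will be lower.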

The memory's power supply is located to the left of the GPU's voltage regulation circuitry. It consists of an ON Semiconductor NCP81278 two-phase synchronous buck converter with integrated gate drivers and the same AOE6930 dual N-channel MOSFETs.

Miscellaneous Components

Current monitoring is handled by a triple-channel Texas Instruments INA3221, which senses the current flowing through the two shunts in the input area.

With two coils behind the eight-pin power connectors, EVGA also adds some filtering against spikes.

The card has a dual BIOS that comes with a small sliding selector switch (master/slave). We advise against force-flashing the master BIOS to a different version, though.



AgentLozen I'm glad that there's an option for an effective two-slot version of the 1080Ti on the market. I'm indifferent toward the design but I'm sure people who are looking for it will appreciate it just like the article says.

gio2vanni86 I have two of these, i'm still disappointed in the sli performance compared to my 980's. What i can do but complain. Nvidia needs to do a driver game overhaul these puppies should scream together. They do the opposite which makes me turn sli off and boom i get better performance from 1. Its pathetic. Nvidia should just kill Sli all together since they got rid of triple sli they mind as well get rid of sli as well.

ahnilated I have one of these and the noise at full load on these is very annoying. I am going to install one of Arctic Cooling's heatsinks. I would think with a 3 fan setup this system would cool better and not have a noise issue like this. I was quite disappointed with the noise levels on this card.

Jeff Fx 19811038 said: I have two of these, i'm still disappointed in the sli performance compared to my 980's. What i can do but complain. Nvidia needs to do a driver game overhaul these puppies should scream together. They do the opposite which makes me turn sli off and boom i get better performance from 1. Its pathetic. Nvidia should just kill Sli all together since they got rid of triple sli they mind as well get rid of sli as well.

SLI has always had issues. Fortunately, one of these cards will run games very well, even in VR, so there's no need for SLI.

dstarr3 19811038 said: I have two of these, i'm still disappointed in the sli performance compared to my 980's. What i can do but complain. Nvidia needs to do a driver game overhaul these puppies should scream together. They do the opposite which makes me turn sli off and boom i get better performance from 1. Its pathetic. Nvidia should just kill Sli all together since they got rid of triple sli they mind as well get rid of sli as well.

It needs support from nVidia, but it also needs support from every developer making games. And unfortunately, the number of users sporting dual GPUs is a pretty tiny sliver of the total PC user base. So devs aren't too eager to pour that much support into it if it doesn't work out of the box.

FormatC Dual-GPU is always a problem and not so easy to realize for programmers and driver developers (profiles). AFR is totally limited and I hope that we will see in the future more Windows/DirectX-based solutions. If....

Sam Hain For those praising the 2-slot design for it's "better-than" for SLI... True, it does make for a better fit, physically.

However, SLI is and has been fading for both NV and DV's. Two, that heat-sig and fan profile requirements in a closed case for just one of these cards should be warning enough to veer away from running in a 2-way SLI using stock and sometimes 3rd party air cooling solutions.

SBMfromLA I recall reading an article somewhere that said NVidia is trying to discourage SLi and purposely makes them underperform in SLi mode.

Sam Hain 19810871 said: Unlike Asus & Gigabyte, which slap 2.5-slot coolers on their GTX 1080 Tis, EVGA remains faithful to a smaller form factor with its GTX 1080 Ti FTW3 Gaming.

Great article!

photonboy NVidia does not "purposely make them underperform in SLI mode". And to be clear, SLI has different versions. It's AFR that is disappearing. In the short term I wouldn't use multi-GPU at all. In the LONG term we'll be switching to Split Frame Rendering.

http://hexus.net/tech/reviews/graphics/916-nvidias-sli-an-introduction/?page=2

SFR really needs native support at the GAME ENGINE level to minimize the work required to support multi-GPU. That can and will happen, but I wouldn't expect to see it have much support for about TWO YEARS or more. Remember, games usually have 3+ years of building so anything complex needs to usually be part of the game engine when you START making the game.