16GB RTX 3070 Mod Shows Impressive Performance Gains

This GPU really is handicapped with its default 8GB configuration.

YouTuber Paulo Gomes recently published a video showing how he upgraded a customer's RTX 3070, once one of Nvidia's best graphics cards, to 16GB of GDDR6 memory. The modification delivered serious performance improvements in the highly memory-intensive Resident Evil 4, where the 16GB card performed 9x better than the 8GB version in the 1% lows, resulting in significantly smoother gameplay.

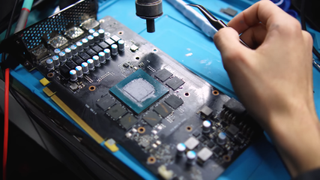

Unlike previous memory mods we've seen on cards like the RTX 2070, Gomes' RTX 3070 mod required some additional PCB work to get the 16GB memory configuration working properly. The modder had to ground some of the resistors on the PCB to trick the graphics card into supporting the higher-capacity memory ICs that are required to double the VRAM capacity on the RTX 3070.

Apart from this modification, the memory swap proceeded as usual. The modder removed the original memory chips, cleaned the PCB, and installed new 2GB Samsung memory ICs to make up the 16GB configuration. Aside from some initial flickering, which was fixed by running the GPU in high-performance mode from the Nvidia Control Panel, the card performed perfectly after the modification.
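Why doubling the chip density doubles the total comes down to simple bus arithmetic. The RTX 3070 uses a 256-bit memory bus, and each GDDR6 package has a 32-bit interface, so the card carries eight memory ICs; swapping 1GB packages for 2GB ones yields 16GB without changing the bus width. The sketch below is illustrative only: the function name is hypothetical, and it assumes one package per 32-bit channel (it ignores clamshell layouts, where two packages share a channel).

```python
# Illustrative sketch: total VRAM of a GDDR6 card from bus width and per-chip capacity.
# Assumption: one 32-bit GDDR6 package per 32-bit channel (no clamshell mode).

GDDR6_PACKAGE_BUS_BITS = 32  # each GDDR6 package exposes a 32-bit interface


def vram_capacity_gb(bus_width_bits: int, chip_capacity_gb: int) -> int:
    """Return total VRAM in GB for a given bus width and per-package capacity."""
    if bus_width_bits <= 0 or bus_width_bits % GDDR6_PACKAGE_BUS_BITS != 0:
        raise ValueError("bus width must be a positive multiple of 32 bits")
    packages = bus_width_bits // GDDR6_PACKAGE_BUS_BITS
    return packages * chip_capacity_gb


# RTX 3070: 256-bit bus, so eight packages.
print(f"stock:  {vram_capacity_gb(256, 1)}GB")  # eight 1GB packages
print(f"modded: {vram_capacity_gb(256, 2)}GB")  # eight 2GB packages
```

The same arithmetic shows why capacity is tied to bus width on these cards: a 160-bit bus with 2GB packages, for example, gives 10GB.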

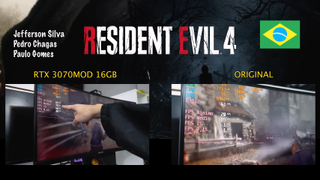

The additional 8GB of VRAM proved extremely useful in boosting the RTX 3070's performance in Resident Evil 4. The modder tested the game with both the original 8GB configuration and the modified 16GB configuration and found massive improvements in the card's 1% and 0.1% lows. The original card dropped to just one (yes, one) FPS in both the 1% and 0.1% lows, while the 16GB card held 60 and 40 FPS, respectively. Average frame rates also rose, from 54 to 71 FPS.

This resulted in a massively better overall gaming experience on the 16GB RTX 3070. The substantially higher 0.1% and 1% lows meant the game barely hitched at all, and performance was buttery smooth. The 8GB card, conversely, suffered from massive micro-stuttering that lasted for significant stretches in several areas of the game.
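Frame-time "lows" are computed from a capture of individual frame times, and a handful of long stalls can drag them down while the average still looks playable. The sketch below uses one common definition (the FPS equivalent of the average frame time across the slowest 1% or 0.1% of frames); capture tools differ, and some report a percentile frame time instead, so treat it as an illustration rather than the method used in Gomes' video. The function names and the frame-time data are hypothetical.

```python
# Illustrative sketch of average FPS and 1% / 0.1% lows from a frame-time capture.
# Assumption: "low" = FPS equivalent of the mean frame time of the slowest slice of frames.


def low_fps(frame_times_ms: list[float], fraction: float) -> float:
    """FPS equivalent of the average frame time over the slowest `fraction` of frames.

    fraction=0.01 gives the "1% low"; fraction=0.001 gives the "0.1% low".
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    # Size of the slowest slice: rounded down, but always at least one frame.
    n = max(1, int(len(slowest_first) * fraction))
    avg_slowest_ms = sum(slowest_first[:n]) / n
    return 1000.0 / avg_slowest_ms


def average_fps(frame_times_ms: list[float]) -> float:
    """Frames rendered divided by total elapsed seconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


if __name__ == "__main__":
    # Synthetic capture: 990 frames at 10 ms plus ten 1-second hitches.
    frames = [10.0] * 990 + [1000.0] * 10
    print(f"average:  {average_fps(frames):.1f} FPS")
    print(f"1% low:   {low_fps(frames, 0.01):.1f} FPS")
    print(f"0.1% low: {low_fps(frames, 0.001):.1f} FPS")
```

In this synthetic run, ten one-second hitches among 1,000 frames pull both lows down to 1 FPS while the average still reads about 50 FPS, a pattern similar in shape to the 8GB card's results.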

It's interesting to see what this mod has done for the RTX 3070, and it shows what such a GPU could achieve in 2023's latest AAA titles when it isn't bottlenecked by video memory. This issue has plagued Nvidia's 8GB RTX 30-series GPUs for some time now, especially the more powerful RTX 3070 Ti, whose 8GB frame buffer is not big enough to run the latest AAA titles smoothly at high or ultra quality settings.


Aaron Klotz is a freelance writer for Tom’s Hardware US, covering news topics related to computer hardware such as CPUs and graphics cards.

-

hotaru251: AMD was right to mock NVIDIA for only giving 8GB to its GPUs.

No modern GPU past the x060 tier should have 8GB or less.

16GB should be the minimum (especially given what these GPUs cost).

Colif: They shot themselves in the foot, but it was a slow bleed; it lasted long enough that people bought the cards long before the wound started to show. The cards would be fine with more VRAM. The belief in Nvidia overshadowed any doubt.

It's no better for the 3070 Ti.

Giroro: If you're not on an 8K monitor, then your system is technically incapable of benefiting from what we currently call "Ultra" (4096x4096) textures. I'm not just saying that the difference isn't a big deal; I'm saying your typical gaming setup is not capable of displaying better visuals when you switch from "High" (2048x2048) to "Ultra".

You simply don't have enough pixels to see the full resolution of a single texture, let alone the dozens or hundreds of textures on screen at any given time. A 3840x2160 monitor has less than half the pixels of a single Ultra texture. And at 1440p? Forget about it.

These unoptimized textures are also why games are wasting so much drive space. It's really easy for a game developer to just throw in ultra-high-res assets, but it's a waste of resources. Even with Medium (1024x1024) textures at 4K, it's rare in most games for a single texture to be so large on screen that your system has a chance of benefiting from the extra pixels. Even then, the difference usually isn't that noticeable.

It used to be that Medium textures meant 512x512 or lower, which could be a very noticeable step down at 4K... but that's not how most game developers are labeling their settings right now.

Jumping from High (2048x2048) to Ultra textures is a 4x hit to memory resources, but there's usually no visual benefit whatsoever in any reasonably described situation.

Lately, I hear a lot of people complaining that a given card can't even play "1080p max settings" with 8GB of memory, but maybe they should rethink their goal of using textures that are 8x larger than their monitor.
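The commenter's ratios can be checked with a few lines of arithmetic. The sketch below takes his texture sizes at face value ("High" = 2048x2048, "Ultra" = 4096x4096; real games label tiers differently) and assumes, purely for illustration, BC7 block compression (16 bytes per 4x4 block, so 1 byte per texel) with a full mip chain. It ignores how many textures are resident at once, so it says nothing about a game's total VRAM use; the helper names are hypothetical.

```python
# Illustrative check of the texture arithmetic in the comment above.
# Assumptions: High = 2048x2048, Ultra = 4096x4096 (the commenter's figures),
# BC7 compression at 1 byte per texel, and a full mip chain down to 1x1.

BC7_BYTES_PER_TEXEL = 1.0  # BC7 stores each 4x4 texel block in 16 bytes


def texels(side: int) -> int:
    """Texel count of a square texture."""
    return side * side


def texture_mib(side: int, bytes_per_texel: float = BC7_BYTES_PER_TEXEL) -> float:
    """Approximate size of a square texture plus its full mip chain, in MiB."""
    total = 0.0
    while side >= 1:
        total += texels(side) * bytes_per_texel
        side //= 2  # each mip level halves both dimensions
    return total / (1024 * 1024)


HIGH, ULTRA = 2048, 4096
SCREENS = {"1080p": 1920 * 1080, "1440p": 2560 * 1440, "4K": 3840 * 2160}

print(f"Ultra/High texel ratio: {texels(ULTRA) / texels(HIGH):.0f}x")
for name, pixels in SCREENS.items():
    print(f"Ultra texture vs {name} screen: {texels(ULTRA) / pixels:.2f}x the pixels")
print(f"High: {texture_mib(HIGH):.1f} MiB, Ultra: {texture_mib(ULTRA):.1f} MiB")
```

Run as-is, it reports a 4x texel ratio between the two tiers, an Ultra texture carrying about 8.09x the pixels of a 1080p screen and about 2.02x those of a 4K screen, and roughly 5.3 MiB versus 21.3 MiB per compressed texture.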

Alvar "Miles" Udell: To borrow a chart from Overclock3D, RE4 consumes more than 12GB of VRAM even at 1920x1080 without RT, and it crashes if you try to max out the details on an 8GB card, so in this case any card without 16GB of VRAM is insufficient.

It's easy to blame Nvidia for sticking with 8GB of VRAM even on midrange cards, but if you take this one case, which will potentially encompass more cases in the next few years, any GPU advertised as able to game fluidly at 1920x1080 will need 16GB, and I bet AMD will push "1080P KING!"-type verbiage onto cards with less than 16GB of VRAM.

This means the RX 5700 XT 8GB, launched less than three years ago for $400 and targeted as an upper-midrange video card, would be incapable of 1920x1080 gaming without reducing detail, the same as my $550 2070 Super.

If you wanted to go down the slippery slope, you could also say that Tom's Hardware, and all other reputable sites, should refuse to recommend any gaming GPU at any price point with less than 16GB of VRAM, since even entry-level GPUs are targeted at 1920x1080 60fps.

Metal Messiah: Actually, NVIDIA did plan a 16GB RTX 3070 Ti SKU at some point, about two years ago, but the card was eventually cancelled.

A prototype engineering sample also leaked. No reason was given for why production was halted.

View: https://twitter.com/Zed__Wang/status/1608860721248612352

https://pbs.twimg.com/media/FlPSSxFaMAAWfOv?format=jpg&name=large

FunSurfer: This modded RTX 3070 uses 12.43GB of VRAM at 2560x1080, which is not good for the new 12GB RTX 4070 Ti. Wake up, Nvidia. By the way, where are the double-VRAM cards we used to have, like the GTX 770 4GB?

atomicWAR (replying to Alvar "Miles" Udell): You're talking about max settings without RT, not low or medium. The simple fact is that when you buy a SKU at any given price point, there will always be sacrifices you have to make to get games to run at a given frame rate. If you couldn't play RE4 or other games at low or medium settings, then I might agree with your stance, as that is entry-level gaming. THAT said, I think 12GB would be a better entry-level point for VRAM. 8GB is clearly not enough for new cards coming out in newer games.

InvalidError (replying to atomicWAR): For actual "entry-level" gaming to continue to exist, the price point needs to remain economically viable, and I doubt you can slap more than 8GB on a cheap enough entry-level (50-tier) GPU to draw new PC-curious customers in.

If PC gaming becomes exclusive to people willing to spend $400+ on a GPU just to try it out, the high entry cost will drive most potential new customers away, and game developers will abandon PC development for anything that requires more than an IGP, because the install base of GPUs powerful enough to run their games as intended will be too small to bother with.

AMD and Nvidia will be signing their own death certificates (at least gaming-wise) if they insist on pushing 8GB card prices into the stratosphere or effectively abolishing sensible sub-$300 SKUs.

Order 66: It would be great if AMD released an RX 7500 XT with 4096 shaders and 10+GB of GDDR6 to rub it in Nvidia's face. If AMD is going to mock Nvidia, it had better not stoop to the same level. It would also be a welcome improvement over the RX 6500 XT.

atomicWAR (replying to InvalidError): Maybe 8GB for 50-class cards, but the 60 class? Mmmmm, that's a step too far IMHO. But you're not wrong about Nvidia/AMD pricing right now; I don't think anybody disagrees with that. I honestly still think Nvidia could likely have made 12GB affordable even on 50-class cards, but I don't know for certain without access to their BOM (I have heard BOM estimates for a 4090 anywhere from $300 to $650). One would think 10GB would at least have been doable (with buses changed accordingly). It just seems like, judging by historical trends in performance-per-dollar gains, many consumers are getting the short end of the stick, and someone in the supply chain (Nvidia or otherwise) is absorbing those dollars for themselves.
