Google's Machine Learning Chip Is Up To 30x Faster, 80x More Efficient Than CPUs And GPUs

Google revealed more details about the performance of its Tensor Processing Unit (TPU), the company’s first machine learning chip. According to benchmarks Google ran on its TPU, Haswell server CPUs, and the Nvidia Tesla K80, the TPU came out 15-30x faster and up to 80x more power-efficient than those other chips.

How The TPU Was Born

Back in 2006, Google’s engineers discussed deploying GPUs, field-programmable gate arrays (FPGAs), and custom application specific integrated circuits (ASICs) in their data centers for machine learning applications. However, at the time, they concluded that their machine learning applications didn’t require enough computation to warrant developing ASICs.

This changed in 2013, when the engineers realized that the company’s use of deep neural networks (DNNs) was exploding, and that it would soon need to double its data centers if the growth in usage of DNNs continued.

Google’s engineers then decided to prioritize building a custom ASIC for inference, meaning the running of neural networks that have already been trained, with the training itself done on off-the-shelf GPUs. They called this ASIC a “Tensor Processing Unit” (TPU) because it’s tailored for Google’s open source TensorFlow machine learning software library.

How The TPU Is Built

Because Google was in a rush to deploy the TPU, the company didn’t integrate it tightly with the CPUs and instead connected the TPU to the processors via the PCIe I/O bus. This allowed the TPU to plug into servers just as a GPU does. However, the host server has to send the instructions to the TPU rather than the TPU fetching the instructions itself, which means it’s closer in spirit to a floating-point unit co-processor than a GPU. This was also done to simplify design and debugging.

Although it’s a custom ASIC, a type of chip typically designed to run a limited set of instructions, Google said that it has some of the flexibility of an FPGA. This means it can be programmed to handle multiple types of neural networks. Therefore, even if Google’s future needs require different types of machine learning algorithms, the TPUs should be flexible enough to adapt.

Plus, given the performance advantage the TPUs seem to offer over CPUs and GPUs, the company will likely continue to build new generations adapted for whatever machine learning technology is most advanced at the time.


TPU Performance Metrics

Google’s engineers said in a paper about the TPU that the most important metric the company considers when buying chips for its data center servers is not a chip's peak performance, but its cost-performance or, more specifically, its total cost of ownership (TCO). TCO is correlated with power use: the more power a chip uses, the more its TCO rises over its lifetime.

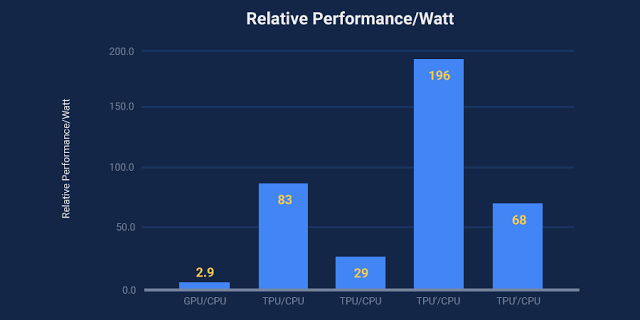

Google used two performance/Watt metrics to compare the power draw of the TPU to that of the Haswell CPU and the K80 GPU. One is the total-performance/Watt metric, which includes the power used by the host server CPU when combined with either a K80 GPU or a TPU. The other is the incremental-performance/Watt, which only refers to the power used by the K80 GPU or the TPU.
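To make the two metrics concrete, here is a minimal sketch in Python. The function names and the throughput and wattage figures are hypothetical placeholders chosen for illustration, not numbers from Google's paper. The only thing taken from the text is which power draw goes into the denominator.

```python
def total_perf_per_watt(perf, host_watts, accel_watts):
    """Performance divided by the power of the whole server (host CPU + accelerator)."""
    return perf / (host_watts + accel_watts)


def incremental_perf_per_watt(perf, accel_watts):
    """Performance divided by the accelerator's own power only."""
    return perf / accel_watts


# Hypothetical example values (assumptions, not measurements).
perf = 1000.0        # inferences per second
host_watts = 300.0   # host server CPU power
accel_watts = 100.0  # accelerator (GPU or TPU) power

total = total_perf_per_watt(perf, host_watts, accel_watts)
incremental = incremental_perf_per_watt(perf, accel_watts)
print(f"total: {total:.2f} perf/W, incremental: {incremental:.2f} perf/W")
```

Because the host's power is left out of the denominator, the incremental figure comes out higher than the total figure for the same workload, which is why the article's incremental ranges are larger than its total ranges.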

A system that includes a Haswell server chip and an Nvidia K80 GPU has 1.2-2.1x the total-performance/Watt of the Haswell CPU alone, while a K80 GPU has an incremental-performance/Watt of 1.7-2.9x compared to a Haswell CPU.

At the same time, a Haswell/TPU server has 17-34x better total-performance/Watt compared to a Haswell CPU, and a relative incremental-performance/Watt of 41-83x for the TPU alone. That also means the TPU has 25-29x the incremental-performance/Watt of a K80 GPU.
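The TPU-versus-K80 figure can be roughly checked by dividing the two incremental ranges quoted above. The sketch below assumes that the low ends of the two ranges correspond to each other, and likewise the high ends, which the article does not state. The variable names are illustrative.

```python
# Ratios relative to a Haswell CPU, as quoted in the article.
tpu_incremental = (41.0, 83.0)  # TPU incremental-performance/Watt
k80_incremental = (1.7, 2.9)    # K80 incremental-performance/Watt

# Assumption: pair the low end with the low end and the high end with the high end.
tpu_vs_k80 = tuple(t / k for t, k in zip(tpu_incremental, k80_incremental))
print(f"TPU vs K80 (incremental): {tpu_vs_k80[0]:.1f}x to {tpu_vs_k80[1]:.1f}x")
```

Under that assumption the division gives roughly 24x to 29x, close to the quoted 25-29x. The small gap at the low end is plausibly due to rounding in the published ranges.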

Google also claimed that its TPU can achieve 15-30x the inference performance of the K80 GPU and the Haswell CPU.

What To Expect From Future TPU Chips

The TPU was manufactured on a 28nm planar process and has been in use since 2015. If a next-generation TPU is made on a 14nm process, it could see a 2x improvement in performance/Watt just from that jump alone, as we’ve already seen from AMD and Nvidia’s 14/16nm GPUs.

Google also said that if it had spent an extra 15 months on designing better logic, which is as long as it took to design the first TPU, it could have increased clock speeds by another 50%. That could be a clue that, if Google is indeed working on a new generation, that kind of design will be included in it.

Because the company rushed to deploy the TPU in its data centers, it used whatever memory and interconnects were available. However, it said that with 4x as much memory bandwidth, the TPU’s performance could increase by another 3x.

Google hasn’t specifically talked about its plans to build a new TPU chip, but going by the performance/Watt of the first generation and how much room there is to improve it, chances are it won’t leave this opportunity on the table. The use of machine learning for all of the company’s services is only going to increase over the next few years, making such chips even more necessary than they are today.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


Vendicar Decarian "GPU u can do anything" - Amdlova

Well.. No. If GPU's could do anything in a practical sense, then there wouldn't be any need for CPU's.

Just as GPU's are optimized for graphics rendering, AI chips are optimized for AI computing. This one happens to be optimized for Google's AI methodologies.

Good for google.

This trend of moving software algorithms to hardware will continue as the limits of Silicon computation are reached. It is a natural consequence of trying to squeeze more computational power out of a technology that has reached its limits of raw computational power in the form of traditional CPU design.

The advantage of these new kinds of chips is massive parallelism optimized to suit a specific task.

Neural Network processing for example can make use of literally hundreds of billions of parallel computing elements each taking the place of a single neuron.

Put enough of those things together with some interneuron communication and a little internal memory and you have yourself a simulated brain.

You can certainly simulate such things on CPU's and even GPU's but neither is well suited to the task since both are far, far too coarse-grained to produce rapid simulation results.

The trick with massive parallelism is in who gets what messages, and when, and where the memory is located and how it is accessed.

Vendicar Decarian "dude above me" - Romeoreject

The dude above you is a moron.

"This sounds amazing." - Romeoreject

The Google algorithms seem to work pretty well for pattern matching.

What the production of the ASIC tells you is that Google is confident enough in the methods used, and sees enough speed advantage in them, that it is willing to commit to the production of an ASIC to implement much of those methods in hardware - which cannot be modified once produced.

Do you think the NSA uses similar ASIC's to monitor you?

bit_user

"Do you think the NSA uses similar ASIC's to monitor you?" - Vendicar Decarian

Don't know, but I also don't care. The level of sophistication of their monitoring technology isn't the issue.

What I do care about is China's monitoring of non-Chinese. I think that's far more likely to impact me, personally. Maybe not for 5 years or more, but it could be even worse than all these ad networks tracking us.

waynes

They are getting advantage from a stripped down functionality circuit the question is what else is it useful for. If it is only useful for a narrow range of things, then what is the use of dwelling on it.

It mentions limited instruction set, so it obviously is not completely hardwired, and they are tailoring it for more functionality for future editions. This extra functionality is likely at increased circuit complexity, which maybe would take some of the speculated speed improvements out of it, and reduce the amount of processing power per area compared to what could be. But what it does mean, is that it will still be a lot more powerful and efficient than at present. But what if you want to make it more functional again, to do everything but 3D/graphics, then these metric advantages will again become less, but this is the sort of circuit we really do need working alongside a GPU graphic card. I call this a Workstation Processing Unit. GPU manufacturers can work on something like this from their GP-GPU experience alongside their GPU lines.

bit_user

"They are getting advantage from a stripped down functionality circuit the question is what else is it useful for. If it is only useful for a narrow range of things, then what is the use of dwelling on it." - waynes

Because machine learning is interesting, as well as GPU computing and the benefit they got by going with an ASIC.

"It mentions limited instruction set, so it obviously is not completely hardwired, and they are tailoring it for more functionality for future editions. This extra functionality is likely at increased circuit complexity, which maybe would take some of the speculated speed improvements out of it, and reduce the amount of processing power per area compared to what could be." - waynes

Maybe, but you don't really know what constitutes the primitives. If the instructions are like computing the tensor product between A and B, then the programmability is probably adding very little overhead.

"But what if you want to make it more functional again, to do everything but 3D/graphics, then these metric advantages will again become less, but this is the sort of circuit we really do need working alongside a GPU graphic card. I call this a Workstation Processing Unit. GPU manufacturers can work on something like this from their GP-GPU experience alongside their GPU lines." - waynes

They already do that. Nvidia has the Tesla cards, which have GPU without any video output. In the case of the P100, the graphics circuitry seems to have been omitted from the chip, entirely. That said, I don't know what proportion of a modern GPU is occupied by the raster engines, but it might not be very much.

GPUs can't really compete with integer-only machine learning ASICs. A GPU must have lots of fp32, and that's going to waste a lot of die area, if you're only using integer arithmetic. I expect that Nvidia is already working on dedicated machine learning chips. If they built an inference engine like Google's, on the same scale as their current GPUs, it would stomp Google's effort into the ground. And bury it.