Nvidia Hopper H100 80GB Price Revealed

Nvidia’s H100 looks to be more expensive than A100.

A Japanese retailer has started taking pre-orders for Nvidia's next-generation Hopper H100 80GB compute accelerator for artificial intelligence and high-performance computing applications. The card will be available in the next several months, and it looks like it will be considerably more expensive than Nvidia's current-generation Ampere A100 80GB compute GPU.

GDep Advance, a retailer specializing in HPC and workstation systems, recently began taking pre-orders for Nvidia's H100 80GB AI and HPC PCIe 5.0 compute card with passive cooling for servers. The board costs ¥4,745,950 ($36,405), which includes a ¥4,313,000 base price ($33,084), a ¥431,300 ($3,308) consumption tax (sales tax), and a ¥1,650 ($13) delivery charge, according to the company (via Hermitage Akihabara). The board will ship in the latter half of the year, though exactly when remains unclear.
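The yen breakdown can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the dollar figures were converted at a single exchange rate, which it infers from the quoted ¥4,745,950 / $36,405 total, and then confirms that the parts add up and that the consumption tax is 10% of the base price.

```python
# Yen amounts quoted by GDep Advance for the H100 80GB PCIe card
BASE_JPY = 4_313_000
TAX_JPY = 431_300
DELIVERY_JPY = 1_650
TOTAL_JPY = 4_745_950
TOTAL_USD = 36_405  # dollar conversion of the total quoted in the article


def check_breakdown():
    """Return (subtotal in yen, tax rate, implied yen per dollar, base price in USD)."""
    subtotal = BASE_JPY + TAX_JPY + DELIVERY_JPY
    tax_rate = TAX_JPY / BASE_JPY
    # Assumption: one exchange rate was used for every figure, inferred from the total
    jpy_per_usd = TOTAL_JPY / TOTAL_USD
    base_usd = BASE_JPY / jpy_per_usd
    return subtotal, tax_rate, jpy_per_usd, base_usd


subtotal, tax_rate, rate, base_usd = check_breakdown()
print(f"subtotal = ¥{subtotal:,}")       # subtotal = ¥4,745,950
print(f"tax rate = {tax_rate:.0%}")      # tax rate = 10%
print(f"implied rate ≈ ¥{rate:.1f}/USD") # implied rate ≈ ¥130.4/USD
print(f"base ≈ ${base_usd:,.0f}")        # base ≈ $33,084
```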

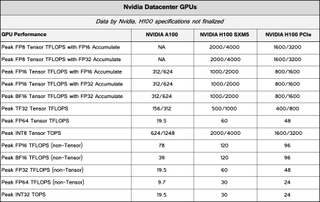

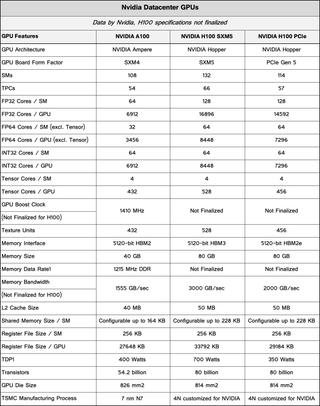

Nvidia's H100 PCIe 5.0 compute accelerator carries the company's latest GH100 compute GPU with 7296 FP64 and 14592 FP32 cores (see exact specifications below), which promises up to 24 FP64 TFLOPS, 48 FP32 TFLOPS, 800 FP16 TFLOPS, and 1,600 INT8 TOPS. The board carries 80GB of HBM2E memory with a 5120-bit interface offering around 2TB/s of bandwidth, and it has NVLink connectors (up to 600 GB/s) that allow building systems with up to eight H100 GPUs. The card is rated for a 350W thermal design power (TDP).
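Two of the quoted specifications are related by simple arithmetic. In the minimal sketch below, the FP32:FP64 core ratio matches the FP32:FP64 TFLOPS ratio, and the memory figures imply a per-pin data rate. It assumes "around 2TB/s" means exactly 2 TB/s in decimal units, so the per-pin result is an approximation rather than an official Nvidia number.

```python
# Figures quoted above for the H100 80GB PCIe card
FP64_CORES = 7_296
FP32_CORES = 14_592
FP64_TFLOPS = 24
FP32_TFLOPS = 48
BUS_WIDTH_BITS = 5_120
BANDWIDTH_TB_S = 2.0  # assumption: "around 2TB/s" treated as exactly 2 TB/s (decimal)


def per_pin_rate_gbps(bandwidth_tb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate implied by total bandwidth and bus width, in Gb/s."""
    bits_per_second = bandwidth_tb_s * 1e12 * 8  # bytes/s -> bits/s
    return bits_per_second / bus_width_bits / 1e9


print(FP32_CORES / FP64_CORES)    # 2.0
print(FP32_TFLOPS / FP64_TFLOPS)  # 2.0
print(per_pin_rate_gbps(BANDWIDTH_TB_S, BUS_WIDTH_BITS))  # 3.125
```

Put differently, roughly 2 TB/s spread across a 5120-bit bus works out to a little over 3.1 Gb/s per pin.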

Nvidia's Hopper-based H100 is at least two times faster than its Ampere-based A100 predecessor, and it looks to be considerably more expensive too. Today, an Nvidia A100 80GB card can be purchased for $13,224, whereas an Nvidia A100 40GB can cost as much as $27,113 at CDW. About a year ago, an A100 40GB PCIe card was priced at $15,849 to $27,113, depending on the reseller. By contrast, an H100 80GB board due in the second half of the year is set to cost around $33,000 (at least in Japan).
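To put "at least two times faster" against these prices, the sketch below computes how much more the H100 costs than each A100 listing and what that means for performance per dollar. Both inputs are assumptions for illustration: the speedup is fixed at the 2x lower bound, and the H100 price is the approximate $33,000 pre-tax Japanese pre-order figure, not a US list price.

```python
# Street prices quoted in the article (USD)
PRICES_USD = {
    "A100 80GB": 13_224,
    "A100 40GB (CDW)": 27_113,
}
H100_80GB_USD = 33_000  # approximate Japanese pre-order price before tax
H100_SPEEDUP = 2.0      # assumption: the "at least two times faster" lower bound


def relative_value(h100_price: float, a100_price: float, speedup: float) -> tuple[float, float]:
    """Return (price ratio, performance-per-dollar ratio) of H100 vs an A100 card."""
    price_ratio = h100_price / a100_price
    # A value above 1.0 means the H100 delivers more performance per dollar
    perf_per_dollar_ratio = speedup / price_ratio
    return price_ratio, perf_per_dollar_ratio


for name, price in PRICES_USD.items():
    price_ratio, value_ratio = relative_value(H100_80GB_USD, price, H100_SPEEDUP)
    print(f"H100 vs {name}: {price_ratio:.2f}x the price, "
          f"{value_ratio:.2f}x the performance per dollar")
```

With these inputs, the H100 costs about 2.5 times as much as the A100 80GB card, so even at the 2x lower bound it offers roughly 0.8 times the performance per dollar. Against the $27,113 CDW price for the A100 40GB, it comes out ahead at about 1.64 times.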

We do not know whether Nvidia plans to raise the list price of its H100 PCIe cards relative to A100 boards on the grounds that customers get at least twice the performance with better performance per watt. We do know, however, that Nvidia will initially ship its DGX H100 and DGX SuperPod systems containing SXM5 versions of GH100 GPUs, as well as SXM5 boards to HPC vendors like Atos, Boxx, Dell, HP, and Lenovo.

Later, the company will begin shipping its H100 PCIe cards to HPC vendors, and only then will those boards become available to smaller AI/HPC system integrators and value-added resellers. All of these companies are naturally more interested in selling complete systems with H100 inside than in selling bare cards. Therefore, H100 PCIe cards may initially be overpriced due to high demand, limited availability, and retailers' appetites.


Anton Shilov is a Freelance News Writer at Tom’s Hardware US. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.
