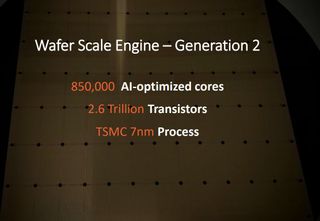

Cerebras Teases World's Largest Chip with 2.6 Trillion 7nm Transistors and 850,000 Cores

Big, Bigger, Biggest

The original Cerebras Wafer Scale Engine (WSE) is a marvel in every sense, but the company has now upped the ante. That first chip brought an unbelievable 400,000 cores, 1.2 trillion 16nm transistors, 46,225 square millimeters of silicon, and 18 GB of on-chip memory, all in one chip that is as large as an entire wafer. Add in that the chip sucks 15kW of power and features 9 PB/s of memory bandwidth, and you've got a recipe for what Cerebras bills as the world's fastest AI processor. As you can see in the image above, the chip is nearly as large as a laptop.

How do you top that? According to the slide deck Cerebras shared at Hot Chips 2020, you move to TSMC's 7nm process, which allows a mind-bending 850,000 cores and 2.6 trillion transistors, all in a single chip the size of an entire wafer. The company says it already has the massive chips up and running in its labs.

The current Cerebras Wafer Scale Engine (WSE) sidesteps the reticle limit of modern chip manufacturing, which caps the size of a single monolithic processor die, to create the wafer-sized processor. The company accomplishes this feat by stitching together the dies on the wafer with a communication fabric, allowing them to work as one large, cohesive unit.

The end result is nearly 56 times larger than the world's largest GPU (the new Nvidia A100 measures 826 mm² with 54.2 billion transistors). Here's a rundown of the existing architecture, and another article covering the massive custom system used to run the processors in data centers.
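For a sense of scale, the size comparison can be checked straight from the figures quoted in this article. The short Python snippet below is just an illustrative back-of-the-envelope calculation, not anything Cerebras published: it divides the first-gen WSE's die area and transistor count by the A100's.

```python
# Figures as quoted in the article.
WSE_AREA_MM2 = 46_225        # first-gen Wafer Scale Engine die area
A100_AREA_MM2 = 826          # Nvidia A100 die area
WSE_TRANSISTORS = 1.2e12     # 1.2 trillion
A100_TRANSISTORS = 54.2e9    # 54.2 billion

# How many A100 dies' worth of silicon (and transistors) fit in one WSE.
area_ratio = WSE_AREA_MM2 / A100_AREA_MM2
transistor_ratio = WSE_TRANSISTORS / A100_TRANSISTORS

print(f"Area ratio: {area_ratio:.2f}x")              # Area ratio: 55.96x
print(f"Transistor ratio: {transistor_ratio:.1f}x")  # Transistor ratio: 22.1x
```

The area works out to just under 56 times the A100, while the transistor gap is smaller (about 22 times), since the A100 is built on a denser 7nm process than the first-gen WSE's 16nm.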

Here you can see images of the first-gen system that houses the chip, with robust power delivery and cooling to feed the power-hungry processor. Naturally, the second-gen Wafer Scale Engine will still occupy the same die area (the company is constrained by the size of a single wafer, after all), but it more than doubles both the transistor count and the number of cores. We expect the company will also increase the memory capacity and beef up the chip interconnects to improve on-chip bandwidth, but we'll learn more details when the company announces the final product.
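To put "more than doubling" in numbers, here's a quick Python sketch that computes the generation-over-generation ratios from the first-gen (16nm) and second-gen (7nm) figures Cerebras disclosed. The dictionary layout and the `scaling` helper are purely illustrative names, not anything from Cerebras.

```python
def scaling(old: dict, new: dict) -> dict:
    """Return the new/old ratio for every metric listed for the old generation."""
    return {metric: new[metric] / old[metric] for metric in old}

# Reported figures; both generations occupy the same wafer-sized die.
gen1 = {"cores": 400_000, "transistors": 1.2e12}  # 16nm WSE
gen2 = {"cores": 850_000, "transistors": 2.6e12}  # 7nm second-gen WSE

ratios = scaling(gen1, gen2)
for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.3f}x")
# cores: 2.125x
# transistors: 2.167x
```

Because the die area stays fixed at roughly one wafer, nearly all of that gain has to come from the shrink to 7nm rather than from extra silicon.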

Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.
