AMD Radeon R9 Fury Review: Sapphire Tri-X Overclocked

Quickly following the Fury X, AMD’s next graphics foray is a cut-down Fiji called Fury, running at 1000MHz GPU clock. We tested Sapphire’s Tri-X Overclocked version.


Power Consumption And Efficiency

We’re using the same benchmark system as in our AMD Radeon R9 Fury X launch article, with a few tweaks. Idle power consumption is now measured on a system carrying the kind of software that tends to accumulate over time; last time, the system was completely “fresh.” In addition, the oscilloscope now applies linear interpolation to the data itself, rather than the interpolation being applied later during analysis.
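For readers unfamiliar with the term, here is a minimal sketch of what linear interpolation between two power samples looks like, along with a time-weighted average over a short trace. The sample values and the function names `lerp_power` and `average_power` are made up for illustration; this is not the oscilloscope's actual processing.

```python
def lerp_power(t, t0, p0, t1, p1):
    """Linearly interpolate power (watts) at time t between two samples."""
    if t1 == t0:
        raise ValueError("samples must have distinct timestamps")
    frac = (t - t0) / (t1 - t0)
    return p0 + frac * (p1 - p0)


def average_power(samples):
    """Time-weighted mean power over (time_s, watts) samples.

    Uses the trapezoidal rule, which is equivalent to averaging the
    linearly interpolated curve between consecutive samples.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    energy = 0.0  # accumulated watt-seconds
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        energy += 0.5 * (p0 + p1) * (t1 - t0)
    return energy / (samples[-1][0] - samples[0][0])


# Hypothetical trace: (time in seconds, power in watts).
samples = [(0.0, 250.0), (1.0, 260.0), (3.0, 250.0)]

mid = lerp_power(0.5, 0.0, 250.0, 1.0, 260.0)  # halfway between 250 W and 260 W
avg = average_power(samples)
print(mid, avg)
```

Because the samples are unevenly spaced in this example, the time-weighted average differs from a plain mean of the three readings.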

The biggest change, which we’ll stick with for all future launch articles, concerns the content, though.

We’ll look at power consumption in direct relation to gaming performance, and we’ll do so separately for 1920x1080 and 3840x2160 since there are major differences between the two. We’ll also look at several different games, and even run some of them with different settings, such as tessellation. In addition, we’ve added some applications that aren’t related to gaming. Life’s not always just about gaming, after all.
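As a rough sketch of how power can be put in direct relation to gaming performance, the snippet below divides average power by average frame rate, separately per resolution. All numbers and the helper name `watts_per_fps` are placeholders for illustration, not measurements from this review.

```python
def watts_per_fps(avg_watts, avg_fps):
    """Return average power draw per frame per second (lower is better)."""
    if avg_fps <= 0:
        raise ValueError("frame rate must be positive")
    return avg_watts / avg_fps


# Hypothetical (watts, FPS) pairs, keyed by resolution.
runs = {
    "1920x1080": (200.0, 100.0),
    "3840x2160": (250.0, 50.0),
}

efficiency = {res: watts_per_fps(w, fps) for res, (w, fps) in runs.items()}
for res, value in efficiency.items():
    print(f"{res}: {value:.2f} W per FPS")
```

Keeping the resolutions separate matters because both the power draw and the frame rate change between them, so a single combined figure would hide the difference.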

Bear in mind that these tests are merely snapshots in specific applications. A benchmark that isn’t part of our suite will likely fall somewhere between our average figure and the torture test peak. There are no absolutes these days; best estimates will always consist of a range.

Idle Power Consumption

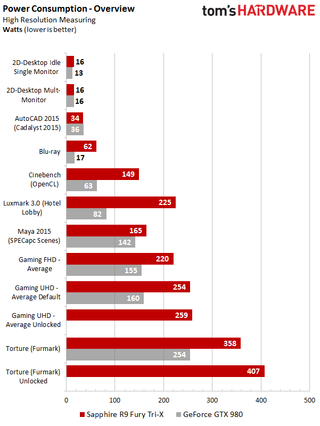

At 16W, the partner card’s idle power consumption matches that of the original Radeon R9 Fury X. Nothing changes when a TV with a different refresh rate and a 4K monitor with a different resolution are connected as well, which is nice to see.

Ultra HD (3840x2160) Using Default And Unlocked BIOS

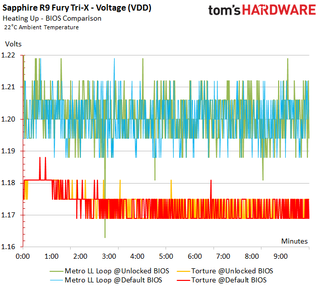

Having two BIOSes is nice, but they do practically nothing for performance. We’ll see later that the frequencies stay the same no matter which firmware you use. The unlocked BIOS just allows slightly higher average voltages over longer time periods, resulting in marginal frame rate differences.

Compared to the (slightly) slower GeForce GTX 980 with its average power consumption of 160W, the default BIOS’ 254W and unlocked BIOS’ 259W look absolutely massive.
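To put those three averages in proportion, here is a short worked calculation. The wattages are the ones quoted above; the helper name `percent_more` is ours and purely illustrative.

```python
def percent_more(value, baseline):
    """How much larger value is than baseline, in percent."""
    return (value / baseline - 1.0) * 100.0


GTX_980_W = 160.0        # average power quoted above
FURY_DEFAULT_W = 254.0   # Sapphire card, default BIOS
FURY_UNLOCKED_W = 259.0  # Sapphire card, unlocked BIOS

default_vs_980 = percent_more(FURY_DEFAULT_W, GTX_980_W)
unlocked_vs_980 = percent_more(FURY_UNLOCKED_W, GTX_980_W)
unlocked_vs_default = percent_more(FURY_UNLOCKED_W, FURY_DEFAULT_W)

print(f"Default BIOS vs. GTX 980:  +{FURY_DEFAULT_W - GTX_980_W:.0f} W ({default_vs_980:.0f}% more)")
print(f"Unlocked BIOS vs. GTX 980: +{FURY_UNLOCKED_W - GTX_980_W:.0f} W ({unlocked_vs_980:.0f}% more)")
print(f"Unlocked vs. default BIOS: +{FURY_UNLOCKED_W - FURY_DEFAULT_W:.0f} W ({unlocked_vs_default:.0f}% more)")
```

In other words, the Sapphire card draws roughly 59 to 62 percent more power than the GeForce GTX 980 in this test, while the gap between its own two BIOSes is only about 2 percent.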

The following two picture galleries can be used to flip back and forth between watts per FPS (Picture 1), power consumption (Picture 2) and benchmark results (Picture 3) for all games and settings we tested. We’ll first take a look at the default BIOS:

The unlocked BIOS’ only contributions are worse efficiency and a hotter graphics card:

Full HD (1920x1080) Using Default BIOS

We’re using only the default BIOS at this resolution. Spot tests showed an increase of just one to three watts after switching to the unlocked BIOS, and the higher power limit doesn’t impact performance at all.

Once again, the GeForce GTX 980 is a bit slower, but it uses a lot less power and is consequently more efficient.

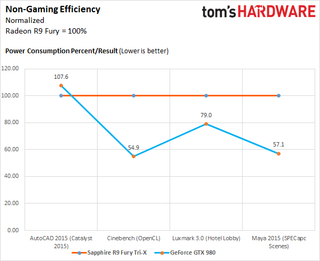

Efficiency For Different Applications

Let's shift away from gaming for a moment. Four tests show that professional software needs to be measured on a title-by-title basis because driver optimization, and with it application performance, can vary wildly. Generalizing just isn’t possible. We’re still offering a quick performance snapshot here.

We set the Sapphire R9 Fury Tri-X’s performance as 100 percent, since the different benchmarks have dissimilar scoring systems.
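Normalizing to a 100 percent baseline can be sketched as follows. The scores and the names "Card B" and "Card C" are placeholders rather than results from our tests, and the sketch assumes a benchmark where a higher score is better.

```python
def normalize_to_baseline(scores, baseline_name):
    """Express every score as a percentage of the baseline card's score."""
    base = scores[baseline_name]
    if base == 0:
        raise ValueError("baseline score must be non-zero")
    return {name: 100.0 * score / base for name, score in scores.items()}


# Hypothetical raw scores from one benchmark (higher is better).
raw_scores = {
    "Sapphire R9 Fury Tri-X": 80.0,
    "Card B": 100.0,
    "Card C": 60.0,
}

relative = normalize_to_baseline(raw_scores, "Sapphire R9 Fury Tri-X")
for name, pct in relative.items():
    print(f"{name}: {pct:.0f}%")
```

For a benchmark that reports time to completion, where lower is better, the ratio would need to be inverted before results from different tests can share one chart.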

Not taking performance into account, the internal power consumption duel can be summarized like this:

So What Does The Unlocked BIOS Really Get You?

If we were mean, we’d answer the above question with "basically nothing." The second firmware is intended for overclockers, but its inclusion is questionable for a graphics card with so little frequency headroom. Other than the marginally higher power consumption during gaming, there’s barely any difference to be found between the two BIOSes. However, the graph of the voltage results for the stress test shows the substantially smaller intervals at higher voltage.

These are what increase the power consumption slightly, even if the bumps have no measurable impact on gaming performance.

It’s hard to draw a definitive conclusion about the Sapphire R9 Fury Tri-X knowing that AMD says upcoming drivers will tease more performance out of it. However, it is clear that, due to the higher leakage current, the air-cooled partner card is less efficient than the liquid-cooled Radeon R9 Fury X. Its power consumption is significantly higher than that of comparable Nvidia graphics cards.


Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.


Troezar: Some good news for AMD. A bonus for Nvidia users too, more competition equals better prices for us all.

AndrewJacksonZA: Kevin, Igor, thank you for the review. Now the question people might want to ask themselves is, is the $80-$100 extra for the Fury X worth it? :-)

vertexx: When the @#$@#$#$@#$@ are your web designers going to fix the bleeping arrows on the charts????!!!!!

ern88: I would like to get this card. But I am currently playing at 1080p, but will probably got to 1440p soon!!!!

confus3d: Serious question: does 4k on medium settings look better than 1080p on ultra for desktop-sized screens (say under 30")? These cards seem to hold a lot of promise for large 4k screens or eyefinity setups.

rohitbaran: This is my next card for certain. Fury X is a bit too expensive for my taste. With driver updates, I think the results will get better.

Larry Litmanen (quoting confus3d): "Serious question: does 4k on medium settings look better than 1080p on ultra for desktop-sized screens (say under 30")? These cards seem to hold a lot of promise for large 4k screens or eyefinity setups."

I was in microcenter the other day, one of the very few places you can actually see a 4K display physically. I have to say i wasn't impressed, everything looked small, it just looks like they shrunk the images on PC.

Maybe it was just that monitor but it did not look special to the point where i would spend $500 on monitor and $650 for a new GPU.