AMD's LiquidVR Puts Processing Muscle Behind Virtual Reality

AMD announced Liquid VR, a virtual reality initiative that takes the immediate form of a limited, alpha version SDK being seeded with select developers during GDC this week. The SDK provides access to a series of underlying processing advances (using AMD hardware, naturally) aimed at reducing latency, broadening device compatibility and raising content quality.

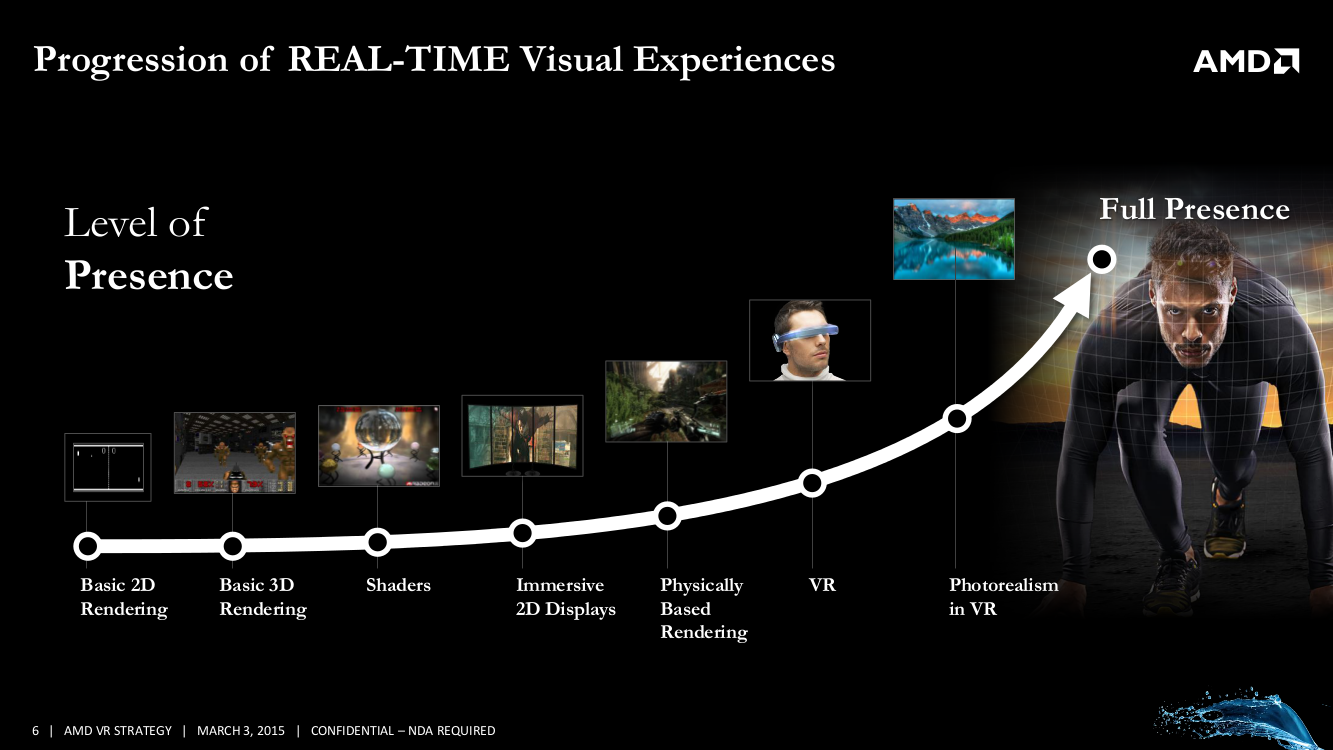

Raja Koduri, AMD's graphics CTO, called virtual reality "the next frontier for visual computing," saying today's announcements are really just the beginning, and that AMD wanted to drive virtual reality to full presence, or photo realism. To get there, he said, it will require "full sensory integration," and scalable CPUs, GPUs, and (hardware) accelerators, which will be a crucial component. AMD to the rescue, then.

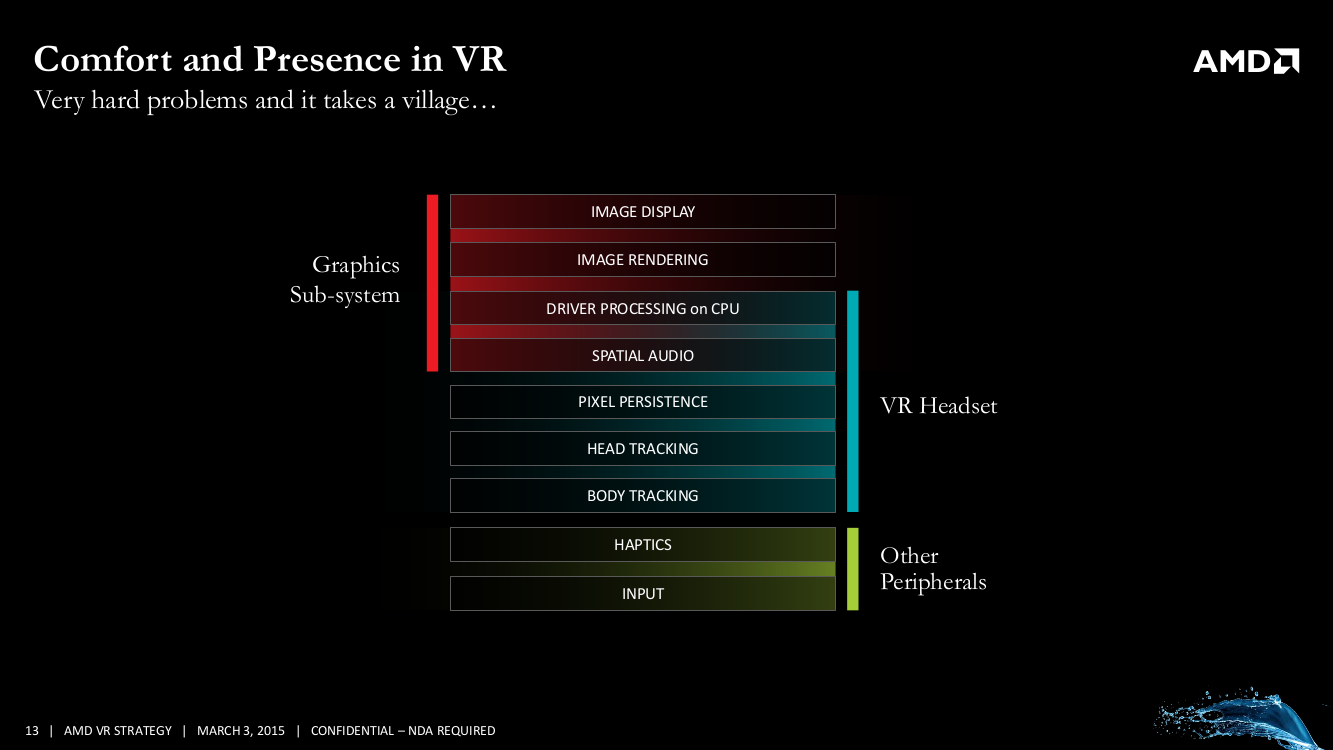

The road to VR, Koduri said, demands adherence to two key rules: don't break presence; and "if your CPU and GPU can't keep up, you throw up." The entire ecosystem, from the graphics stack to the driver to the peripherals and even the audio processing stack, must work harmoniously, he said. And to underscore this while announcing Liquid VR, AMD involved a variety of partners, including Oculus and Crytek.

While gaming stands to be the killer app, Koduri said that he was equally enamored with the possibilities in education, medicine, training and simulation, as well as big data visualization. In other words, AMD is investing some serious resources in virtual reality.

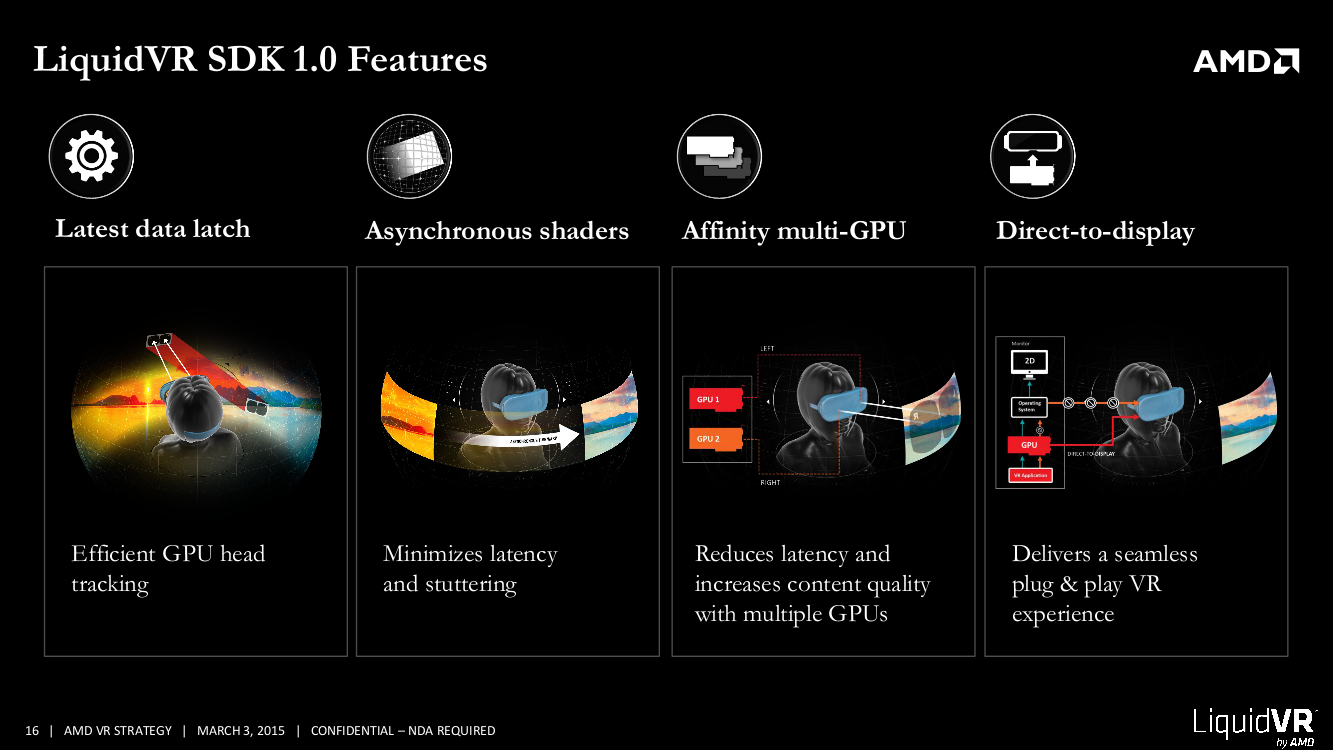

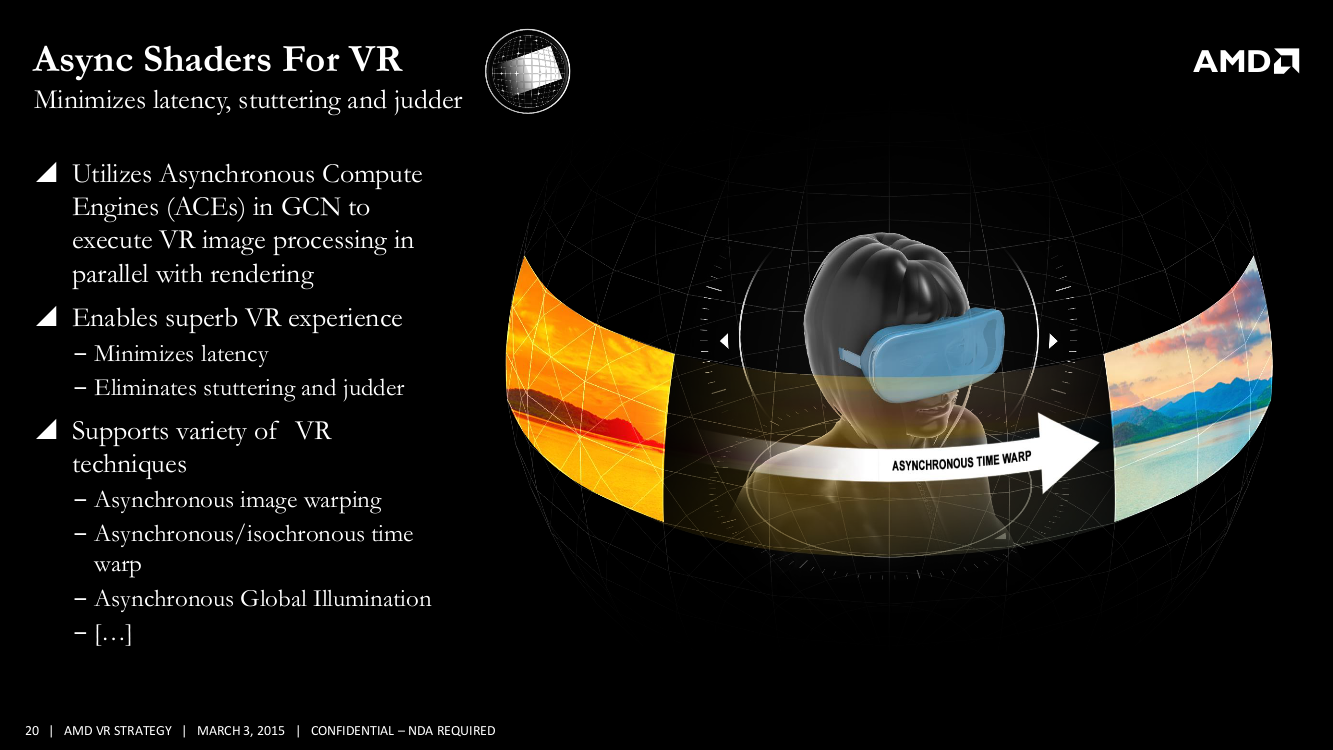

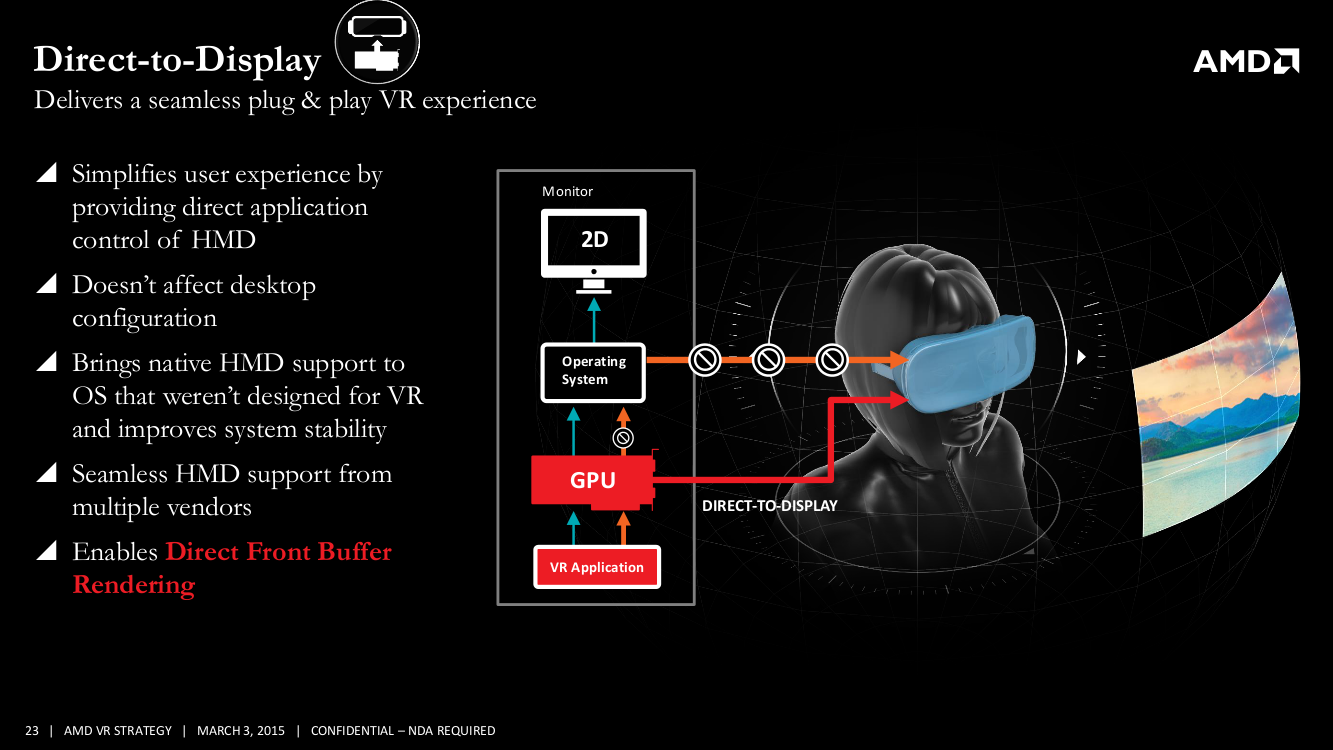

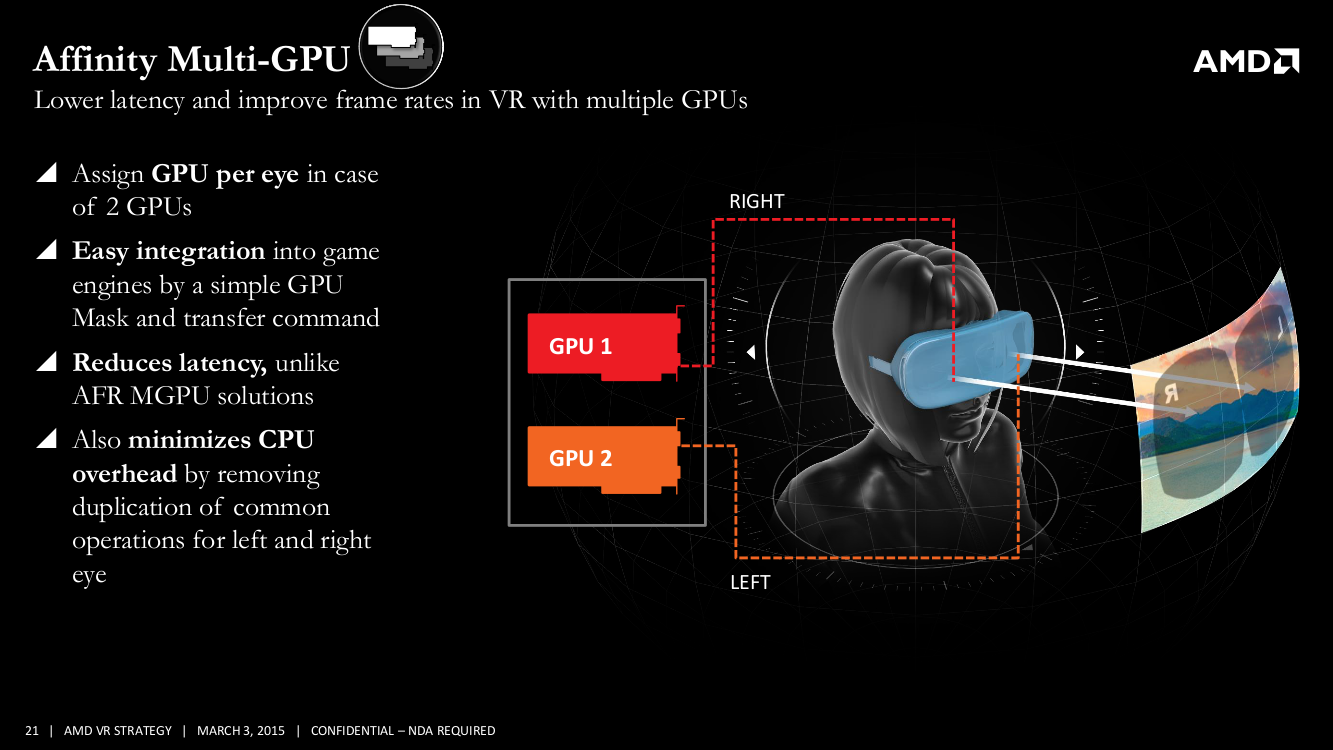

In particular, Liquid VR enables features including Latest Data Latch, Asynchronous Shaders, Affinity Multi-GPU, and Direct To Display. Much of this really re-imagines the way frames are rendered, with the goal of shaving every possible millisecond of latency between head movement and visualization (or "motion to photons," to use AMD's phrasing).

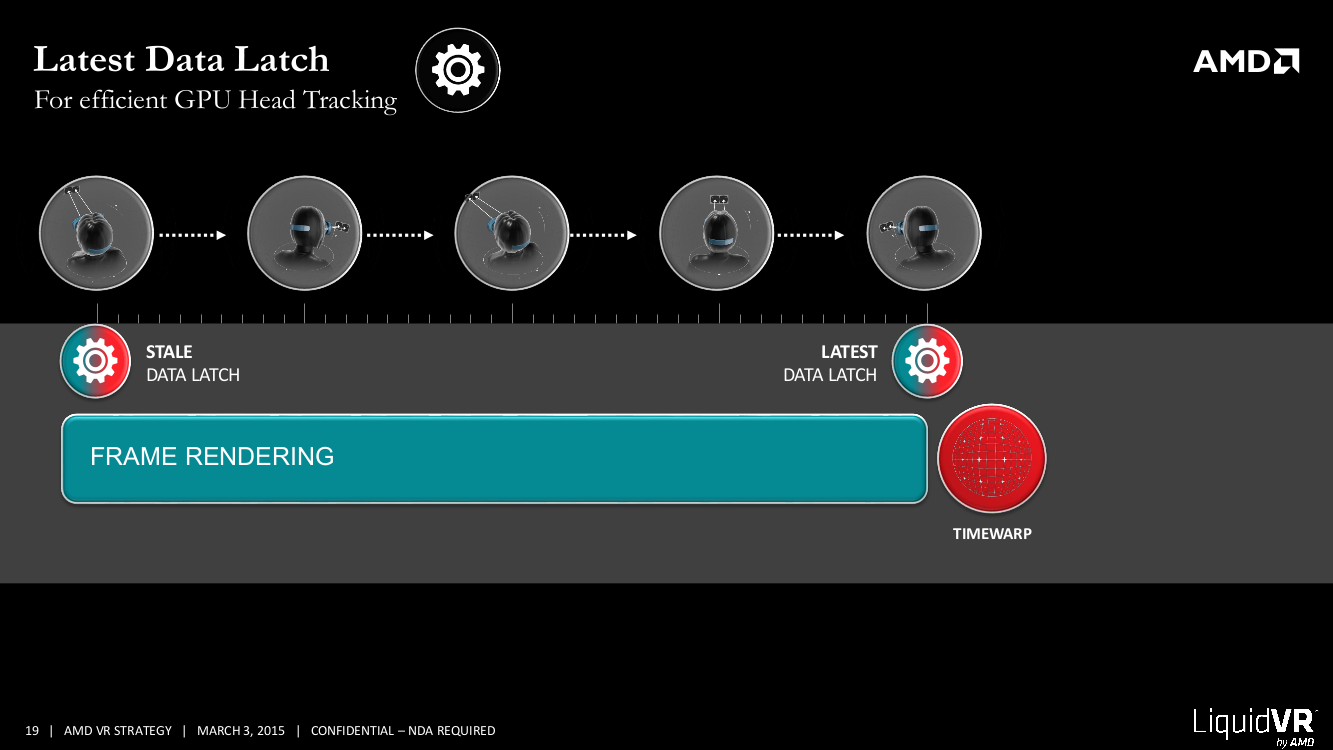

For example, "latest data latch" ensures that when the GPU grabs or binds data, it's using the very last piece of information on head tracking before VSync begins. Layla Mah, AMD's head design engineer, said that the old way of doing things meant grabbing whatever data was present, old or new, and rendering it, but now developers can ensure the headset is capturing and displaying absolutely the most recent motion.
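To make the difference concrete, here is a minimal sketch of the idea in Python. It is not AMD's API: the `PoseSample` type, the `latest_pose_before` helper, the 1 kHz tracker and the timings are all assumptions chosen for illustration. It compares the head pose available when the CPU submits its commands with the one available when the GPU actually binds the data.

```python
from dataclasses import dataclass


@dataclass
class PoseSample:
    t_ms: float     # time at which the tracker produced this sample
    yaw_deg: float  # head yaw at that time


def latest_pose_before(samples, deadline_ms):
    """Return the newest sample produced at or before deadline_ms."""
    eligible = [s for s in samples if s.t_ms <= deadline_ms]
    if not eligible:
        raise ValueError("no pose sample available before the deadline")
    return max(eligible, key=lambda s: s.t_ms)


# Hypothetical tracker: one sample per millisecond, head turning at 100 deg/s.
samples = [PoseSample(t_ms=float(t), yaw_deg=0.1 * t) for t in range(12)]

submit_ms = 2.0    # assumed time at which the CPU records its commands
gpu_bind_ms = 9.0  # assumed time at which the GPU reads the pose

early = latest_pose_before(samples, submit_ms)      # the "old way"
latched = latest_pose_before(samples, gpu_bind_ms)  # latched as late as possible

# How much fresher the latched pose is than the one captured at submit time.
pose_age_saved_ms = latched.t_ms - early.t_ms
```

In this toy timeline the latched pose is several milliseconds newer than the one captured at submit time, which is the gap the feature is meant to close.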

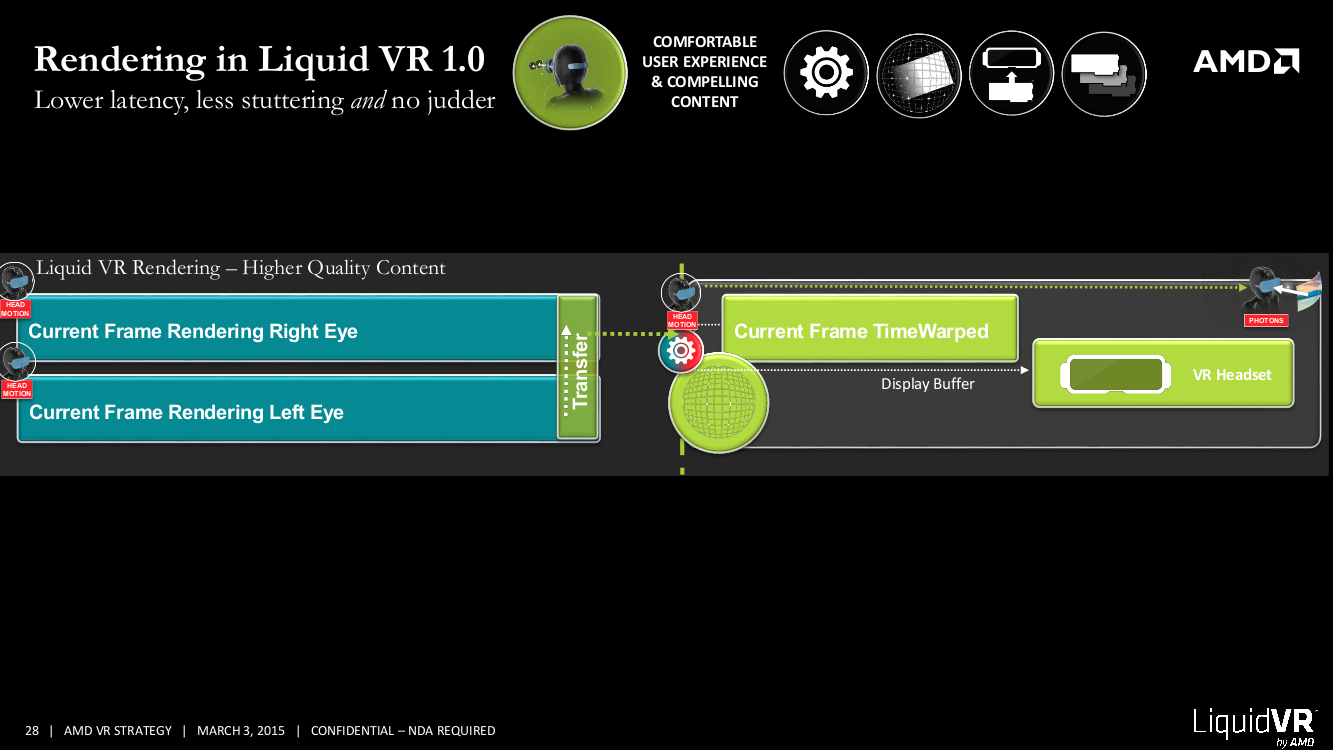

Shaders can now access asynchronous compute engines in GCN to process virtual reality images through the hardware in parallel with rendering. Because of this, Liquid VR enables asynchronous time warp, which is essentially the ability to re-project pixels to make them look the right way for your current head position. (It also lets you do things like ray tracing asynchronously.)
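As a rough illustration of what re-projection involves, the sketch below estimates how far the center of an already-rendered image would have to shift to account for a small change in head yaw. It assumes a simple pinhole camera and rotation about one axis only; the function name, resolution and field of view are hypothetical, and a real time warp handles full 3D rotation and lens distortion.

```python
import math


def yaw_shift_pixels(rendered_yaw_deg, current_yaw_deg, width_px, hfov_deg):
    """Horizontal shift, in pixels at the image center, for a pure yaw change.

    Assumes a pinhole camera. The sign convention (positive means the head
    turned toward positive yaw) is an assumption of this sketch.
    """
    # Focal length in pixels, derived from the horizontal field of view.
    focal_px = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    delta_rad = math.radians(current_yaw_deg - rendered_yaw_deg)
    return focal_px * math.tan(delta_rad)


# Hypothetical case: the head turned 1 degree after the frame was rendered,
# on a 1080-pixel-wide eye buffer with a 90 degree horizontal field of view.
shift = yaw_shift_pixels(rendered_yaw_deg=0.0, current_yaw_deg=1.0,
                         width_px=1080, hfov_deg=90.0)
```

Even a one degree turn moves the image by several pixels at this resolution, which is why warping with the newest head pose matters.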


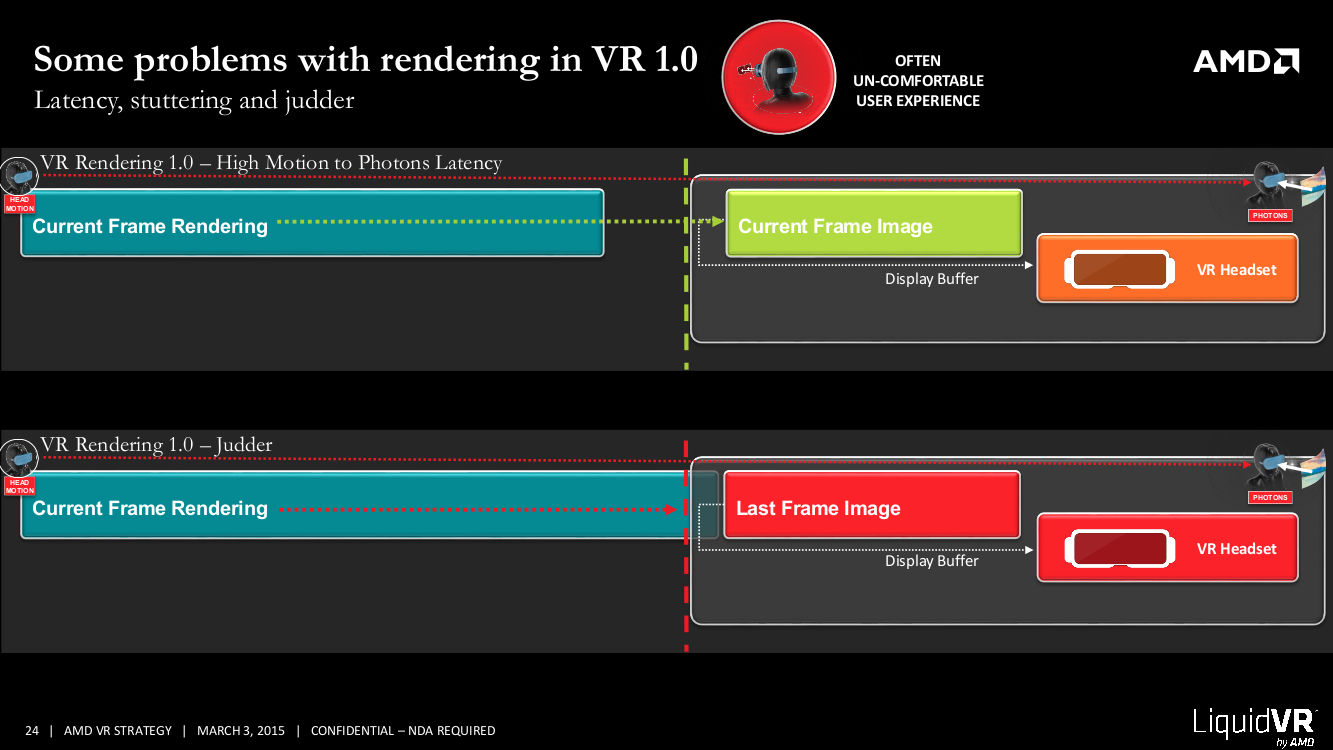

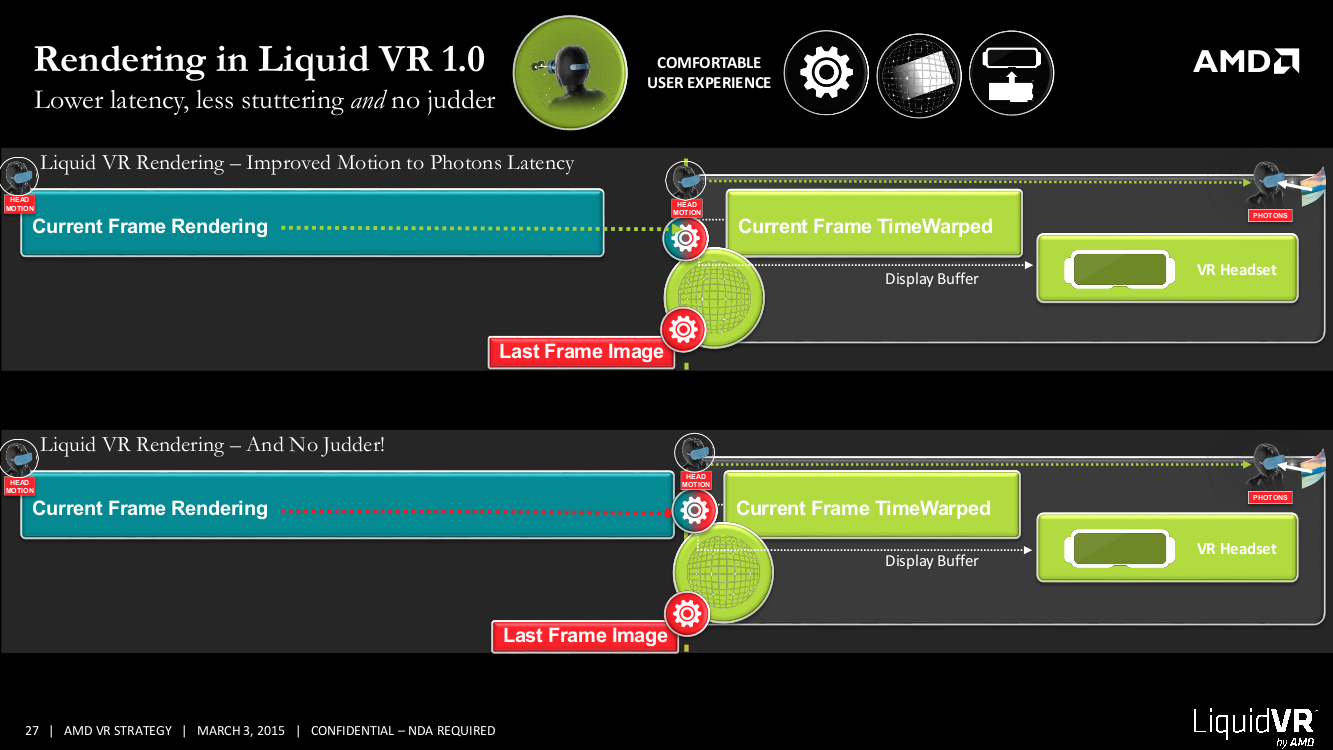

You can see this architecturally in the images above, starting with the before, without "latest data latch" and without asynchronous compute, where both latency and judder are problematic.

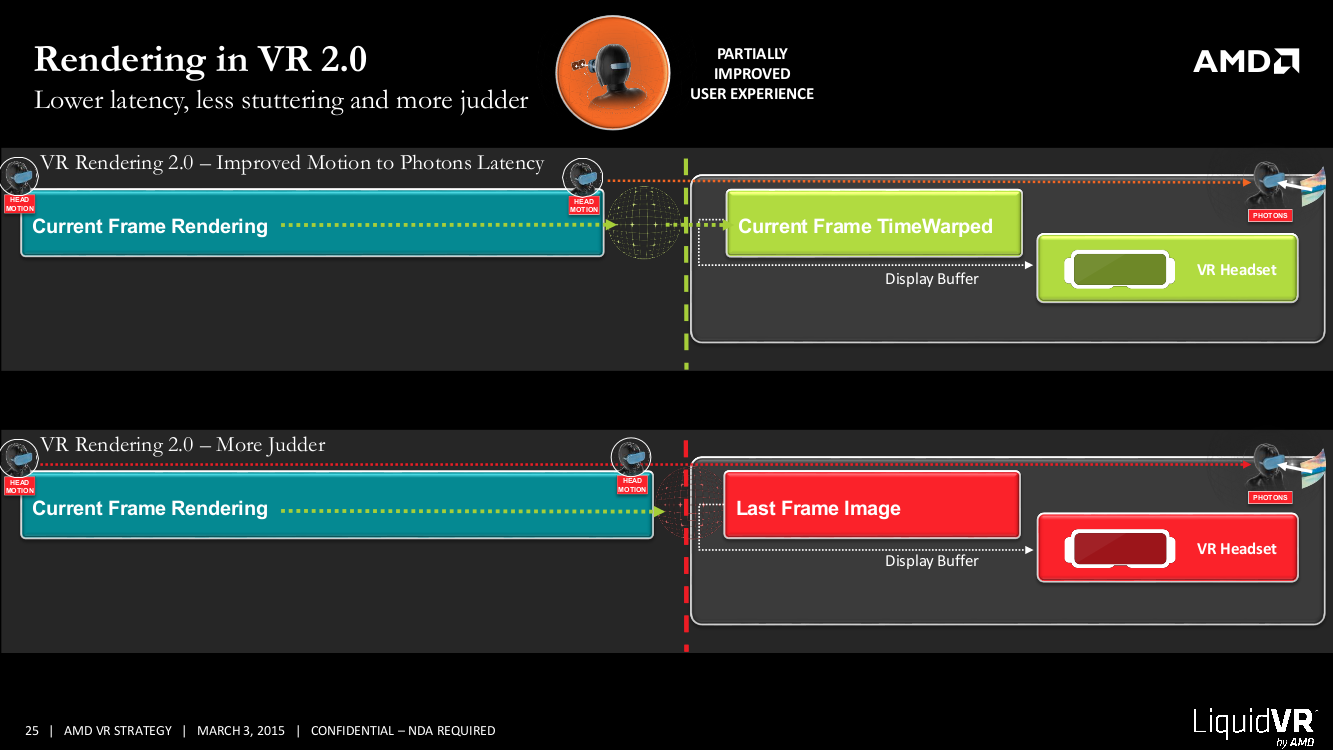

In the middle image, we're basically looking at the latest approaches by companies like Oculus (AMD called this VR 2.0), obviously without deep access to the hardware, or to some of these features; but even there, the latest head tracking data gets rendered and time warped, reducing the appearance of latency. But the time warp is synchronous, and so the frame rendering and time warp have to happen before VSync, Mah said. If it happens after VSync, you get judder.

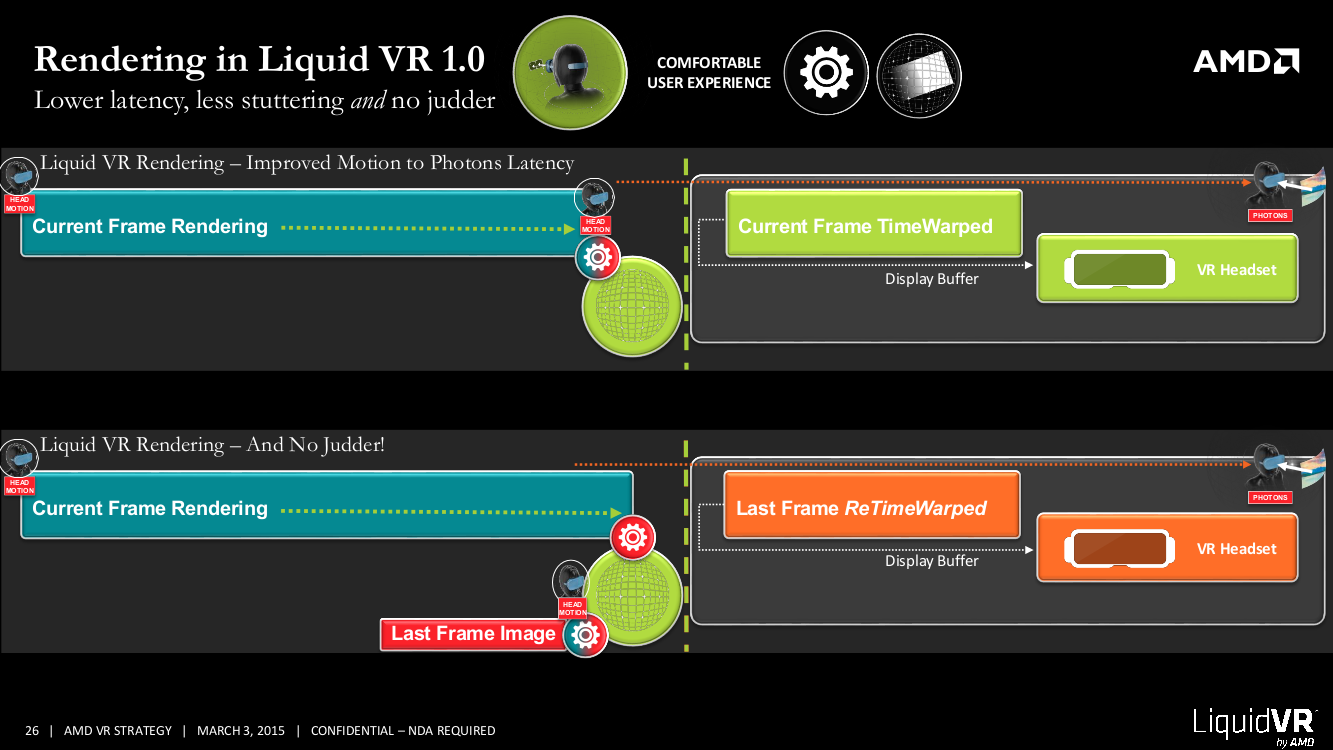

In the after image (the last one above), Liquid VR removes all of those problems. AMD is exposing the ability to move the warp asynchronously before VSync. If the frame isn't ready, the latest data latch is used (using the latest frame, or an old frame if that's all that's ready), and this process always happens before VSync. As we'll see in a moment, AMD can also squeeze one last benefit here, using another feature of Liquid VR.
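The contrast between the synchronous and asynchronous cases can be sketched with a toy model. It is a deliberate simplification and not AMD's scheduler: it assumes each frame starts rendering at a VSync, that an earlier frame is always available to re-warp, and that the warp costs a fixed 1 ms. The function name and the render times are made up for illustration.

```python
def classify_vsyncs(render_ms, vsync_ms=1000 / 90, warp_ms=1.0):
    """Label what each refresh shows under synchronous vs. asynchronous warp.

    render_ms holds one render time per frame. A frame counts as "on time"
    if rendering plus the warp fits inside one refresh interval.
    """
    sync, async_ = [], []
    for r in render_ms:
        on_time = r + warp_ms <= vsync_ms
        # Synchronous warp: a late frame means nothing new reaches the display.
        sync.append("new frame, warped" if on_time else "missed vsync (judder)")
        # Asynchronous warp: a late frame means the previous frame is warped
        # again with the newest head pose, so the display still updates.
        async_.append("new frame, warped" if on_time else "old frame, re-warped")
    return sync, async_


# Hypothetical render times in milliseconds; the second frame runs long.
sync_result, async_result = classify_vsyncs([8.0, 12.0, 9.0])
```

In this model both approaches lose the same frame, but only the synchronous one shows it to the user as judder.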

AMD's direct to display capability promises to give direct application control to the headset (through an AMD Radeon graphics card, of course), regardless of the headset provider, and outside of the headset SDK.

It also enables direct front buffer rendering. You can see the benefits of that in the second image directly above; here, the time warp can be moved into the display at scan-out time. Essentially, the warp starts during scan-out, removing the cases where you latch an old frame buffer. Scan-out happens after VSync, so you basically get a little more time to complete the frame (a millisecond or more, Mah said), further reducing latency. The typical latency in the motion-to-photon sequence is about 11 milliseconds at 90 Hz, Mah said, so one or two milliseconds is a pretty big deal.
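The 11 ms figure lines up with the length of a single refresh interval at 90 Hz. A quick back-of-the-envelope check (the helper name is just for illustration) shows how large a one or two millisecond saving is relative to that budget:

```python
def frame_budget_ms(refresh_hz):
    """Length of one refresh interval in milliseconds."""
    return 1000.0 / refresh_hz


budget = frame_budget_ms(90)  # roughly 11.1 ms per refresh

# Share of the per-frame budget represented by a 1 ms and a 2 ms saving.
savings = {saved_ms: saved_ms / budget for saved_ms in (1.0, 2.0)}

for saved_ms, share in savings.items():
    print(f"{saved_ms:.0f} ms is {share:.0%} of a {budget:.1f} ms frame")
```

One millisecond is 9 percent of the interval and two milliseconds is 18 percent, so the extra time gained from warping during scan-out is far from negligible.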

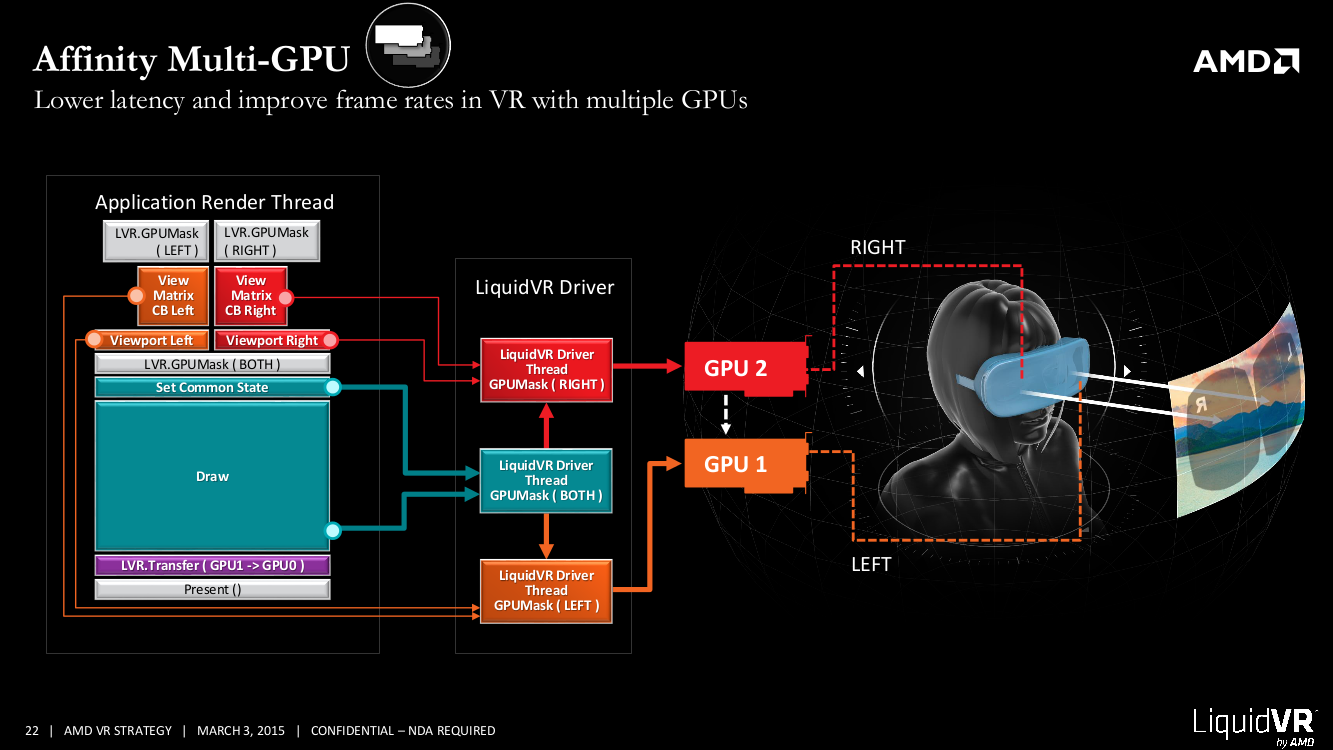

Affinity multi-GPU can take things even further, depending on the demands of the content. This capability assigns a GPU to each eye. Where AFR (alternate frame rendering) might have sped up processing, Mah said, the potential for latency between the eyes is obviously undesirable. In the second image above you can see how commands can be sent to all GPUs, or to each separately, before the driver does its work to composite the output.

You can see the rendering process in the final image above. Even here, AMD exposes the ability to do one time warp per GPU. The developer can warp the eyes separately and then transfer the data, Mah said, or transfer the data and then time warp it on one GPU. If the developer takes advantage of front buffer rendering, that obviously has to come from the GPU to which the display is attached.
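One way to picture the idea of sending commands to all GPUs or to each one separately is a per-command affinity mask. The sketch below is purely illustrative: the mask constants, the `dispatch` helper and the command names are hypothetical and are not taken from the Liquid VR SDK.

```python
# Hypothetical bit masks: one bit per GPU.
GPU0 = 0b01
GPU1 = 0b10
ALL_GPUS = GPU0 | GPU1


def dispatch(commands, gpu_count=2):
    """Route each (mask, name) command to every GPU whose bit is set."""
    queues = [[] for _ in range(gpu_count)]
    for mask, name in commands:
        for gpu in range(gpu_count):
            if mask & (1 << gpu):
                queues[gpu].append(name)
    return queues


commands = [
    (ALL_GPUS, "update shadow maps"),  # shared work, broadcast to both GPUs
    (GPU0, "draw scene, left eye"),    # per-eye work, one GPU each
    (GPU1, "draw scene, right eye"),
    (GPU0, "time warp, left eye"),     # one warp per GPU, as described above
    (GPU1, "time warp, right eye"),
]

queues = dispatch(commands)
```

Here each GPU ends up with the shared work plus its own eye, and the alternative Mah described (transfer first, then warp both eyes on one GPU) would simply give both warp commands the same mask.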

GDC is already witnessing a series of announcements around advances in various APIs, including DirectX 12 and OpenGL, so AMD would only say (so far) that it had leveraged much of the engineering behind Mantle, and that Liquid VR would be enabled through all relevant APIs, starting for now with DirectX 11.

AMD's Koduri indicated that support for the Razer-led OSVR initiative was premature, but that the company had taken a look at it.

Follow Fritz Nelson @fnelson. Follow us @tomshardware, on Facebook and on Google+.

-

turkey3_scratch Seems very cool. If you ever have gone to Disney World and went onto the Carousel of Progress, the very last one is supposed to be what the future will be like. Much of the stuff is already here now (the ride needs updated, it's been about 15 years probably). For instance, the woman spoke to her stove which set the temperature, and in the background a kid was playing a space shooting video game with real-time glasses on his face that captured his motions. It reminds me so much of this and how we are advancing. -

falchard Much more awesome to build out a room with the dedicated purpose of gaming. 4 projectors. 1 in front, 1 to the left, 1 to the right, and 1 to the ceiling. Program the shader to have 4 cameras in each of those directions, and... project the world to your room. The cooler thing is that this is already possible with a single AMD GPU. -

rokit Something strange happened to AMD lately, they started to develop not just hardware but a way to use it efficiently. -

beetlejuicegr nono guys you are not looking ahead,

a gaming corner with full vr for me is

a flexible overlay material as screen that covers all surfaces of your gaming room and has sensors to see you, your 3d image and it's changes (movement) and display everything in perspective. Now this is what it will become in some decades and thats the really ahead of time thinking. no glasses and stuff, just you and the room.

Until then, i think the previous step than this will be glasses that are getting rendered video from cloud computing (the real cloud as definition, supercomputers with extreme graphic capabilities that will stream to YOUR glasses and others' what you would be seeing). -

rokit to beetlejuicegr, well if you want to take stakes higher we could always look forward to the tech shown in Sword Art Online. And there is some research into this kind of technology, it has been developing for years already but it won't come to SAO level of tech for quite a while. And a room made of screens is too expensive and troublesome. -

The_Trutherizer As far as I know AMD has had superior hardware level support for 3D displays for a long time. They are probably exposing that via this library with some extras to a certain degree. -

renz496 (quoting The_Trutherizer): As far as I know AMD has had superior hardware level support for 3D displays for a long time. They are probably exposing that via this library with some extras to a certain degree.

3D as in stereoscopic 3D? -

The_Trutherizer (quoting renz496): 3D as in stereoscopic 3D?

Stereoscopic support yes. I cannot remember exactly, but I think they have dual frame buffers at the hardware level or something like that. Not sure if it applies at all. But I thought it was nifty at the time I read it.

-

renz496 (quoting The_Trutherizer): Stereoscopic support yes. I cannot remember exactly, but I think they have dual frame buffers at the hardware level or something like that. Not sure if it applies at all. But I thought it was nifty at the time I read it.

I don't know, I think I read somewhere that AMD says 3D was a gimmick because its adoption was considered a failure.