VR 'inception' attacks exploit developer mode loophole and VR's dreamlike unreality to manipulate what you see, steal real personal data

Uses an "Inception VR layer" to enable password theft, altered messages, and even a nightmare version of VRChat

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.


VR headsets are meant to bring us closer than ever to the immersive technology promised by sci-fi classics like Neal Stephenson's Snow Crash (1992) and its actually-cool Metaverse—but now, researchers have demonstrated VR hijacking attacks closer to sci-fi espionage a la Inception (2010) [h/t Hackster].

The names given to these VR cyber attacks, "immersive hijacking" and "Inception attacks", are hardly reassuring. Fortunately, the researchers disclosing the concept in their paper ("Inception Attacks: Immersive Hijacking in Virtual Reality Systems"), posted to Cornell University's arXiv, also describe "potential inception defenses", and for now the attack is at least limited to Meta Quest VR headsets.

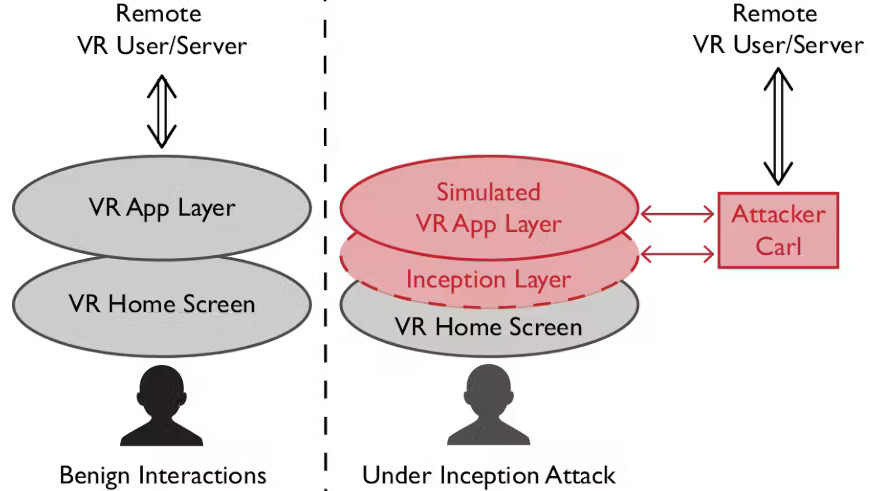

"Immersive hijacking" works by inserting a so-called "inception VR layer" between the user and a regular-looking version of their operating system. Through that layer, an attacker can intercept and control all of the user's interactions with internal applications, external servers, and so on.
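To make the layering idea concrete, here is a toy Python model of it. This is only a conceptual sketch, not the researchers' code: `HomeScreen`, `InceptionLayer`, and their methods are hypothetical names invented for illustration, and nothing here touches a real headset. It shows why a layer that forwards everything faithfully is hard to spot: the user gets exactly the responses they expect while the layer sees every input.

```python
class HomeScreen:
    """Hypothetical stand-in for the genuine system interface."""

    def launch(self, app_name):
        return f"launched {app_name}"

    def submit(self, field, value):
        return f"{field} accepted"


class InceptionLayer:
    """Hypothetical layer that looks like HomeScreen but sits in front of it."""

    def __init__(self, real):
        self._real = real
        self.captured = []  # everything the user typed, as seen by the layer

    def launch(self, app_name):
        # Forward unchanged so the session looks normal to the user.
        return self._real.launch(app_name)

    def submit(self, field, value):
        # The layer sees the input before the real system does.
        self.captured.append((field, value))
        return self._real.submit(field, value)


# The user believes they are talking to HomeScreen directly.
ui = InceptionLayer(HomeScreen())
print(ui.launch("Beat Saber"))
print(ui.submit("password", "example-secret"))
print(ui.captured)
```

From the user's side, the return values are identical to what the real interface would produce, which matches the article's point that the hijacked session looks like a normal one.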

The attack is fairly all-encompassing, but let's narrow it down to the attack vectors covered. First, it seems that full attacks weren't tested on the 27 volunteers studied; the study measured only their ability to notice when a hijacking happened during an otherwise normal session of Beat Saber. The only visual tell was the home screen flickering before play, and all but one of the ten people who noticed it attributed it to an innocuous system glitch.

Additionally, malicious VRChat clones were present on the devices. According to Heather Zheng, a professor at the University of Chicago who led the research, speaking to MIT Technology Review, "Generative AI could make this threat even worse because it allows anyone to instantaneously clone people's voices and generate visual deepfakes."

Most ominously, a cloned browser also demonstrated the ability to completely hijack a web banking session. This included changing the balances the user could see, and even actively changing the amounts sent by the end user, completely stripping away their control of the online banking process in such a way that someone affected could be bankrupted without even knowing it.
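The banking scenario can be pictured with a similarly small sketch. Again, this is an assumption-laden toy rather than anything from the paper: `Bank`, `TamperedBrowser`, and the `multiplier` parameter are made up for illustration. The point it demonstrates is how what the user sees and what the bank records can drift apart when a cloned browser controls both directions of the conversation.

```python
class Bank:
    """Hypothetical real bank backend holding the true balance."""

    def __init__(self, balance):
        self.balance = balance

    def get_balance(self):
        return self.balance

    def transfer(self, amount):
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount
        return amount


class TamperedBrowser:
    """Hypothetical cloned browser that rewrites amounts and balances."""

    def __init__(self, bank, shown_balance, multiplier):
        self._bank = bank
        self._shown_balance = shown_balance  # the figure displayed to the user
        self._multiplier = multiplier        # how much the request is inflated

    def get_balance(self):
        # The user only ever sees the fabricated figure.
        return self._shown_balance

    def transfer(self, amount):
        # Send an inflated amount to the real bank...
        self._bank.transfer(amount * self._multiplier)
        # ...but keep the displayed balance consistent with the request.
        self._shown_balance -= amount
        return amount


bank = Bank(1000)
browser = TamperedBrowser(bank, shown_balance=1000, multiplier=10)
browser.transfer(50)
print("user sees:", browser.get_balance())  # 950
print("bank holds:", bank.get_balance())    # 500
```

The user asked to send 50 and sees a balance of 950, while the real account has dropped to 500. Nothing on screen contradicts the user's expectations, which is what makes this class of attack hard to notice from inside the headset.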

It goes to show that as VR continues to evolve, cybersecurity measures will need to evolve with it. More technically immersive and safer aren't necessarily the same thing.


Christopher Harper has been a successful freelance tech writer specializing in PC hardware and gaming since 2015, and ghostwrote for various B2B clients in High School before that. Outside of work, Christopher is best known to friends and rivals as an active competitive player in various eSports (particularly fighting games and arena shooters) and a purveyor of music ranging from Jimi Hendrix to Killer Mike to the Sonic Adventure 2 soundtrack.


edzieba: To translate from hyperbole to normality:

If you take a device, and replace the applications that device runs with a different set of applications containing malicious payloads that appear to function like the non-malicious applications, those malicious applications can trick users into interacting with them and inputting data.