New Rowhammer attack silently corrupts AI models on GDDR6 Nvidia cards — 'GPUHammer' attack drops AI accuracy from 80% to 0.1% on RTX A6000

One bit flip is all it takes.

A group of researchers has discovered a new attack called GPUHammer that can flip bits in the memory of NVIDIA GPUs, quietly corrupting AI models and causing serious damage without ever touching the actual code or data input. Nvidia has already published guidance on how to mitigate the risk. Even so, if you’re using a card with GDDR6 memory, this is worth paying attention to.

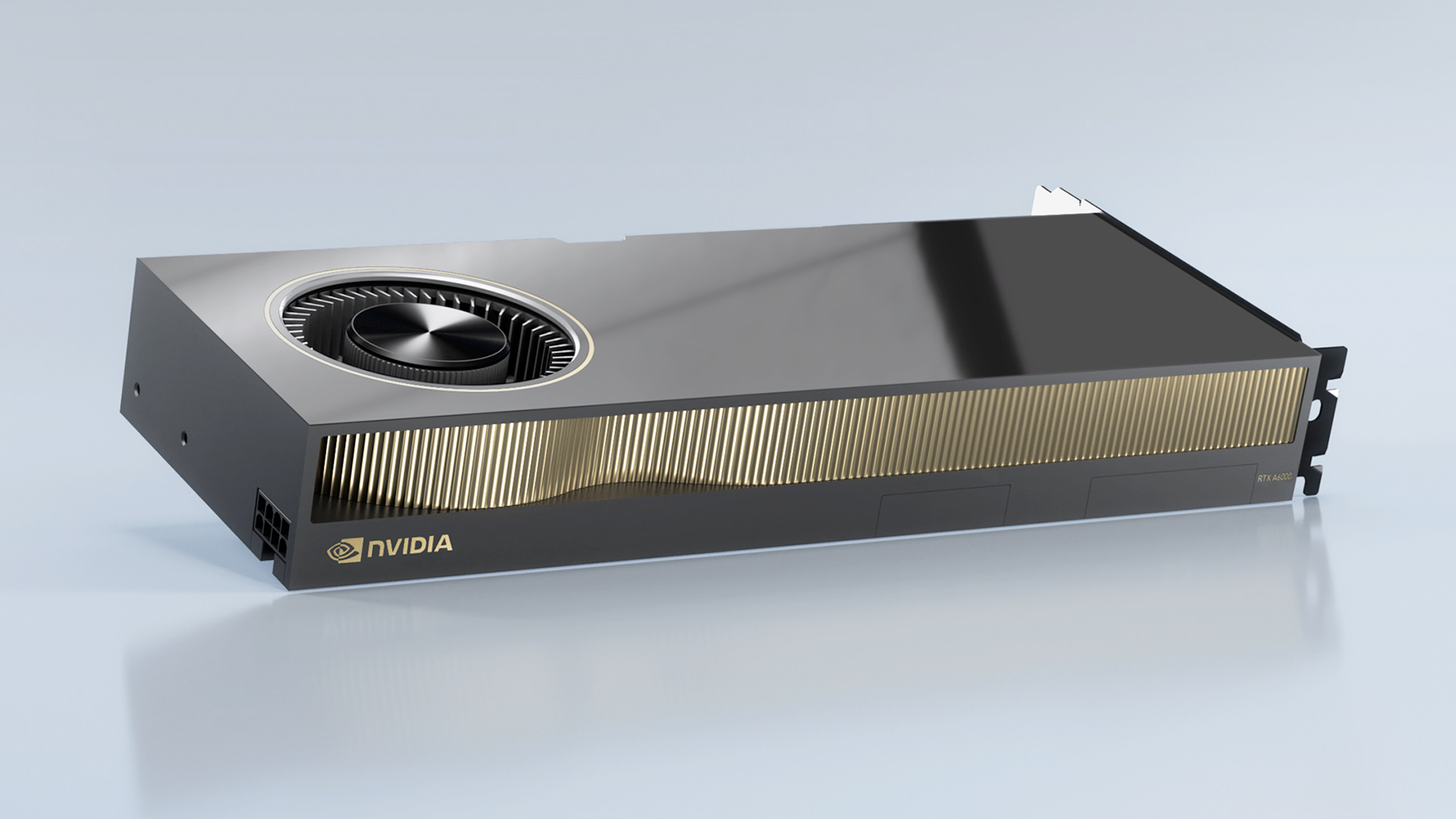

The team behind the discovery, from the University of Toronto, showed how the attack could drop an AI model’s accuracy from 80% to under 1%—just by flipping a single bit in memory. It’s not just theoretical either, as they ran it on a real NVIDIA RTX A6000, using a technique that repeatedly hammers memory cells until one nearby flips, messing with whatever’s stored there.

What exactly is GPUHammer?

GPUHammer is a GPU-focused version of a known hardware issue called Rowhammer. It’s been around for a while in the world of CPUs and RAM. Basically, modern memory chips are so tightly packed that repeatedly reading or writing one row can cause electrical interference that flips bits in nearby rows. That flipped bit could be anything—a number, a command, or part of a neural network’s weight—and that’s where things go wrong.
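To make the effect of a single flipped bit concrete, here is a minimal sketch in Python. It assumes the weight is stored as a 16-bit IEEE 754 half-precision float, which is a common format for model weights but is not something the article specifies. The helper name `flip_bit_fp16` and the example value 0.5 are purely illustrative.

```python
import struct


def flip_bit_fp16(value: float, bit: int) -> float:
    """Return `value`, stored as an IEEE 754 half-precision float, with one bit flipped.

    Bit 0 is the least significant mantissa bit, bits 10-14 are the exponent,
    and bit 15 is the sign.
    """
    if not 0 <= bit < 16:
        raise ValueError("a half-precision float has bits 0..15")
    # Reinterpret the 16-bit float as an unsigned integer, flip one bit, convert back.
    (raw,) = struct.unpack("<H", struct.pack("<e", value))
    (flipped,) = struct.unpack("<e", struct.pack("<H", raw ^ (1 << bit)))
    return flipped


weight = 0.5                               # hypothetical model weight
low_bit = flip_bit_fp16(weight, 0)         # lowest mantissa bit: 0.50048828125
high_bit = flip_bit_fp16(weight, 14)       # top exponent bit: 32768.0
print(weight, low_bit, high_bit)
```

Flipping the lowest mantissa bit barely changes the value, while flipping the top exponent bit turns 0.5 into 32768.0. A weight that large can dominate everything downstream of it, which is one plausible way a single flip could wreck a model's output, and also why most other flips would go almost unnoticed.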

Until now, this was mostly a concern for DDR4 system memory, but GPUHammer proves it can happen on GDDR6 VRAM too, which is what powers many modern NVIDIA cards, especially in AI and workstation workloads. This is a serious cause for concern, at least in specific situations. The researchers showed that even with some safeguards in place, they could cause multiple bit flips across several memory banks. In one case, this completely broke a trained AI model, making it essentially useless. The scary part is that it doesn’t require access to your data. The attacker just needs to share the same GPU in a cloud environment or server, and they could potentially interfere with your workload however they want.

As mentioned, the attack was tested on an RTX A6000, but the risk applies to a wide range of Ampere, Ada, Hopper, and Turing GPUs, especially those used in workstations and servers. NVIDIA has published a full list of affected models and recommends ECC for most of them. That said, newer GPUs like the RTX 5090 and H100 have built-in ECC directly on the chip, which handles this automatically—no user setup required.

However, if you're someone just sitting at home worried about their personal setup, this isn’t the kind of attack you’d see targeting individual gamers or home PCs. It’s more relevant to shared GPU environments like cloud gaming servers, AI training clusters, or VDI setups where multiple users run workloads on the same hardware. That being said, the core idea that memory on a GPU can be tampered with silently is something the entire industry needs to take seriously, especially as more games, apps, and services start leaning on AI.

Nvidia's Response

NVIDIA has responded with a simple but important recommendation: turn on ECC (Error Correction Code) if your GPU supports it. ECC adds redundancy to memory so that it can detect and fix errors like these bit flips. Enabling ECC does come with a trade-off: around 10% slower performance for machine learning tasks and about 6–6.5% less usable VRAM. For serious AI work, that cost is worth the peace of mind.
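As a rough illustration of how redundancy lets memory repair a flipped bit, the sketch below implements the textbook Hamming(7,4) code in Python. This is a toy: real memory ECC protects much wider words with stronger codes, and the article does not say which scheme NVIDIA's GPUs use. The function names are illustrative only.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] as the codeword [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # parity over codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # parity over codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # parity over codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct at most one flipped bit. Returns (data_bits, error_position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # 1-based index of the bad bit, 0 if none
    if position:
        c[position - 1] ^= 1          # flip the bad bit back
    return [c[2], c[4], c[5], c[6]], position


stored = hamming74_encode([1, 0, 1, 1])
stored[4] ^= 1                        # simulate a Rowhammer-style flip at position 5
recovered, position = hamming74_decode(stored)
print(recovered, position)            # [1, 0, 1, 1] 5
```

The three extra parity bits are the kind of redundancy that costs capacity: here 3 bits out of every 7 are overhead, whereas codes over wider words need proportionally far fewer check bits.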


You can enable it using Nvidia's command-line tool:

nvidia-smi -e 1

You can also check if ECC is active with:

nvidia-smi -q | grep ECC

Attacks like GPUHammer don’t just crash systems or cause glitches. They tamper with the integrity of AI itself, affecting how models behave or make decisions. And because it all happens at the hardware level, these changes are nearly invisible unless you know exactly what to look for. In regulated industries like healthcare, finance, or autonomous driving, that could cause serious problems: wrong decisions, security failures, even legal consequences.

Even though the average user isn’t directly at risk, GPUHammer is a wake-up call. As GPUs continue to evolve beyond gaming into AI, creative work, and productivity, so do the risks. Memory safety, even on a GPU, is no longer optional.
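For anyone managing several machines, the ECC check above can be scripted. The following Python sketch wraps `nvidia-smi` and its `--query-gpu` fields `ecc.mode.current` and `ecc.mode.pending`. The helper names, the sample output, and the exact value strings (`Enabled`, `Disabled`, `[N/A]`) are assumptions for illustration and may differ by driver version. Note that changing the ECC mode with `nvidia-smi -e 1` requires root and only takes effect after a reboot, which is why the pending mode is reported separately.

```python
import subprocess

# Query the current and pending ECC mode for every GPU as header-less CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,ecc.mode.current,ecc.mode.pending",
    "--format=csv,noheader",
]


def parse_ecc_report(text):
    """Parse the CSV lines produced by QUERY into a list of dicts."""
    rows = []
    for line in text.strip().splitlines():
        index, rest = line.split(",", 1)
        # Split from the right so a comma inside the GPU name cannot shift the fields.
        name, current, pending = (field.strip() for field in rest.rsplit(",", 2))
        rows.append(
            {"index": int(index), "name": name, "current": current, "pending": pending}
        )
    return rows


def gpus_without_ecc(rows):
    """Return the GPUs whose current ECC mode is anything other than 'Enabled'."""
    return [row for row in rows if row["current"] != "Enabled"]


def query_ecc():
    """Run nvidia-smi (needs an NVIDIA driver installed) and parse its output."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_ecc_report(result.stdout)


# Hypothetical sample output, used here so the sketch runs without a GPU.
SAMPLE = """0, NVIDIA RTX A6000, Disabled, Enabled
1, NVIDIA GeForce RTX 3080, [N/A], [N/A]"""

for gpu in gpus_without_ecc(parse_ecc_report(SAMPLE)):
    print(f"GPU {gpu['index']} ({gpu['name']}): ECC {gpu['current']}, pending {gpu['pending']}")
```

On a real system, call `query_ecc()` instead of parsing `SAMPLE`. In the sample, GPU 0 shows ECC requested but not yet active (a reboot is pending), and GPU 1 does not report ECC support at all.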


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


bit_user Reply

The article said: "the attack could drop an AI model’s accuracy from 80% to under 1%, just by flipping a single bit in memory."

This is somewhat alarmist. Very few bits, in an AI model, would have that sort of impact. In the vast majority of cases, you could flip a bit and the impact would barely be detectable.

The article said: "GPUHammer proves it can happen on GDDR6 VRAM too"

There was never a reason to suspect otherwise. GDDR works on the same principles as regular DDR memory. It's DRAM, in both cases.

The article said: "The attacker just needs to share the same GPU in a cloud environment or server, and they could potentially interfere with your workload however they want."

It's not accurate to say they "can interfere however they want". You can't control which bits flip. More importantly, in a virtualized environment, address layout is effectively randomized, if not also intentionally random. So, the best an attacker could do is just try to mess with whatever of your programs are using the GPU. They wouldn't have any way to control exactly how it affects you.

The article said: "NVIDIA has published a full list of affected models and recommends ECC for most of them."

Cloud GPUs should all support out-of-band ECC.

Most consumer models do not, but then client PCs aren't at much risk of such exploits (would effectively require running some malware on your PC). There's an outside chance that some WebGPU-enabled code runs in your browser and trashes your GPU state, using such an exploit.

The article said: "newer GPUs like the RTX 5090 and H100 have built-in ECC directly on the chip, which handles this automatically, no user setup required."

That's because they use GDDR7, which has on-die ECC like DDR5. It's not as good as out-of-band ECC, due to being much lower density, but should provide a little protection.

The article said: "NVIDIA has responded with a simple but important recommendation: turn on ECC (Error Correction Code) if your GPU supports it"

Cloud operators should already be doing this. I wouldn't trust my data to any who weren't enabling it since day 1. That's because DRAM errors happen in the course of normal operation, and become more frequent as DRAM ages. So, if you're using a GPU for anything where data-integrity is important, that's why they have an ECC capability, in the first place.

The same is true for server memory. I would be shocked and appalled, if any cloud operators were running without ECC enabled on their server DRAM. Failing to enable it on their GPUs is nearly as bad.

leoneo.x64 Reply

Admin said: "GPUHammer is a new Rowhammer-based attack targeting NVIDIA GPUs with GDDR6 memory. It flips bits in VRAM to silently corrupt AI models, dropping accuracy from 80% to under 1%. NVIDIA urges users to enable ECC, though it slightly reduces performance and available memory."

Yes. Now kill all AI models!

DS426 Reply

bit_user said: "Cloud operators should already be doing this. I wouldn't trust my data to any who weren't enabling it since day 1. That's because DRAM errors happen in the course of normal operation, and become more frequent as DRAM ages. So, if you're using a GPU for anything where data-integrity is important, that's why they have an ECC capability, in the first place.

"The same is true for server memory. I would be shocked and appalled, if any cloud operators were running without ECC enabled on their server DRAM. Failing to enable it on their GPUs is nearly as bad."

I strongly agree. Unfortunately, I can see where cloud gaming vendors might not have ECC enabled because of the performance losses. That said, that was before this notice; if these operators needed more concrete evidence on why ECC should be enabled, here it is.

I'd actually be more surprised if ECC isn't enabled by default on professional-class GPUs.

JRStern Reply

I should think that the bigger problem is that an AI system might accidentally row-hammer itself.

Amdlova Reply

JRStern said: "I should think that the bigger problem is that an AI system might accidentally row-hammer itself."

A few days ago I got this news...

‘Full Nazi’: Elon Musk's AI chatbot started calling itself 'MechaHitler'

After a recent update to the AI chatbot’s code, Grok started posting openly antisemitic responses to queries from X users on Elon Musk’s platform.

Think The AI has some row-hammer problems

bit_user Reply

JRStern said: "I should think that the bigger problem is that an AI system might accidentally row-hammer itself."

The cache hierarchy naturally tends to prevent accidental rowhammer. Frequent reads or writes to the same address would get handled by cache and not actually reach DRAM very often, between refreshes.

This is why the vulnerability went unnoticed until about a decade ago, when security researchers started to get really crafty. The CPU exploits either actively invalidate cache lines or do other things to evict them, so the memory accesses will go all the way out to DRAM.

Another reason why AI wouldn't accidentally trigger this problem is due to the way they use DRAM. Because it tends to be a major bottleneck in AI training & inference, model weights are streamed in and used as much as possible. If they're used again, it won't be right away. This is exactly the kind of data access pattern DRAM likes, and therefore the chances of unintentional rowhammer from AI are probably infinitesimal.

bit_user Reply

Amdlova said: "Think The AI has some row-hammer problems"

No, that's a lot more to do with how certain AIs are trained. It's a classic GIGO problem - Garbage In: Garbage Out.

If you've seen some of the trash on Twitter/X, it's no surprise that a chatbot trained on Tweets is going to be very toxic.

JRStern Reply

Amdlova said: "Think The AI has some row-hammer problems"

Maybe that explains Elon's behaviors, too.