Intel's Xe3 graphics architecture breaks cover — Panther Lake's 12 Xe Core iGPU promises 50+% better performance than Lunar Lake

Just don't call it Celestial

As part of its recent Tech Tour US event, Intel took the wraps off its trio of upcoming Panther Lake SoCs. It also walked us through the improvements and expected performance of its new Xe3 graphics architecture, which is coming to market in the form of two integrated GPU tiles for Panther Lake. For more details on the three primary configurations of Panther Lake SoCs, as well as a more general overview of Panther Lake's CPU resources, check out our dedicated article.

First things first: Intel emphasized that Xe3 is not based on the Celestial architecture, even though its name conveniently maps to that codename's place in Intel's past roadmaps. Let us repeat: this is not Celestial. Intel classifies Xe3 GPUs as part of the Battlemage family because the capabilities the chip presents to software are similar to those of existing Xe2 products. Therefore, it will include Panther Lake iGPUs under the Arc B-series umbrella. The company admits this naming scheme isn't ideal, but it appears to be the least worst option for the time being.

Indeed, once you start digging into the changes Intel highlights for Xe3, you'll see that it's more of a continuous improvement of the existing Battlemage architectural lineage than an all-new design. The next "clean" generational leap will come with Xe3P Arc GPUs, but it's unclear when those parts will arrive. Intel was understandably mum about Xe3P products during the Panther Lake event.

The building blocks

The basic Xe3 Xe Core (henceforth Xe3 Core) keeps the same layout as Xe2's: eight Xe Vector Engines for floating-point and integer math, eight XMX engines to accelerate the matrix math used in AI applications, and one ray-tracing unit. Intel says the changes within Xe3 are meant to improve two pain points: better utilization of available resources (an ongoing project for Arc GPUs) and making the architecture more scalable, which is important for building larger, higher-performance products.

The prior Xe2 render slice included up to four Xe Cores, from which graphics processors as small as Lunar Lake's iGPU (two render slices) and as large as the Arc B580 (five render slices) were all constructed.

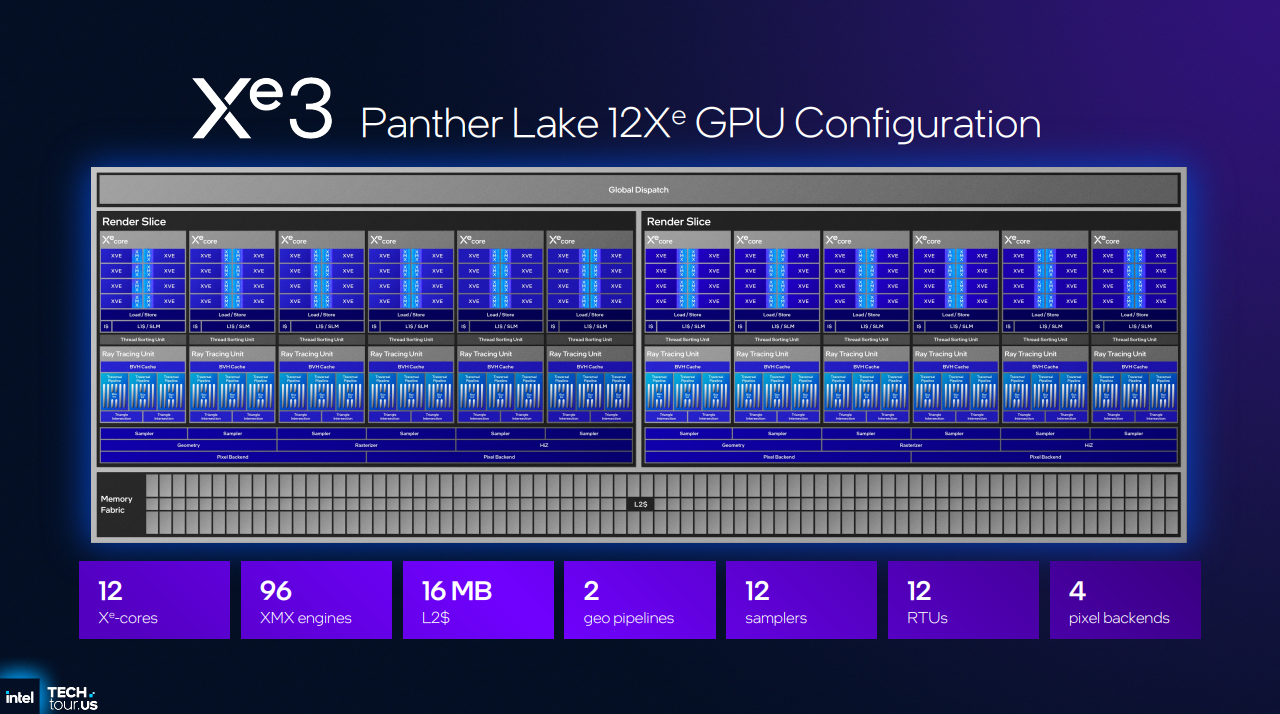

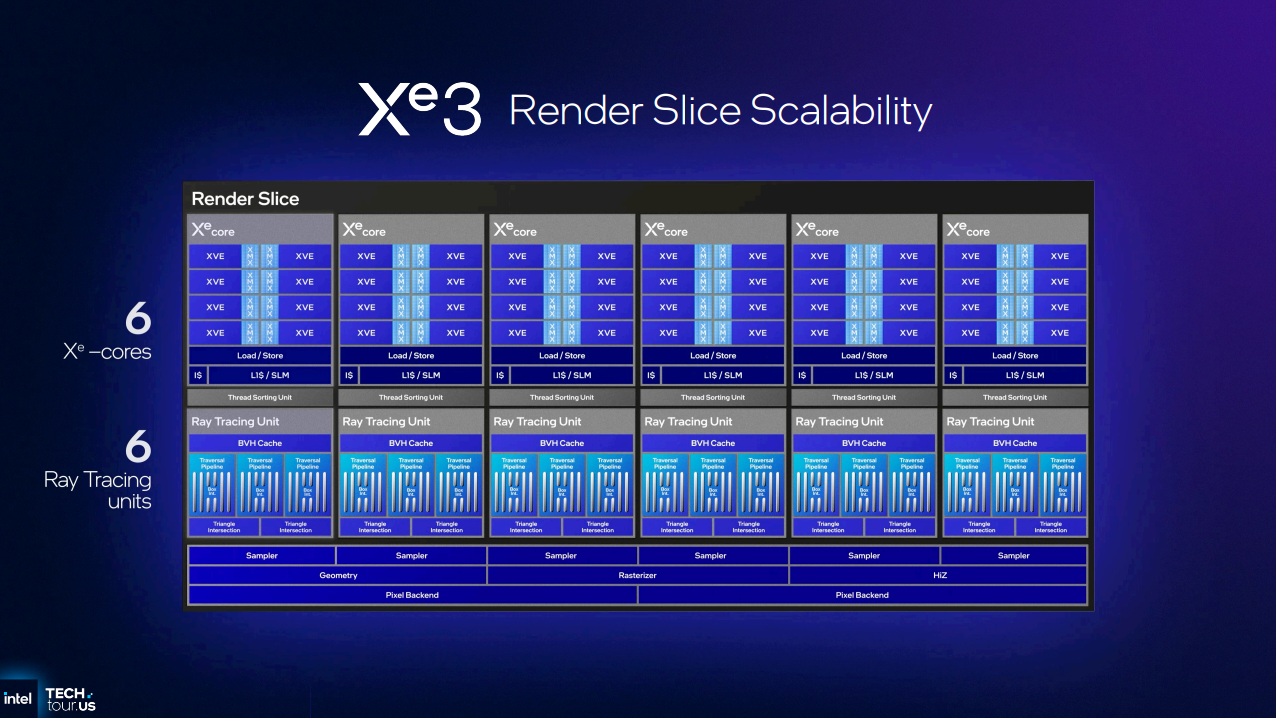

The Xe3 render slice, on the other hand, starts with six Xe Cores, and it's been used to make two iGPUs so far.

The headliner is the two-render-slice, 12 Xe3 Core part that will power gaming, content creation, and AI workloads in the highest-performance variant of the Panther Lake SoC.


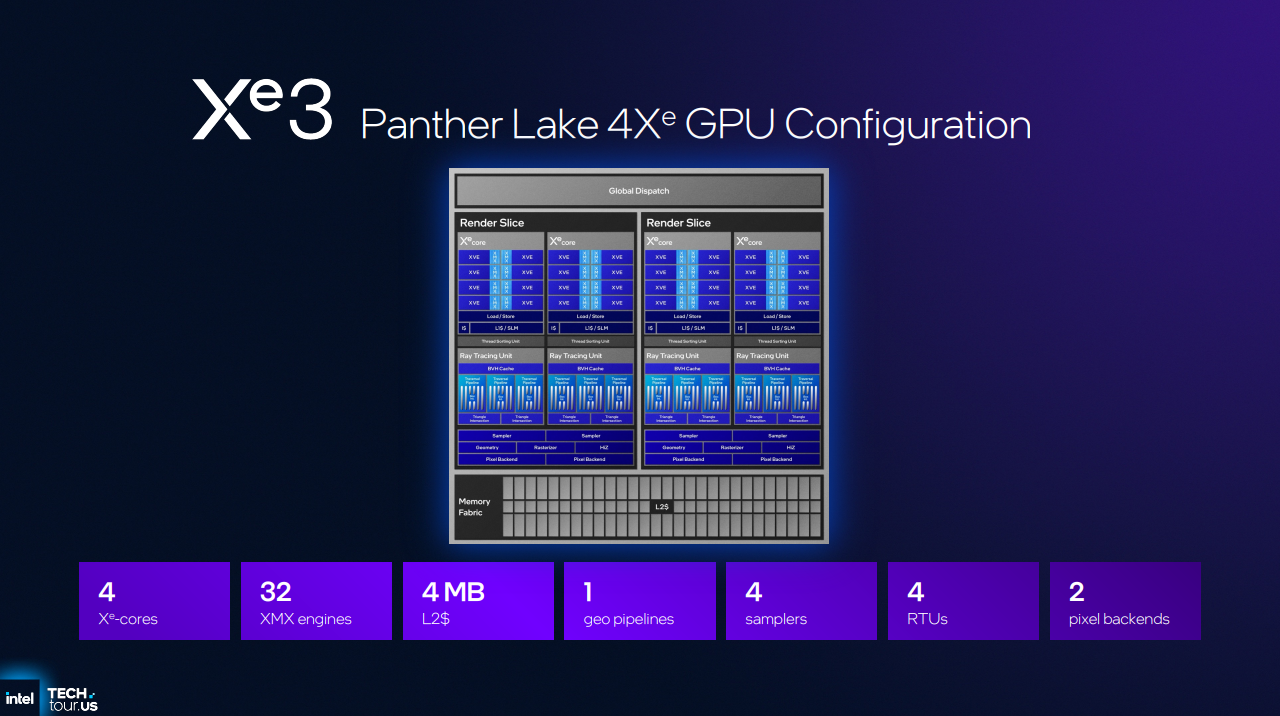

The other is a four-Xe3-Core part that provides graphics functionality on lower-end Panther Lake products.

Observant readers may be wondering why, given that an Xe3 render slice starts with six Xe Cores, the smaller Xe3 GPU on Panther Lake only includes four Xe Cores. Intel has always maintained a level of fine-grained control over the size of its graphics engines (stretching well into the pre-Arc days) to meet various product demands, so even if Xe3 starts with six Xe Cores per render slice, it's no surprise that it can be scaled down.
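Because every Xe3 Core carries the same complement of units, the execution-resource totals for both Panther Lake iGPUs follow from simple multiplication. The sketch below is illustrative arithmetic, not an Intel spec sheet. Only the per-core counts (eight Xe Vector Engines, eight XMX engines, one ray-tracing unit) come from the article; the class and field names are hypothetical.

```python
from dataclasses import dataclass

# Per-core resources as described for the Xe3 Core (same layout as Xe2).
XVE_PER_CORE = 8  # Xe Vector Engines
XMX_PER_CORE = 8  # XMX matrix engines
RT_PER_CORE = 1   # ray-tracing units


@dataclass
class XeGpuConfig:
    """Hypothetical helper: totals for an iGPU built from identical Xe3 Cores."""
    name: str
    xe_cores: int

    def totals(self) -> dict:
        return {
            "XVE": self.xe_cores * XVE_PER_CORE,
            "XMX": self.xe_cores * XMX_PER_CORE,
            "RT": self.xe_cores * RT_PER_CORE,
        }


for gpu in (XeGpuConfig("12 Xe3 Core iGPU", 12), XeGpuConfig("4 Xe3 Core iGPU", 4)):
    print(gpu.name, gpu.totals())
```

By this count, the larger part has 96 Xe Vector Engines, 96 XMX engines, and 12 ray-tracing units, three times the resources of the four-core part.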

Improvements large and small

Intel says each Xe3 Xe Core can keep up to 25% more threads in flight - from eight to 10 - versus its predecessor, and the core can variably allocate partitions of each Xe Vector Engine's register file per thread in order to achieve better utilization.

Variable register allocation is truly new to Xe3. Previous Arc GPUs used a coarser per-thread register allocation strategy that made it hard to fully utilize the core's available resources. Intel calls this a key improvement in the Xe3 architecture and says it has "dramatic effects on performance."
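To see why finer-grained allocation matters, consider a toy model of one vector engine's register file. Every number below is a hypothetical value chosen for illustration: the register-file size, the two coarse allocation modes, and the 32-register granule. Intel hasn't disclosed these details in the material covered here, and real hardware is certainly more nuanced. The 10-thread cap borrows the article's figure purely for illustration.

```python
import math

REGISTER_FILE = 1280       # registers per vector engine (hypothetical)
MAX_THREADS = 10           # thread slots (borrows the article's figure, illustrative only)
COARSE_MODES = (128, 256)  # hypothetical fixed per-thread allocation sizes
GRANULE = 32               # hypothetical fine-grained allocation step


def occupancy_coarse(regs_needed: int) -> int:
    """Threads that fit when each thread gets the smallest fixed mode covering its need."""
    for mode in COARSE_MODES:
        if regs_needed <= mode:
            return min(MAX_THREADS, REGISTER_FILE // mode)
    raise ValueError("shader needs more registers than the largest mode")


def occupancy_variable(regs_needed: int) -> int:
    """Threads that fit when each allocation is rounded up only to a multiple of GRANULE."""
    rounded = math.ceil(regs_needed / GRANULE) * GRANULE
    return min(MAX_THREADS, REGISTER_FILE // rounded)


for need in (96, 140, 200):
    print(need, occupancy_coarse(need), occupancy_variable(need))
```

With a 140-register shader, the coarse scheme has to hand each thread a 256-register slice and fits five threads, while rounding to 32-register steps fits eight. More threads in flight gives the scheduler more work to switch to while other threads wait on memory.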

One of Xe3's other big structural changes, at least for an Arc iGPU, is an increase in shared local memory per Xe Core. Xe3 now includes 256KB of shared local memory, up from 192KB in Xe2 on Lunar Lake and the improved Xe-LPG architecture on Meteor Lake. Intel says that workloads spilling out of this shared local memory is a major reason for performance pitfalls on older Arc iGPUs, so adding more of it is a sensible and relatively simple architectural refinement with a major performance payoff.

It's worth noting that this change simply brings Xe3 iGPUs in line with the basic resources offered by desktop Xe2 products. The Arc B580 and B570 already had 256KB of shared local memory per Xe Core, so don't expect performance miracles from this increase. That Intel is carrying the larger allocation over to its iGPUs suggests the Battlemage discrete GPUs got the size of this structure right, and that it's worth keeping throughout the Arc B-series graphics stack.
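A simplified way to picture the shared-local-memory change: if each workgroup reserves a fixed amount of shared local memory, capacity caps how many workgroups can be resident on an Xe Core at once, and a footprint larger than the capacity can't be satisfied at all. The sketch below assumes that simple model and uses hypothetical per-workgroup footprints; only the 192KB and 256KB capacities come from the article.

```python
SLM_XE2_LUNAR_LAKE_KB = 192  # per Xe Core, from the article
SLM_XE3_KB = 256             # per Xe Core, from the article


def resident_workgroups(capacity_kb: int, per_group_kb: int) -> int:
    """Workgroups whose SLM reservations fit at once (0 means none fit in this model)."""
    return capacity_kb // per_group_kb


for footprint in (48, 100, 224):  # hypothetical per-workgroup SLM footprints in KB
    print(
        f"{footprint}KB per workgroup: "
        f"Xe2 fits {resident_workgroups(SLM_XE2_LUNAR_LAKE_KB, footprint)}, "
        f"Xe3 fits {resident_workgroups(SLM_XE3_KB, footprint)}"
    )
```

In this model, a 224KB footprint doesn't fit in 192KB at all but fits once in 256KB, which is the kind of cliff the extra capacity is meant to avoid.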

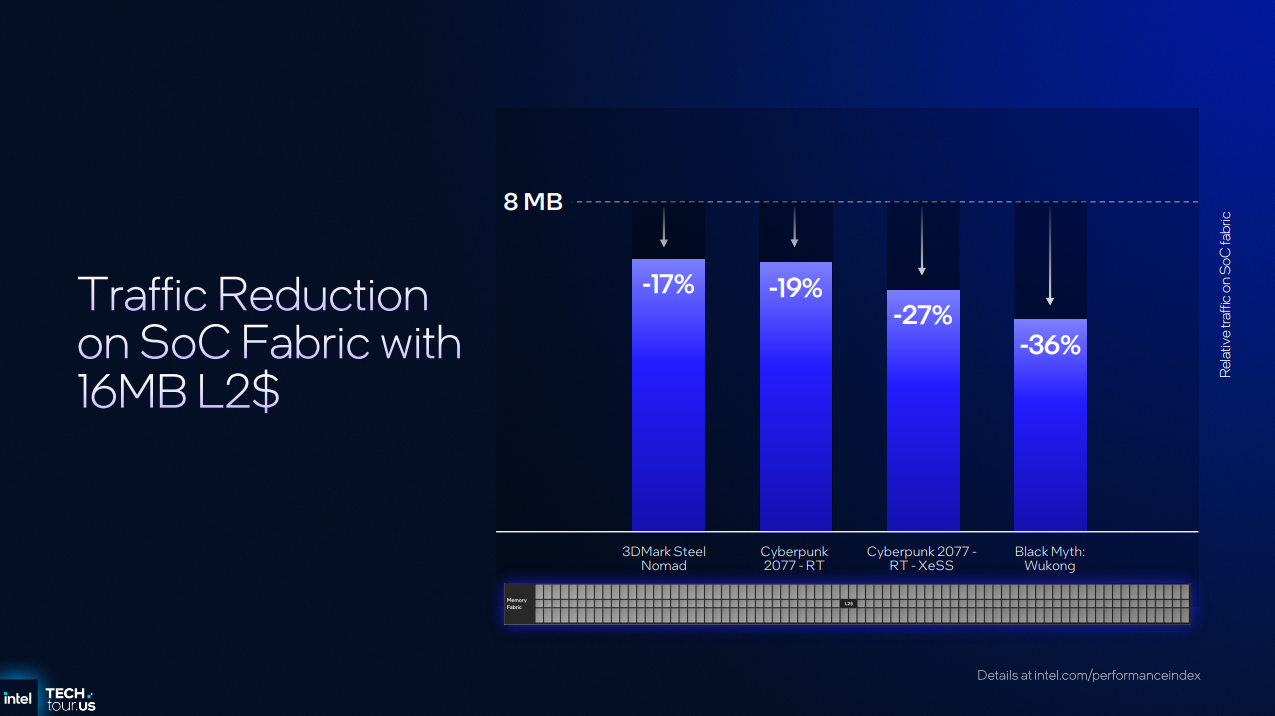

More big changes occur further out in the cache hierarchy. In its largest 12-Xe-Core configuration, Panther Lake's Arc GPU now sports 16MB of shared L2 cache, double that of Lunar Lake's eight-Xe-Core graphics engine. This is an absolutely massive L2 for a GPU of this size; the Arc B580, by comparison, only sports 18MB (or 12.5% more) L2 for a chip with 67% more Xe Cores to feed.
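The cache comparison is easier to see normalized per Xe Core. All inputs below are derived from the article. Lunar Lake has 8MB of L2 for eight Xe Cores (half of Panther Lake's 16MB), and the 12-Xe-Core Panther Lake GPU has 16MB. The Arc B580 has 18MB, and its 67% core advantage implies 20 Xe Cores.

```python
def l2_per_core(l2_mb: float, xe_cores: int) -> float:
    """L2 capacity available per Xe Core, in MB."""
    return l2_mb / xe_cores


gpus = {
    "Lunar Lake (8 Xe2 Cores)": (8, 8),
    "Panther Lake (12 Xe3 Cores)": (16, 12),
    "Arc B580 (20 Xe2 Cores)": (18, 20),
}
for name, (l2_mb, cores) in gpus.items():
    print(f"{name}: {l2_per_core(l2_mb, cores):.2f} MB per Xe Core")

# Cross-check the article's relative figures.
print(f"B580 L2 vs Panther Lake: +{18 / 16 - 1:.1%}")
print(f"B580 Xe Cores vs Panther Lake: +{20 / 12 - 1:.0%}")
```

By this measure, the 12-Xe-Core Panther Lake GPU has about a third more L2 per core than Lunar Lake (1.33MB vs 1.00MB) and nearly half again as much as the B580 (0.90MB).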

Intel says a larger L2 cache reduces traffic on Panther Lake's on-package fabric that connects the graphics processor to main memory - an important consideration for an integrated graphics processor that might be contending with a CPU and NPU for access to RAM. Intel showed a chart claiming anywhere from a 17% to 36% reduction in fabric traffic compared to a product with an 8MB L2 as a baseline.
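Why does a bigger L2 cut fabric traffic? Every L2 miss turns into a request to memory over the fabric. The toy least-recently-used (LRU) simulation below makes that concrete with a synthetic trace that loops over 12 cache-sized blocks. The trace, the abstract block units, and the LRU policy are all assumptions for illustration, not a model of Panther Lake's actual cache. Real workloads are far less tidy, so don't expect a cliff this dramatic in practice.

```python
from collections import OrderedDict


def lru_misses(trace, capacity: int) -> int:
    """Count misses (fabric requests, in this toy) for an LRU cache of `capacity` blocks."""
    cache = OrderedDict()
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)       # hit: mark as most recently used
        else:
            misses += 1                    # miss: fetch from memory over the fabric
            cache[block] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return misses


trace = list(range(12)) * 10  # ten passes over a 12-block working set
print(lru_misses(trace, 8), lru_misses(trace, 16))
```

When the working set doesn't fit, a looping access pattern misses on every access under LRU (120 misses). Once it fits, only the first touch of each block misses (12).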

Xe3 also features a number of smaller, but still important, refinements. Xe2's ray tracing engine allows for asynchronous evaluation of ray-triangle intersection, but the results of those tests have to be resolved in order. That responsibility falls to a thread sorting unit that could previously cause backups in the ray-tracing pipeline. Intel says the improved ray tracing engine in Xe3 can dynamically slow down dispatch of new rays while the sorting unit catches up to work in flight.
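The article doesn't say how Xe3 decides when to slow ray dispatch, so the following is only a generic backpressure sketch. A dispatcher issues rays each cycle, an in-order sorting stage retires a fixed number of results per cycle, and dispatch pauses whenever the backlog reaches a high-water mark. All rates and the threshold are hypothetical.

```python
def simulate(cycles: int, issue_rate: int, retire_rate: int, high_water: float):
    """Return (issued, retired, peak_backlog) for a toy throttled ray dispatcher."""
    backlog = 0  # intersection results waiting for the in-order sorting stage
    issued = retired = peak = 0
    for _ in range(cycles):
        if backlog < high_water:  # throttle: only dispatch below the threshold
            backlog += issue_rate
            issued += issue_rate
        done = min(retire_rate, backlog)  # sorting stage drains results in order
        backlog -= done
        retired += done
        peak = max(peak, backlog)
    return issued, retired, peak


unthrottled = simulate(100, issue_rate=4, retire_rate=3, high_water=float("inf"))
throttled = simulate(100, issue_rate=4, retire_rate=3, high_water=16)
print("unthrottled:", unthrottled)
print("throttled:  ", throttled)
```

Both runs retire results at the same rate, because the sorting stage is the bottleneck either way. Throttling simply keeps the backlog from growing without bound (peaking at 16 instead of 100 here).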

Intel also increased the performance of a cache called the Unified Return Buffer, or URB, which is a means of passing data between functional units on the GPU. The company equipped the Xe3 URB with a new management agent that can make partial updates to this buffer without requiring a full flush for each context switch, lowering the cost of cross-functional-unit communication.
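Intel hasn't detailed how the new URB management agent works. However, the general idea of avoiding a full flush resembles ordinary dirty tracking: remember which entries changed and write back only those at a context switch. The class below is a hypothetical sketch of that general technique, not Intel's mechanism, with cost counted as entries written back.

```python
class ToyReturnBuffer:
    """Hypothetical buffer that tracks which entries changed since the last switch."""

    def __init__(self, size: int):
        self.entries = [0] * size
        self.dirty = set()

    def write(self, index: int, value: int) -> None:
        self.entries[index] = value
        self.dirty.add(index)

    def switch_with_full_flush(self) -> int:
        """Write back every entry, changed or not; returns entries written."""
        cost = len(self.entries)
        self.dirty.clear()
        return cost

    def switch_with_partial_update(self) -> int:
        """Write back only the entries that changed; returns entries written."""
        cost = len(self.dirty)
        self.dirty.clear()
        return cost


buf = ToyReturnBuffer(64)
for i in range(5):
    buf.write(i, i * 10)
print("partial update cost:", buf.switch_with_partial_update())
for i in range(3):
    buf.write(i, i)
print("full flush cost:", buf.switch_with_full_flush())
```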

Xe3 also includes improvements to fixed-function hardware in order to improve performance in some common graphics tasks. The company says to expect up to 2x the anisotropic filtering rate, and up to a 2x improvement in stencil test rate.

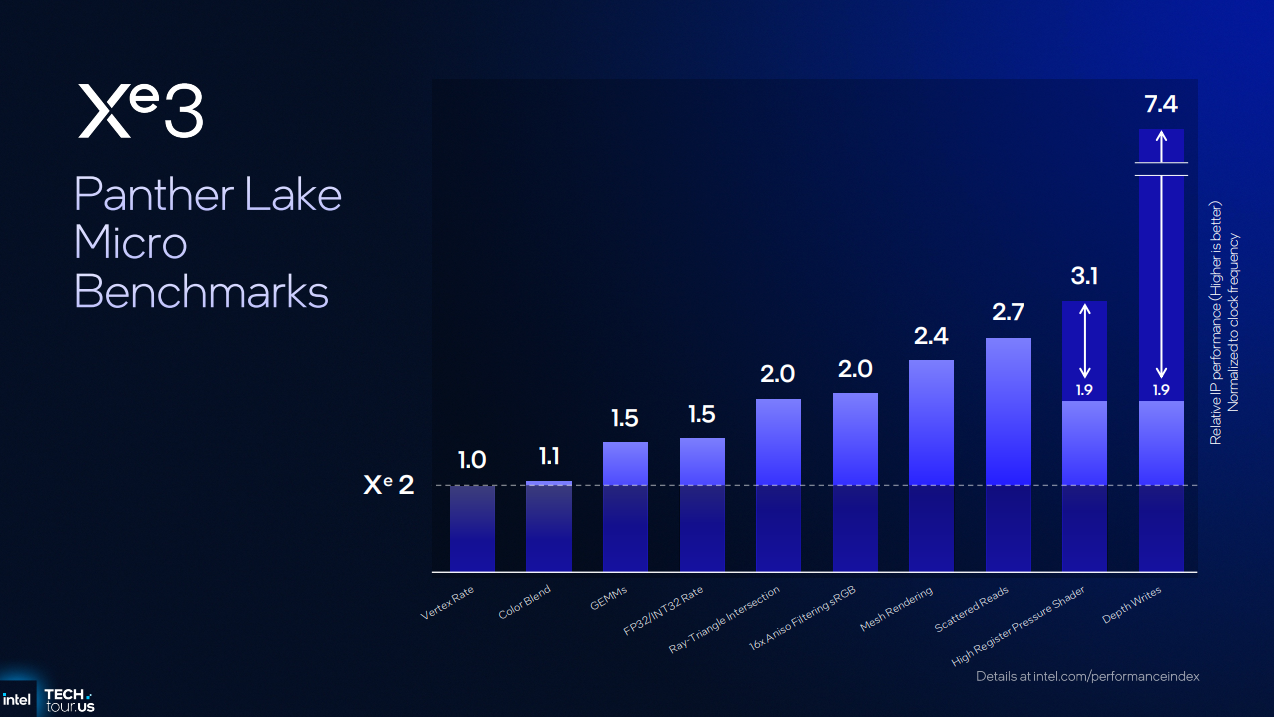

In a range of proprietary microarchitectural benchmarks against the Xe2 GPU on Lunar Lake, the 12-Xe3-Core GPU's performance in some operations hasn't changed between Xe2 and Xe3, simply because the available resources per render slice haven't grown. Other operations, Intel shows, have scaled linearly with the 50% increase in Xe Cores from Lunar Lake to Panther Lake's 12-Xe-Core configuration.

Operations like ray-triangle intersection tests, anisotropic filtering, mesh rendering, and scattered reads from memory start to demonstrate microarchitectural as well as scaling improvements in Xe3; they all enjoy 2x or better speedups relative to Xe2.
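One rough way to separate the two effects is to divide a measured speedup by the core-count scaling. Going from Lunar Lake's eight Xe Cores to 12 is a 1.5x increase, so a 1.5x result is what pure scaling would predict, while a 2x result implies roughly a 1.33x gain per core. This assumes perfectly linear scaling with core count and lumps any clock-speed difference into the per-core figure, since Intel hasn't disclosed Xe3 clocks.

```python
CORE_SCALING = 12 / 8  # Lunar Lake (8 Xe Cores) -> Panther Lake (12 Xe Cores)


def per_core_gain(total_speedup: float, core_scaling: float = CORE_SCALING) -> float:
    """Speedup left over after assuming perfectly linear scaling with core count."""
    return total_speedup / core_scaling


for speedup in (1.5, 2.0, 3.1):
    print(f"{speedup}x overall -> {per_core_gain(speedup):.2f}x per Xe Core")
```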

For shaders that place heavy pressure on registers, Xe3's variable register allocation delivers anywhere from 1.9x to 3.1x speedups in Intel's internal microbenchmarks. Depth testing, a fundamental part of the modern render pipeline, enjoys anywhere from a 1.9x to an incredible 7.4x speedup.

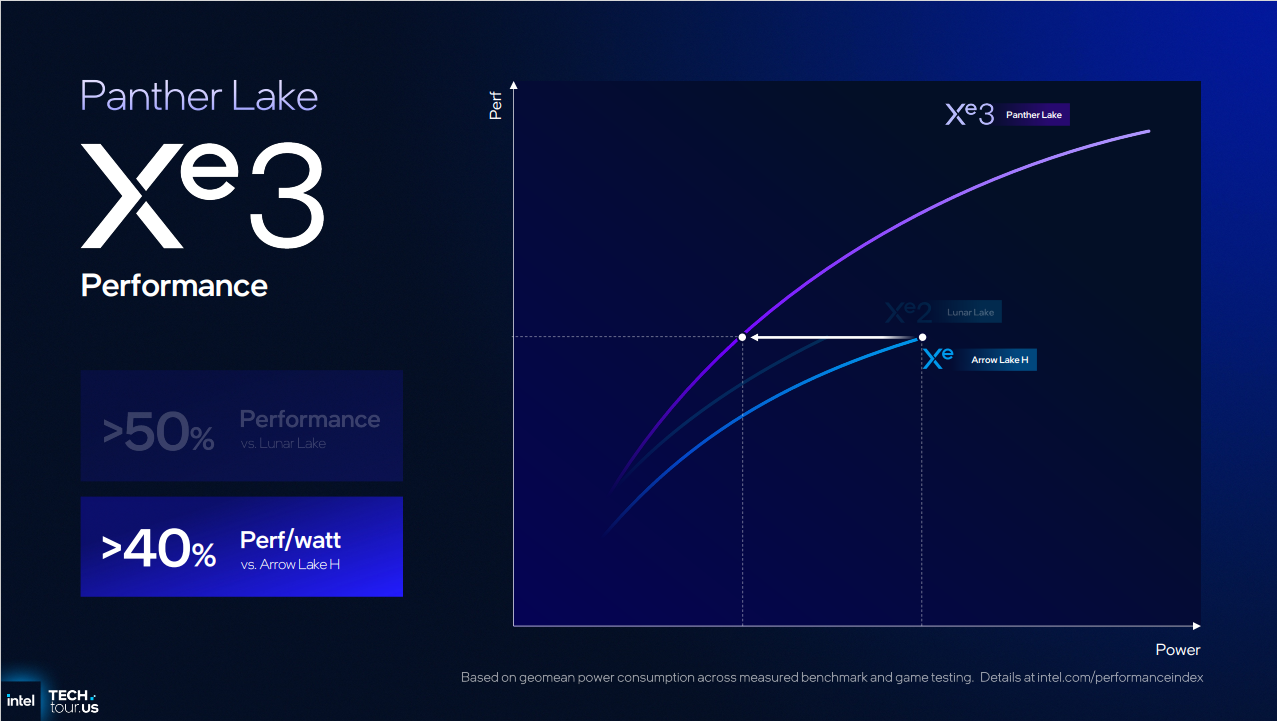

Intel isn't talking about detailed specs like clock speeds for Xe3 GPUs just yet, but the company did provide a set of ballpark power-versus-performance plots that are typical of early looks at silicon.

The first claim shows that the 12-Xe-Core GPU can scale across a much broader power range than Lunar Lake's iGPU. Given substantially more power than Lunar Lake, the 12-Xe-Core Arc GPU can deliver 50% or more additional performance. If we eyeball the same power level as Lunar Lake in this chart, performance gains are much more modest, but still present.

When discussing performance-per-watt improvements, Intel instead points to the Arrow Lake-H iGPU, against which the company claims a greater than 40% improvement in efficiency for the same performance. We would certainly hope that Panther Lake provides a performance-per-watt improvement in this match-up, as the Arrow Lake-H iGPU is based on the aging Xe-LPG architecture that first made its debut in the almost two-year-old Meteor Lake and has its roots in the three-year-old Alchemist architecture.

If you directly compare Panther Lake to Lunar Lake for efficiency, Xe3 still delivers a performance-per-watt improvement, but it's smaller (probably under 20%, if we had to eyeball it). Gripes about relevant comparisons aside, the takeaway is that the Panther Lake iGPU (at least in its 12-Xe-Core form) delivers both performance-per-watt improvements and a broader range of performance scaling than the company's past integrated GPU efforts.
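Performance-per-watt gains measured at matched performance translate directly into power savings. A gain of g means the new chip needs 1 / (1 + g) of the baseline's power to deliver the same result. Plugging in Intel's greater-than-40% figure versus Arrow Lake-H and our eyeballed sub-20% figure versus Lunar Lake gives the rough bounds below. Both inputs are approximate, and the Lunar Lake comparison assumes the eyeballed gain applies at matched performance.

```python
def power_fraction_at_equal_perf(perf_per_watt_gain: float) -> float:
    """Fraction of the baseline's power needed to match its performance."""
    return 1 / (1 + perf_per_watt_gain)


print(f"vs Arrow Lake-H (+40%): {power_fraction_at_equal_perf(0.40):.0%} of the power")
print(f"vs Lunar Lake (+20%):   {power_fraction_at_equal_perf(0.20):.0%} of the power")
```

Because the claimed gain versus Arrow Lake-H exceeds 40%, power at matched performance would be below about 71% of Arrow Lake-H's. A gain under 20% versus Lunar Lake means more than about 83% of Lunar Lake's power.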

What is impressive, however, is that these improvements have all come by way of architectural refinements. Intel says it hasn't changed the process technology it uses to fabricate the GPU tile (presumably relative to Arrow Lake), so these gains in both efficiency and performance come exclusively from the architectural improvements discussed above.

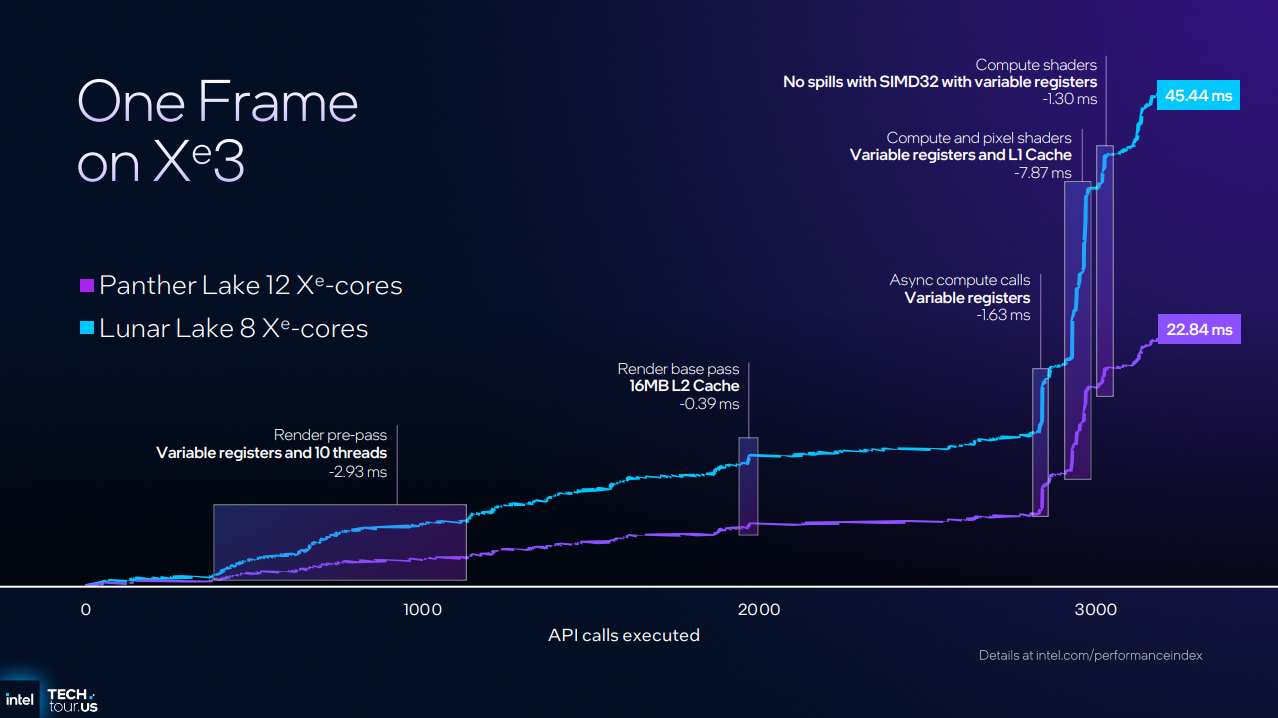

Another way Intel talks about performance with Xe3 is by walking through the time it takes to render a hypothetical frame on both Lunar Lake and Panther Lake. As any graphics performance enthusiast knows, lower average frame times mean higher frame rates. One frame isn't terribly useful as a benchmark, but Intel's breakdown of where that frame's time goes shows several key improvements related to the microarchitectural refinements in Xe3. Overall, this same frame renders in 22.84 ms on the 12-Xe3-Core GPU, or 50% less time.
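Frame time and frame rate are reciprocals (fps = 1000 / frame time in ms), so percentage changes don't carry over one-for-one. Cutting frame time in half doubles the frame rate, while a 50% frame-rate gain corresponds to a one-third cut in frame time. The helpers below are a small sketch of those conversions, applied to the 22.84 ms figure from Intel's example.

```python
def fps_from_frame_time(ms: float) -> float:
    """Frames per second for a given frame time in milliseconds."""
    return 1000.0 / ms


def rate_gain_from_time_cut(time_cut: float) -> float:
    """Relative frame-rate increase when frame time shrinks by the given fraction."""
    return 1 / (1 - time_cut) - 1


def time_cut_from_rate_gain(rate_gain: float) -> float:
    """Fractional frame-time reduction implied by a relative frame-rate increase."""
    return 1 - 1 / (1 + rate_gain)


print(f"22.84 ms -> {fps_from_frame_time(22.84):.1f} fps")
print(f"50% less time -> {rate_gain_from_time_cut(0.50):.0%} higher frame rate")
print(f"50% higher frame rate -> {time_cut_from_rate_gain(0.50):.1%} less time")
```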

Going hands-on with Xe Multi Frame Generation

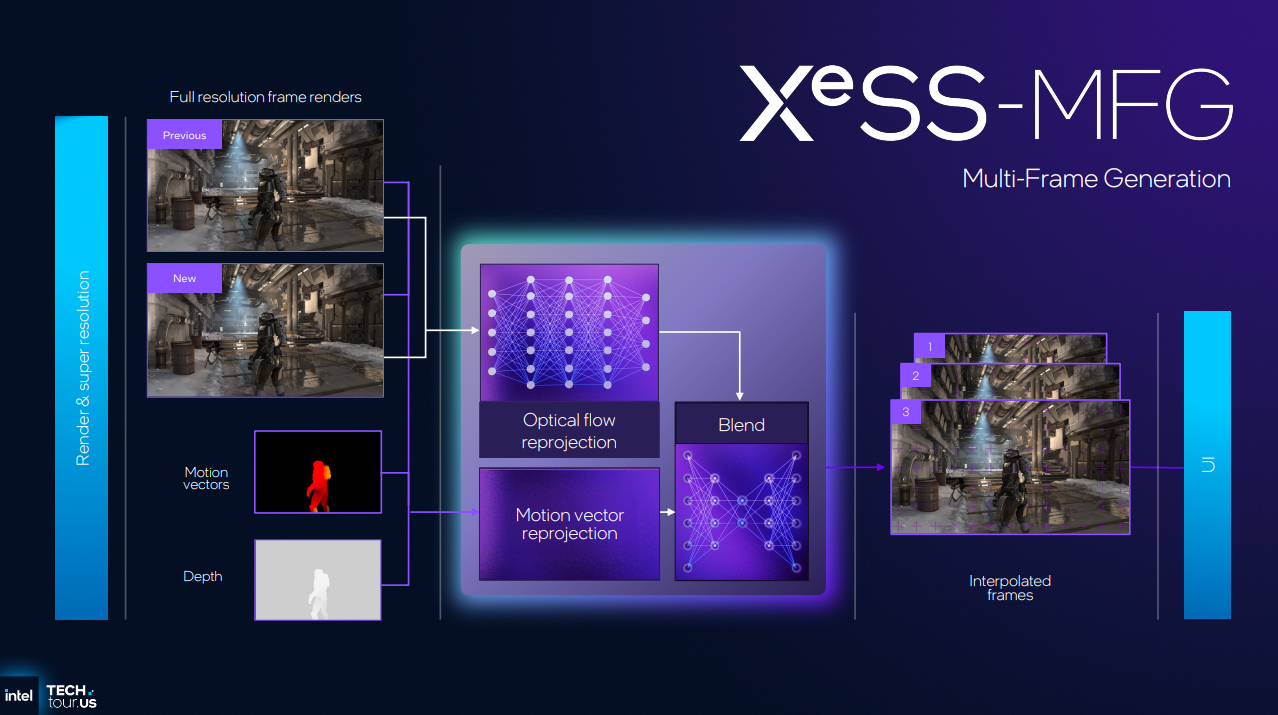

Of course, GPUs these days aren't just a chip - they're part of an entire hardware-software stack. Intel is continuing to invest in its XeSS suite of technologies to keep pace with Nvidia and AMD. On top of upscaling and 2x framegen support in XeSS 2, an upcoming XeSS release will add AI-accelerated multi-frame generation to Intel’s arsenal.

Like Nvidia’s DLSS Multi-Frame Generation, XeSS MFG will offer 2x, 3x, and 4x modes (one, two, or three generated frames). It also won’t require game developers to update their XeSS 2 titles to explicitly support the feature.
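In a given MFG mode, each rendered frame is followed by (mode - 1) generated frames, so the displayed frame rate is the rendered rate multiplied by the mode. The sketch below assumes frame generation itself is free. In practice, generating frames costs GPU time, so the rendered rate typically drops somewhat when frame generation is enabled. The function name is hypothetical.

```python
def mfg_output(rendered_fps: float, mode: int) -> tuple:
    """Return (generated frames per rendered frame, displayed fps, ms between displayed frames)."""
    if mode not in (2, 3, 4):
        raise ValueError("XeSS MFG modes are 2x, 3x, or 4x")
    generated_per_rendered = mode - 1
    displayed_fps = rendered_fps * mode  # assumes generation adds no overhead
    return generated_per_rendered, displayed_fps, 1000.0 / displayed_fps


for mode in (2, 3, 4):
    gen, shown, interval = mfg_output(30, mode)
    print(f"{mode}x: {gen} generated per rendered frame, {shown:.0f} fps shown, {interval:.1f} ms apart")
```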

Any title on the (small) list that already supports Xe Frame Generation will work with XeSS MFG through an override in the Intel Graphics Software control panel.

We had an opportunity to go hands-on with XeSS Multi Frame Generation on a Panther Lake engineering system at the Tech Tour event. Based on my limited time with the demo system, the image quality of XeMFG is impressive. I didn't see any distracting artifacts that would have given the framegen-boosted frame rate away.

However, input lag was just past the threshold of tolerability for a great experience in a fast-paced shooter like the upcoming Painkiller, although I'm sure I could have tuned upscaling and quality settings to arrive at a more responsive experience.

I was somewhat frustrated by Intel’s use of baseline frame rates as a proxy for acceptable input lag with XeMFG. We’ve already performed some high-level testing that demonstrates how the two measurements are not necessarily correlated, and Intel’s representatives threw around what seemed to me to be unreasonably low baseline frame rates for a good framegen experience.

We’ll need to see how PresentMon interacts with XeMFG going forward in order to see whether it can be used reliably to gauge a game’s input lag and its suitability for use with XeMFG on a given Arc graphics platform.

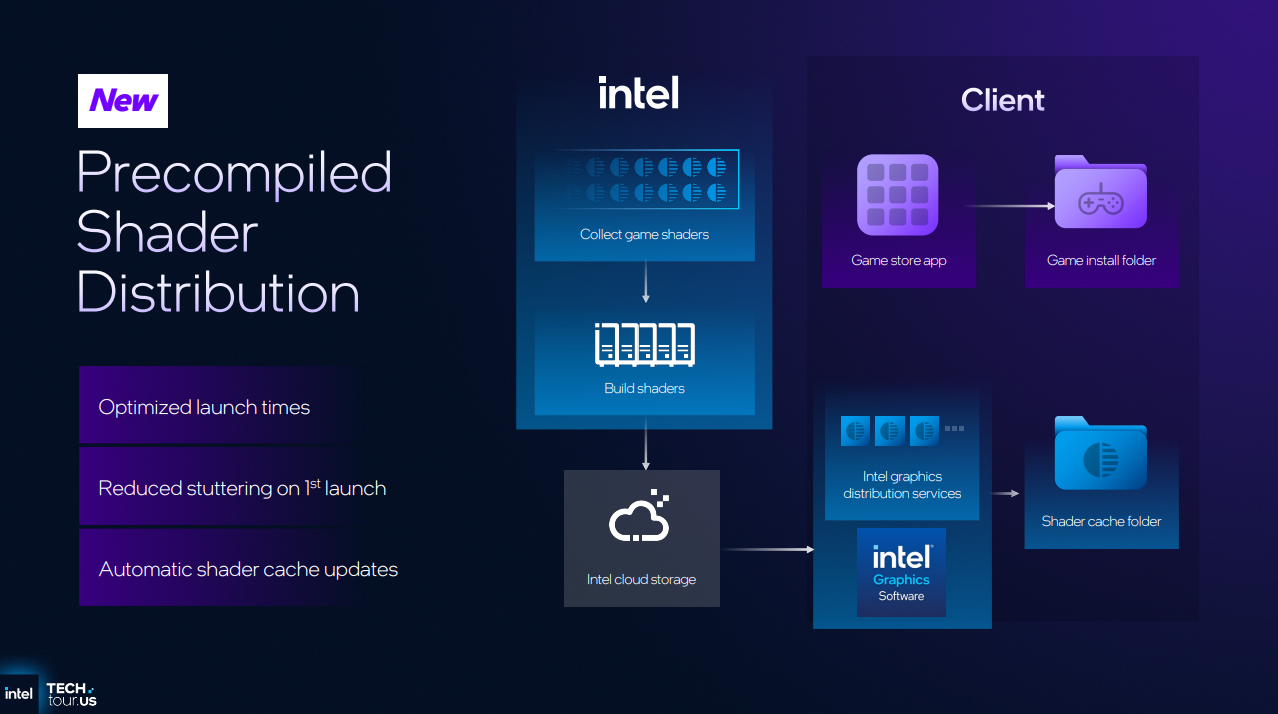

To improve game load times and in-game smoothness, Intel also plans to begin distributing pre-compiled shaders from the cloud for compatible installed games on a user’s system using the Intel Graphics Software utility.

Anybody who’s loaded a modern AAA title knows the pain of long load times and in-game stutter as shaders compile for the first time, and Intel seems to think it’s a worthy investment to allocate cloud resources to make this problem go away entirely. This isn’t a game-changer, but it is a straightforward and handy perk for users of Arc graphics products.
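Intel hasn't described how its cloud shader distribution works under the hood. As a hypothetical sketch, a precompiled-shader cache might key binaries on the game, driver version, GPU architecture, and a hash of the shader source, since a compiled shader is generally only valid for the GPU and compiler version that produced it. Any shader missing from the downloaded set would fall back to local compilation. Every name below, including `compile_locally`, is illustrative.

```python
import hashlib


def shader_key(game_id: str, driver: str, gpu_arch: str, source: str) -> tuple:
    """Hypothetical cache key: compiled binaries depend on GPU and compiler version."""
    digest = hashlib.sha256(source.encode("utf-8")).hexdigest()
    return (game_id, driver, gpu_arch, digest)


def compile_locally(source: str) -> bytes:
    """Stand-in for the slow, stutter-inducing on-device compile."""
    return b"compiled:" + source.encode("utf-8")


class ShaderCache:
    def __init__(self, downloaded: dict):
        self.binaries = dict(downloaded)  # precompiled binaries fetched ahead of time
        self.local_compiles = 0

    def get(self, game_id: str, driver: str, gpu_arch: str, source: str) -> bytes:
        key = shader_key(game_id, driver, gpu_arch, source)
        if key not in self.binaries:  # cache miss: compile once, then reuse
            self.local_compiles += 1
            self.binaries[key] = compile_locally(source)
        return self.binaries[key]


prebuilt = {shader_key("game", "drv-1", "xe3", "shader A"): b"prebuilt:A"}
cache = ShaderCache(prebuilt)
cache.get("game", "drv-1", "xe3", "shader A")  # served from the download
cache.get("game", "drv-1", "xe3", "shader B")  # compiled locally
cache.get("game", "drv-1", "xe3", "shader B")  # reused from the local cache
print("local compiles:", cache.local_compiles)
```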

Overall, our first experiences with Xe3 and the larger 12-Xe3-core GPU on Panther Lake are promising. At least for now, Intel remains committed to Arc graphics despite its recent earth-shaking deal with Nvidia, and we can’t wait to test Xe3 in greater depth once shipping Panther Lake systems arrive late this year or early next.

As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.

-

bit_user Thanks for the detailed rundown! I always like to have a peek under the hood at how GPUs are evolving.

> The article said: Intel emphasized that Xe3 is not based on the Celestial architecture, even though its name conveniently maps to that codename's place in Intel's past roadmaps. Let us repeat: this is not Celestial. Intel classifies Xe3 GPUs as part of the Battlemage family because the capabilities the chip presents to software are similar to those of existing Xe2 products. Therefore, it will include Panther Lake iGPUs under the Arc B-series umbrella.

IMO, it's their fault for not simply calling it Xe2.5, or something like that.

In fact, didn't they distinguish between the Tiger Lake and Alder Lake iterations of Xe by referring to the latter as gen 12.2? Then, Alchemist dGPUs were 12.7? This is certainly how Wikipedia presents it, and they're supposed to rely on verifiable sources for stuff (i.e. not just invent their own classifications for things).

https://en.wikipedia.org/wiki/List_of_Intel_graphics_processing_units#Gen12.2

> The article said: The next "clean" generational leap will come with Xe3P Arc GPUs, but it's unclear when those parts will arrive.

It's nice to hear they're still at least talking about future dGPUs, whatever you want to call them!

> The article said: Intel says the changes within Xe3 are meant to improve two pain points: better utilization of available resources ... and making the architecture more scalable

A lot of people still seem to repeat the mantra that Alchemist was only bad because of its immature "drivers". When you dig into a lot of these GPU whitepapers, resource utilization and bottlenecks tend to receive a lot of focus. Therefore, I was convinced that Alchemist was held back not only by the state of its drivers, but by other bottlenecks and scalability issues that would simply take a couple more generations to work through. I'm therefore not surprised to hear confirmation of this assumption.

I think Moore Threads' initial dGPU offerings were probably also beset with similar issues.

> The article said: Intel says each Xe3 Xe Core can keep up to 25% more threads in flight - from eight to 10 - versus its predecessor, and the core can variably allocate partitions of each Xe Vector Engine's register file per thread in order to achieve better utilization.

Maybe I missed it, but this is the first disclosure of SMT width I recall seeing from Intel since the old Skylake-era Gen 9 graphics whitepaper! Good to know!

> The article said: the core can variably allocate partitions of each Xe Vector Engine's register file per thread in order to achieve better utilization.

Interesting coincidence that AMD's RDNA 4 also improved in this regard.

> The article said: Xe3 now includes 256KB of shared local memory, up from 192KB in Xe2 on Lunar Lake and the improved Xe-LPG architecture on Meteor Lake. Intel says that workloads spilling out of this shared local memory is a major reason for performance pitfalls on older Arc iGPUs

Interesting. I'd have assumed this was 100% in service of AI workloads, since more on-chip memory benefits convolutions and batched inferencing. The PS5 Pro's GPU allegedly used local memory to enable its blistering 300 TOPS, which is far beyond what you could do if you relied primarily on GDDR6 bandwidth.

> The article said: That responsibility falls to a thread sorting unit that could previously cause backups in the ray-tracing pipeline.

I wonder how this compares to Nvidia's Shader Execution Reordering (SER), which they improved in Blackwell and also used ray tracing to highlight.

https://developer.nvidia.com/blog/improve-shader-performance-and-in-game-frame-rates-with-shader-execution-reordering/

Eximo Bigger numbers sell though. The rebranding saga continues.

(Or it was a marketing mistake and they just chose not to correct it)

thestryker No matter what the power consumption ends up looking like, being able to deliver a linear performance increase is impressive. I'm guessing that's in no small part due to the increase in L2 cache, since the maximum supported LPDDR5X speed only adds 12.5% more memory bandwidth.

Somewhat surprising that this is an in-between graphics architecture rather than a move to Celestial, though now I'm wondering if this was behind the resurrection of bigger Battlemage rumors. I suppose around the end of next year we'll see what Celestial delivers (hopefully some dGPUs with it).

Notton Panther Lake's 12Xe³ looks impressive on paper, but I'll reserve judgement until someone puts it in a handheld.

If the graphs are accurate, then it looks like it would be slightly faster than Z2E at 17W.

And hopefully it's not overpriced like Z2E.