Nvidia Blackwell and GeForce RTX 50-Series GPUs: Specifications, release dates, pricing, and everything we know (updated)

Here's what we know and expect from Nvidia's next-generation Blackwell GPU architecture.

The next-generation Nvidia Blackwell GPU architecture and RTX 50-series GPUs are coming, basically on schedule. Nvidia officially detailed the first four cards in the Blackwell RTX 50-series family at CES 2025, during CEO Jensen Huang's keynote on January 6. We expect the various Blackwell GPUs will join the ranks of the best graphics cards, replacing their soon-to-be-prior-generation counterparts.

When we spoke with some people in early 2024, the expectation was that we'd see at least the RTX 5090 and RTX 5080 by the time the 2024 holiday season rolled around. But then came the delay of Blackwell B200 along with packaging problems, and that appears to have pushed things back. Now, we're looking at a January 2025 announcement with at least one or two models coming before the end of the month, and perhaps as many as four different desktop cards — and the possibility of laptop RTX 50-series also exists.

Nvidia already provided many of the core details for its data center Blackwell B200 GPU. The AI and data center variants will inevitably differ from consumer parts, but there are some shared aspects between past consumer and data center Nvidia GPUs, and that should continue. That gives some good indications of certain aspects of the future RTX 50-series GPUs.

Things are starting to clear up now, with hard specifications and pricing details for the first four GPUs. There's still no official word on the 5060-class GPUs, but those should arrive at some point in the coming months.

Let's talk about specifications, technology, pricing, and other details. We've been updating this article for a while now, as information became available, and we're now in the home stretch. Here's everything we know about Nvidia Blackwell and the RTX 50-series GPUs.

Blackwell and RTX 50-series Release Dates

Despite what we personally heard in early 2024, the RTX 50-series didn't make it out the door in 2024, but the first models will launch in January 2025. There were some delays, but not directly related to the consumer GPUs.

Nvidia's data center Blackwell B100/B200 GPUs encountered packaging problems and were also delayed. Given how much money the data center segment raked in over the past year (see Nvidia's latest earnings), putting more money and wafers into getting B200 ready and available makes sense. Gamers? Yeah, we're no longer Nvidia's top priority.

The consumer Blackwell GPUs are "late," based on historical precedent. The Ada Lovelace RTX 40-series GPUs first appeared in October 2022. The Ampere RTX 30-series GPUs first appeared in September 2020. Prior to that, RTX 20-series launched two years earlier in September 2018, and the GTX 10-series was in May/June 2016, with the GTX 900-series arriving in September 2014. That's a full decade of new Nvidia GPU architectures arriving approximately every two years. Even so, we're still only a few months beyond the normal cadence.

And now we're into 2025, and Nvidia spilled the beans on the RTX 5090, 5080, 5070 Ti, and 5070 — along with mobile variants — during the CES 2025 keynote. The top-tier RTX 5090 and 5080 will arrive first, in January 2025. The RTX 5070 Ti and RTX 5070 will come next, probably in February. The 5060-class hardware could come any time within the next six months after the first 50-series GPUs. As usual, we expect Blackwell GPUs to follow the typical staggered release schedule.

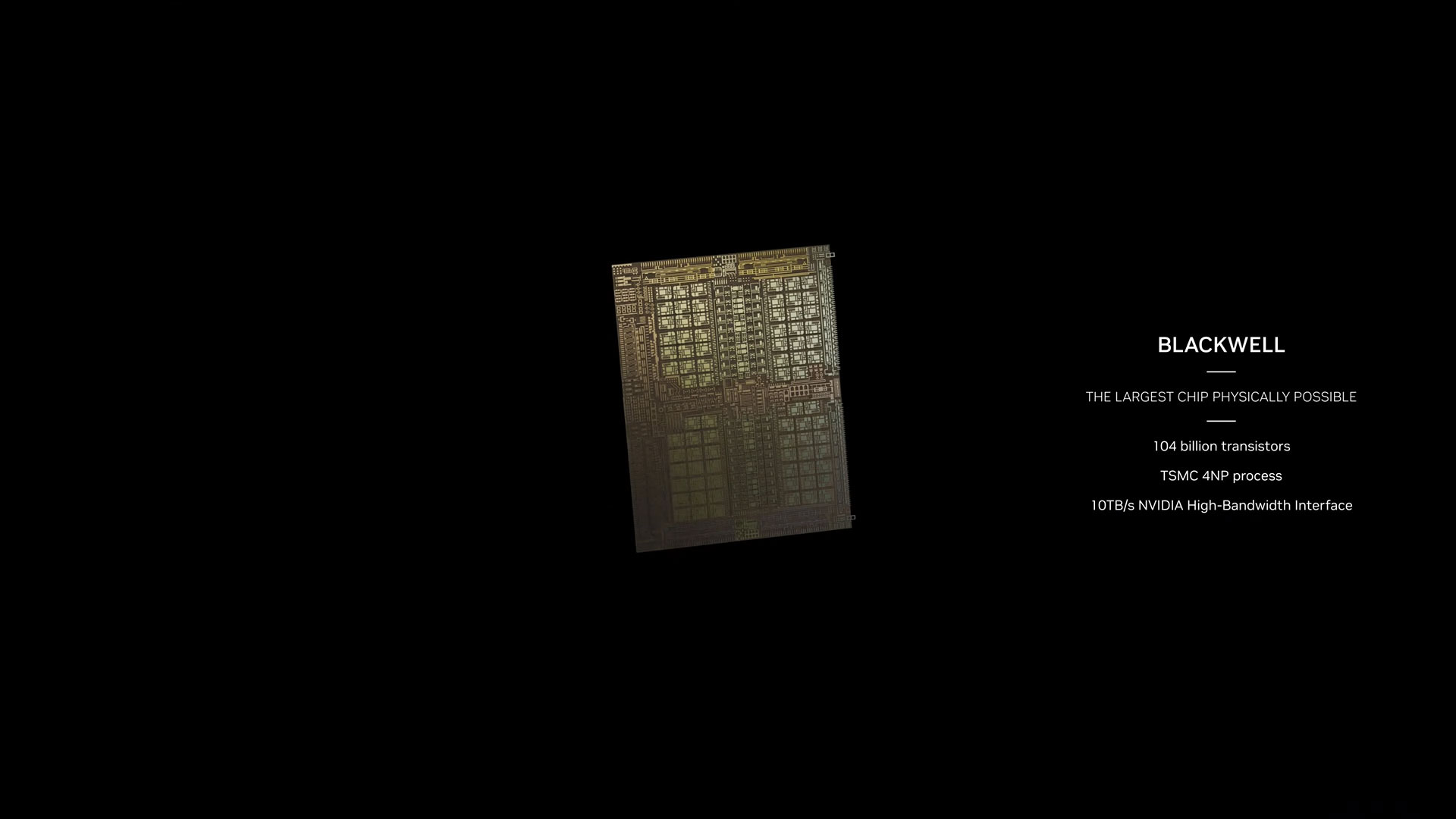

Still TSMC 4N, 4nm Nvidia

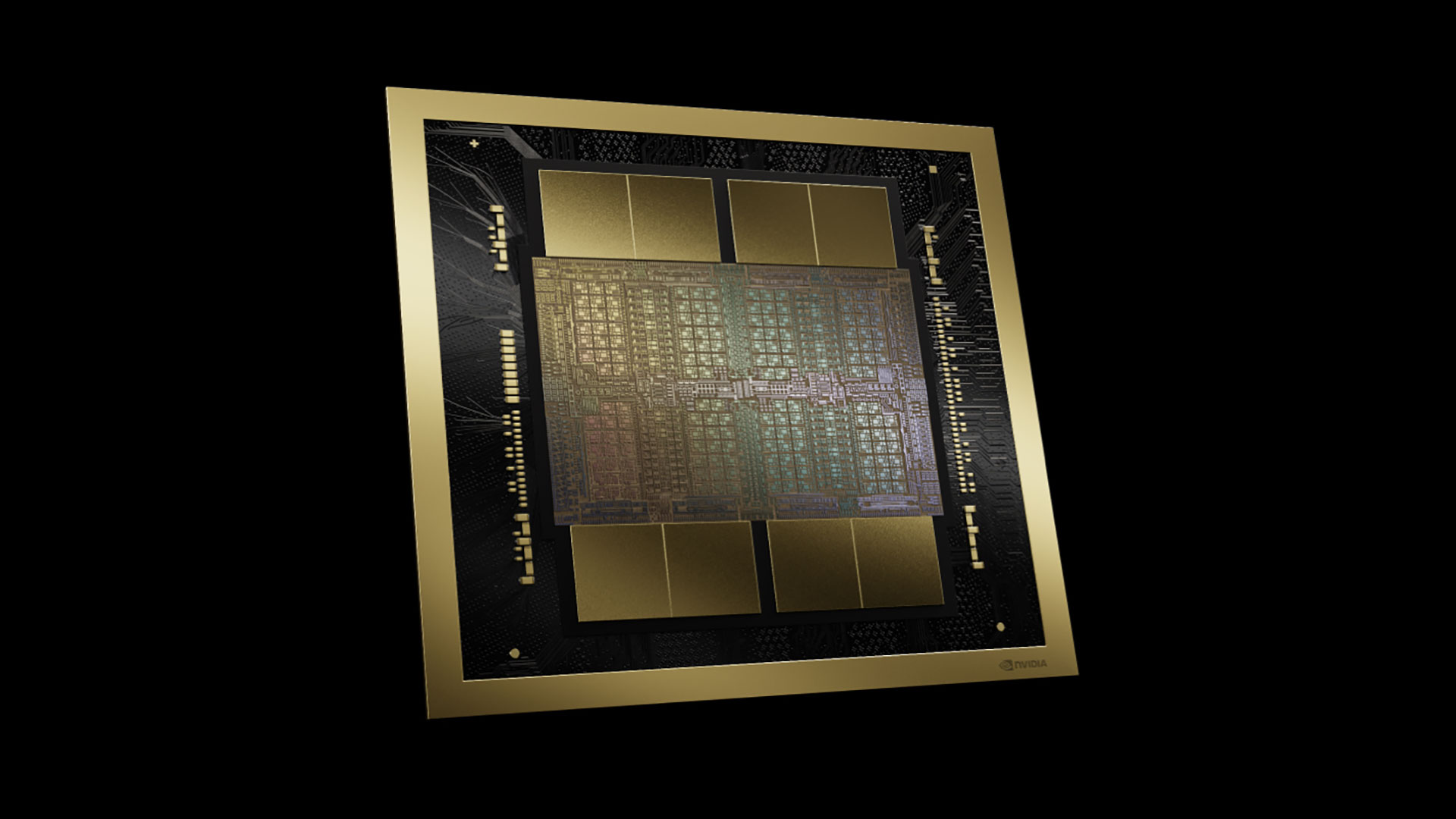

One of the surprising announcements at GTC 2024 was that Blackwell B200 will use the TSMC 4NP node ("4nm Nvidia Performance"), basically a tuned/tweaked variation of the 4N node used on the RTX 40-series. Blackwell B200 also uses a dual-chip solution, with the two identical chips linked via a 10 TB/s NV-HBI (Nvidia High Bandwidth Interface) connection.

While it's certainly true that process names have largely become detached from physical characteristics, many expected Nvidia to move to a variant of TSMC's cutting-edge N3 process technology. Instead, it opted for a refinement of the existing 4N node that has already been used with Hopper and Ada Lovelace GPUs for the past two years.

For the consumer Blackwell chips, though, Nvidia will stick with TSMC 4N. Yes, the same node and process as Ada, with no changes that we're aware of. Going this route certainly offers some cost savings, though TSMC doesn't disclose the contract pricing agreements with its various partners. Perhaps Nvidia just didn't think it needed to move to a 3nm-class node for this generation.

AMD will be moving to TSMC N4, while Intel will use TSMC N5 for Battlemage. So, even though Nvidia didn't choose to pursue 3nm or 2nm this round, it's still equal to or better than the competition. And the Ada architecture was already well ahead in terms of efficiency, performance, and features in many areas.

Next-generation GDDR7 memory

Blackwell GPUs will move to GDDR7 memory, at least for the RTX 5070 and above. We don't know for certain what the 5060-class will use, and we don't have official clock speeds, but we do know that the 5070 through 5090 are all using GDDR7 memory.

The current generation RTX 40-series GPUs use GDDR6X and GDDR6 memory, clocked at anywhere from 17Gbps to 23Gbps. GDDR7 has target speeds of up to 36Gbps, 50% higher than GDDR6X and 80% higher than vanilla GDDR6. SK hynix says it will even have 40Gbps chips, though the exact timeline for when those might be available hasn't been given. Regardless, GDDR7 will provide a much-needed boost to memory bandwidth at all levels.

Nvidia won't actually ship cards with memory clocked at 36Gbps, though. In the past, it used 24Gbps GDDR6X chips but clocked them at 22.4Gbps or 23Gbps — and some 24Gbps Micron chips were apparently down-binned to 21Gbps in the various RTX 4090 graphics cards that we tested. The RTX 5090, 5070 Ti, and 5070 will clock their GDDR7 at 28Gbps, while the RTX 5080 opts for a higher memory speed of 30Gbps. Either way, that's still a healthy bump to bandwidth.

At 28Gbps, GDDR7 memory provides a solid 33% increase in memory bandwidth compared to the 21Gbps GDDR6X used on the RTX 4090. The RTX 5080 opts for 30Gbps GDDR7, a 30% increase in bandwidth relative to the RTX 4080 Super, and 34% more bandwidth than the original 4080's 22.4Gbps memory. As with so many other aspects of Blackwell, it remains to be seen just how far Nvidia and its partners will push things.
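The percentages above compare per-pin speeds, and total bandwidth follows from multiplying the per-pin data rate by the bus width and dividing by eight bits per byte. As a minimal sketch of that arithmetic (the function names `bandwidth_gbs` and `pct_increase` are ours, purely for illustration):

```python
def bandwidth_gbs(speed_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s from per-pin speed (Gbps) and bus width (bits)."""
    return speed_gbps * bus_width_bits / 8


def pct_increase(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100


# Per-pin speed uplifts quoted in the text (bus width held constant).
print(round(pct_increase(28, 21)))    # 28Gbps GDDR7 vs. 21Gbps GDDR6X -> 33
print(round(pct_increase(30, 23)))    # RTX 5080 vs. RTX 4080 Super -> 30
print(round(pct_increase(30, 22.4)))  # RTX 5080 vs. original RTX 4080 -> 34

# Total bandwidth for the four announced cards (speed in Gbps, bus width in bits).
announced = [("RTX 5090", 28, 512), ("RTX 5080", 30, 256),
             ("RTX 5070 Ti", 28, 256), ("RTX 5070", 28, 192)]
for name, speed, bus in announced:
    print(f"{name}: {bandwidth_gbs(speed, bus):.0f} GB/s")
```

Note that the RTX 5090 also widens its bus, so its total bandwidth gain over the prior generation is larger than the per-pin figure alone suggests.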

Nvidia will keep using a large L2 cache with Blackwell. This will provide even more effective memory bandwidth — every cache hit means a memory access that doesn't need to happen. With a 50% cache hit rate as an example, that would double the effective memory bandwidth, though note that hit rates vary by game and settings, with higher resolutions in particular reducing the hit rate.
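To see why a 50% hit rate doubles effective bandwidth, consider a deliberately idealized model: if a fraction of accesses is served from L2, only the remainder reaches VRAM, so the same DRAM bandwidth supports proportionally more total traffic. The sketch below assumes the cache itself is never the bottleneck, which real hardware won't fully satisfy, and the function name is hypothetical:

```python
def effective_bandwidth(raw_gbs: float, hit_rate: float) -> float:
    """Idealized effective bandwidth when `hit_rate` of accesses never reach VRAM.

    Assumption (ours): the L2 cache has unlimited bandwidth, so only the
    misses are limited by the raw DRAM bandwidth.
    """
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in the range [0, 1)")
    return raw_gbs / (1.0 - hit_rate)


# Example using a 960 GB/s card at a few illustrative hit rates.
for hit_rate in (0.0, 0.25, 0.5):
    print(f"hit rate {hit_rate:.0%}: {effective_bandwidth(960, hit_rate):.0f} GB/s effective")
```

The hit rates here are illustrative placeholders, not measured values; as noted, real hit rates drop at higher resolutions.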

GDDR7 also potentially addresses the issue of memory capacity versus interface width. At GTC, we were told that 16Gb chips (2GB) are in production, with 24Gb (3GB) chips also coming. Are the larger chips with non-power-of-two capacity ready for upcoming Blackwell GPUs? Apparently not for the initial salvo, or at least not for the initial desktop cards.

There's been at least one rumor suggesting Nvidia might have 16GB (2GB chips) and 24GB (3GB chips) variants of the RTX 5080. As long as the price difference isn't too onerous and the other specs remain the same, that wouldn't be a bad approach. The base models announced so far all come with 2GB chips, while upgraded variants could have 50% more VRAM capacity courtesy of the 3GB chips.

We've already seen this with the RTX 5090 Laptop GPU, which uses the same GB203 chip as the desktop RTX 5080/5070 Ti, with a 256-bit memory interface. But then it uses 3GB GDDR7 chips so that the resulting graphics chip has 24GB of VRAM. If Nvidia can do this for the mobile 5090, why isn't it already doing it for the desktop 5080? Probably because it doesn't feel that it needs to — and to leave room for a mid-cycle refresh using those chips in the future.

Right now, there's no pressing need for consumer graphics cards to have more than 24GB of memory. But RTX 5090 has a 512-bit interface, meaning it will come with a default 32GB configuration and could offer a 48GB variant in the future. The higher capacity GDDR7 chips could be particularly beneficial for professional and AI focused graphics cards, where large 3D models and LLMs are becoming increasingly common. A 512-bit interface with 3GB chips on both sides of the PCB could yield a professional RTX 6000 Blackwell Generation as an example with 96GB of memory.

More importantly, the availability of 24Gb chips means Nvidia (along with AMD and Intel) could put 18GB of VRAM on a 192-bit interface, 12GB on a 128-bit interface, and 9GB on a 96-bit interface, all with the VRAM on one side of the PCB. We could also see 24GB cards with a 256-bit interface, and 36GB on a 384-bit interface — and double that capacity for professional cards. Pricing will certainly be a factor for VRAM capacity, but it's more likely a case of "when" rather than "if" we'll see 24Gb GDDR7 memory chips on consumer GPUs.
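The capacity figures above all come from the same rule of thumb: each GDDR chip sits on a 32-bit slice of the memory interface, so the chip count is the bus width divided by 32, doubled when chips are mounted on both sides of the PCB. A small sketch of that calculation, with a helper name of our own choosing:

```python
CHIP_INTERFACE_BITS = 32  # each GDDR chip normally occupies a 32-bit slice of the bus


def vram_gb(bus_width_bits: int, chip_gb: int, both_sides: bool = False) -> int:
    """VRAM capacity in GB for a given bus width and per-chip capacity."""
    if bus_width_bits % CHIP_INTERFACE_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHIP_INTERFACE_BITS
    if both_sides:
        chips *= 2  # two chips share each 32-bit slice, one per PCB side
    return chips * chip_gb


for bus in (96, 128, 192, 256, 384, 512):
    print(f"{bus}-bit: {vram_gb(bus, 2)}GB with 2GB chips, "
          f"{vram_gb(bus, 3)}GB with 3GB chips")

# Professional-style configuration: 512-bit, 3GB chips on both sides of the PCB.
print(vram_gb(512, 3, both_sides=True))  # 96
```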

But there's more going on than just raw VRAM capacity. Shown during the CES 2025 keynote, RTX Neural Materials could cut the VRAM requirements of textures by about one third. If that needs to be implemented on a per-game basis, it won't help 8GB cards in all situations, but if it's a driver-side enhancement, 8GB could actually be "enough" for most games again.

Blackwell architectural updates

The Blackwell architecture will have various updates and enhancements over the previous generation Ada Lovelace architecture. Nvidia hasn't gone into a lot of detail, but one thing is clear: AI is a big part of Nvidia's plans for Blackwell. We know that AI TOPS (teraops) performance per tensor core has been doubled, but Nvidia is pulling a fast one there. Technically, that's for FP4 workloads (which should be classified as FP4 TFLOPS, not AI TOPS, but we digress).

The native support for FP4 and FP6 formats boosts raw compute with lower precision formats, but the per-tensor-core compute has remained the same otherwise. Every generation of Nvidia GPUs has contained other architectural upgrades as well, and we can expect the same to occur this round.

Nvidia has increased the potential ray tracing performance in every RTX generation, and Blackwell continues that trend, doubling the ray/triangle intersection rates for the RT cores. With more games like Alan Wake 2 and Cyberpunk 2077 pushing full path tracing, not to mention the potential for modders to use RTX Remix to enhance older DX8- and DX9-era games with full path tracing, there's even more need for higher ray tracing throughput.

What other architectural changes might Blackwell bring? Considering Nvidia is sticking with TSMC 4N for the consumer parts, we don't anticipate massive alterations. There will still be a large L2 cache, and the tensor cores are now in charge of handling the optical flow calculations for frame generation and multi frame generation. DLSS 4 "neural rendering" is coming with various other enhancements including multi-frame generation on the RTX 50-series.

Raw compute, for both graphics and more general workloads, sees a modest bump on the RTX 5090 compared to the RTX 4090, though other GPUs don't seem to be getting as much of an increase. Again, AI might make up for that, but a lot remains to be seen. The 5070 for example offers up 31 TFLOPS of compute, compared to 29 TFLOPS on the 4070. The 5090 has 105 TFLOPS compared to 83 TFLOPS on the 4090.

RTX 50-Series Pricing

How much will the RTX 50-series GPUs cost? Frankly, considering the current market conditions, we're pleasantly surprised so far. A lot of people were understandably angry during the 40-series launches at the generational increase in prices, and with the 50-series Nvidia is mostly holding steady or even stepping back a bit. That doesn't apply to the top-end RTX 5090, though, which will cost $1,999 at launch — $400 more than the 4090.

For dedicated desktop graphics cards we're now living in a world where "budget" means around $300, "mainstream" means $400–$600, "high-end" is for GPUs costing $800 to $1,000, and the "enthusiast" segment targets $1,500 or more. Or at least, that appears to be Nvidia's take on the situation. Other than the increase on the 5090, so far the other GPUs are at the same price or $50 lower than their direct predecessors.

Blackwell specifications

With the official reveal out of the way, we have the specifications for the RTX 5070 and above. The 5060 Ti and 5060 are more speculative, with various unknowns and question marks. But here's our updated specifications table (and we'll update the table as other 50-series GPUs are officially revealed).


| Graphics Card | RTX 5090 | RTX 5080 | RTX 5070 Ti | RTX 5070 | RTX 5060 Ti | RTX 5060 |
|---|---|---|---|---|---|---|
| Architecture | GB202 | GB203 | GB203 | GB205 | GB207 | GB207 |
| Process Node | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N |
| Transistors (Billion) | 92.2 | 45.6 | 45.6 | 31.0 | ? | ? |
| Die size (mm^2) | 750 | 378 | 378 | 263 | ? | ? |
| SMs | 170 | 84 | 70 | 48 | 36? | 24? |
| GPU Shaders (ALUs) | 21760 | 10752 | 8960 | 6144 | 4608? | 3072? |
| Tensor / AI Units | 680 | 336 | 280 | 192 | 144? | 96? |
| Ray Tracing Units | 170 | 84 | 70 | 48 | 36? | 24? |
| Boost Clock (MHz) | 2407 | 2617 | 2452 | 2512 | 2500? | 2500? |
| VRAM Speed (Gbps) | 28 | 30 | 28 | 28 | 30? | 28? |
| VRAM (GB) | 32 | 16 | 16 | 12 | 8? | 8? |
| VRAM Bus Width (bits) | 512 | 256 | 256 | 192 | 128? | 128? |
| L2 Cache (MB) | 96 | 64 | 48 | 48 | 32? | 24? |
| Render Output Units | 176 | 112 | 96 | 80 | 48? | 32? |
| Texture Mapping Units | 680 | 336 | 280 | 192 | 144 | 96 |
| TFLOPS FP32 (Boost) | 104.8 | 56.3 | 43.9 | 30.9 | 23.0? | 15.4? |
| TFLOPS FP16 (INT8 TOPS) | 838 (3352) | 450 (1801) | 352 (1406) | 247 (988) | 184? (737?) | 123? (492?) |
| Bandwidth (GB/s) | 1792 | 960 | 896 | 672 | 480? | 448? |
| TBP (watts) | 575 | 360 | 300 | 250 | 200? | 150? |
| Launch Date | Jan 2025 | Jan 2025 | Feb 2025 | Feb 2025 | May 2025? | Jun 2025? |
| Launch Price | $1,999 | $999 | $749 | $549 | $399? | $299? |

GPU boost clocks are slightly lower than the 40-series on several GPUs, but note that the 40-series cards tended to exceed the stated boost clocks by around 200 MHz on average. The rest of the specs all follow from the SMs (Streaming Multiprocessors), which gives the CUDA, RT, and tensor core counts based on the usual 128 CUDA, 1 RT, and 4 tensor cores per SM. There are also four TMUs (Texture Mapping Units) per SM.
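To make the "everything follows from the SMs" point concrete, here's a rough sketch that derives the unit counts and FP32 throughput from the SM count and boost clock. The `GpuSpec` class is hypothetical, and the FP32 figure assumes the conventional two floating-point operations per shader per clock (one fused multiply-add):

```python
from dataclasses import dataclass

# Per-SM unit counts as described in the text.
CUDA_PER_SM = 128
RT_PER_SM = 1
TENSOR_PER_SM = 4
TMU_PER_SM = 4


@dataclass(frozen=True)
class GpuSpec:
    name: str
    sms: int
    boost_mhz: int

    @property
    def shaders(self) -> int:
        return self.sms * CUDA_PER_SM

    @property
    def rt_cores(self) -> int:
        return self.sms * RT_PER_SM

    @property
    def tensor_cores(self) -> int:
        return self.sms * TENSOR_PER_SM

    @property
    def tmus(self) -> int:
        return self.sms * TMU_PER_SM

    @property
    def fp32_tflops(self) -> float:
        # Assumption: 2 FLOPs per shader per clock (fused multiply-add).
        # shaders * 2 * MHz gives MFLOPS; divide by 1e6 for TFLOPS.
        return self.shaders * 2 * self.boost_mhz / 1e6


cards = [GpuSpec("RTX 5090", 170, 2407), GpuSpec("RTX 5080", 84, 2617),
         GpuSpec("RTX 5070 Ti", 70, 2452), GpuSpec("RTX 5070", 48, 2512)]
for gpu in cards:
    print(f"{gpu.name}: {gpu.shaders} shaders, {gpu.rt_cores} RT, "
          f"{gpu.tensor_cores} tensor, {gpu.tmus} TMUs, "
          f"{gpu.fp32_tflops:.1f} TFLOPS FP32")
```

These are theoretical peaks at the rated boost clock; real-world clocks, and therefore throughput, will vary by card and workload.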

GPC (graphics processing cluster) counts aren't listed, but the 5090 has 11 (out of a potential 12 for the full GB202 chip), 5080 has seven, 5070 Ti has six, and 5070 has five. The ROPS are tied to the GPC counts, with 16 ROPS per GPC. The 5060 class GPUs are probably still a few months out, but the RTX 5070 Laptop GPU has been announced and it still has a 128-bit memory interface and 8GB of VRAM. <sigh>
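The ROP counts can be reproduced from the GPC counts with a one-line calculation. This is just a quick illustration using the figures quoted above:

```python
ROPS_PER_GPC = 16  # as stated in the text

# GPC counts for the four announced desktop cards.
gpcs = {"RTX 5090": 11, "RTX 5080": 7, "RTX 5070 Ti": 6, "RTX 5070": 5}

rops = {name: count * ROPS_PER_GPC for name, count in gpcs.items()}
for name, count in rops.items():
    print(f"{name}: {count} ROPs")
```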

We'll continue to update this table with other official numbers once those become available. Eventually, everything that's unknown or guesswork will get replaced with concrete information. There will almost certainly be far more than the four announced GPUs plus our two placeholders, just as there are ten different RTX 40-series desktop GPUs and twelve different RTX 30-series desktop variants.

16-Pin Power Connectors, Take Three

After the 16-pin meltdown fiasco that plagued the first wave of RTX 4090 cards, many people probably want Nvidia to abandon the new PCI-SIG standard. That's not going to happen, though the change to the modified ATX 12V-2x6 connector has hopefully put any potential problems to rest.

What's interesting is that the RTX 40-series wasn't the first generation of GPUs to come with a 16-pin connector. The RTX 30-series used 12-pin adapters (without the extra four sense pins of 12VHPWR) starting clear back in 2020. We didn't hear a bunch of stories about melting 3090 and 3080 adapters, but then most of those cards had TGPs well under 400W. The RTX 3090 Ti GPUs were the first to use the newer 16-pin connector, but again with no rash of reported meltdowns. With RTX 40-series making widespread use of 16-pin, that means Blackwell will be the third generation of Nvidia GPUs to at least partially adopt the standard.

One of the key elements with the 4090 melting problems seems to be pulling 450W or more through a single relatively compact connector. With the 5090 set to have a stock power level of 575W, it's a big step up from the 4090. Let's hope everyone involved has learned a few lessons from the 4090 meltdowns and builds the rising generation to be more robust.
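For a rough sense of what those wattages mean at the connector, here's a back-of-the-envelope estimate of current per pin. It rests on several simplifying assumptions of ours: all board power flows through the 16-pin connector (none through the PCIe slot), the connector's six 12V supply pins share the load perfectly evenly, and the rail sits at exactly 12V. Real cards won't match this exactly:

```python
def amps_per_pin(board_power_w: float, volts: float = 12.0, power_pins: int = 6) -> float:
    """Idealized current per 12V pin, assuming perfectly even load sharing."""
    return board_power_w / volts / power_pins


for watts in (450, 575):
    print(f"{watts}W: {amps_per_pin(watts):.2f} A per pin")
```

Uneven contact resistance would push individual pins above these idealized averages, which is part of why a poorly seated connector can be a problem.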

Another interesting point is that Nvidia's Founders Edition cards (5090, 5080, and 5070) have returned to an angled 16-pin connector rather than the vertical orientation of the 40-series. That's good, we think, as the 30-series was a bit easier to fit into cases since it didn't have the 8-pin to 16-pin adapters sticking straight up. The adapters are only necessary if you have an older PSU; an ATX 3.0 power supply doesn't need them. The cables on the new adapters are more flexible as well, which should help system builders.

The future GPU landscape

Nvidia won't be the only game in town for next-generation graphics cards. Intel's Battlemage has already launched, at least with the Arc B580, with Arc B570 now available. AMD RDNA 4 will also arrive at some point — the RX 9070 XT and RX 9070 should launch by March, with the 9060 cards coming by mid 2025.

But while there will certainly be competition, Nvidia has dominated the GPU landscape for the past decade. At present, the Steam Hardware Survey indicates Nvidia has 75.8% of the graphics card market, AMD sits at 16.2%, and Intel accounts for just 7.7% (with 0.3% "other"). That doesn't even tell the full story, however.

Both AMD and Intel make integrated graphics, and it's a safe bet that a large percentage of their respective market shares comes from laptops and desktops that lack a dedicated GPU. AMD's highest market share for what is clearly a dedicated GPU comes from the RX 6600, sitting at 0.99%. Intel doesn't even have a dedicated GPU listed in the survey — integrated Arc does show up with 0.24%, though. For the past three generations of AMD and Nvidia dedicated GPUs, the Steam survey suggests Nvidia has 92.1% of the market compared to 7.9% for AMD.

Granted, the details of how Valve collects data are opaque, at best, and AMD may be doing better than the survey suggests. Still, it's a green wave of Nvidia cards at the top of the charts. Recent reports from JPR say that Nvidia controlled 88% of the add-in GPU market compared to 12% for AMD, as another example of the domination currently going on.

Intel apparently wants Battlemage to compete more in the budget to mainstream segment of the graphics space. We'll have to see if there's a higher spec Battlemage GPU, and how high it reaches, but the B580 targets $249 while the B570 starts at $219. AMD competes better with Nvidia for the time being in performance, drivers, and efficiency, but we're still waiting for its GPUs to experience their "Ryzen moment," as GPU chiplets so far haven't proven an amazing success. And it seems AMD isn't going after anything above the "5070" level with RDNA 4.

Currently, Nvidia offers higher overall performance at the top of the GPU totem pole, and much higher ray tracing performance. It also dominates in the AI space, with related technologies like DLSS — including DLSS 3.5 Ray Reconstruction — Broadcast, and other features. It's Nvidia's race to lose, and it will take a lot of effort for AMD and Intel to close the gap and gain significant market share, at least outside of the integrated graphics arena. On the other hand, high Nvidia prices and a heavier focus on AI for the non-gaming market could leave room for its competitors. We'll see where the chips land soon enough.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

usertests
These generations are getting launches spaced out over a long period of time. For example, there was about 8.5 months between the launches of the RTX 4090 and RTX 4060.

So Nvidia could launch the 5090 and maybe 5080 this year, but will likely hold back lower tiers of cards to avoid driving down the prices of the existing Lovelace cards. It would be funny to see the budget cards use the 24 Gb GDDR7 chips which are not in production yet. RTX 5090 could get a 384-bit bus and 24 GB again, and a 96-bit 5060/5050 could get 9 GB.

valthuer
@JarredWaltonGPU Is there any chance Nvidia will stay true to its original roadmap and release RTX-50 in 2025,

https://www.tomshardware.com/news/nvidia-ada-lovelace-successor-in-2025

or are we long past that at this point?

Dementoss
> thisisaname said: One fact not to have leaked is the price.

No doubt prices will be even higher, along with the power consumption.

NightLight
TDP will decide if I upgrade. On the 1080 they got everything together. Decent size, great performance. These super high wattage cards are just ridiculous.

JarredWaltonGPU
> valthuer said: @JarredWaltonGPU Is there any chance Nvidia will stay true to its original roadmap and release RTX-50 in 2025,
>
> https://www.tomshardware.com/news/nvidia-ada-lovelace-successor-in-2025
>
> or are we long past that at this point?

Note that based on that image, "Ada Lovelace-Next" lands right near the 2024/2025 transition. Like, if you draw a vertical line upward from the "2025" at the bottom of the chart, and if that represents Jan 1, 2025, then having Blackwell first arrive at the end of 2024 makes sense. But even then, I think that timeline is less of a hard roadmap and more a suggestion as to how things will come out. "Hopper-Next" was announced at the beginning of March, Grace-Next hasn't been announced, and Ada-Next is still to be announced as well. Both of those may be revealed at close to the same time.

Ultimately, it comes down to demand for the products. If all the Ada GPUs (particularly high-end/enthusiast) are mostly sold out, Nvidia will be more likely to release Blackwell sequels sooner than later. And I won't name any person or company in particular, but I did speak to some folks at GTC that were pretty adamant that Blackwell consumer GPUs would arrive this year. Some people also mentioned that the Super refresh of the 40-series was "later than anticipated," but that they still didn't think it would impact Blackwell coming out this year.

> NightLight said: TDP will decide if I upgrade. On the 1080 they got everything together. Decent size, great performance. These super high wattage cards are just ridiculous.

If we're correct that these will all be on the TSMC 4NP node, I wouldn't expect significant improvements in power. Do keep in mind that only the 4090 has very high power use, with every other GPU drawing relatively less power than the 30-series. Sure, Ampere was higher than the previous generations, but it also established a precedent that most people didn't appear to mind: more power, more performance.

I'd be very surprised to see TGP go down on any family for the next generation, or really anything going forward. Like whatever comes after Blackwell, let's say it gets made on TSMC 3N or even 2N (or maybe Intel 18A!), I bet it will still stick with ~450W for the 6090, ~320W for the 6080, ~200W for 6070, and ~120W for 6060. And there will be Ti/Super cards spaced between those, so 6070 Ti at ~280W as an example, and 6060 Ti at ~160W.

oofdragon
Spoiler: RTX 5060 = RTX 4070, performance and price. Rest of lineup the same. Only change: RTX 5090 around 1.6x faster, cost around $3000.

hotaru251
> oofdragon said: RTX 5060 = RTX 4070, performance and price.

Depends on if they gimp the bus again.

That's why a 4060 was barely better or even worse than the 3060 even though it "should" have been better in every case.

Their choice of memory bus effectively downgraded it so it didn't have the generational improvement one expected.

CmdrShepard
My take for 5090 RTX knowing Jensen's megalomaniacal approach:

1. Base power will go from 450W to 600W
2. It will need second 12VHPWR because you will be able to unlock power limit to 750W (OEM cards will go up to 900W)
3. It will come with 360 mm AIO preinstalled
4. It will cost at least 50% more than 4090 RTX
5. It will be almost impossible to buy

Performance? Who cares, it has all the higher numbers so it must be faster.

Oh, and it will take 4 slots.

Makaveli
> CmdrShepard said: My take for 5090 RTX knowing Jensen's megalomaniacal approach:
>
> 1. Base power will go from 450W to 600W
> 2. It will need second 12VHPWR because you will be able to unlock power limit to 750W (OEM cards will go up to 900W)
> 3. It will come with 360 mm AIO preinstalled
> 4. It will cost at least 50% more than 4090 RTX
> 5. It will be almost impossible to buy
>
> Performance? Who cares, it has all the higher numbers so it must be faster.
>
> Oh, and it will take 4 slots.

If it comes with an AIO preinstalled that will be a 2.5-slot card at max.

The rest spot on.