GDDR7 graphics memory standard published by JEDEC — Next-gen GPUs to get up to 192 GB/s of bandwidth per device

Likely to be used in RDNA 4 and Blackwell GPUs

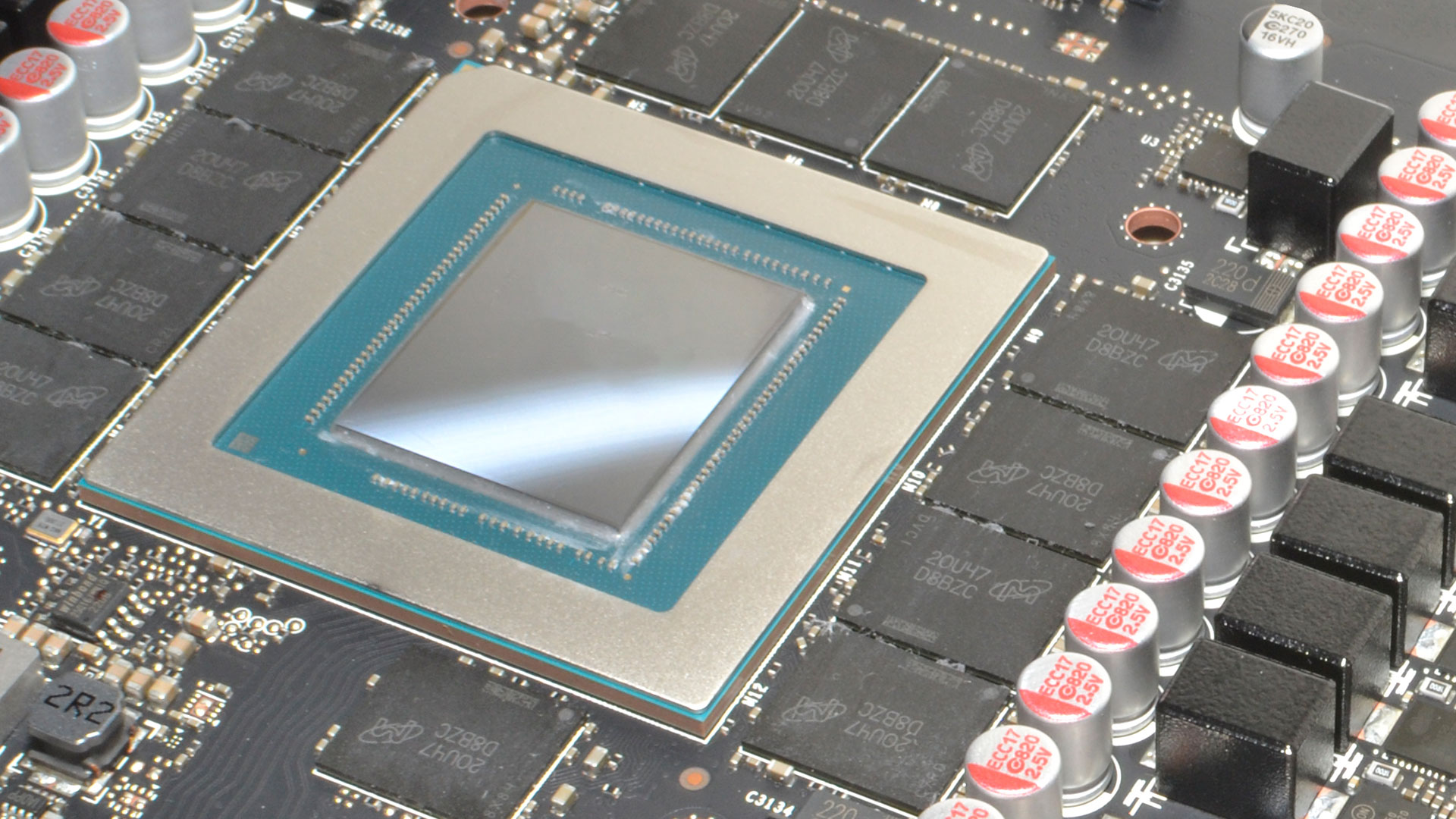

JEDEC has published the specification for the GDDR7 memory standard — next-generation memory that will be used for graphics cards — and AMD, Micron, Nvidia, Samsung, and SK hynix have all weighed in on the matter. We anticipate GDDR7 will be the memory of choice for high-end RDNA 4 and Blackwell GPUs, which are rumored to launch next year and vie for a spot on our list of the best graphics cards.

It has been nearly six years since the first graphics cards began supporting GDDR6 memory. That was Nvidia's RTX 20-series Turing architecture, which launched in September 2018. The first RTX 2080 and RTX 2080 Ti GPUs with GDDR6 had the memory clocked at 14 Gbps (14 GT/s), providing 56 GB/s per device. Later solutions like AMD's RX 7900 XTX have clocked as high as 20 Gbps, for 80 GB/s per device.

Nvidia helped to create a faster alternative in GDDR6X, which started at 19 Gbps in the RTX 3080, and eventually went as high as 23 Gbps in the most recent RTX 4080 Super. Officially, Micron rates its GDDR6X chips as high as 24 Gbps, which would yield 96 GB/s per device.

GDDR7 is set to provide a massive generational increase in bandwidth. JEDEC's specification will eventually reach as high as 192 GB/s per device. That works out to a memory speed of 48 Gbps, double the fastest GDDR6X. It gets to that speed in a different way than prior memory solutions, however.
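The per-device figures quoted above all follow from the same arithmetic. Here is a minimal sketch, assuming each memory device has a 32-bit data interface (the width implied by the article's numbers, not something it states outright); the function name and constant are purely illustrative.

```python
# Assumption: every GDDR6 / GDDR6X / GDDR7 device exposes a 32-bit data interface.
DEVICE_WIDTH_BITS = 32


def device_bandwidth_gbs(data_rate_gbps, width_bits=DEVICE_WIDTH_BITS):
    """Peak bandwidth of a single memory device in GB/s.

    data_rate_gbps is the per-pin data rate; dividing by 8 converts bits to bytes.
    """
    return data_rate_gbps * width_bits / 8


# Per-pin data rates mentioned in the article.
rates_gbps = {
    "GDDR6 at launch (RTX 2080)": 14,
    "GDDR6 (RX 7900 XTX)": 20,
    "GDDR6X (Micron's top rating)": 24,
    "GDDR7 (spec maximum)": 48,
}

for name, rate in rates_gbps.items():
    print(f"{name}: {rate} Gbps -> {device_bandwidth_gbs(rate):.0f} GB/s per device")
```

Under that assumption, 48 Gbps works out to the 192 GB/s per device in the headline.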

GDDR7 will use three levels of signaling (-1, 0, +1) to transmit three bits of data per two cycles, aka PAM3 signaling. That's a change from the NRZ (non-return-to-zero) signaling used in GDDR6, which transmits two bits over two cycles. That change alone accounts for a 50% improvement in data transmission efficiency, meaning the base clocks don't have to be twice as high as GDDR6 to double the bandwidth. Other changes include core-independent linear-feedback shift register (LFSR) training patterns, which improve accuracy and reduce training time, and double the number of independent channels (four versus two in GDDR6).
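To see why three signal levels can carry three bits in two cycles, note that two three-level symbols have 3 × 3 = 9 combinations, enough to cover the 8 values of a 3-bit group. The sketch below illustrates the idea only: the mapping table is hypothetical and is not the encoding defined in the JEDEC specification, and the names `pam3_encode` and `pam3_decode` are made up for this example.

```python
from itertools import product

LEVELS = (-1, 0, +1)

# All 9 possible pairs of three-level symbols, in a fixed order.
SYMBOL_PAIRS = list(product(LEVELS, repeat=2))

# Hypothetical mapping: the first 8 pairs stand for the 3-bit values 0..7.
# The ninth pair is simply left unused in this sketch.
ENCODE = {value: SYMBOL_PAIRS[value] for value in range(8)}
DECODE = {pair: value for value, pair in ENCODE.items()}


def pam3_encode(bits):
    """Turn a bit string (length divisible by 3) into a list of PAM3 symbols."""
    if len(bits) % 3 != 0:
        raise ValueError("bit string length must be a multiple of 3")
    symbols = []
    for i in range(0, len(bits), 3):
        symbols.extend(ENCODE[int(bits[i:i + 3], 2)])
    return symbols


def pam3_decode(symbols):
    """Inverse of pam3_encode: two symbols back into three bits."""
    bits = []
    for i in range(0, len(symbols), 2):
        bits.append(format(DECODE[(symbols[i], symbols[i + 1])], "03b"))
    return "".join(bits)


data = "101100"
symbols = pam3_encode(data)
assert pam3_decode(symbols) == data

# NRZ sends one bit per cycle, so it needs len(data) cycles for the same data.
print(f"PAM3 cycles: {len(symbols)}, NRZ cycles: {len(data)}")
print(f"Bits per cycle: PAM3 {len(data) / len(symbols)}, NRZ 1.0")
```

Six bits take four symbol times here instead of six, which is the 50% gain per cycle described above.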

None of this is new information: Samsung revealed many of the key GDDR7 details last July. However, the publication of the JEDEC standard marks a key milestone and suggests that public availability of GDDR7 solutions is imminent, relatively speaking.

Nvidia's next-generation Blackwell architecture is expected to use GDDR7 when it launches. We will likely get a data center version of Blackwell in late 2024, but that will use HBM3E memory instead of GDDR7. The consumer-level products will most likely arrive in early 2025, and as usual, there will be professional and data center variants of those parts. AMD is also working on RDNA 4, and we expect it will also use GDDR7 — though don't be surprised if lower-tier parts from both companies still opt to stick with GDDR6 for cost reasons.

For either AMD or Nvidia, using GDDR7 at the highest speeds could provide up to 2,304 GB/s of bandwidth on today's widest 384-bit interfaces (twelve devices at 192 GB/s each). Will we actually see such bandwidth? Perhaps not. Nvidia's RTX 40-series GPUs with GDDR6X, for example, all use slightly lower than maximum clocks. Still, we could easily see double the bandwidth with the upcoming architectures.
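The card-level number is the same calculation applied to the whole memory bus. As a rough sketch using only data rates mentioned in this article (the helper name `card_bandwidth_gbs` is made up for illustration):

```python
def card_bandwidth_gbs(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth of a graphics card in GB/s."""
    return data_rate_gbps * bus_width_bits / 8


BUS_WIDTH_BITS = 384  # today's widest consumer GPU memory interface

gddr7_max = card_bandwidth_gbs(48, BUS_WIDTH_BITS)   # GDDR7 spec maximum
gddr6x_max = card_bandwidth_gbs(24, BUS_WIDTH_BITS)  # Micron's top GDDR6X rating

print(f"GDDR7 at 48 Gbps:  {gddr7_max:.0f} GB/s")
print(f"GDDR6X at 24 Gbps: {gddr6x_max:.0f} GB/s")
print(f"Ratio: {gddr7_max / gddr6x_max:.1f}x")
```

Shipping cards tend to run below the maximum rated speed, so real products may land under these peak figures.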

When will those actually arrive? We're not ruling out a potential late-2024 launch. Nvidia's RTX 30-series launched in the fall of 2020, and the RTX 40-series came out in the fall of 2022. AMD's RX 6000-series likewise launched in late 2020, with the RX 7000-series arriving in late 2022. If both keep to the same two-year cadence, we could see GDDR7 graphics cards before the end of the year. But don't get your hopes up, as we still feel early 2025 is more likely.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

ekio:

What we want is the memory directly within the logic silicon.

That would increase perf and efficiency by orders of magnitude.

bit_user:

> GDDR7 will use three levels of signaling (-1, 0, +1) to transmit three bits of data per two cycles.

@JarredWaltonGPU, you explained PAM3 and then mentioned it like it's yet further new feature!

...

> GDDR7 will have double the number of independent channels (four versus two in GDDR6), and it will use PAM3 signaling.

:D

bit_user:

> ekio said: What we want is the memory directly within the logic silicon.

DRAM is typically made on a different process node than logic. I can't say exactly why.

There have been some recent announcements of companies working on hybrid HBM stacks, where you have multiple layers DRAM dies stacked atop a logic die at the bottom. That's probably as close as we're going to get.

> ekio said: That would increase perf and efficiency by orders of magnitude.

Eh, memory bandwidth doesn't seem to be such a bottleneck, for graphics. HBM does tend to be more efficient than GDDR memory, but probably at least 80% of the energy burned by a graphics card is in the GPU die, itself.

And no, you don't get a big latency win by moving the memory closer. Try computing the distance light travels in a single memory clock cycle and then tell me a couple centimeters is going to make any difference.

usertests:

> bit_user said: And no, you don't get a big latency win by moving the memory closer. Try computing the distance light travels in a single memory clock cycle and then tell me a couple centimeters is going to make any difference.

By "memory directly within the logic silicon", I assume ekio means something like 3DSoC, which would have memory layers as close as tens of nanometers from logic. I suppose this is called Processing-near-Memory (PnM).

At some point in the future, we will probably move towards "mega APUs" that move everything including memory into a single 3D package, for stupendous performance and efficiency gains, and indeed lower latency. But it's not coming soon.

bit_user:

> usertests said: At some point in the future, we will probably move towards "mega APUs" that move everything including memory into a single 3D package,

Like Apple's M-series? Even Intel has gotten on board with this, as some Meteor Lake SoCs supposedly have on-package LPDDR5X memory.

> usertests said: for stupendous performance and efficiency gains, and indeed lower latency. But it's not coming soon.

No, latency of HBM is typically worse than conventional DRAM, though I don't know where current & upcoming HBM stands on that front.

LPDDR5 certainly has worse latency than regular DDR5. So far, HBM and LPDDR are the only types of memory to be found on-package.

This level of integration is about energy-efficiency and increasing bandwidth. The one thing it's definitely not about is improving (best case) latency!

usertests:

> bit_user said: Like Apple's M-series? Even Intel has gotten on board with this, as some Meteor Lake SoCs supposedly have on-package LPDDR5X memory.

No, nanometers of distance like I said. What Apple and Intel are getting out of that packaging is nothing compared to what we'll see in the future.

> bit_user said: LPDDR5 certainly has worse latency than regular DDR5. So far, HBM and LPDDR are the only types of memory to be found on-package.

I'm not addressing those types of memory, just interpreting what ekio said which does correspond to something in development.

Instead of "single 3D package", substitute "single 3D chip".

edzieba:

> ekio said: What we want is the memory directly within the logic silicon.
>
> That would increase perf and efficiency by orders of magnitude.

That's called 'cache', it's been around basically as long as ICs have, and certainly longer than GPUs have existed. Throwing more cache at a problem does not necessarily make things faster if cache is not your bottleneck.

ekio:

I know what cache is…

I am talking about full memory on the logic die such as Groq LPUs.

Memory transfers are a huge bottleneck in modern processors.

JarredWaltonGPU:

> bit_user said: @JarredWaltonGPU, you explained PAM3 and then mentioned it like it's yet further new feature!
>
> :D

Oops. Fixed!