TensorWave just deployed the largest AMD GPU training cluster in North America — features 8,192 MI325X AI accelerators tamed by direct liquid-cooling

AI infrastructure company TensorWave has revealed the deployment of a massive 8,192-GPU cluster powered by AMD’s Instinct MI325X accelerators, which it claims is the largest AMD-based AI training installation in North America to date. The system also features direct liquid cooling, making it the first public deployment of its kind at this scale. Announcing the cluster on X, the company showcased photos of its high-density racks with bright orange cooling loops and confirmed that the system is now fully operational.

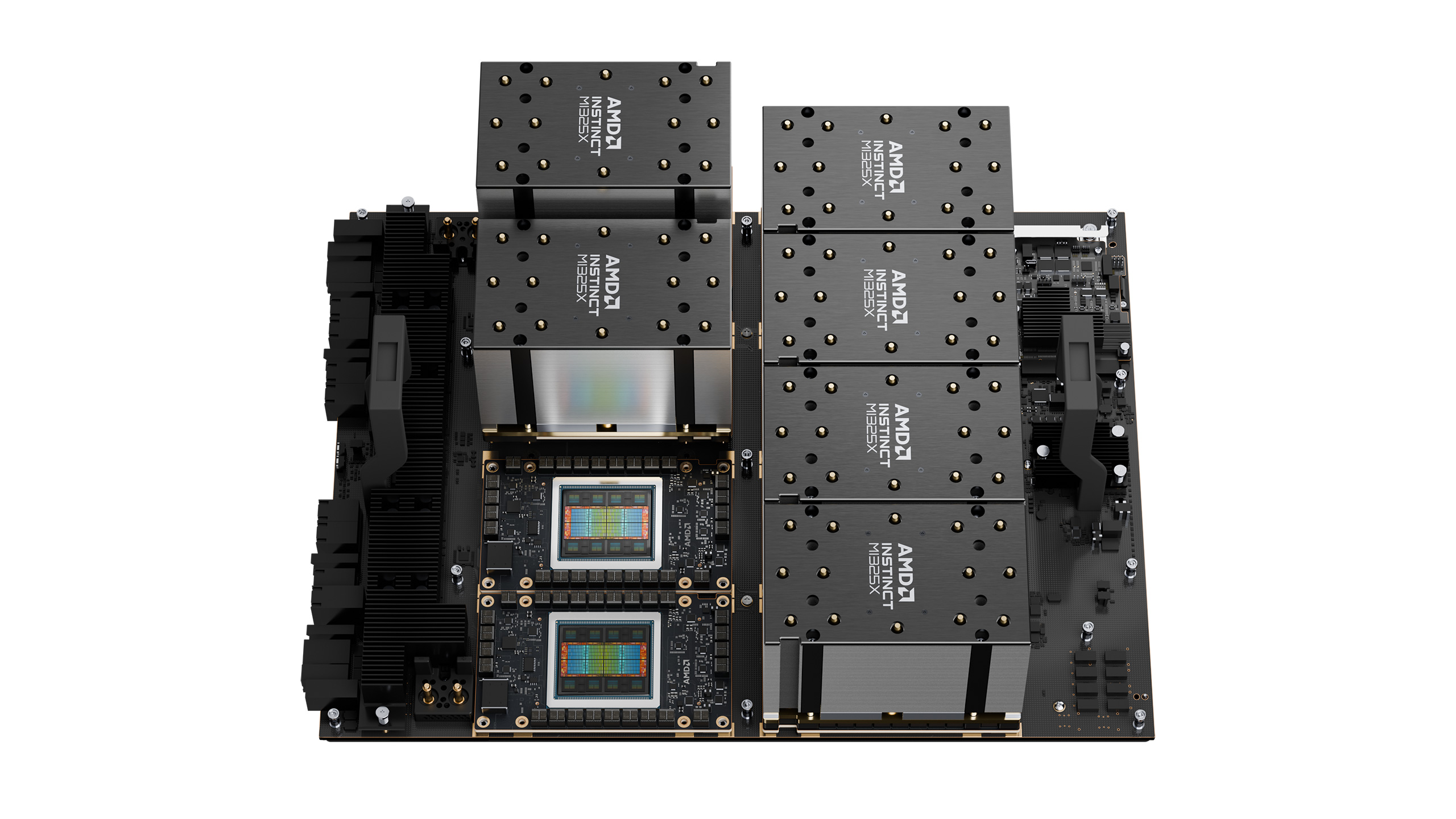

8,192 liquid-cooled MI325X GPUs. The largest AMD GPU training cluster in North America. Built by TensorWave. Ready for what’s next 🌊 pic.twitter.com/RlFY4v2JDu (July 12, 2025)

The AMD Instinct MI325X, officially launched late last year, was the company’s most aggressive attempt to challenge Nvidia in the AI accelerator space until it was succeeded by the MI350X and MI355X last month. Each MI325X features 256GB of HBM3e memory with 6TB/s of bandwidth, along with 2.6 PFLOPS of peak FP8 compute, thanks to its chiplet design with 19,456 stream processors clocked at up to 2.10GHz.

The GPU confidently stands its ground against Nvidia's H200 while being a lot cheaper, but you pay that cost elsewhere: it is limited to 8 GPUs per tightly coupled node, compared to 72 for Nvidia. That's one of the primary reasons it didn't quite take off, and precisely what makes TensorWave’s approach so interesting. Instead of trying to compete on scale per node, TensorWave focused on thermal headroom and density per rack. The entire cluster is built around a proprietary direct-to-chip liquid cooling loop, using bright orange (sometimes yellow?) tubing to circulate coolant through cold plates mounted directly on each MI325X.

At 1,000 watts per GPU, running even a fraction of this hardware takes serious engineering. Thankfully, there are no 16-pin power connectors in sight. In total, the 8,192 GPUs provide about 2 petabytes of HBM3e capacity, roughly 49 petabytes/s of aggregate memory bandwidth, and an estimated 21 exaFLOPS of peak FP8 throughput, though, as always, sustained performance depends heavily on model parallelism (splitting the AI model across GPUs) and interconnect design. TensorWave's business model is cloud capacity for rent, so the real challenge of scaling models falls to the tenants themselves.
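The cluster-wide totals are simply the per-GPU figures quoted in this article multiplied by 8,192. Here is a minimal sketch of that arithmetic, assuming peak vendor numbers and decimal units (1 PB = 1,000,000 GB); the function name `cluster_totals` and the dictionary keys are hypothetical, chosen only for illustration. The power line covers the GPUs alone, not CPUs, networking, or cooling.

```python
# Per-GPU figures for the AMD Instinct MI325X as quoted in the article.
GPU_COUNT = 8_192
HBM_CAPACITY_GB = 256        # HBM3e capacity per GPU
HBM_BANDWIDTH_TBPS = 6.0     # memory bandwidth per GPU, TB/s
FP8_PFLOPS = 2.6             # peak FP8 compute per GPU
GPU_POWER_W = 1_000          # power per GPU


def cluster_totals(gpus: int = GPU_COUNT) -> dict[str, float]:
    """Scale per-GPU peak figures linearly by the GPU count (illustrative only)."""
    return {
        "hbm_capacity_pb": gpus * HBM_CAPACITY_GB / 1e6,        # GB -> PB
        "hbm_bandwidth_pbps": gpus * HBM_BANDWIDTH_TBPS / 1e3,  # TB/s -> PB/s
        "fp8_exaflops": gpus * FP8_PFLOPS / 1e3,                # PFLOPS -> EFLOPS
        "gpu_power_mw": gpus * GPU_POWER_W / 1e6,               # W -> MW
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value:.2f}")
```

These are theoretical peaks that assume perfect linear scaling. Real training runs will land below them, for the parallelism and interconnect reasons noted above.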

This installation follows TensorWave’s $100 million Series A round from May, led by AMD Ventures and Magnetar. Unlike most cloud vendors that build primarily around Nvidia hardware, TensorWave is going all-in on AMD, not just for pricing flexibility, but because it believes ROCm has matured enough for full-scale model training. Of course, Nvidia still dominates the landscape. Its B100 and H200 accelerators are everywhere, from AWS to CoreWeave, and the entire AI boom seems to be held up by them, but this development is a positive sign for AMD's foothold in the AI sector.

TensorWave’s deployment isn’t a one-off, either. According to the team, this is phase one of a much larger rollout, with plans to integrate AMD’s MI350X later this year. That chip, based on CDNA 4, introduces FP4 and FP6 precision support, higher bandwidth ceilings, and more power-hungry designs that could push TDPs up to 1,400W per chip. That is more than air cooling alone can tame, so TensorWave already seems to be on the right track. The ROCm stack still has work to do, but with 8,192 MI325X GPUs already humming away under liquid cooling, AMD finally has the scale to prove it belongs in the same conversation.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

jp7189 This is a great first step, but there are still missing pieces. We need legions of young people just graduating from school that have been tinkering with rocm on their gaming rigs and are willing to put in the extra effort to save a buck. Maybe if amd enables the full rocm stack on everything they make with a GPU and can keep it going for a few years.. then maybe they'll have something. They are working towards it, but they have a ways to go.

The problem is, I don't think they have the bankroll to keep it going that long. As it stands, AMD Ventures is paying TensorWave to buy GPUs from AMD to show design wins and revenue on the AMD datacenter side. That's a very expensive shell game. The snake can only eat its tail for so long.

DS426

jp7189 said: This is a great first step, but there are still missing pieces...

AMD knows and pretty much figures hardware and software won't be directly comparable to nVidia's until MI450X and ROCm of that time in 2026. That said, yeah, kids and adults alike need to be tinkering with this stuff now. The whole ecosystem has changed rapidly enough that anecdote of professors

AMD Ventures doesn't have to keep the shell games going for long -- it's just getting the ground work laid and confidence built in startups like TensorWave.