AMD deploys its first Ultra Ethernet ready network card — Pensando Pollara provides up to 400 Gbps performance

Enabling a zettascale AMD-based AI cluster.

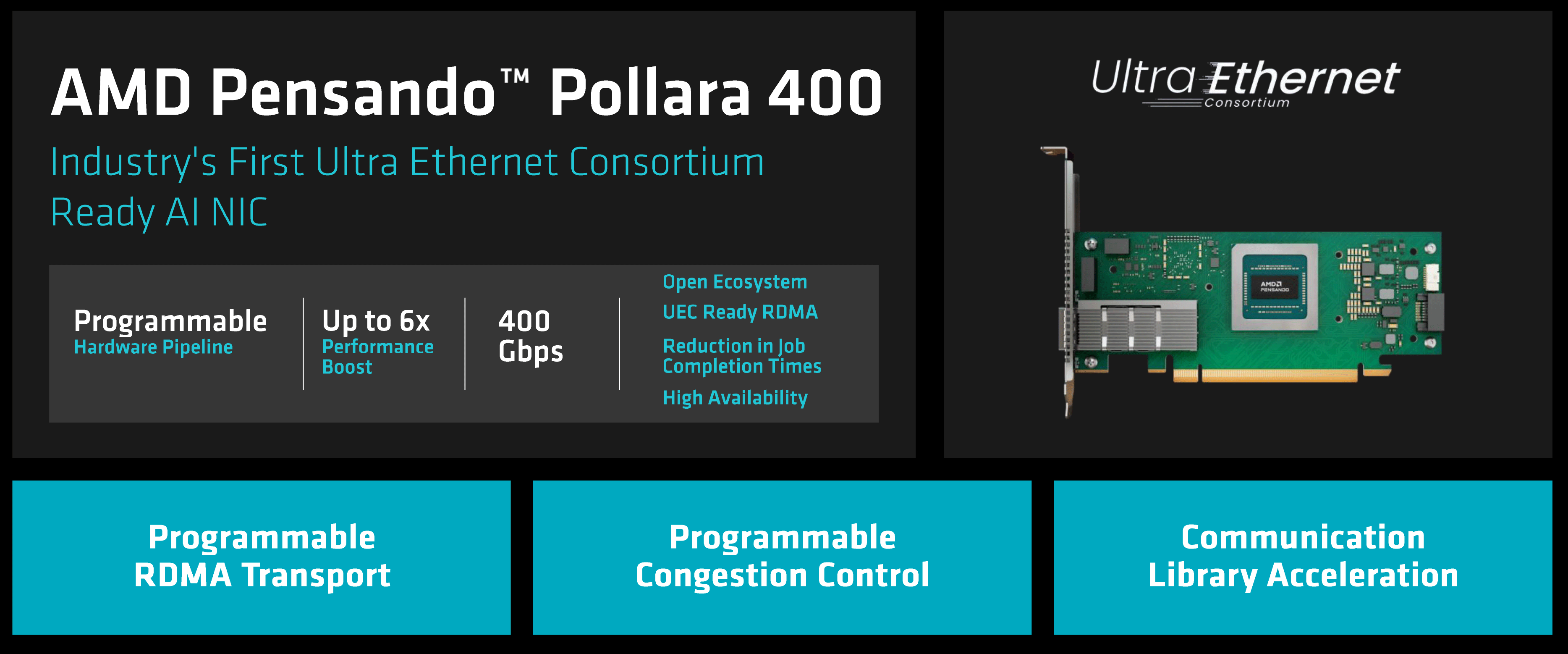

Oracle Cloud Infrastructure will be among the first hyperscalers to deploy AMD's latest Instinct MI350X-series GPUs as well as its Pensando Pollara 400GbE network interface card, which is the industry's first Ultra Ethernet-compliant NIC, AMD disclosed at its Advancing AI event. The announcement comes as the Ultra Ethernet Consortium this week published Specification 1.0 of the Ultra Ethernet technology designed for hyper-scale AI and HPC data centers.

Systems featuring AMD's Instinct MI350X-series GPUs as well as Pensando Pollara 400GbE NICs will be broadly available at OCI and possibly other cloud service providers in the second half of this year, according to the company. The Pensando Pollara 400GbE network cards will be particularly handy for Oracle, which plans to broadly deploy AMD's latest AI GPUs and build a zettascale AI cluster with up to 131,072 Instinct MI355X GPUs so that its customers can train AI models and run inference at a massive scale.

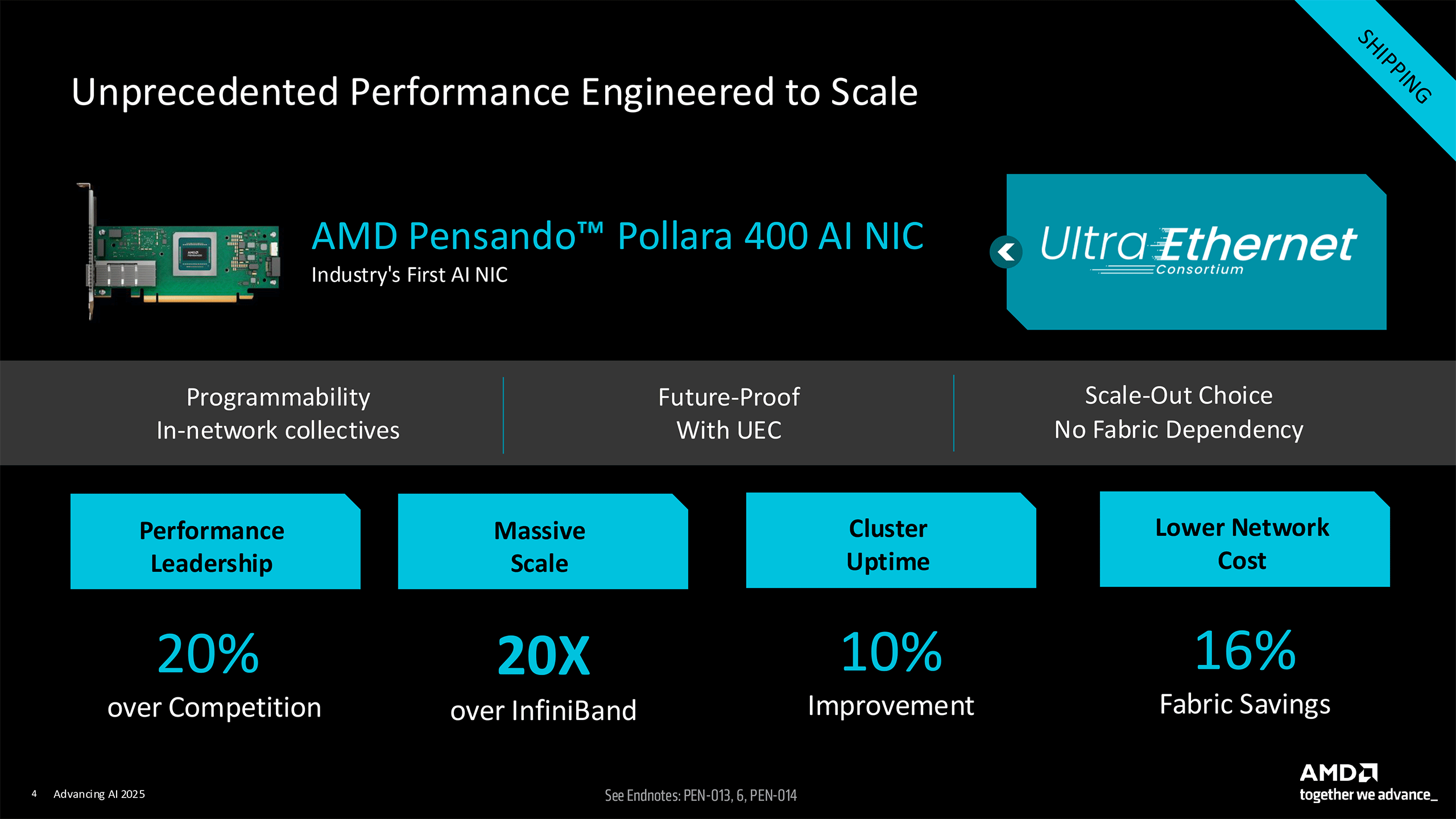

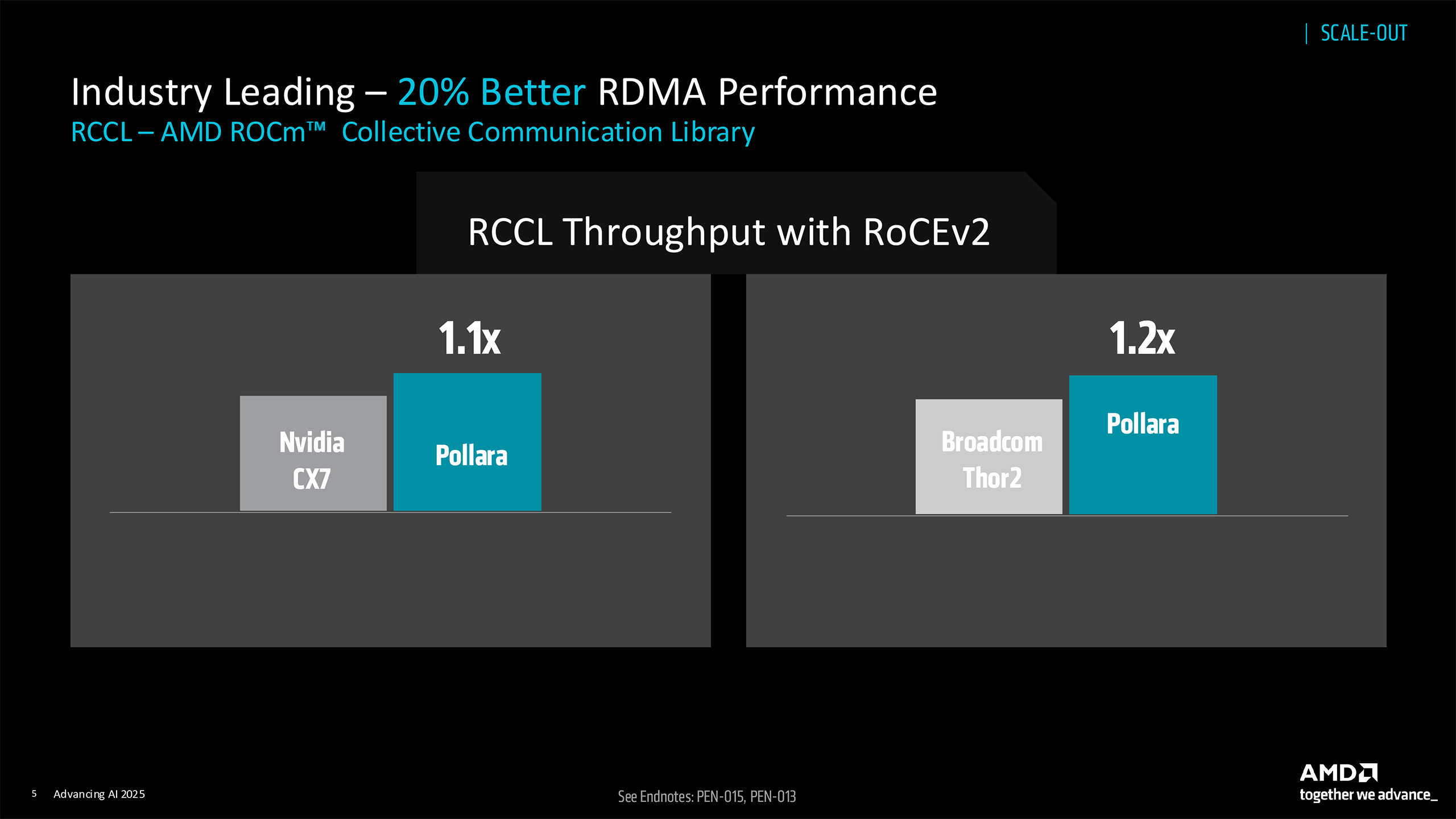

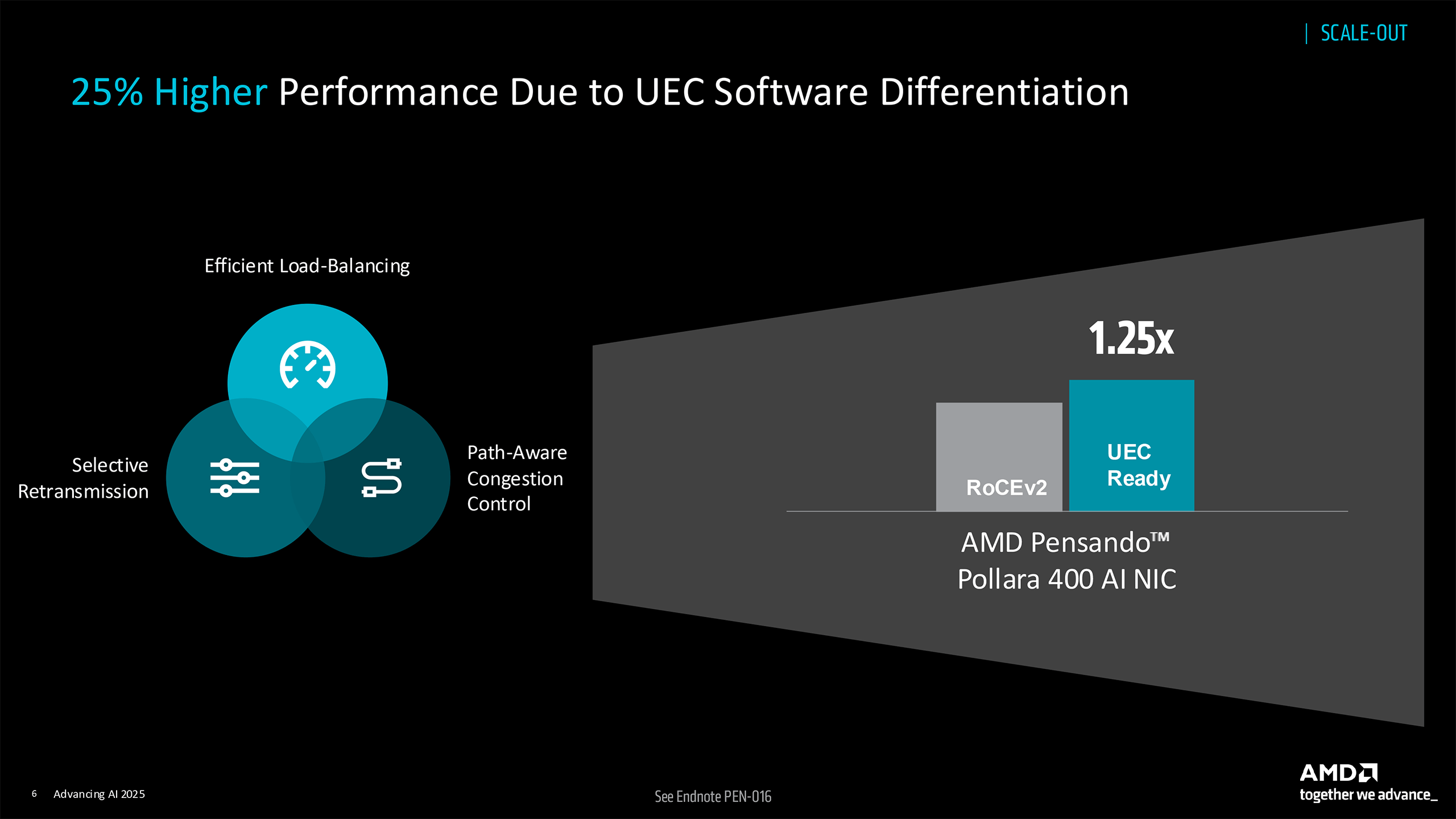

AMD's Pensando Pollara 400GbE NICs, like other Ultra Ethernet-compliant network hardware, are designed for massive scale-out environments containing up to a million AI processors or GPUs, and promise up to a sixfold performance boost for AI workloads. AMD claims that its Pollara 400GbE card offers 10% higher RDMA performance than Nvidia's CX7 and 20% higher RDMA performance than Broadcom's Thor2. In addition, UEC 1.0 features such as efficient load balancing, selective retransmission, and path-aware congestion control can further improve RDMA performance by 25% compared to traditional RoCEv2.
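To give a feel for why selective retransmission matters, here is a minimal Python sketch that counts resent packets under two recovery schemes. It is a toy model, not the UEC 1.0 or Pollara mechanism: the go-back-N baseline, the function names, and the assumption that all packets are sent in a single window and that retransmissions themselves are never lost are all illustrative choices made for this example.

```python
def go_back_n_resends(total_packets: int, lost: set[int]) -> int:
    """Packets resent when the earliest loss forces resending everything after it.

    Assumes packets are numbered 0..total_packets-1 and sent in one window.
    """
    if not lost:
        return 0
    return total_packets - min(lost)


def selective_resends(total_packets: int, lost: set[int]) -> int:
    """Packets resent when only the packets that were actually lost are repeated."""
    return len(lost)


# Hypothetical example: 100 packets in flight, packets 10 and 50 are dropped.
lost_packets = {10, 50}
print(go_back_n_resends(100, lost_packets))
print(selective_resends(100, lost_packets))
```

In this toy scenario the go-back-N baseline repeats most of the window, while the selective scheme repeats only the two dropped packets.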

The Pensando Pollara 400GbE NIC is based on an in-house designed specialized processor with customizable hardware that supports RDMA, adjustable transport protocols, and offloading of communication libraries. The NIC can intelligently split data streams across multiple routes to avoid bottlenecks and dynamically reroute traffic away from overloaded network paths to ensure consistent throughput across large-scale GPU deployments.
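The idea of spreading traffic across routes and steering it away from overloaded paths can be illustrated with a small Python sketch. This is a toy model under stated assumptions, not AMD's algorithm: the `Path` class, the notion of a per-path queue with a fixed capacity, and the "pick the least-loaded path per packet" rule are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str
    capacity: int    # hypothetical queue depth at which the path is saturated
    queued: int = 0  # packets currently queued on this path

    @property
    def load(self) -> float:
        """Fraction of the path's capacity that is currently in use."""
        return self.queued / self.capacity


def pick_path(paths: list[Path]) -> Path:
    """Return the least-loaded path; ties go to the earlier path in the list."""
    return min(paths, key=lambda p: p.load)


def spray(num_packets: int, paths: list[Path]) -> dict[str, int]:
    """Assign each packet to whichever path is least loaded at that moment."""
    assignment = {p.name: 0 for p in paths}
    for _ in range(num_packets):
        path = pick_path(paths)
        path.queued += 1
        assignment[path.name] += 1
    return assignment


# Path C is already 90% full, so new traffic is split between A and B.
paths = [Path("A", 10), Path("B", 10), Path("C", 10, queued=9)]
print(spray(12, paths))
```

A real NIC would work from congestion signals coming back from the network rather than a local queue counter, but the sketch shows the basic effect: traffic is divided among healthy paths and the congested one is avoided.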

In addition, AMD's Pollara 400GbE card features failover technology that rapidly detects failed connections and routes around them to preserve high-speed GPU-to-GPU links. Such capabilities are crucial for maintaining cluster utilization and reducing latencies in environments with tens of thousands of interconnected accelerators.

While Oracle will be the first big hyperscaler to deploy AMD's Pollara 400GbE NICs (as it will probably own the largest AMD Instinct MI355X-based cluster), other companies that plan large-scale AMD Instinct deployments will follow soon, popularizing adoption of Ultra Ethernet gear. The cards are currently shipping to interested parties.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


artk2219: Excellent for datacenter use, home users have to wait a bit, switches for this start at a super affordable $25,000 a piece :D

SonoraTechnical:

artk2219 said: "Excellent for datacenter use, home users have to wait a bit, switches for this start at a super affordable $25,000 a piece :D"

yeah, but I can still use my existing Cat5e in my house, right? ;)

Stomx: Petascale, zettascale, clusters of 100,000 processors... OK, there exist two- or four-processor motherboards where all processors work in parallel on the same task. Can anyone in the entire country actually connect just one more motherboard to the existing one so they work as a minimal cluster in parallel, instead of reading the tales and salivating over Elon's future one-million-GPU clusters? Show that anyone here, at least one out of 300 million, or one out of 8 billion, can do anything with their own hands.

Misgar: 100Gb/s is becoming more affordable for home users, but obviously nowhere near as cheap as 1GbE.

https://www.servethehome.com/mikrotik-crs504-4xq-in-review-momentus-4x-100gbe-and-25gbe-desktop-switch-marvell/

https://www.amazon.co.uk/MikroTik-CRS504-4XQ-IN/dp/B0B34Y1D6P

If you don't need 100Gb/s, there's always 40Gb/s or 25Gb/s. I've been running a mixture of 10GbE and SFP+ OM3 on Netgear and MikroTik switches since 2018.

Second-hand 10G SFP+ server cards can be picked for a few tens of dollars on eBay.

bit_user:

Misgar said: "Second-hand 10G SFP+ server cards can be picked for a few tens of dollars on eBay."

I have two of these, but they have PCIe 2.0 x8 interfaces. All of the cheap ones do. So, you'd better have either an x8 or x16 slot to spare or an open-ended x4. Be aware that consumer motherboards with multiple x16 slots will typically reduce the primary x16 slot to just x8 if you populate the others.

I've seen PCIe 3.0 x4 versions, but they're in much higher demand and priced correspondingly.

thestryker:

bit_user said: "I have two of these, but they have PCIe 2.0 x8 interfaces."

I can only speak for Intel 520s, but they work perfectly fine in an x4 slot so long as you're only using one port (just need to be willing to Dremel an x4 slot if needed!). I did move on to 710s when I built my new server and primary machine though, because I still wanted full bandwidth and client platform lanes <insert rant here>.

bit_user:

thestryker said: "I can only speak for Intel 520s, but they work perfectly fine in x4 slot so long as you're only using one port"

x4 would work, if it's open-ended. The cards I'm talking about are physically x8, so they need either an open-ended slot or one that's at least x8 in length.

As for how much bandwidth you actually need, PCIe 2.0 has roughly 500 MB/s (4 Gb/s) of throughput per lane, so four lanes should be plenty for running a single port @ 10 Gb/s. I'm not aware of a PCIe card that won't work, at all, in fewer lanes than it was designed for. So, put it in a slot that has just one lane connected, and 4 Gb/s is what I'd expect to achieve.
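The per-lane arithmetic above can be checked with a short Python sketch. It uses the standard PCIe transfer rates and line encodings (5 GT/s with 8b/10b for PCIe 2.0, 8 GT/s with 128b/130b for PCIe 3.0) and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names and the `ports` parameter are illustrative assumptions, not part of any tool.

```python
# generation: (giga-transfers per second per lane, encoding efficiency)
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),     # 8b/10b line encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b line encoding
}


def link_gbps(generation: str, lanes: int) -> float:
    """Approximate usable link bandwidth in Gb/s, before protocol overhead."""
    rate, efficiency = PCIE_GENERATIONS[generation]
    return rate * efficiency * lanes


def can_sustain(generation: str, lanes: int, port_gbps: float, ports: int = 1) -> bool:
    """True if the link is at least as fast as the combined line rate of the ports."""
    return link_gbps(generation, lanes) >= port_gbps * ports


for lanes in (1, 4, 8):
    print(f"PCIe 2.0 x{lanes}: {link_gbps('2.0', lanes):.1f} Gb/s")

print(can_sustain("2.0", 4, 10))           # one 10 Gb/s port on four lanes
print(can_sustain("2.0", 4, 10, ports=2))  # two 10 Gb/s ports on four lanes
```

Under these assumptions, four PCIe 2.0 lanes cover one 10 Gb/s port but not two at full line rate, and a single lane tops out at about 4 Gb/s.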

thestryker said: "(just need to be willing to Dremel an x4 slot if needed!)."

Sometimes, there are other components in the way, once you go past the end of a shorter slot. I have a B650 board that's quite the opposite situation, where it has 3 slots that are physically PCIe 4.0 x16, but only have x1 lane active!

thestryker said: "I did move on to 710s when I built my new server and primary machine though because I still wanted full bandwidth and client platform lanes <insert rant here>."

I got a server board with built-in 10 Gb/s NICs, because it's micro-ATX form factor and has just 3 slots. If you put a 2.5-slot-wide graphics card in the x16 slot, that'd be your only PCIe card! I don't have any plans to do that, but the weird decision they made was to put the primary slot at the top. So, even a 2-wide card would block the middle x4 slot and force you to either run it at x8 or forego the 3rd slot.

So, I reasoned that it's not a good move to plan on using a network card in there.

thestryker:

bit_user said: "I got a server board with built-in 10 Gb/s NICs"

That would have been my ideal, but LGA 1700 boards all had pretty big compromises so it wasn't a realistic option.

bit_user said: "Sometimes, there are other components in the way, once you go past the end of a shorter slot. I have a B650 board that's quite the opposite situation, where it has 3 slots that are physically PCIe 4.0 x16, but only have x1 lane active!"

That would drive me nuts!