Nvidia shares Blackwell Ultra's secrets — NVFP4 boost detailed and PCIe 6.0 support

Nvidia offers an inside look at the ultra-efficient upcoming architecture

Nvidia and its partners began shipping and deploying systems based on the company's Blackwell Ultra architecture a little while ago, namely the GB300 (with Grace CPUs) and B300 (with x86 CPUs). Now, at the Hot Chips 2025 conference, the company revealed some additional information about both the underlying architecture and the 'Blackwell Ultra' implementation of it.

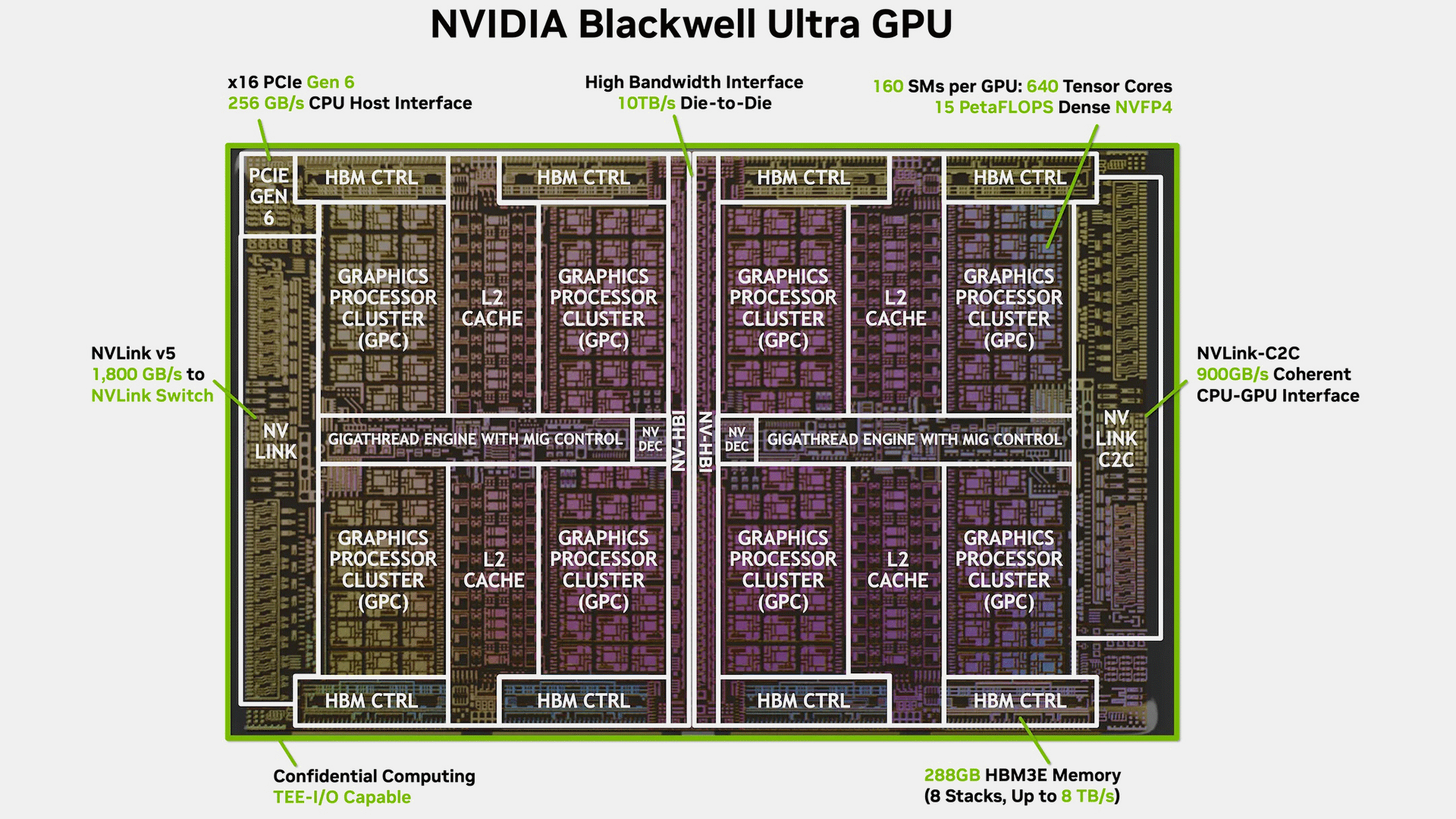

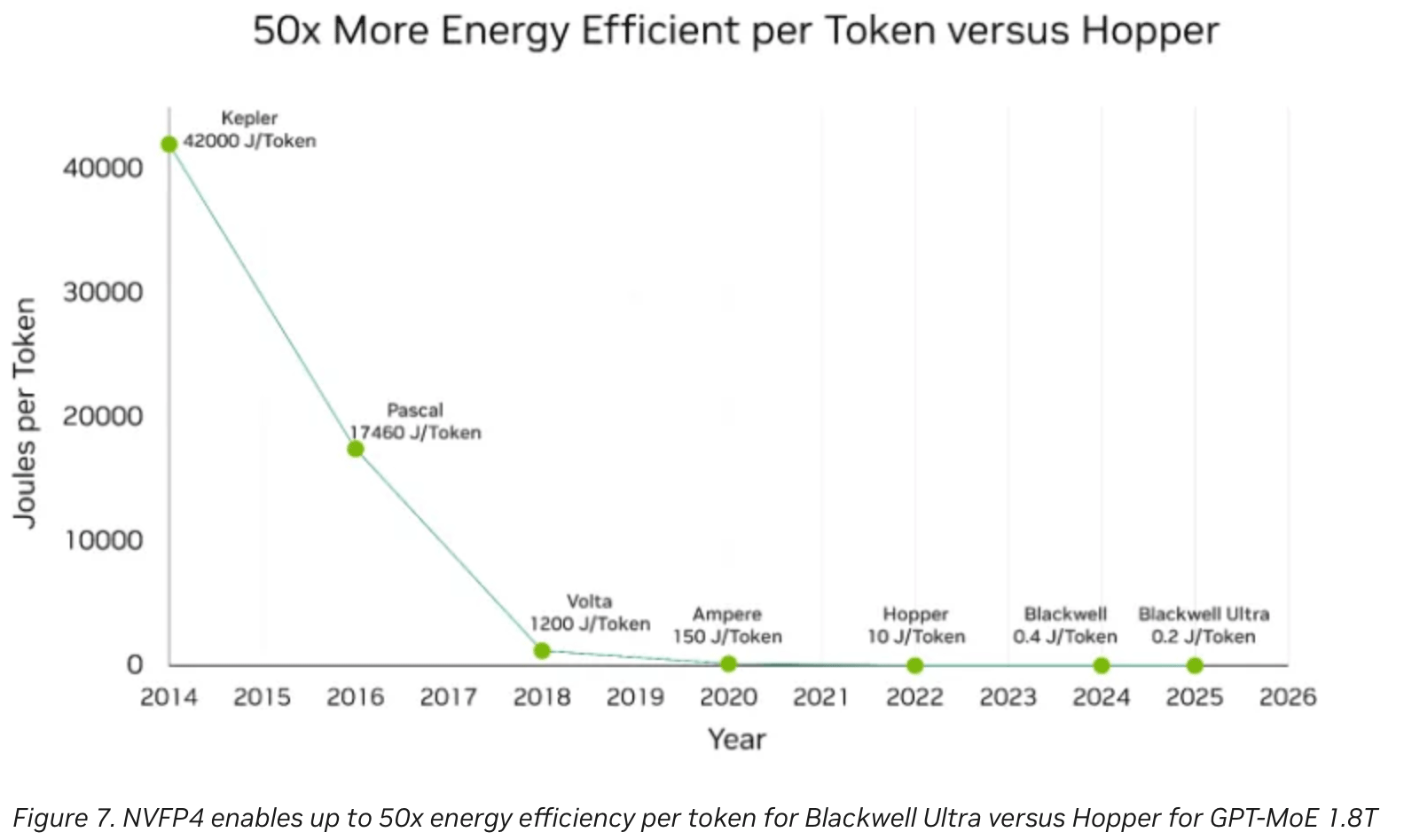

In general, Nvidia's Blackwell-based B100/B200 and Blackwell Ultra-based B300 GPUs are very similar. However, the Blackwell Ultra B300 series adds Tensor cores optimized for the NVFP4 data format, which deliver up to 50% more dense NVFP4 throughput (15 vs. 10 PFLOPS per package) at the cost of INT8 and FP64 performance. It also carries 288 GB of HBM3E memory (up from 192 GB) and officially supports PCIe 6.x for the host CPU link (vs. PCIe 5.x). These changes come with a 200W higher TDP: 1,400W vs. 1,200W.

| Architecture | Blackwell | Blackwell Ultra |
| --- | --- | --- |
| GPU | B200 | B300 (Ultra) |
| Process Technology | 4NP | 4NP |
| Physical Configuration | 2 x Reticle Sized GPUs | 2 x Reticle Sized GPUs |
| Packaging | CoWoS-L | CoWoS-L |
| FP4 PFLOPs (per-package) | 10 | 15 |
| FP8/FP6 PFLOPs (per-package) | 4.5 | 10 |
| INT8 PFLOPs (per-package) | 4.5 | 0.319 |
| BF16 PFLOPs (per-package) | 2.25 | 5 |
| TF32 PFLOPs (per-package) | 1.12 | 2.5 |
| FP32 PFLOPs (per-package) | 1.12 | 0.083 |
| FP64/FP64 Tensor TFLOPs (per-package) | 40 | 1.39 |
| Memory | 192 GB HBM3E | 288 GB HBM3E |
| Memory Bandwidth | 8 TB/s | 8 TB/s |
| HBM Stacks | 8 | 8 |
| PCIe | PCIe 5.x at 64 GB/s | PCIe 6.x at 128 GB/s |
| NVLink | NVLink 5.0, 200 GT/s | NVLink 5.0, 200 GT/s |
| GPU TDP | 1200 W | 1400 W |
| CPU | 72-core Grace | 72-core Grace |
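
The PCIe figures in the table can be reproduced from the per-lane signaling rate. The following is a minimal sketch, assuming an x16 link and the per-lane rates from the PCIe specifications (32 GT/s for PCIe 5.x and 64 GT/s for PCIe 6.x, which the table itself does not state). It ignores encoding and protocol overhead, and the helper name is hypothetical.

```python
def pcie_raw_gb_per_s(gt_per_s: float, lanes: int = 16) -> float:
    """Raw one-direction bandwidth in GB/s for a PCIe link.

    Assumes each transfer carries one bit per lane and ignores
    encoding, FLIT, and protocol overhead, so real throughput is lower.
    """
    return gt_per_s * lanes / 8  # 8 bits per byte


pcie5 = pcie_raw_gb_per_s(32)  # PCIe 5.x: 32 GT/s per lane (assumed from the spec)
pcie6 = pcie_raw_gb_per_s(64)  # PCIe 6.x: 64 GT/s per lane (assumed from the spec)
print(f"PCIe 5.x x16: {pcie5:.0f} GB/s per direction")
print(f"PCIe 6.x x16: {pcie6:.0f} GB/s per direction")
```

Under these assumptions, the table's 64 GB/s and 128 GB/s values are per-direction figures, so the total across both directions would be twice as high.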

NVFP4: A hardware-accelerated proprietary data format

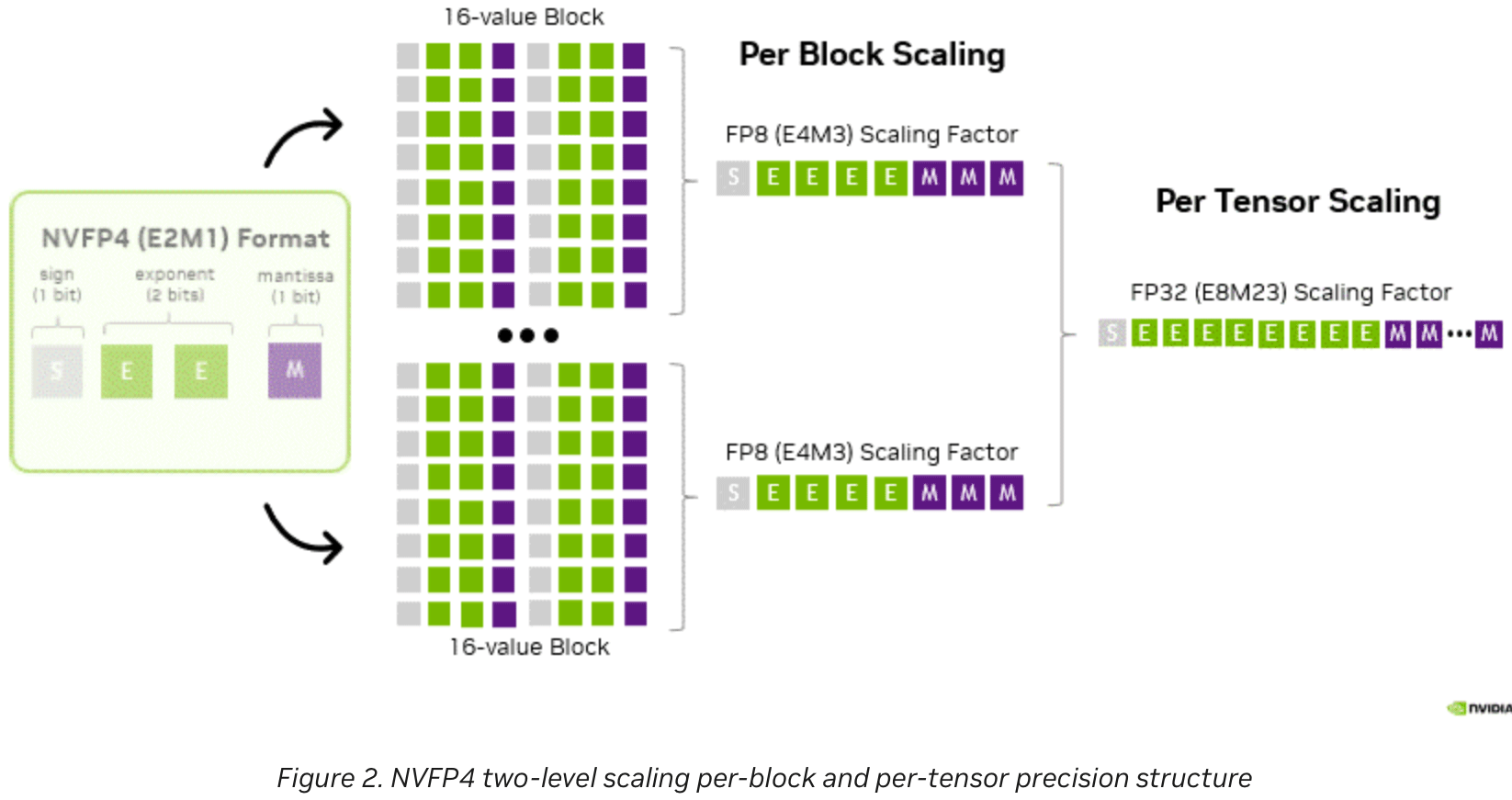

When Nvidia first introduced its Blackwell processors in early 2024, it disclosed that they all support an FP4 data format, which could be useful both for AI inference and for AI pretraining. FP4 is the 'smallest' floating-point format that still follows IEEE 754-style conventions (1-bit sign, 2-bit exponent, and 1-bit mantissa), providing more flexibility than INT4 (a plain four-bit integer) while requiring less compute capability than the FP8 or FP16 formats. However, Nvidia's Blackwell and Blackwell Ultra do not use a standard FP4, but Nvidia's proprietary NVFP4 format.

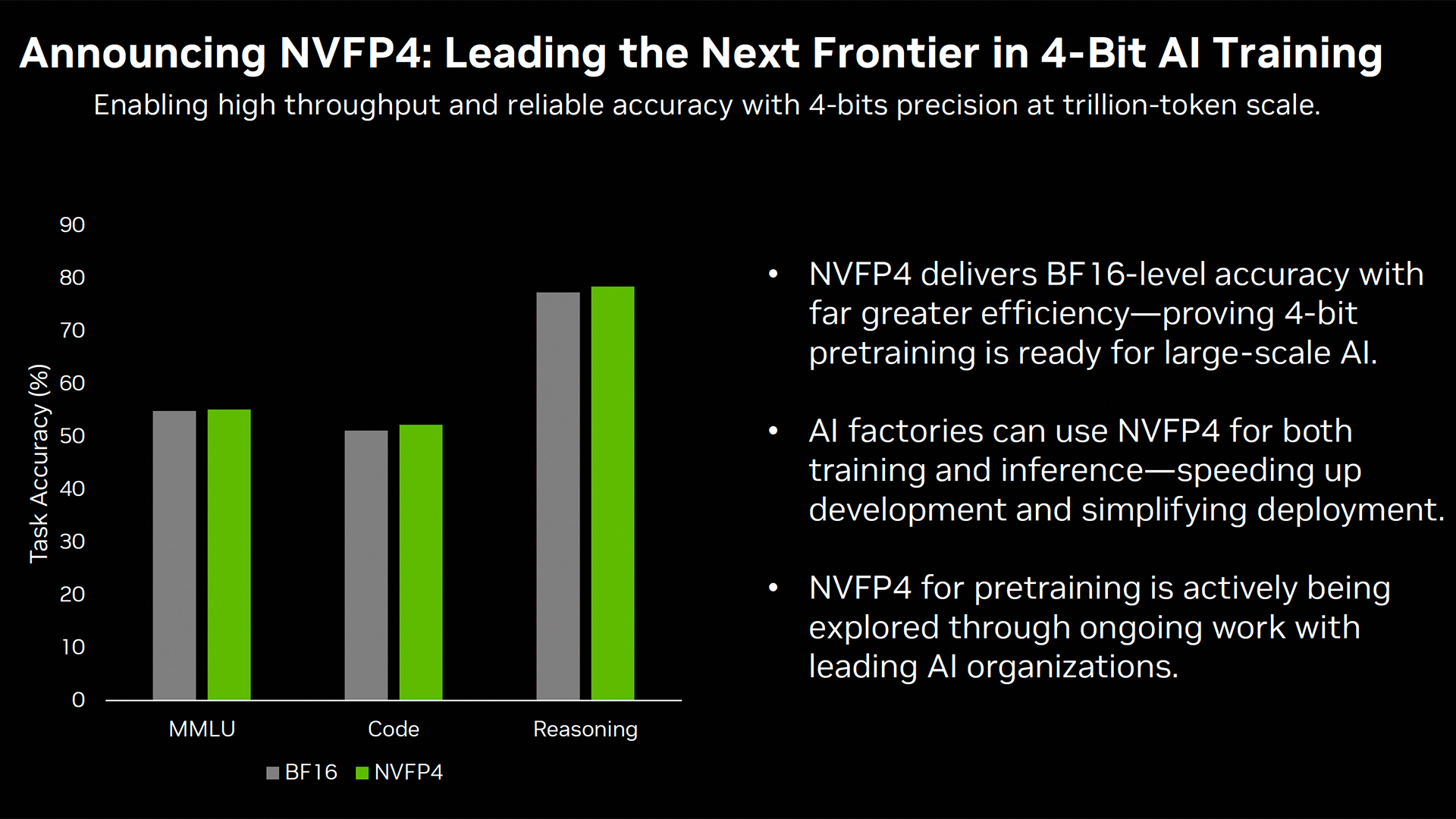

NVFP4 is a custom 4-bit floating-point format designed by Nvidia for its Blackwell processors to increase the power efficiency of both training and inference workloads. The format combines compact encoding with multi-level scaling. According to Nvidia, this yields results close to BF16 accuracy while improving performance and reducing memory use.

Like 'traditional' FP4, Nvidia's NVFP4 uses a compact E2M1 layout — 1-bit sign, 2-bit exponent, and 1-bit mantissa — to provide a numerical span between roughly -6 and +6. However, to address the limited dynamic range of such a small format, Nvidia added a dual scaling approach: each group of 16 FP4 values is assigned a scale factor stored in FP8 (E4M3), while a global FP32-based factor is applied to the entire tensor. Nvidia says that this two-tier system keeps numerical noise low without losing the performance efficiency that four bits offers.
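
The two-level scaling described above can be illustrated with a short NumPy sketch. This is not Nvidia's implementation: the function names are hypothetical, the way the scales are chosen (mapping each block's largest magnitude to 6 and the largest block scale to the E4M3 maximum of 448) is an assumption, and the block scale is kept in ordinary floating point rather than actually rounded to FP8.

```python
import numpy as np

# Magnitudes representable in E2M1 (1 sign bit, 2 exponent bits, 1 mantissa bit).
E2M1_GRID = np.array([0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0])
BLOCK = 16        # values sharing one block scale, as described in the article
E4M3_MAX = 448.0  # largest finite FP8 E4M3 value


def quantize_nvfp4_like(x):
    """Quantize to E2M1 values with a per-block scale and a global scale.

    Simplification (assumption): the block scale is NOT rounded to FP8 here.
    """
    blocks = np.asarray(x, dtype=np.float32).reshape(-1, BLOCK)
    tensor_amax = float(np.abs(blocks).max())
    # Global scale chosen so the largest block scale lands near E4M3_MAX.
    global_scale = tensor_amax / (6.0 * E4M3_MAX) if tensor_amax > 0 else 1.0
    block_amax = np.abs(blocks).max(axis=1, keepdims=True)
    block_scale = block_amax / (6.0 * global_scale)
    step = block_scale * global_scale
    step = np.where(step == 0, 1.0, step)  # avoid dividing by zero in all-zero blocks
    scaled = blocks / step
    # Round each magnitude to the nearest representable E2M1 value.
    idx = np.abs(np.abs(scaled)[..., None] - E2M1_GRID).argmin(axis=-1)
    q = np.sign(scaled) * E2M1_GRID[idx]
    return q, block_scale, global_scale


def dequantize(q, block_scale, global_scale):
    """Reconstruct approximate values from the 4-bit codes and both scales."""
    return q * block_scale * global_scale


rng = np.random.default_rng(0)
x = rng.normal(size=64).astype(np.float32)
q, block_scale, global_scale = quantize_nvfp4_like(x)
x_hat = dequantize(q, block_scale, global_scale).reshape(x.shape)
print("max abs error:", float(np.abs(x_hat - x).max()))
```

Because each block of 16 values gets its own scale, one large outlier only coarsens the resolution of its own block rather than of the whole tensor.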

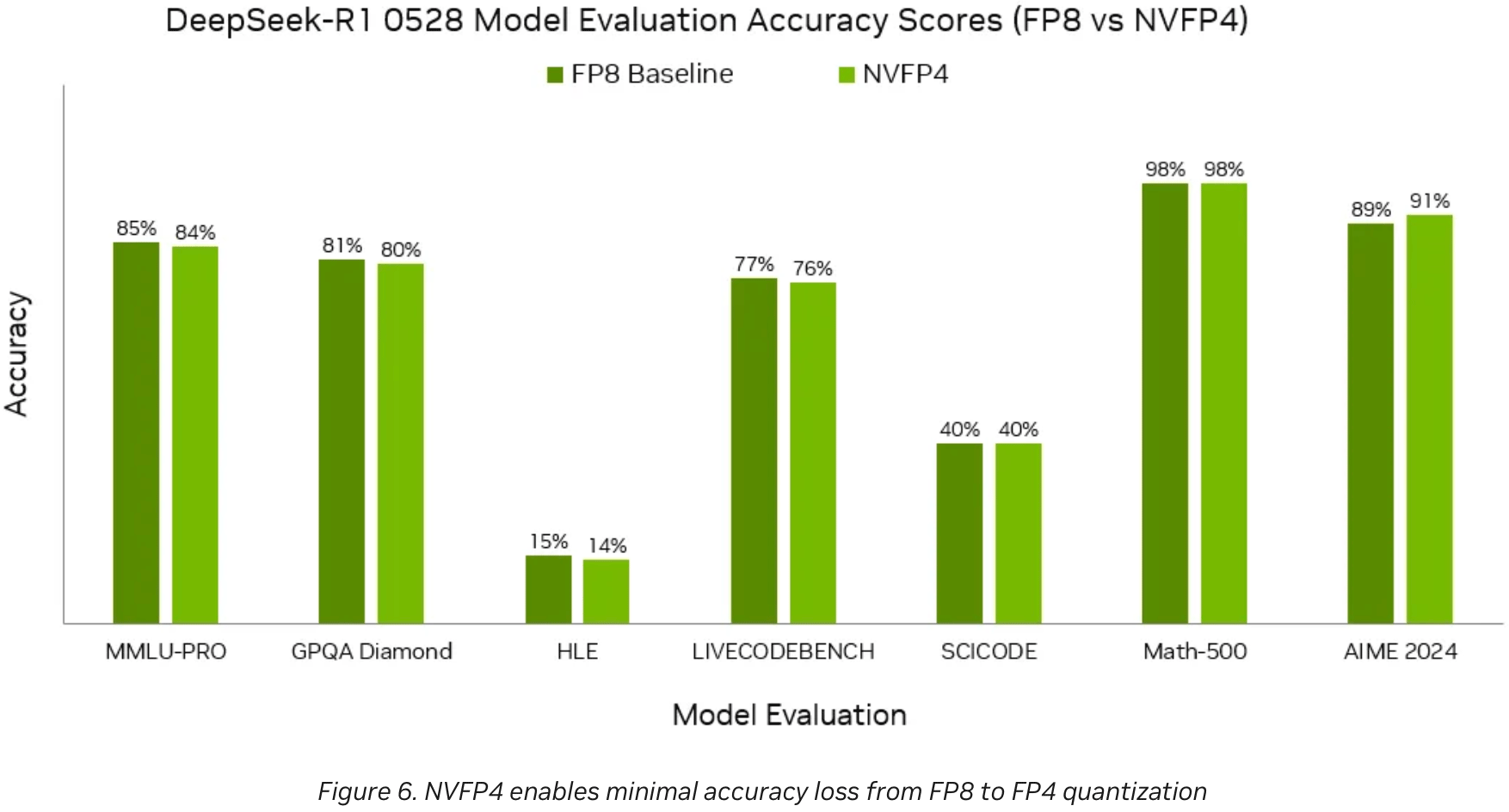

Regarding accuracy, Nvidia's internal results claim that deviations versus FP8 are generally under 1%, and that in many workloads results can even improve because smaller blocks adapt more closely to value distributions. Memory requirements are also claimed to shrink significantly: around 1.8 times lower than FP8 and as much as 3.5 times lower than FP16, which cuts storage and data-movement overhead across NVLink and NVSwitch fabrics. For developers building large clusters, this means larger batch sizes and longer sequences can fit within the same hardware limits.
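
One plausible way to arrive at savings of this size is to count the bits stored per value, including the share of the per-block scale. The sketch below is an assumption about the accounting, not Nvidia's published derivation: it charges each value 4 bits plus 1/16 of an 8-bit block scale and treats the single FP32 global scale as negligible. The helper name is hypothetical.

```python
def bits_per_value(element_bits: float, scale_bits: float = 0, block_size: int = 1) -> float:
    """Average storage per value when a scale is shared by `block_size` values."""
    return element_bits + scale_bits / block_size


nvfp4 = bits_per_value(4, scale_bits=8, block_size=16)  # 4 + 8/16 bits
fp8 = bits_per_value(8)
fp16 = bits_per_value(16)

print(f"NVFP4 bits per value: {nvfp4}")
print(f"FP8 / NVFP4:  {fp8 / nvfp4:.2f}x")
print(f"FP16 / NVFP4: {fp16 / nvfp4:.2f}x")
```

Under these assumptions the ratios come out close to the quoted figures, which suggests the gap to an ideal 2x and 4x reduction is the cost of the block scales.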

Inference and training workloads

The majority of Nvidia's marketing materials about Blackwell data center GPUs demonstrate strong performance of B200 and B300 processors in inference compared to predecessors. Nvidia's tests with the OpenAI GPT-OSS 120B model on B200 Blackwell GPUs claim up to four times faster interactivity with no trade-off in throughput. With the DeepSeek-R1 671B model deployed on a GB200 NVL72 rack, throughput per processor allegedly increased by 2.5 times, without raising inference cost. With demand rising for faster reasoning models, where token latency is as critical as overall capacity, Blackwell appears to deliver, so long as Nvidia's claims hold up in real-world usage.

However, NVFP4 is not limited to inference; Nvidia presents it as the first 4-bit floating-point format viable for pretraining at a trillion-token scale. Early experiments with a 7-billion-parameter model trained on 200 billion tokens are claimed to have results comparable to BF16. This is made possible by applying stochastic rounding in the backpropagation and update steps, while using round-to-nearest in the forward pass. As a result, NVFP4 is not only a great deployment enhancement for inference, but also potentially a viable format for the entire AI lifecycle. This could mean significant cost and energy savings for hyperscale AI data centers.
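
The difference between the two rounding modes mentioned above can be shown with a small NumPy sketch. It uses a uniform grid with a hypothetical `step` parameter for simplicity, whereas the real E2M1 grid is non-uniform, and it is not taken from Nvidia's training recipe. The function names are illustrative.

```python
import numpy as np


def round_nearest(x, step):
    """Deterministic rounding to the closest multiple of `step`."""
    return np.round(np.asarray(x) / step) * step


def round_stochastic(x, step, rng):
    """Round up or down at random, with probability set by the distance.

    A value 30% of the way to the next grid point rounds up 30% of the
    time, so the expected result equals the input (unbiased on average).
    """
    scaled = np.asarray(x) / step
    lower = np.floor(scaled)
    frac = scaled - lower  # position between the two grid points, in [0, 1)
    round_up = rng.random(scaled.shape) < frac
    return (lower + round_up) * step


rng = np.random.default_rng(0)
small_updates = np.full(100_000, 0.3)  # many updates smaller than half a step

print("round-to-nearest mean:", round_nearest(small_updates, 1.0).mean())
print("stochastic mean:      ", round_stochastic(small_updates, 1.0, rng).mean())
```

Round-to-nearest discards every update that is smaller than half a grid step, while stochastic rounding preserves such updates on average. That property is a common argument for using it on gradients and weight updates at very low precision.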

Integrated into open source frameworks

Although NVFP4 is proprietary, Nvidia is embedding it in open libraries and releasing pre-quantized models. Libraries and tools such as CUTLASS (GPU kernel templates), NCCL (multi-GPU communication), and the TensorRT Model Optimizer already support NVFP4. Meanwhile, higher-level frameworks such as NeMo, PhysicsNeMo, and BioNeMo extend these capabilities to large language, physics-informed, and life sciences models. NVFP4 is also supported in the Nemotron reasoning LLM, the Cosmos physical AI model, and the Isaac GR00T vision-language-action model for robotics.

Only available on Nvidia hardware with a 50% boost with Blackwell Ultra

Although NVFP4 provides numerous benefits for inference and training, and is being integrated into open source frameworks, the format is currently only supported by Nvidia. It's unlikely that NVFP4 will be supported by other independent hardware vendors (IHVs). This can reduce its appeal to developers (particularly among hyperscalers) who strive to build models that can work on a broad range of hardware.

Nvidia understands this issue and points out that NVFP4 is supported across a broad range of Blackwell processors, not only by data center hardware. In addition to B100/B200 and B300 processors for servers, the company's GB10 solution for DGX Spark machines and the GeForce RTX 5090 fully support NVFP4; however, Nvidia didn't mention whether this is true for all GB202-based products.

Despite this, only Nvidia's B300 GPUs feature NVFP4-optimized Tensor cores, which significantly boost NVFP4 performance at the cost of performance in other formats, such as INT8 and FP64.

The first official GPU to support PCIe Gen6

In addition to the 50% NVFP4 boost and 288 GB of HBM3E memory, Nvidia's Blackwell Ultra is officially the first data center GPU to support a PCIe 6.x connection to a host CPU, although Grace is currently the only processor with this capability. PCIe 6.0 raises bandwidth to 128 GB/s in each direction per x16 slot, thanks to PAM4 signaling and FLIT-based encoding, which matters for AI servers and AI clusters. By doubling the speed at which the GPU gets data from the CPU, SSD, or NIC, PCIe 6.0 should lift the performance of the whole cluster, an effect that is hard to overstate. Unfortunately, Nvidia has not disclosed other PCIe 6.x-related performance enhancements, but they should be quite substantial. All of these details make Blackwell Ultra appear to be a performant chip for AI and data center workloads, with NVFP4 offering yet another attractive reason for hyperscalers to choose Nvidia over other IHVs.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.