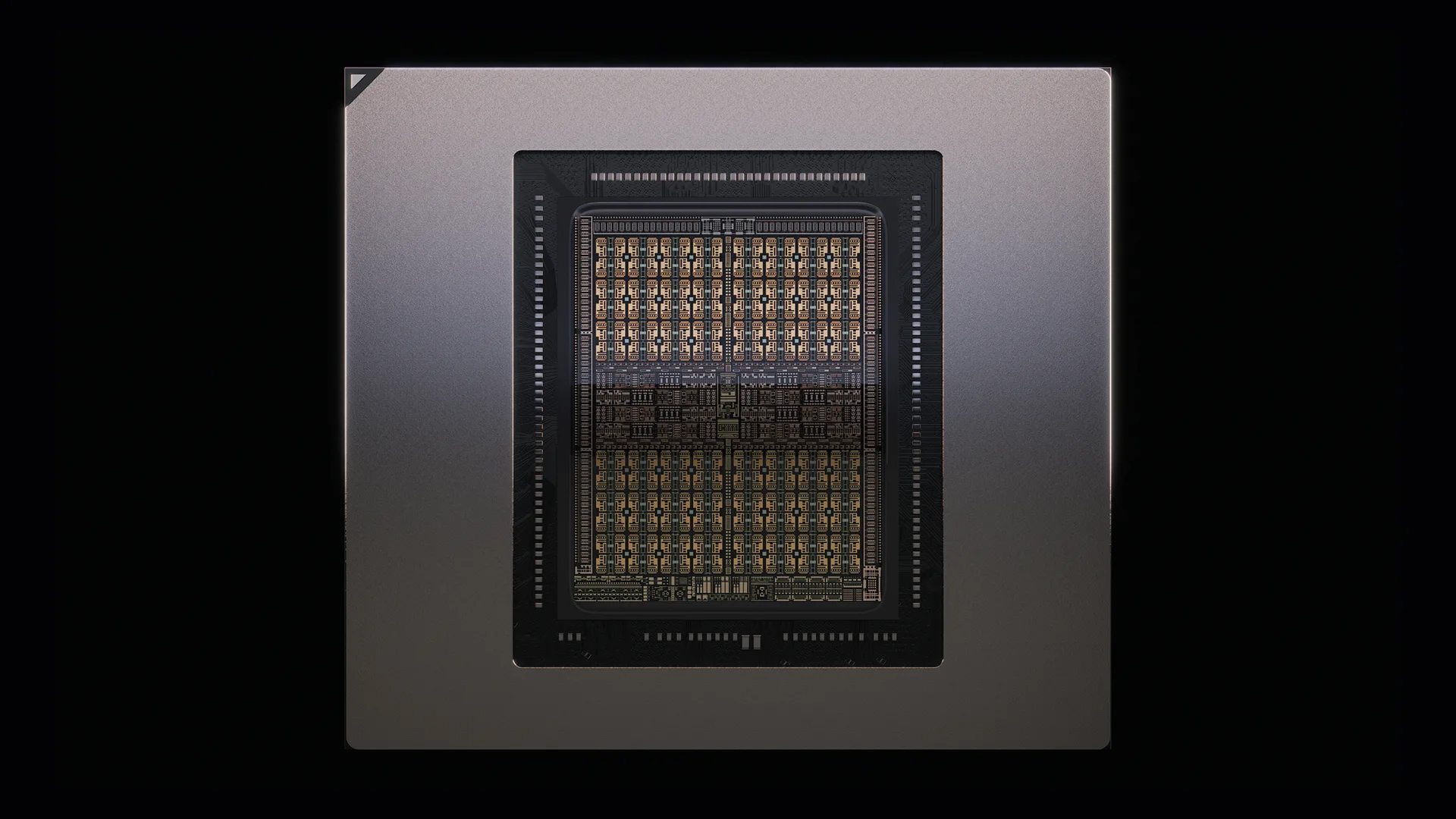

Nvidia wants 10Gbps HBM4 to blunt AMD’s MI450, report claims — company said to be pushing suppliers for more bandwidth

Nvidia’s Rubin platform bets big on 10Gb/s HBM4, but speed brings supply risk, and AMD’s MI450 is around the corner.

Nvidia is pressing its memory vendors to push beyond JEDEC’s official HBM4 baseline. According to TrendForce, the company has requested 10Gb/s-per-pin stacks for its 2026 Vera Rubin platform, a move designed to raise per-GPU bandwidth ahead of AMD’s next-generation MI450 Helios systems.

At 8Gb/s per pin, the rate JEDEC specifies for HBM4, a single stack delivers roughly 2 TB/s (2,048 GB/s) across the new 2,048-bit interface. Raising that to 10Gb/s bumps the total to 2.56 TB/s per stack. With six stacks, a single GPU clears 15 TB/s of raw bandwidth. Rubin CPX, Nvidia's compute-optimized config built to handle the most demanding inference workloads, is advertised with 1.7 petabytes per second across a full NVL144 rack. The higher the pin speed, the less margin Nvidia needs elsewhere to hit those numbers.
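The per-stack and per-GPU figures follow from simple arithmetic: pin rate times interface width, divided by eight bits per byte. Below is a minimal Python sketch, assuming decimal units (1 TB/s = 1,000 GB/s) and the six-stack configuration cited above; the function names are hypothetical helpers for illustration, not any vendor's tooling.

```python
# Peak HBM bandwidth from pin rate and interface width.
# Assumptions (illustrative only): decimal units, a 2,048-bit HBM4
# interface per stack, and six stacks per GPU as cited in the article.

HBM4_INTERFACE_BITS = 2048  # data pins per HBM4 stack


def stack_bandwidth_gbs(pin_rate_gbps: float, width_bits: int = HBM4_INTERFACE_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: pins x Gb/s per pin / 8 bits per byte."""
    return pin_rate_gbps * width_bits / 8


def gpu_bandwidth_tbs(pin_rate_gbps: float, stacks: int) -> float:
    """Aggregate raw bandwidth across all stacks, in TB/s (decimal)."""
    return stack_bandwidth_gbs(pin_rate_gbps) * stacks / 1000


if __name__ == "__main__":
    # Compare the JEDEC baseline pin rate with the reported 10Gb/s request.
    for rate in (8.0, 10.0):
        per_stack = stack_bandwidth_gbs(rate)
        total = gpu_bandwidth_tbs(rate, stacks=6)
        print(f"{rate:>4} Gb/s/pin: {per_stack:,.0f} GB/s per stack, "
              f"{total:.2f} TB/s across 6 stacks")
```

These are raw peak numbers; sustained bandwidth in real workloads is lower once refresh, command overhead, and access patterns are accounted for.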

But driving 10Gb/s HBM4 isn't a given. Faster I/O brings higher power, tighter timing, and more strain on the base die. TrendForce notes that Nvidia may segment Rubin SKUs by HBM tier if costs or thermals spike. That could mean 10Gb/s parts for Rubin CPX and lower-speed stacks for the standard Rubin configuration. The fallback is already in view: staggered supplier qualification and extended validation windows to stretch yield.

SK hynix remains Nvidia’s dominant HBM supplier and says it has completed HBM4 development and is ready for mass production. The company has referenced “over 10Gb/s” capability but hasn’t published die specs, power targets, or process details.

Samsung, by contrast, is more aggressive on node migration. Its HBM4 base die is moving to 4nm FinFET, a logic-class node intended to support higher clock speeds and lower switching power. That could give Samsung an edge at the high end, even if SK hynix ships more volume. Micron has confirmed sampling of HBM4 with a 2,048-bit interface and bandwidth exceeding 2 TB/s, but hasn’t said whether 10Gb/s is in scope.

AMD’s MI450 is still on the horizon, but the memory spec is already known. Helios racks are expected to support up to 432GB of HBM4 per GPU, giving AMD a route to match or exceed Nvidia on raw capacity. With CDNA 4, it also gains architectural upgrades that aim squarely at Rubin’s inference advantage.

Nvidia clearly wants to make memory faster. But the more it leans on 10Gb/s HBM4, the more exposed it becomes to supplier variation, yield risks, and rack-level power constraints at a time when the margin for error is shrinking.



Luke James is a freelance writer and journalist. Although his background is in law, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.

-

richardvday: This means Nvidia feels threatened, maybe not a lot, but that's a good thing. Competition is what we need, not monopolies. Nvidia owns way too much market share. AMD needs to continue to compete at the high end. Consumer graphics would be nice if AMD would choose to compete.

Moores_Ghost (replying to richardvday): There was a time when there were a few CPU makers and double that or more in VGA accelerators. This three-way monopoly is ridiculous and obvious.

ManDaddio (replying to richardvday): Market share doesn't equal monopoly. It just means people buy them more. A monopoly is where no one else can get into the space.

Nvidia is not the only GPU maker. There are dozens of companies that supply GPU chips for TVs, phones, cars, specialized equipment, etc. Nvidia is AI and desktop/laptop. That's it.

Therefore they are far from a monopoly. They just do well in their space.