Kioxia’s new 5TB, 64 GB/s flash module puts NAND toward the memory bus for AI GPUs — HBF prototype adopts familiar SSD form factor

Latency remains a question.

Kioxia has developed a prototype 5TB high-bandwidth flash memory module with a bandwidth of 64 GB/s. It's essentially NAND-based memory for GPUs. High Bandwidth Flash (HBF) adapts the HBM concept to NAND flash, offering 8-16x the capacity of DRAM-based HBM. By combining speed with persistent storage, HBF is meant to let large AI datasets be accessed efficiently while using less power. Kioxia's prototype module, which reaches 64 GB/s, is an early demonstration of that idea.

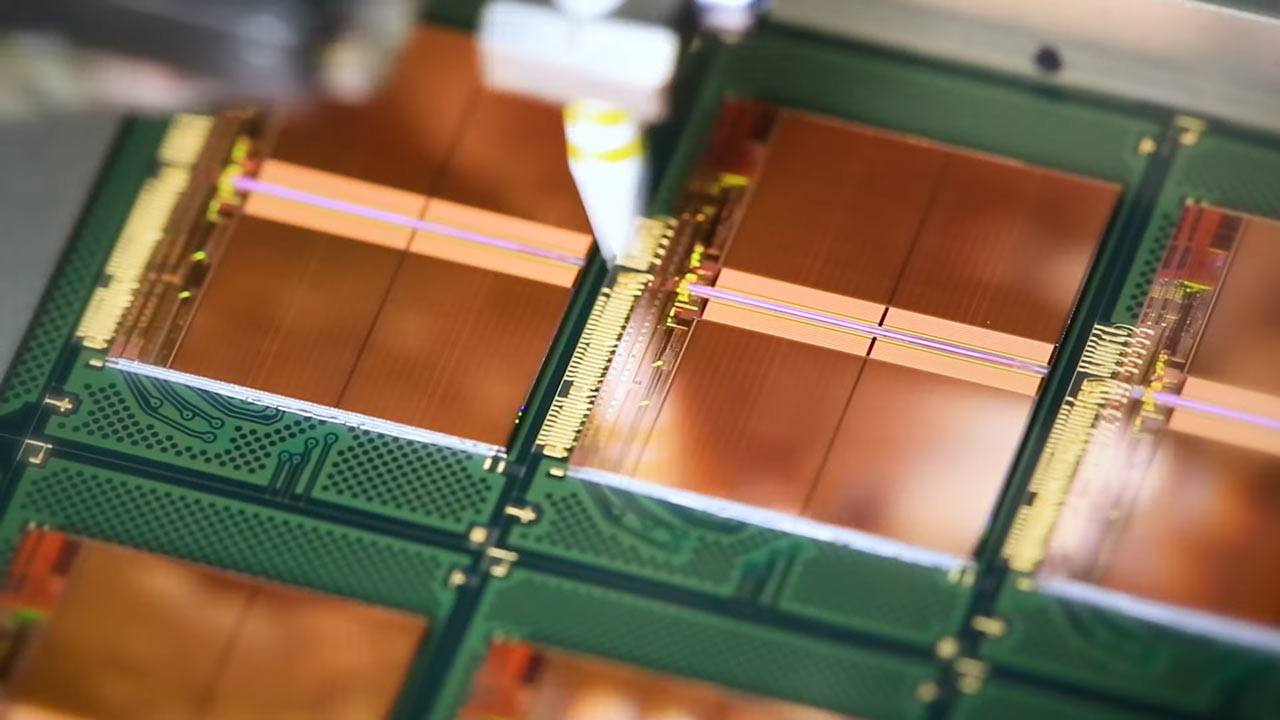

When you hear “flash storage,” you usually think in terms of capacity first, speed second. Even the fastest PCIe 5.0 SSDs today (14 GB/s class drives like Samsung’s 9100 Pro) are dwarfed by the bandwidth demands of modern GPUs and CPUs. Kioxia’s new prototype turns that expectation on its head: a single flash module delivering 5 TB of capacity and 64 GB/s of sustained bandwidth over PCIe 6.0. To put that into perspective, that’s over 4x faster than the fastest PCIe 5.0 drives shipping today, though still well short of the several hundred GB/s a single HBM2E stack can deliver.

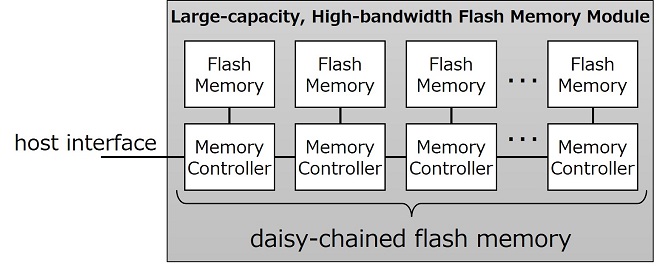

The key is how the system scales. Instead of one central controller trying to manage an entire bank of NAND, which quickly becomes a bottleneck as more dies and channels are added, Kioxia gives each module its own controller. That controller sits right next to its NAND and links to others in a daisy-chain layout. This reduces crosstalk and avoids the complexity of wide parallel buses, which become increasingly challenging to manage as speeds increase. Instead, data is passed along in series, with each link pushing 128 Gbps (16 GB/s) using PAM4 signaling.

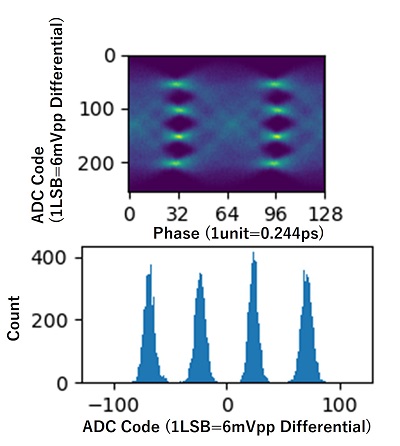

PAM4 (Pulse Amplitude Modulation with four levels) carries two bits per symbol instead of one, doubling the data rate of traditional NRZ signaling at the same symbol rate, but it’s also more sensitive to noise and bit errors. To maintain signal integrity, Kioxia relies on equalization, error correction, and stronger pre-emphasis, similar to what PCIe 6.0 itself requires.
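To make the bits-per-symbol difference concrete, here is a minimal sketch of NRZ and PAM4 symbol mapping. The level values (-3, -1, +1, +3) and the Gray-coded bit mapping are illustrative assumptions, not details from Kioxia's design, and the function names are hypothetical.

```python
# Illustrative symbol mapping only; level values and Gray coding are assumptions.
NRZ_LEVELS = {0: -1, 1: +1}                      # 1 bit per symbol
PAM4_GRAY_LEVELS = {(0, 0): -3, (0, 1): -1,      # 2 bits per symbol,
                    (1, 1): +1, (1, 0): +3}      # adjacent levels differ by one bit


def encode_nrz(bits):
    """Map each bit to one of two signal levels."""
    return [NRZ_LEVELS[b] for b in bits]


def encode_pam4(bits):
    """Map each pair of bits to one of four signal levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [PAM4_GRAY_LEVELS[(bits[i], bits[i + 1])]
            for i in range(0, len(bits), 2)]


bits = [1, 0, 0, 1, 1, 1, 0, 0]
nrz = encode_nrz(bits)
pam4 = encode_pam4(bits)
print(len(nrz), "NRZ symbols vs", len(pam4), "PAM4 symbols for", len(bits), "bits")
```

The same eight bits need eight NRZ symbols but only four PAM4 symbols. The cost is that, for the same peak-to-peak swing, adjacent PAM4 levels sit a third as far apart as the two NRZ levels, which is why noise becomes a bigger problem.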

This helps explain the move to PCIe 6.0 as the host interface: an x16 PCIe 6.0 link can theoretically handle around 128 GB/s in each direction. Kioxia’s 64 GB/s target is half that limit, leaving headroom for error correction and protocol overhead without maxing out the bus.
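The link arithmetic can be checked with a quick back-of-the-envelope sketch. It assumes PCIe 6.0's 64 GT/s per lane, counts one direction only, and ignores FLIT, FEC, and protocol overhead, so the figures are raw upper bounds rather than achievable throughput. The helper name is hypothetical.

```python
def raw_gbytes_per_s(gbits_per_s_per_lane, lanes=1):
    """Raw one-direction bandwidth in GB/s, ignoring all encoding and protocol overhead."""
    return gbits_per_s_per_lane * lanes / 8


pcie6_x16 = raw_gbytes_per_s(64, lanes=16)   # PCIe 6.0: 64 GT/s per lane (assumed raw rate)
daisy_chain_link = raw_gbytes_per_s(128)     # one 128 Gbps PAM4 link between controllers
module_target = 64                           # GB/s, Kioxia's stated module bandwidth

print(f"PCIe 6.0 x16 (one direction): {pcie6_x16:.0f} GB/s")
print(f"One 128 Gbps link:            {daisy_chain_link:.0f} GB/s")
print(f"Module target uses {module_target / pcie6_x16:.0%} of the x16 raw rate")
```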

As you might expect, latency is the main tradeoff. HBM responds in hundreds of nanoseconds, while NAND flash, even with advanced controllers, still accesses data in tens of microseconds, which is roughly two orders of magnitude slower. Kioxia counters this with aggressive prefetching and controller-level caching, so sequential workloads are less affected. It doesn’t make NAND as fast as DRAM, but it narrows the gap enough that for streaming datasets, AI checkpoints, or large graph analytics, bandwidth matters more than raw latency.
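A rough model shows why access latency matters far less for large sequential transfers than for small ones. This sketch treats each request as a fixed access latency plus streaming time at the module's 64 GB/s. The 50-microsecond latency is an assumed stand-in for "tens of microseconds", and the model ignores prefetching, caching, and multiple requests in flight, all of which would improve on these numbers.

```python
def effective_throughput(size_bytes, latency_s, bandwidth_bytes_per_s):
    """Bytes per second for a single request: fixed latency plus streaming time."""
    transfer_time = latency_s + size_bytes / bandwidth_bytes_per_s
    return size_bytes / transfer_time


NAND_LATENCY_S = 50e-6   # assumption: a value in the "tens of microseconds" range
MODULE_BW = 64e9         # 64 GB/s, from the article

small = effective_throughput(4 * 1024, NAND_LATENCY_S, MODULE_BW)   # 4 KiB random read
large = effective_throughput(1024 ** 3, NAND_LATENCY_S, MODULE_BW)  # 1 GiB streaming read

for label, value in (("4 KiB", small), ("1 GiB", large)):
    print(f"{label}: {value / 1e9:.2f} GB/s ({value / MODULE_BW:.1%} of peak)")
```

Under these assumptions the small read is dominated almost entirely by latency, while the large read runs at close to the full link rate, which is the case the article's streaming and checkpoint workloads fall into.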

Power is another crucial factor here. Kioxia claims under 40W per module, which compares well with traditional Gen5 SSDs that can draw up to 15W for ~14 GB/s. On a GB/s per watt basis, that works out to at least 1.6 GB/s per watt for the module versus roughly 0.9 for a Gen5 drive at peak draw, or about 1.7x the efficiency. That matters because in a hyperscale rack, a few hundred drives can easily consume multiple kilowatts. AI datacenters, already ballooning in power budgets thanks to H100 clusters, need every watt saved at the storage layer.

These modules also open up new system design options. With daisy-chained controllers, Kioxia says adding more modules doesn’t degrade bandwidth, so aggregate performance scales linearly with capacity. A complete set of 16 could reach 80 TB of flash and just over 1 TB/s of throughput, numbers once limited to parallel file systems or DRAM scratchpads. This makes it possible to treat storage as near-memory, sitting directly on the PCIe fabric alongside accelerators, rather than being stuck in back-end I/O.
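The 16-module figures follow directly from the per-module numbers if scaling really is linear. The sketch below simply takes that claim at face value; it says nothing about whether a given host link or fabric could actually absorb the aggregate bandwidth. The function name is hypothetical.

```python
MODULE_CAPACITY_TB = 5      # per-module capacity from the article
MODULE_BANDWIDTH_GBS = 64   # per-module bandwidth from the article


def chain_totals(modules):
    """Total capacity (TB) and bandwidth (GB/s), assuming perfectly linear scaling."""
    return modules * MODULE_CAPACITY_TB, modules * MODULE_BANDWIDTH_GBS


for n in (1, 4, 8, 16):
    capacity_tb, bandwidth_gbs = chain_totals(n)
    print(f"{n:>2} modules: {capacity_tb:>3} TB, {bandwidth_gbs:>5} GB/s")
```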

This isn’t Kioxia’s first foray into bandwidth-heavy flash, either. The company has been experimenting with long-reach PCIe SSDs and GPU peer-to-peer flash links, including research with Nvidia into XL-Flash drives tuned for 10 million IOPS. Pair those efforts with its newly announced fab expansions in Japan, driven by an expectation that flash demand will nearly triple by 2028, and it’s clear this prototype isn’t a one-off. It’s a roadmap hint that NAND will not just get bigger but also faster, fast enough to sit closer to the compute stack.

For now, the module remains at the prototype stage, and there are unanswered questions: how it handles mixed random workloads, how ECC scaling impacts latency, and what real-world throughput looks like under AI training conditions. However, the bigger message here is that flash is breaking out of its role as slow, deep storage and moving up the hierarchy. If Kioxia’s vision (as outlined in its press release) pans out, the next generation of data centers might see storage modules competing for bandwidth bragging rights alongside GPUs themselves.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

Jame5: So it's NVMe storage in RAID0 scaled down to modules. PAM4 signalling, daisy-chained controllers to increase throughput.

They are literally describing an array of NVMe storage in RAID0, just done at the "module" level.

They don't actually describe the size of the module though.