SpaceX CEO Elon Musk says AI compute in space will be the lowest-cost option in 5 years — but Nvidia's Jensen Huang says it's a 'dream'

AI is flying high?

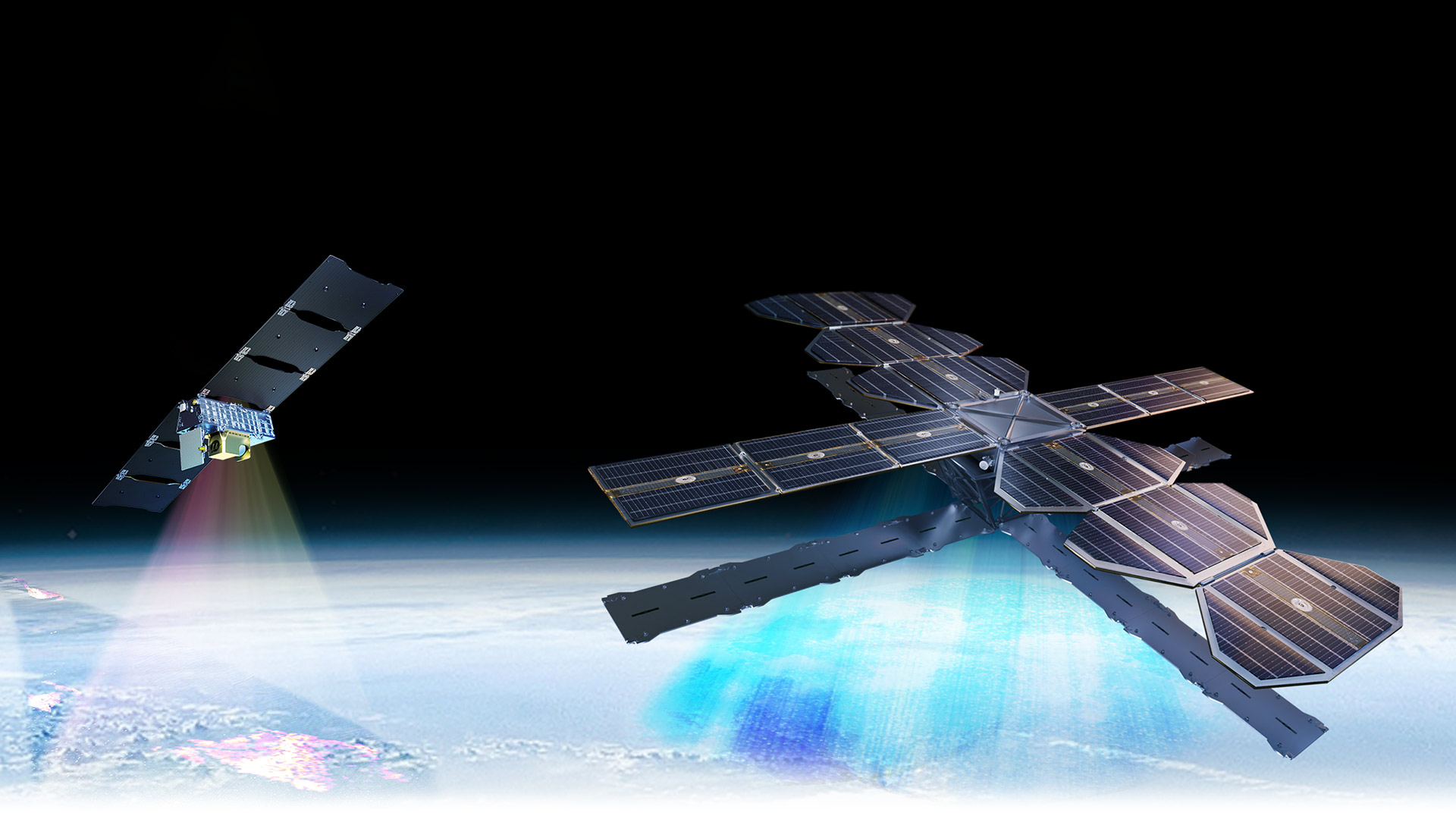

In addition to hardware costs, power generation, power delivery, and cooling will be among the main constraints for massive AI data centers in the coming years. X, xAI, SpaceX, and Tesla CEO Elon Musk argues that over the next four to five years, running large-scale AI systems in orbit could become far more economical than doing the same work on Earth.

That's primarily due to 'free' solar power and relatively easy cooling. Jensen Huang agrees that gigawatt- and terawatt-class AI data centers face serious challenges, but says that space data centers are a dream for now.

Terawatt-class AI data centers are impossible on Earth

"My estimate is that the cost of electricity, the cost effectiveness of AI in space will be overwhelmingly better than AI on the ground so far, long before you exhaust potential energy sources on Earth," said Musk at the U.S.-Saudi investment forum. "I think even perhaps in the four- or five-year timeframe, the lowest cost way to do AI compute will be with solar-powered AI satellites. I would say not more than five years from now."

Jensen Huang, chief executive of Nvidia, notes that the compute and communication equipment inside today's Nvidia GB300 racks accounts for only a tiny fraction of their total mass, because nearly the entire structure (roughly 1.95 tons out of 2 tons) is essentially a cooling system.

Musk emphasized that as compute clusters grow, their combined electrical supply and cooling requirements escalate to the point where terrestrial infrastructure struggles to keep up. He claims that sustaining 200 GW – 300 GW of continuous output would require massive and costly power plants, as a typical nuclear power plant produces around 1 GW of continuous power. (Musk says 'per year,' but what he means is continuous power output at a given time.) Meanwhile, the U.S. generates around 490 GW of continuous power these days, so devoting the lion's share of it to AI is impossible. According to Musk, anything approaching a terawatt of steady AI-related demand is unattainable with Earth-based grids.
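The units issue is easier to see with numbers. Below is a rough Python sketch of the arithmetic. It uses only the figures quoted above: about 1 GW per typical nuclear plant and about 490 GW of average U.S. output. It also assumes a 365-day (8,760-hour) year. The sketch converts a constant load into annual energy, then shows what share of U.S. output that load would take and how many 1 GW plants it would need.

```python
HOURS_PER_YEAR = 8760  # assumption: 365-day year, ignoring leap years

# Approximate figures quoted in the article.
US_AVERAGE_OUTPUT_GW = 490.0  # average continuous U.S. generation
NUCLEAR_PLANT_GW = 1.0        # typical nuclear plant, continuous output


def annual_energy_twh(continuous_gw: float) -> float:
    """Energy delivered over one year by a constant load, in TWh."""
    return continuous_gw * HOURS_PER_YEAR / 1000.0  # GWh -> TWh


def share_of_us_output(continuous_gw: float) -> float:
    """Fraction of average U.S. generation that a constant load would consume."""
    return continuous_gw / US_AVERAGE_OUTPUT_GW


def plants_needed(continuous_gw: float) -> float:
    """Number of ~1 GW nuclear plants required to supply the load."""
    return continuous_gw / NUCLEAR_PLANT_GW


if __name__ == "__main__":
    for load_gw in (200.0, 300.0, 1000.0):
        print(
            f"{load_gw:>6.0f} GW continuous = {annual_energy_twh(load_gw):,.0f} TWh/yr, "
            f"{share_of_us_output(load_gw):.0%} of U.S. output, "
            f"~{plants_needed(load_gw):.0f} one-GW plants"
        )
```

On these assumptions, 300 GW of continuous demand is roughly 2,600 TWh per year, or about 61% of average U.S. output. A full terawatt is about twice what the U.S. generates today.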

"There is no way you are building power plants at that level: if you take it up to, say, a [1 TW of continuous power], impossible," said Musk. "You have to do that in space. There is just no way to do a terawatt [of continuous power on] Earth. In space, you have got continuous solar, you actually do not need batteries because it is always sunny in space and the solar panels actually become cheaper because you do not need glass or framing and the cooling is just radiative."

While Musk may be right about the difficulty of generating enough power for AI on Earth, and space could indeed be a better fit for massive AI compute deployments, many challenges remain in putting AI clusters into space, which is why Jensen Huang calls it a dream for now.

"That's the dream," Huang exclaimed.


Remains a 'dream' in space too

On paper, space is a good place both for generating power and for cooling electronics, as temperatures can be as low as -270°C in shadow. But there are many caveats. For example, temperatures can reach +120°C in direct sunlight. In Earth orbit, however, temperature swings are less extreme: –65°C to +125°C in low Earth orbit (LEO), –100°C to +120°C in medium Earth orbit (MEO), –20°C to +80°C in geostationary orbit (GEO), and –10°C to +70°C in high Earth orbit (HEO).

LEO and MEO are not suitable for 'flying data centers' due to unstable illumination patterns, substantial thermal cycling, radiation-belt crossings, and regular eclipses. GEO is more feasible, as it is almost always sunny there (there are eclipse seasons around the spring and autumn equinoxes, but each eclipse lasts little more than an hour) and radiation levels are comparatively moderate.
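How short are those GEO eclipses? Here is a rough geometric sketch in Python. It assumes a circular geostationary orbit, a spherical Earth, and a cylindrical shadow with no penumbra and no atmosphere. Under those assumptions, it estimates the longest eclipse at equinox as the fraction of the orbit spent inside Earth's shadow. The constants are standard textbook values, not figures from the article.

```python
import math

EARTH_RADIUS_KM = 6378.137  # equatorial radius
GEO_RADIUS_KM = 42164.0     # geostationary orbit radius, measured from Earth's center
SIDEREAL_DAY_S = 86164.1    # GEO orbital period


def max_geo_eclipse_minutes(
    earth_radius_km: float = EARTH_RADIUS_KM,
    orbit_radius_km: float = GEO_RADIUS_KM,
    period_s: float = SIDEREAL_DAY_S,
) -> float:
    """Longest eclipse (at equinox) under a cylindrical-shadow model."""
    # The satellite is shadowed while its distance from the Sun-Earth axis,
    # r * sin(theta), is smaller than Earth's radius on the night side.
    half_angle = math.asin(earth_radius_km / orbit_radius_km)
    fraction_in_shadow = (2.0 * half_angle) / (2.0 * math.pi)
    return fraction_in_shadow * period_s / 60.0


if __name__ == "__main__":
    minutes = max_geo_eclipse_minutes()
    print(f"Longest GEO eclipse: ~{minutes:.0f} min out of a ~24 h orbit")
```

The cylindrical model gives roughly 69 minutes. The penumbra adds a little on top, so quoted maximums are usually slightly above 70 minutes. Away from the equinox seasons, the satellite passes above or below Earth's shadow entirely.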

Even in GEO, building large AI data centers faces severe obstacles. Megawatt-class GPU clusters would require enormous radiator wings, because heat can only be rejected through infrared emission (as Musk noted, cooling in space is purely radiative). That translates into tens of thousands of square meters of deployable radiators for clusters of just several tens of megawatts, and roughly a square kilometer or more for multi-gigawatt systems, far beyond anything flown to date. Launching that mass would demand thousands of Starship-class flights, which is both unrealistic within Musk's four-to-five-year window and extremely expensive.
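One back-of-the-envelope way to size such radiators is the Stefan-Boltzmann law, P = εσAT^4. The Python sketch below makes several simplifying assumptions: an ideal black radiator (ε = 1) at 127 °C, radiating from one side only, into a 0 K background. It also ignores sunlight and Earth infrared absorbed by the panels, so real radiators would need to be larger. The temperature and power levels are illustrative assumptions, not figures from Nvidia or SpaceX.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 * K^4)


def radiator_area_m2(power_w: float, temp_c: float,
                     emissivity: float = 1.0, sides: int = 1) -> float:
    """Minimum radiating area needed to reject `power_w` at `temp_c`.

    Idealized: uniform temperature, 0 K background, and no absorbed
    sunlight or Earth IR, so this is a lower bound.
    """
    temp_k = temp_c + 273.15
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * temp_k ** 4
    return power_w / (flux_w_per_m2 * sides)


if __name__ == "__main__":
    for label, watts in (("1 MW", 1e6), ("100 MW", 1e8), ("1 GW", 1e9), ("1 TW", 1e12)):
        area = radiator_area_m2(watts, temp_c=127.0)
        print(f"{label:>6}: {area:,.0f} m^2 ({area / 1e6:,.3f} km^2)")
```

Under these assumptions, each gigawatt needs about 0.7 km² of ideal one-sided radiator, so multi-gigawatt systems land in square-kilometer territory. Tens of thousands of square meters only covers a few tens of megawatts.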

Also, high-performance AI accelerators such as Blackwell or Rubin, as well as their accompanying hardware, still cannot survive GEO radiation without heavy shielding or complete rad-hard redesigns. Such redesigns would slash clock speeds and/or require entirely new process technologies optimized for resilience rather than performance, further reducing the feasibility of AI data centers in GEO.

On top of that, high-bandwidth connectivity with Earth, autonomous servicing, debris avoidance, and robotic maintenance all remain in their infancy relative to the scale of the proposed projects, which is perhaps why Huang calls it all a 'dream' for now.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user Sometimes, I really wonder just how much Elon believes some of these things vs. just saying them in an attempt to lure investors to buy stock in his companies.

The article said: "On paper, space is a good place for both generating power and cooling down electronics as temperatures can be as low as -270°C in the shadow. But there are many caveats. For example, they can reach +120°C in direct sunlight. However, when it comes to earth orbit, temperature swings are less extreme: –65°C to +125°C on Low Earth Orbit (LEO), –100°C to +120°C on Medium Earth Orbit (MEO), –20°C to +80°C on Geostationary Orbit (GEO), and –10°C to +70°C on High Earth Orbit (HEO)."

This entire digression is somewhat pointless, because he's talking about a polar orbit. That's the easiest way to achieve the continuous sunlight that he mentioned (the other being the far less practical solution of parking at a Sun-Earth Lagrange point).

BTW, ambient temperatures in orbit are also somewhat meaningless, due to the fact that it's a near vacuum. As Elon said, cooling will have to be radiative.

vanadiel007 Just calculate how many solar panels would be required to generate the amounts of power he's talking about, and you will realize it's a non-starter.

Klathra If the AI compute satellite needed to radiate 1 terawatt of energy and you could run it at 127 °C, it would need 690 km² of surface area, assuming a perfectly black surface for emissivity. As temperature goes up, the surface area needed goes down, but even if you could somehow get the electronics to survive much higher temperatures, the surface area is still far too large.
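Klathra's figure checks out under the stated assumptions: a black body radiating from one side, with no absorbed sunlight. The Python sketch below reproduces it and shows how slowly the area shrinks as the radiator runs hotter. Area scales as 1/T^4 with T in kelvin. So even 327 °C, far beyond what current GPUs survive, only cuts the area by about a factor of five. A two-sided panel halves every figure.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 * K^4)
POWER_W = 1e12                      # 1 TW, the load used in the comment


def area_km2(power_w: float, temp_c: float, sides: int = 1) -> float:
    """Ideal black-body radiator area in km^2 (no absorbed sunlight or Earth IR)."""
    temp_k = temp_c + 273.15
    return power_w / (sides * STEFAN_BOLTZMANN * temp_k ** 4) / 1e6


if __name__ == "__main__":
    for temp_c in (27.0, 127.0, 227.0, 327.0):
        one_sided = area_km2(POWER_W, temp_c)
        two_sided = area_km2(POWER_W, temp_c, sides=2)
        print(f"{temp_c:>5.0f} C: {one_sided:>7,.0f} km^2 one-sided, "
              f"{two_sided:>7,.0f} km^2 two-sided")
```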

Am I missing something?

SkyBill40 The efficiency of solar panels would need to make a quantum leap in order to pull off something on this level. That doesn't take into account the other heavy lifting of just getting that mass up there in the first place.

And then what? How long before it falls behind newly developed architectures? It's not like it's easy to just send people up there on a mission to hardware swap, especially at some of the orbital levels discussed. I mean... just look at how much work it took to get the HST right. That required multiple shuttle missions to correct.

bit_user

vanadiel007 said: "Just calculate how many solar panels would be required to generate the amounts of power he's talking about, and you will realize it's a non-starter."

I assume the solar panels he'd use would be more efficient, but if we take the example of the ISS, it gets about 100 W/m^2 (source: https://en.wikipedia.org/wiki/Solar_panels_on_spacecraft#Spacecraft_that_have_used_solar_power ). So, 1 TW would require 1 * 10^10 m^2 or about 10,000 km^2 (i.e. 100x100 km array).

I wonder how much energy it would take to shoot that mass into orbit, and what the break-even point would be for offsetting that initial energy expenditure vs. just using ground-based solar.
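The array-size estimate is easy to reproduce. The Python sketch below uses the ~100 W/m² ISS figure from the comment. For comparison, it adds an assumed best case: 30%-efficient cells under the roughly 1,361 W/m² solar constant near Earth. The 30% figure is an illustrative assumption, and the sketch ignores cell degradation, pointing losses, and power-conversion losses.

```python
import math

SOLAR_CONSTANT_W_M2 = 1361.0  # approximate sunlight intensity near Earth


def array_area_m2(power_w: float, watts_per_m2: float) -> float:
    """Array area needed for a given electrical output."""
    return power_w / watts_per_m2


def square_side_km(area_m2: float) -> float:
    """Side length of a square array with the given area."""
    return math.sqrt(area_m2) / 1000.0


if __name__ == "__main__":
    target_w = 1e12  # 1 TW
    cases = {
        "ISS-like, ~100 W/m^2": 100.0,
        "assumed 30% cells": 0.30 * SOLAR_CONSTANT_W_M2,
    }
    for label, w_per_m2 in cases.items():
        area = array_area_m2(target_w, w_per_m2)
        print(f"{label}: {area / 1e6:,.0f} km^2, about {square_side_km(area):.0f} km on a side")
```

Even with the optimistic efficiency, a 1 TW array would still be roughly 50 km on a side.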

vanadiel007

bit_user said: "I assume the solar panels he'd use would be more efficient, but if we take the example of the ISS, it gets about 100 W/m^2 (source: https://en.wikipedia.org/wiki/Solar_panels_on_spacecraft#Spacecraft_that_have_used_solar_power ). So, 1 TW would require 1 * 10^10 m^2 or about 10,000 km^2 (i.e. 100x100 km array).

I wonder how much energy it would take to shoot that mass into orbit, and what the break-even point would be for offsetting that initial energy expenditure vs. just using ground-based solar."

Exactly, which does not even take into account the herculean task of assembling that in space, and ensuring space debris does not destroy it once it's fully "deployed".

And while I am far from a space expert, I am thinking such a structure would cast a huge shadow over earth and likely be visible with the naked eye.

It would likely be much more practical and less costly to generate that power here on good old earth.

bit_user

SkyBill40 said: "The efficiency of solar panels would need to make a quantum leap in order to pull off something on this level."

Spacecraft typically use more exotic panel technology than terrestrial applications, I think because it's a lot more economical to launch a smaller panel for a given output level, even if it costs more to manufacture.

https://en.wikipedia.org/wiki/Solar_panels_on_spacecraft#Types_of_solar_cells_typically_used

SkyBill40 said: "And then what? How long before it falls behind newly developed architectures? It's not like it's easy to just send people up there on a mission to hardware swap, especially at some of the orbital levels discussed."

Oh, that's where his Optimus robots enter the picture!

🤖

SkyBill40 said: "I mean... just look at how much work it took to get the HST right. That required multiple shuttle missions to correct."

That was also 40 years ago. Most satellites don't need field maintenance. Due to its orbit, it would be virtually impossible to send a manned service mission to JWST, which is far more complex than Hubble.

(if this image doesn't load, see it here: https://www.space.com/james-webb-space-telescope-secondary-mirror-deployed )

bit_user

vanadiel007 said: "And while I am far from a space expert, I am thinking such a structure would cast a huge shadow over earth and likely be visible with the naked eye."

It wouldn't cast a shadow if it's in a polar orbit that aligns with the dusk/dawn boundary. That's what it would have to use for 24/7 sunlight. Otherwise, you'd need to add batteries, which would be a whole lot more mass to launch and something else that needs maintenance/replacement.
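A dawn-dusk orbit like this is normally a sun-synchronous orbit. Earth's equatorial bulge (the J2 term) makes an orbit's plane precess. Choosing a near-polar, slightly retrograde inclination makes the plane precess exactly once per year, so it keeps the same orientation relative to the Sun. The Python sketch below solves the standard first-order J2 nodal-precession formula for the inclination of a circular orbit. The 600 km and 1,000 km altitudes are arbitrary examples, not values Musk specified.

```python
import math

MU_EARTH_KM3_S2 = 398600.4418        # Earth's gravitational parameter
EARTH_RADIUS_KM = 6378.137           # equatorial radius
J2 = 1.08263e-3                      # Earth's oblateness coefficient
TROPICAL_YEAR_S = 365.2422 * 86400.0


def sun_synchronous_inclination_deg(altitude_km: float) -> float:
    """Inclination at which a circular orbit's plane precesses once per year.

    First-order J2 model: dOmega/dt = -1.5 * J2 * (Re / a)^2 * n * cos(i).
    """
    a = EARTH_RADIUS_KM + altitude_km
    mean_motion = math.sqrt(MU_EARTH_KM3_S2 / a ** 3)  # rad/s
    required_rate = 2.0 * math.pi / TROPICAL_YEAR_S    # rad/s, eastward
    cos_i = -required_rate / (1.5 * J2 * (EARTH_RADIUS_KM / a) ** 2 * mean_motion)
    if not -1.0 <= cos_i <= 1.0:
        raise ValueError("no sun-synchronous circular orbit at this altitude")
    return math.degrees(math.acos(cos_i))


if __name__ == "__main__":
    for altitude_km in (600.0, 1000.0):
        incl = sun_synchronous_inclination_deg(altitude_km)
        print(f"{altitude_km:.0f} km: i = {incl:.1f} deg")
```

At 600 km this gives an inclination just under 98°, which matches the near-polar, slightly retrograde orbits used by sun-synchronous Earth-observation satellites.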