Nvidia aims to solve AI's water consumption problems with direct-to-chip cooling — claims 300X improvement with closed-loop systems

Nvidia Blackwell AI datacenters get cooling upgrade

Modern cloud data centers not only consume an immense amount of power for computing and cooling, but also a significant amount of water, as most use evaporative liquid cooling.

By contrast, Nvidia's GB200 NVL72 and GB300 NVL72 machines utilize direct-to-chip liquid cooling systems, which Nvidia claims are 25 times more energy-efficient and 300 times more water-efficient than conventional cooling systems. However, there is a catch: NVL72 rack-scale systems consume roughly six to seven times more power than typical racks.

Typical data center server racks consume around 20kW of power, whereas Nvidia's H100-based racks consume over 40kW. Nvidia's GB200 NVL72 and GB300 NVL72 rack-scale systems, by comparison, consume 120kW to 140kW, far more than the vast majority of racks already installed.
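To put the rack figures side by side, here is a minimal Python sketch that computes the ratios from the numbers quoted above. The constant and function names are illustrative, and the H100 figure is treated as a lower bound because the article only says "over 40kW".

```python
# Rack power figures quoted in the article, in kilowatts.
TYPICAL_RACK_KW = 20.0
H100_RACK_KW = 40.0             # "over 40kW" in the article; used as a lower bound
NVL72_RACK_KW = (120.0, 140.0)  # low and high ends of the quoted range


def power_ratio(rack_kw: float, baseline_kw: float = TYPICAL_RACK_KW) -> float:
    """Return how many times more power a rack draws than the baseline rack."""
    if baseline_kw <= 0:
        raise ValueError("baseline_kw must be positive")
    return rack_kw / baseline_kw


if __name__ == "__main__":
    low, high = NVL72_RACK_KW
    print(f"NVL72 vs typical rack: {power_ratio(low):.1f}x to {power_ratio(high):.1f}x")
    print(
        "NVL72 vs H100 rack:    "
        f"{power_ratio(low, H100_RACK_KW):.1f}x to {power_ratio(high, H100_RACK_KW):.1f}x"
    )
```

On these figures, an NVL72 rack draws six to seven times the power of a typical rack and at most about three and a half times that of an H100-based rack.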

As a result, air-based cooling methods can no longer manage the thermal loads produced by these high-density racks, so Nvidia had to adopt a new cooling solution for its Blackwell machines.

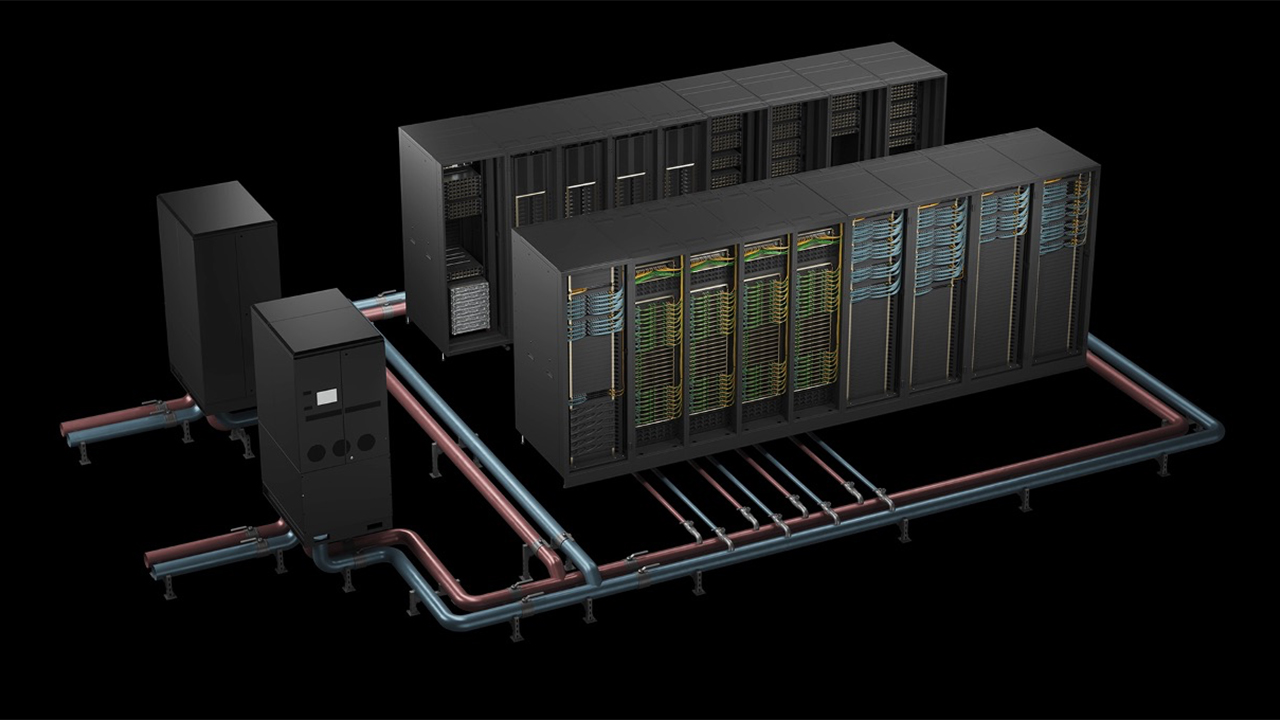

Nvidia's GB200 NVL72 and GB300 NVL72 systems use direct-to-chip liquid cooling. This approach involves circulating coolant directly through cold plates attached to GPUs, CPUs, and other heat-generating components, efficiently transferring heat away from these devices without relying on air as the intermediary.
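As a rough illustration of what moving this much heat through cold plates implies, the sketch below applies the standard heat-balance relation Q = m_dot * c_p * delta_T to estimate coolant flow for one rack. Everything beyond the 120kW to 140kW rack figures is an assumption and does not come from Nvidia: the coolant is treated as plain water (real loops often use a water-glycol mix with a lower specific heat), all rack power is assumed to end up in the liquid, and the 10 K temperature rise is a hypothetical value.

```python
# Specific heat of liquid water near room temperature, in J/(kg*K).
WATER_CP_J_PER_KG_K = 4186.0
# Approximate density of water, in kg per litre.
WATER_DENSITY_KG_PER_L = 1.0


def coolant_mass_flow_kg_s(
    heat_w: float, delta_t_k: float, cp: float = WATER_CP_J_PER_KG_K
) -> float:
    """Mass flow (kg/s) needed to absorb heat_w watts with a temperature rise of delta_t_k.

    Rearranged from Q = m_dot * c_p * delta_T.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (cp * delta_t_k)


def to_litres_per_minute(
    mass_flow_kg_s: float, density: float = WATER_DENSITY_KG_PER_L
) -> float:
    """Convert a mass flow in kg/s to a volume flow in litres per minute."""
    return mass_flow_kg_s / density * 60.0


if __name__ == "__main__":
    ASSUMED_DELTA_T_K = 10.0  # hypothetical inlet-to-outlet rise, not from the article
    for rack_kw in (120.0, 140.0):
        flow = coolant_mass_flow_kg_s(rack_kw * 1000.0, ASSUMED_DELTA_T_K)
        print(
            f"{rack_kw:.0f} kW rack: {flow:.2f} kg/s, "
            f"about {to_litres_per_minute(flow):.0f} L/min"
        )
```

Under these assumptions a single rack needs on the order of 170 to 200 litres of coolant per minute; a smaller temperature rise or a glycol mix would push that figure higher.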

Unlike evaporative cooling or immersion cooling, NVL72's liquid cooling is a closed-loop system, so coolant does not evaporate or require replacement due to loss from phase change, saving water.

In the NVL72 architecture, the heat absorbed by the liquid coolant is then transferred to the data center's cooling infrastructure through rack-level liquid-to-liquid heat exchangers. These coolant distribution units (CDUs), such as the CoolIT CHx2000, are capable of managing up to 2 MW of cooling capacity, supporting high-density deployments with low thermal resistance and reliable heat rejection.
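For a sense of scale, this short sketch estimates how many NVL72 racks one such CDU could serve, taking the CDU capacity as 2 MW (megawatts) and the rack load as the 120kW to 140kW quoted earlier. It is a back-of-the-envelope calculation only: it assumes all rack power is rejected to the liquid loop and ignores headroom, redundancy, and piping losses, and the function name is illustrative.

```python
import math


def racks_per_cdu(cdu_capacity_kw: float, rack_heat_kw: float) -> int:
    """Whole number of racks whose combined heat load fits within one CDU's capacity."""
    if rack_heat_kw <= 0:
        raise ValueError("rack_heat_kw must be positive")
    return math.floor(cdu_capacity_kw / rack_heat_kw)


if __name__ == "__main__":
    CDU_CAPACITY_KW = 2000.0  # 2 MW, the capacity figure given for the CoolIT CHx2000
    for rack_kw in (120.0, 140.0):
        print(f"{rack_kw:.0f} kW racks per 2 MW CDU: {racks_per_cdu(CDU_CAPACITY_KW, rack_kw)}")
```

With these inputs, one 2 MW unit covers 14 to 16 racks before any safety margin is applied.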

Additionally, this setup enables the systems to operate with warm water cooling, thereby reducing or eliminating the need for mechanical chillers, which improves both energy efficiency and water conservation.

There are several things to note about Nvidia's closed-loop direct-to-chip liquid cooling solutions. Although closed-loop liquid cooling solutions are widely used by PC enthusiasts, there are several practical, engineering, and economic reasons why these systems are presently not widely adopted at scale.

Data centers require modularity and accessibility for maintenance, upgrades, and component replacement, which is why they use hot-swappable components. However, hermetically sealed systems make the quick replacement of failed servers or GPUs difficult, as breaking the seal would compromise the entire cluster.

Also, routing sealed liquid loops across racks and the entire data center introduces logistical complexity in piping, pump redundancy, and failure isolation. Fortunately, current direct-to-chip liquid cooling solutions use quick-disconnect fittings with dripless seals, which offer serviceability without full hermetic sealing (at the end of the day, detecting and isolating leaks quickly is cheaper than creating a fully hermetic data center-scale solution). However, using data center-scale liquid cooling still requires redesign of the whole data center, which is expensive.

Nonetheless, since Nvidia's Blackwell processors offer unbeatable performance, adopters of B200 GPUs are willing to invest in such redesigns. Additionally, it is worth noting that Nvidia has co-developed reference designs with Schneider Electric for 1,152-GPU DGX SuperPOD GB200 clusters, utilizing Motivair liquid-to-liquid CDUs and fluid coolers with adiabatic assist. This enables the quick deployment of such systems with maximized efficiency.
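The size of that reference cluster can be sanity-checked with simple arithmetic. The sketch below assumes 72 GPUs per rack, as the NVL72 name indicates, and reuses the 120kW to 140kW per-rack figures from earlier in the article. It counts GPU racks only, so networking, storage, and management racks are outside its scope, and the names are illustrative.

```python
GPUS_PER_NVL72_RACK = 72  # assumption taken from the "NVL72" product name
CLUSTER_GPUS = 1152       # reference-design size quoted in the article


def compute_racks(total_gpus: int, gpus_per_rack: int = GPUS_PER_NVL72_RACK) -> int:
    """Number of racks needed to hold total_gpus, rounding up to whole racks."""
    if gpus_per_rack <= 0:
        raise ValueError("gpus_per_rack must be positive")
    return -(-total_gpus // gpus_per_rack)  # ceiling division on integers


if __name__ == "__main__":
    racks = compute_racks(CLUSTER_GPUS)
    low_mw = racks * 120.0 / 1000.0
    high_mw = racks * 140.0 / 1000.0
    print(f"GPU racks: {racks}")
    print(f"Estimated GPU-rack power: {low_mw:.2f} MW to {high_mw:.2f} MW")
```

That works out to 16 GPU racks drawing roughly 1.92 MW to 2.24 MW in total.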

Nvidia mandates the use of liquid cooling with its Blackwell B200 GPUs and systems, and the company has invested in reference designs of sealed liquid cooling solutions that avoid evaporative cooling in an effort to preserve water, which seems to be a reasonable tradeoff.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

Pierce2623

When are people other than Nvidia, OpenAI and a chosen few cloud providers supposed to start actually getting revenue out of AI?

frogr

"...the company has invested in reference designs of sealed liquid cooling solutions to avoid the use of evaporative liquid cooling solutions, in an effort to preserve water"

Open-loop evaporative cooling is not used at the chip level or rack level. It is used to produce cold water at the building level which is used to cool the air or water supplied to the server racks. The chilling technology at the chip/rack level and building level are basically independent.

https://datacenters.microsoft.com/wp-content/uploads/2023/05/Azure_Modern-Datacenter-Cooling_Infographic.pdf

DS426

> frogr said: "...the company has invested in reference designs of sealed liquid cooling solutions to avoid the use of evaporative liquid cooling solutions, in an effort to preserve water"
>
> Open-loop evaporative cooling is not used at the chip level or rack level. It is used to produce cold water at the building level which is used to cool the air or water supplied to the server racks. The chilling technology at the chip/rack level and building level are basically independent.
>
> https://datacenters.microsoft.com/wp-content/uploads/2023/05/Azure_Modern-Datacenter-Cooling_Infographic.pdf

Yeah, datacenters still have to expel the heat from the room (and I assume hot and cold aisles are still used). The only thing CLC provides is more power density. This in turn reduces serviceability of these racks.

As for these proprietary racks, DC operators aren't having a good time having to go to nVidia when there is almost any kind of problem, whether hardware or software related. This mini-monopoly hardware system is a step backwards. THN had an article on Pat G. at Intel complaining about the industry needing to get away from CUDA and instead embrace open standards, and yet here we are today with nVidia supposedly having up to 98% of AI training GPU market share. *facepalm*

AngelusF

> DS426 said: THN had an article on Pat G. at Intel complaining about the industry needing to get away from CUDA and instead embrace open standards

Strange he didn't also say that the industry needed to get away from x86 and instead embrace open standards.

Vanderlindemedia

> Pierce2623 said: When are people other than Nvidia, OpenAI and a chosen few cloud providers supposed to start actually getting revenue out of AI?

It's all based on training large, huge if not infinite models at this point.

Once you have a solid product, you can rent it out through subscription(s), and use even that data for new training.

With direct chip cooling, voltages could be lowered, and thus making the energy requirement on that scale, in the hundred of thousands of watts less.

Pierce2623

> Vanderlindemedia said: It's all based on training large, huge if not infinite models at this point.
>
> Once you have a solid product, you can rent it out through subscription(s), and use even that data for new training.
>
> With direct chip cooling, voltages could be lowered, and thus making the energy requirement on that scale, in the hundred of thousands of watts less.

Yeah it’s been all about training large models since Hopper released a couple years ago. ChatGPT is still the only real monetizable project.

hotaru251

so proprietary cooling? meaning they'd have to upgrade EVERYTHING & redesign to accommodate.

> Pierce2623 said: When are people other than Nvidia, OpenAI and a chosen few cloud providers supposed to start actually getting revenue out of AI?

nvidia already has..

They sell the gpu's. They sell their cloud gaming (which uses their ai).

the rest of em are all racing to AGI as they know only one person wins the payoff.

Vanderlindemedia

> Pierce2623 said: Yeah it’s been all about training large models since Hopper released a couple years ago. ChatGPT is still the only real monetizable project.

I've been using both (ChatGPT + Grok) and I have to say Grok is far better than ChatGPT.

wwenze1

"These coolant distribution units (CDUs), such as the CoolIT CHx2000, are capable of managing up to 2 mW of cooling capacity,"

2MW not 2mW

Crotus of Borg

> frogr said: "...the company has invested in reference designs of sealed liquid cooling solutions to avoid the use of evaporative liquid cooling solutions, in an effort to preserve water"
>
> Open-loop evaporative cooling is not used at the chip level or rack level. It is used to produce cold water at the building level which is used to cool the air or water supplied to the server racks. The chilling technology at the chip/rack level and building level are basically independent.
>
> https://datacenters.microsoft.com/wp-content/uploads/2023/05/Azure_Modern-Datacenter-Cooling_Infographic.pdf

This is 100% correct and is the major fault of this whole article. The use of liquid cooling does not reduce water use at all unless the DC operator creates a primary fluid network independent of the racks that does not use water to cool the coolant at the coolant distribution unit. It can be done, absolutely, but you have to design for it.