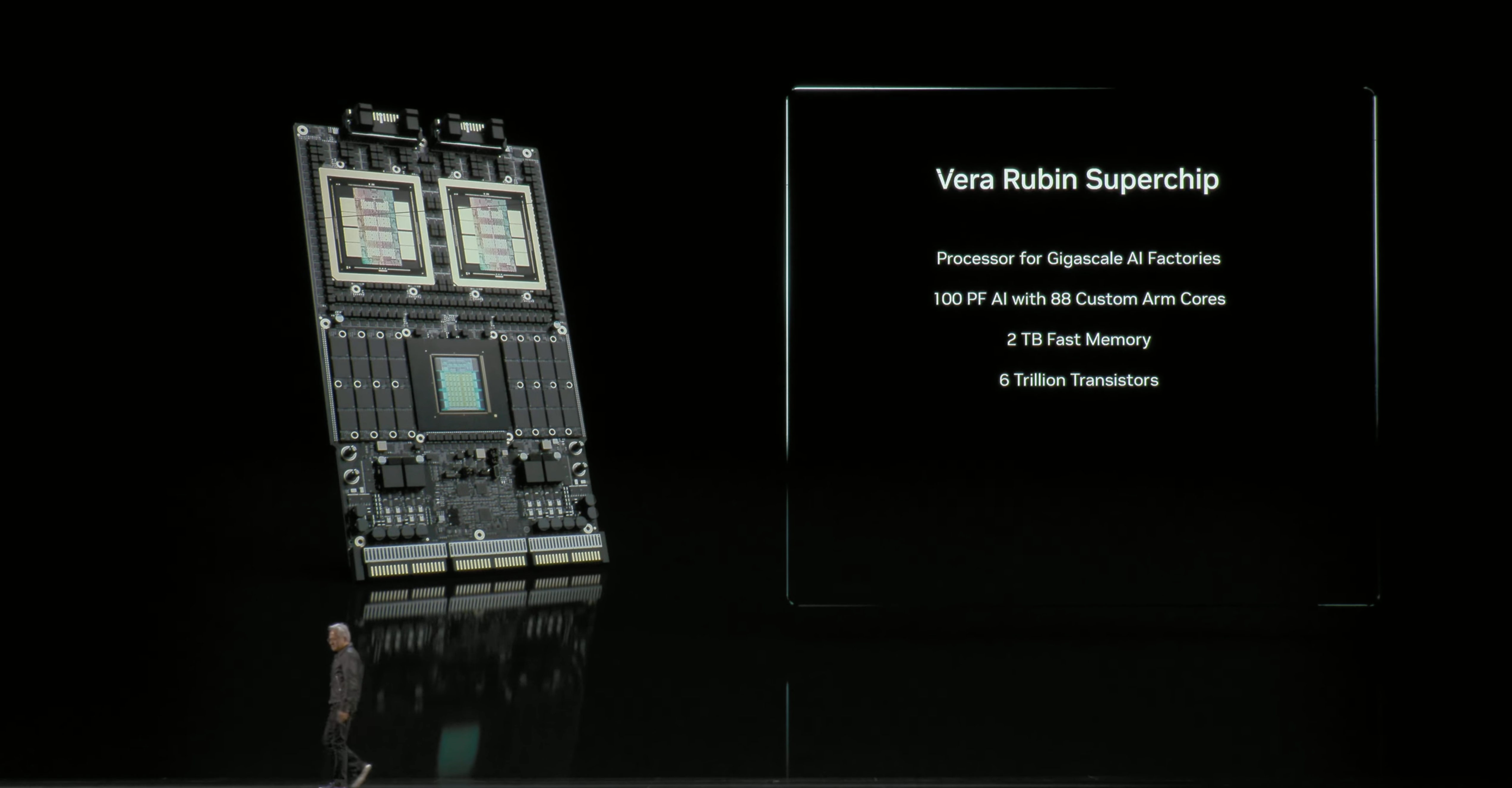

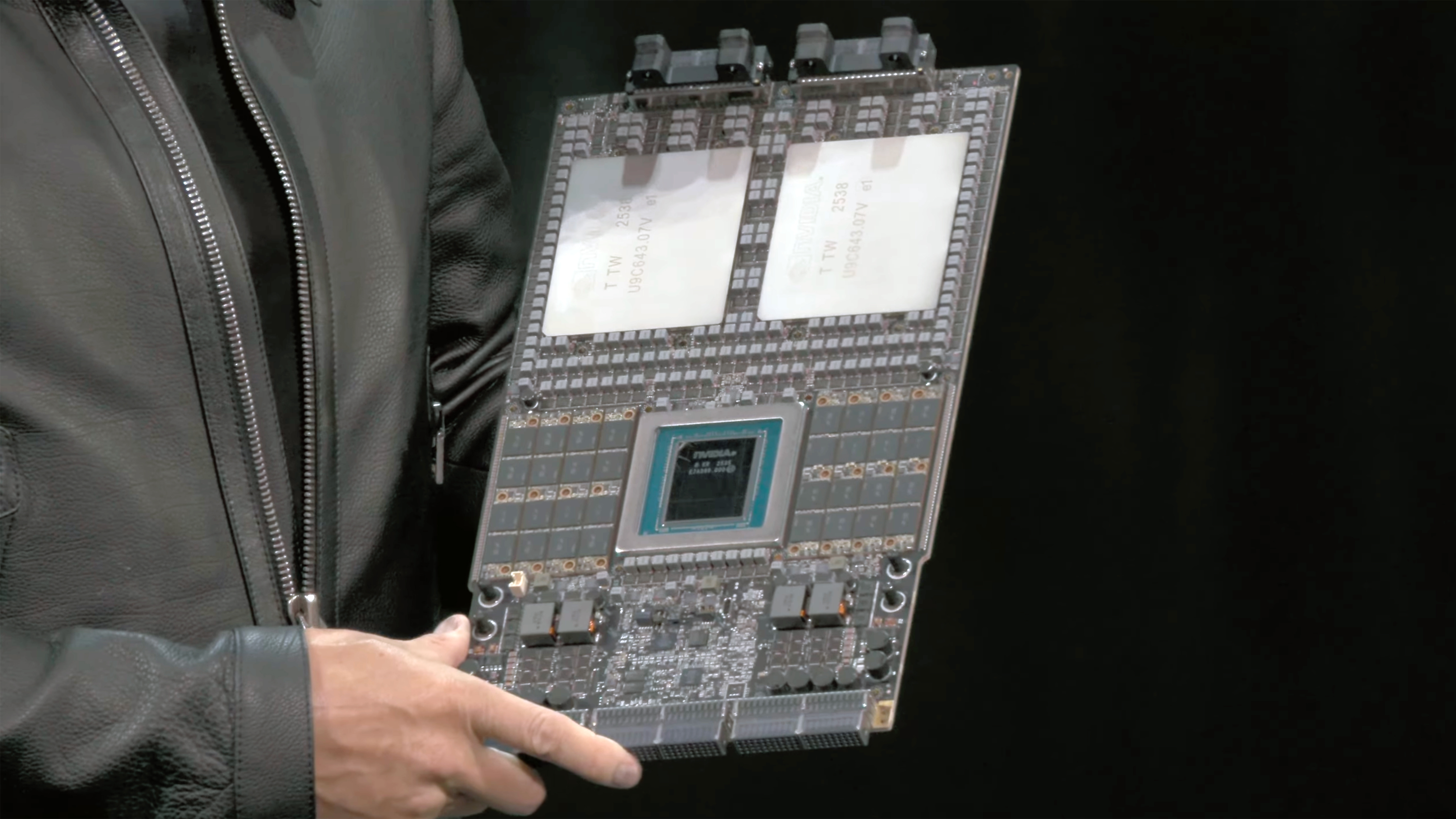

Nvidia reveals Vera Rubin Superchip for the first time — incredibly compact board features 88-core Vera CPU, two Rubin GPUs, and 8 SOCAMM modules

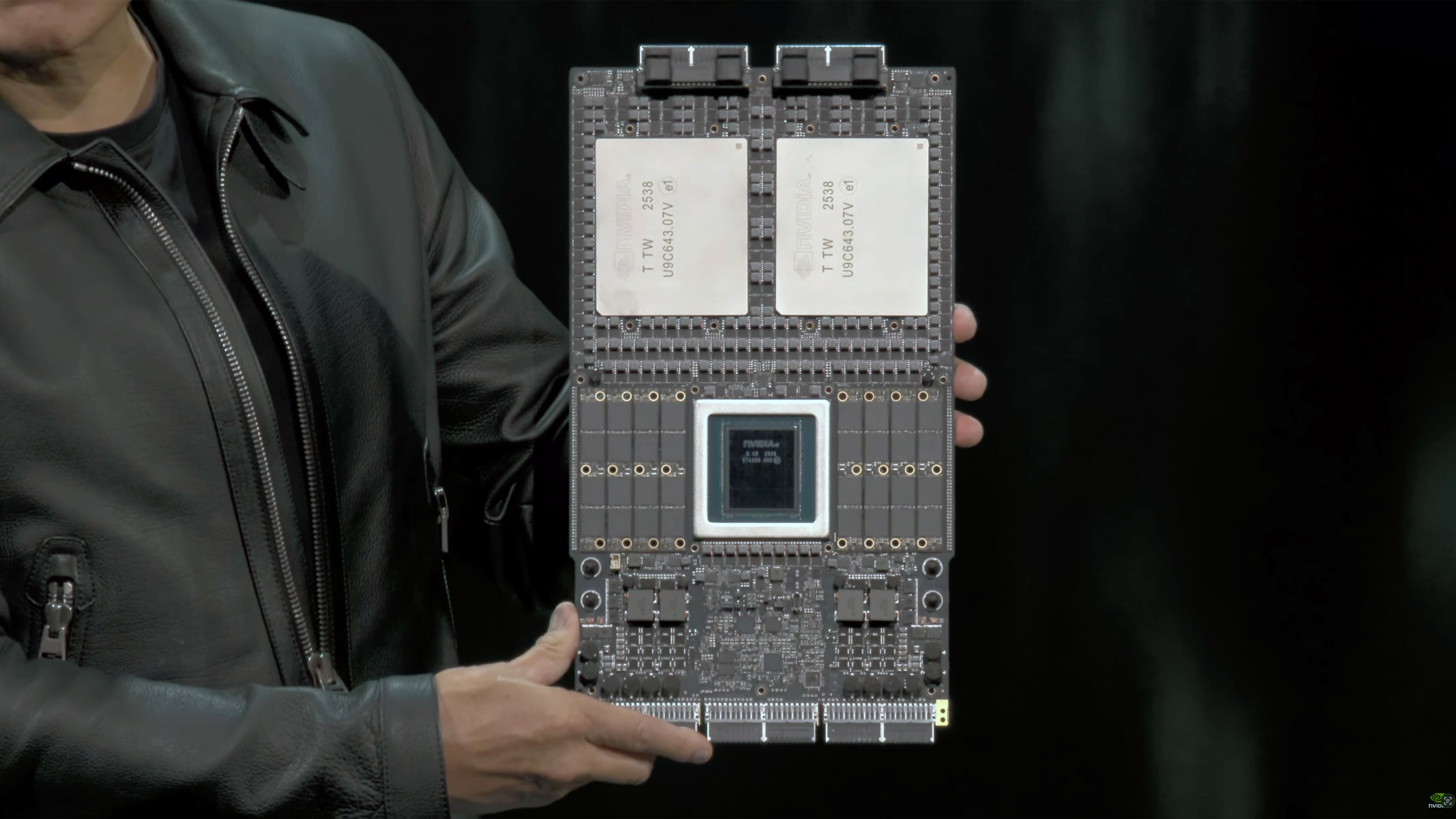

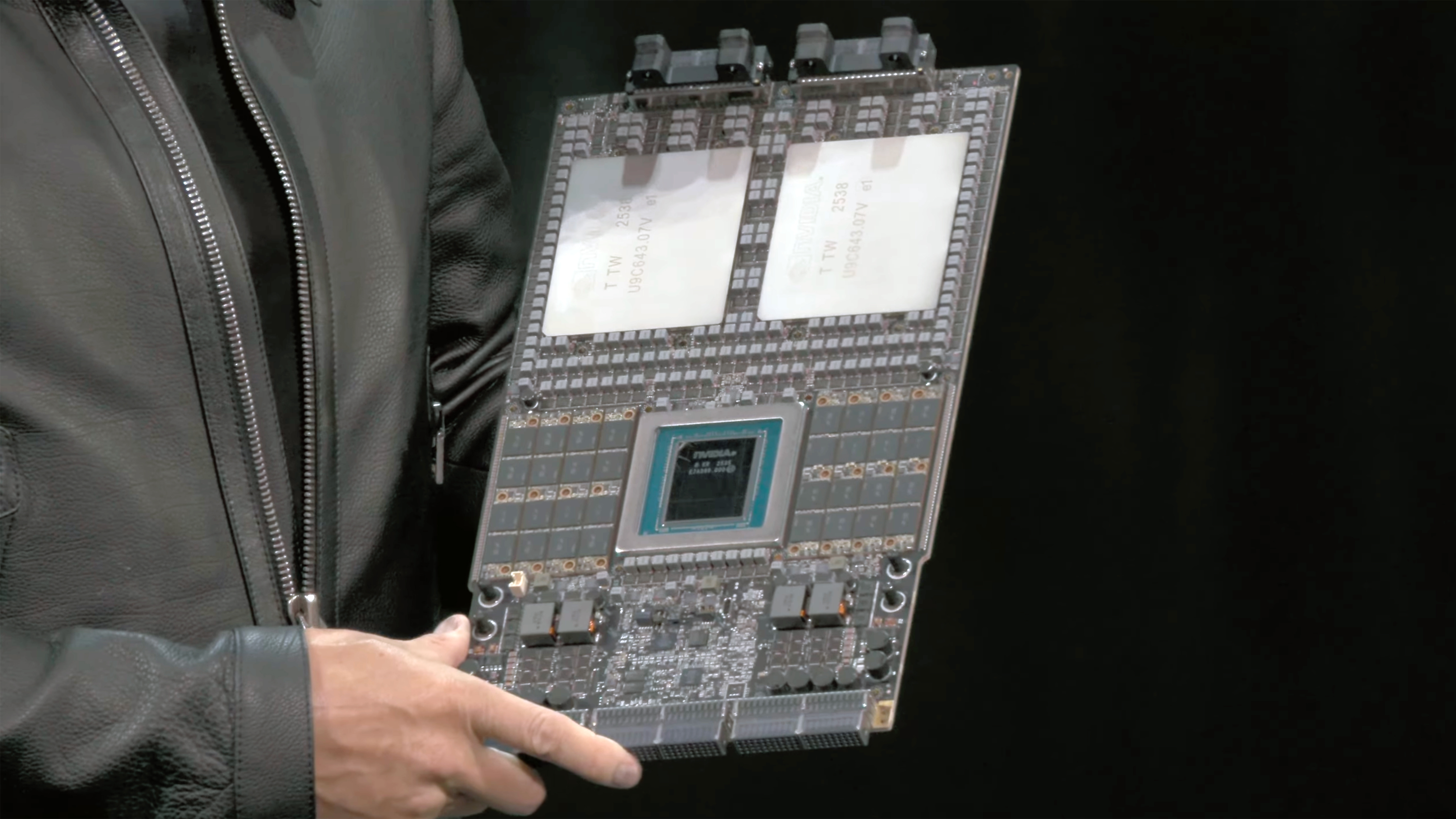

Live images of Nvidia's Vera Rubin Superchip.

At its GTC 2025 keynote in DC on Tuesday, Nvidia unveiled its next-generation Vera Rubin Superchip, comprising two Rubin GPUs for AI and HPC as well as its custom 88-core Vera CPU. All three components will be in production this time next year, Nvidia says.

"This is the next generation Rubin," said Jensen Huang, chief executive of Nvidia, at GTC. "While we are shipping GB300, we are preparing Rubin to be in production this time next year, maybe slightly earlier. […] This is just an incredibly beautiful computer. So, this is amazing, this is 100 PetaFLOPS [of FP4 performance for AI]."

Indeed, Nvidia's Superchips tend to look more like a motherboard (on an extremely thick PCB) than a 'chip', as they carry a general-purpose custom CPU and two high-performance compute GPUs for AI and HPC workloads. The Vera Rubin Superchip is no exception: the board carries Nvidia's next-generation 88-core Vera CPU surrounded by SOCAMM2 memory modules carrying LPDDR memory and two Rubin GPUs covered with two large rectangular aluminum heat spreaders.

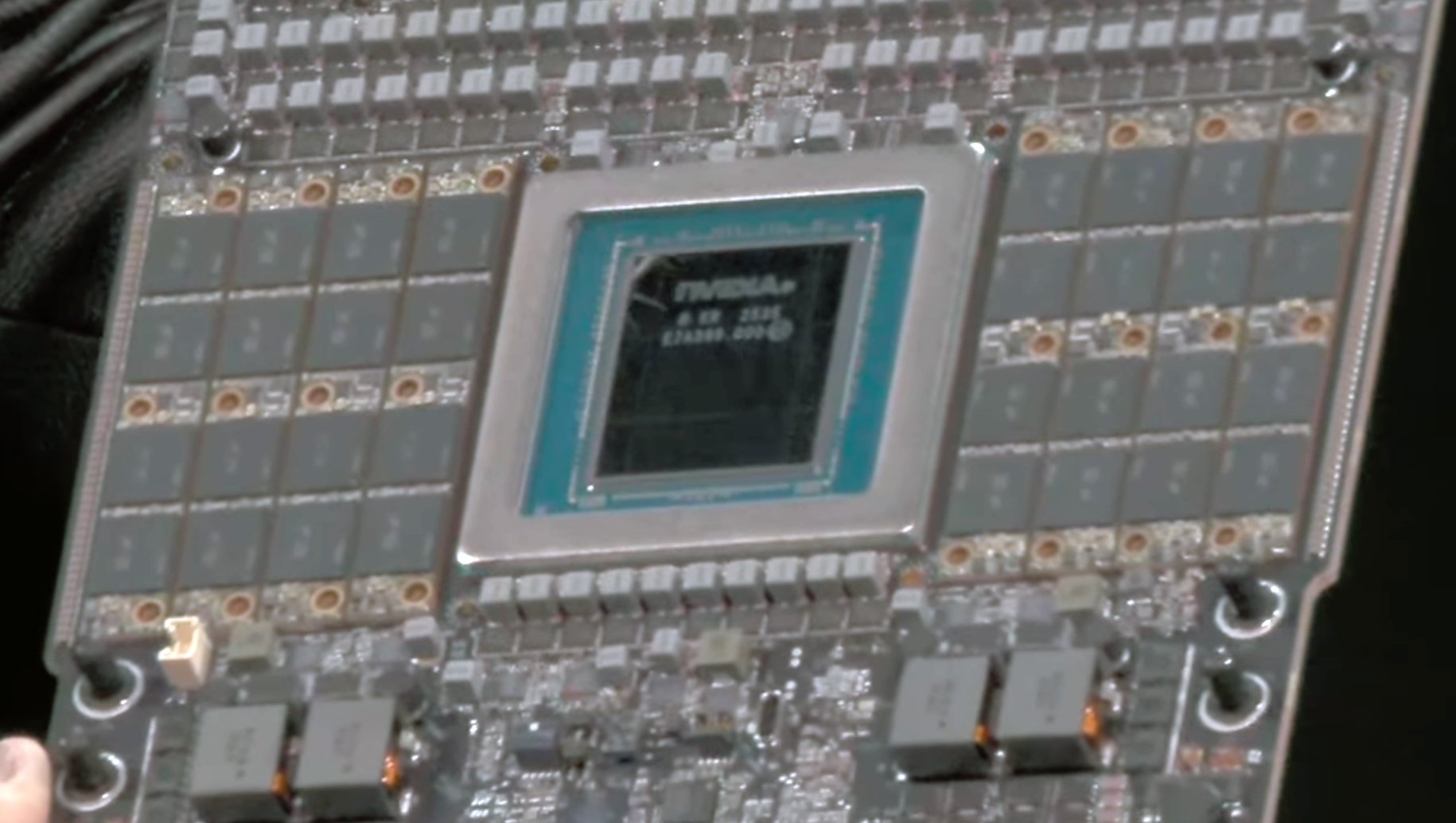

Markings on the Rubin GPUs say that they were packaged in Taiwan in the 38th week of 2025 (mid-September), which shows that the company has been working with the new processor for some time now. The heatspreader is about the same size as that of Blackwell processors, so we cannot determine the exact size of the GPU packaging or the die sizes of the compute chiplets. Meanwhile, the Vera CPU does not seem to be monolithic, as it has visible internal seams, implying that we are dealing with a multi-chiplet design.
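As a quick check of the date arithmetic, the sketch below converts a year and week number into calendar dates. It assumes the package marking follows ISO 8601 week numbering (weeks start on Monday), which the article does not confirm; the helper name `iso_week_range` is made up for illustration.

```python
from datetime import date, timedelta


def iso_week_range(year: int, week: int) -> tuple[date, date]:
    """Return the Monday and Sunday of the given ISO 8601 week.

    Assumption: the date code on the package uses ISO week numbering.
    """
    monday = date.fromisocalendar(year, week, 1)
    return monday, monday + timedelta(days=6)


start, end = iso_week_range(2025, 38)
print(start.isoformat(), end.isoformat())
```

Under that assumption, week 38 of 2025 runs from September 15 to September 21.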

A picture of the board that Nvidia demonstrated once again reveals that each Rubin GPU comprises two compute chiplets, eight HBM4 memory stacks, and one or two I/O chiplets. This time around, Nvidia showed the Vera CPU with a very distinct I/O chiplet located next to it. The image also shows green features coming from the I/O pads of the CPU die, the purpose of which is unknown. Perhaps some of Vera's I/O capabilities are enabled by external chiplets located beneath the CPU itself. Of course, we are speculating, but there is clearly something intriguing about the Vera processor.

Interestingly, the Vera Rubin Superchip board no longer has industry-standard slots for cabled connectors. Instead, there are two NVLink backplane connectors on top to connect the GPUs to the NVLink switch, enabling scale-up within a rack, and three connectors on the bottom edge for power, PCIe, CXL, and so on.

In general, Nvidia's Vera Rubin Superchip board looks quite baked, so expect the unit to ship sometime in late 2026 and get deployed by early 2027.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user Reply

The article said: "Nvidia's Superchips tend to look more like a motherboard"

Well, their SXM modules are daughter cards, not motherboards. Also, sometimes called mezzanine cards. They fit into spots on a specially-designed motherboard.

However, the connectors at either edge of this board + its size are suggesting to me that it probably fits into a blade-server style chassis.

The article said: "the board carries Nvidia's next-generation 88-core Vera CPU surrounded by SOCAMM2 memory modules carrying LPDDR memory and two Rubin GPUs covered with two large rectangular aluminum heat spreaders."

In spite of the dull finish, I really doubt that's aluminum.

-

JRStern Reply

> quite baked,

LOL

HBM4? I thought they'd be up to HBM42 by then, is the HBM pace slowing down?

I notice the new Qualcomm entry is managing to not use HBM, I guess that's part of what makes it an "inference chip".

Y'know, in normal times, in the marketing textbooks, you're not supposed to show future products, it suppresses demand for current products. Especially when you have just about zero competitors nipping at your heels (Qualcomm and Broadcom and AMD and Intel and Huawei aside). So apparently these are not normal times.

I'm not sure what NVidia can find to make Rubin that much faster, Blackwell got FP4 that allowed semi-absurd claims of speedup, otherwise it would have been maybe 10% faster at the processor level. I hear there are more storage optimizations coming to move data on and off the board faster, perhaps something in that area.

-

bit_user Reply

JRStern said: "Y'know, in normal times, in the marketing textbooks, you're not supposed to show future products, it suppresses demand for current products."

They sell to a captive market, which will take what it can get, when it can get it.

JRStern said: "Especially when you have just about zero competitors nipping at your heels (Qualcomm and Broadcom and AMD and Intel and Huawei aside). So apparently these are not normal times."

They don't sell shrink-wrapped stuff for consumers, like Apple. These products have infrastructure requirements they need to let partners and customers know about, well in advance. That means their datacenter products will never be top secret until they're right about to start shipping.

They're also trying to sell investors on the notion that they're continuing to make progress at a pace that justifies their valuation. Jensen needs to keep the hype train going for as long as he can. That means continuing to show and announce new things, even if they're not shipping to customers for a while, yet.

JRStern said: "I'm not sure what NVidia can find to make Rubin that much faster, Blackwell got FP4 that allowed semi-absurd claims of speedup, otherwise it would have been maybe 10% faster at the processor level."

I think they'll break CUDA compatibility, at some stage, because it's actually holding them back.

The other thing I've been expecting to see is for them to drop fp64 support, which is wasting a bunch of die space. However, two recently-announced HPC contracts based on Vera/Rubin indicate that it's not happening in Rubin:

https://www.tomshardware.com/tech-industry/supercomputers/nvidia-unveils-vera-rubin-supercomputers-for-los-alamos-national-laboratory-announcement-comes-on-heels-of-amds-recent-supercomputer-wins

Rubin is being fabbed on TSMC N3P, which is probably the main thing they're relying on, for more performance.

-

George³ Reply

JRStern said: "HBM4? I thought they'd be up to HBM42 by then, is the HBM pace slowing down?"

No. Just on time. All supply chain deliveries on this level are synchronised.

-

JRStern Reply

bit_user said: "The other thing I've been expecting to see is for them to drop fp64 support, which is wasting a bunch of die space."

If the product is used only for LLMs, then perhaps. I wonder if they've discussed it publicly.

But then they'd need a separate model that still has fp64 for most scientific uses. Whether anyone uses fp64 for graphics, I dunno, not usually I'd guess. For neo-crypto ... also probably not.

I actually wonder if they'd like to go for an fp2, I mean, twice as fast as fp4, lol. Fp1 I presume they already have, since it's just bool, unless they want to make some weird function where it's vectorized 1/0. FP1, lol.

(1980's work attempting to assign Bayesian certainty or other weights to expert system rules generally failed, found binary switches generally did just as good a job as any fancier system anyone could think of)

-

bit_user Reply

JRStern said: "But then they'd need a separate model that still has fp64 for most scientific uses."

Why? It's such a niche market and supporting it is actively hurting the value of their products for AI customers. Nvidia should just let it go. Otherwise, I guess they should make a dedicated fp64 accelerator for it.

Maybe both will come at the same time. When they drop CUDA from their AI products, that would probably be the best time to drop fp64 from the training chips.

JRStern said: "Whether anyone uses fp64 for graphics, I dunno, not usually I'd guess."

Actually, yes. Graphics needs a certain amount of fp64, but not a lot. That's why client GPUs typically implement it at like 1:32 or so.

Intel dropped hardware fp64 from Xe, only to bring it back in Xe2. That's further proof that you do need some, for graphics, and emulation just doesn't cut it.

JRStern said: "I actually wonder if they'd like to go for an fp2, I mean, twice as fast as fp4, lol. Fp1 I presume they already have, since it's just bool, unless they want to make some weird function where it's vectorized 1/0. FP1, lol."

People have dabbled in binary neural nets (and I don't mean spiking), but it never really seemed to catch on. What AMD and Nvidia are now onto is block-oriented compression schemes, for the weights.

https://developer.nvidia.com/blog/introducing-nvfp4-for-efficient-and-accurate-low-precision-inference/
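For readers unfamiliar with block-oriented weight compression, the following is a simplified Python sketch of the general idea: each small block of weights shares one scale factor, and every weight is stored as a 4-bit value relative to that scale. It is not Nvidia's implementation. The 16-element block size and the E2M1 value grid are assumptions based on the NVFP4 description in the linked post, the shared scale is kept as a plain Python float instead of a compact hardware format, and all function names are hypothetical.

```python
# Magnitudes representable by a 4-bit E2M1 float (sign handled separately).
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
BLOCK_SIZE = 16  # assumption: NVFP4-style 16-element blocks


def quantize_block(block):
    """Return (scale, codes): one shared scale plus signed E2M1 grid values."""
    peak = max(abs(w) for w in block)
    # Map the largest magnitude in the block onto the largest grid value.
    scale = peak / E2M1_VALUES[-1] if peak > 0 else 1.0
    codes = []
    for w in block:
        # Pick the grid value nearest to the scaled magnitude.
        magnitude = min(E2M1_VALUES, key=lambda v: abs(abs(w) / scale - v))
        codes.append(magnitude if w >= 0 else -magnitude)
    return scale, codes


def dequantize_block(scale, codes):
    """Reconstruct approximate weights from a block's scale and codes."""
    return [scale * c for c in codes]


def quantize(weights):
    """Split a flat weight list into blocks and quantize each independently."""
    return [
        quantize_block(weights[i:i + BLOCK_SIZE])
        for i in range(0, len(weights), BLOCK_SIZE)
    ]
```

Because the scale is chosen per block, one outlier weight only degrades the precision of the values in its own block, not of the whole tensor.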

I've got to say, I expected this to come a lot sooner. It has echoes of how they do texture compression, and the texture engines would seem to be a natural place to implement weight decompression.

-

Tanakoi Reply

bit_user said: "Actually, yes. Graphics needs a certain amount of fp64, but not a lot...Intel dropped hardware fp64 from Xe, only to bring it back in Xe2. That's further proof that you do need some, for graphics..."

Circular logic. I have quite a bit of simulation software that requires this precision, but unless you want to define something like a snippet of physics code in a game as 'graphics', I can't imagine anything else would qualify. Intel brought back FP64 to at least pretend Battlemage could compete in the HPC sector.

bit_user said: "They're also trying to sell investors on the notion that they're continuing to make progress..."

With a $5T valuation and upcoming products like this one, they're not having to 'sell investors' on anything. And with the amount of stock Jensen and his allies own, they don't need to chase short-term valuations to avoid a hostile takeover.

bit_user said: "...The other thing I've been expecting to see is for them to drop fp64 support."

It'll never happen. What might happen, though, is spinning off FP64 capable processors to a separate product line, with their AI specific model optimized for FP8/16.

-

JTWrenn Reply

JRStern said: "Y'know, in normal times, in the marketing textbooks, you're not supposed to show future products, it suppresses demand for current products. Especially when you have just about zero competitors nipping at your heels (Qualcomm and Broadcom and AMD and Intel and Huawei aside). So apparently these are not normal times."

This is marketing to investors, not marketing of the product itself. They want to keep the hype train rolling and are not worried about selling everything because they have sold out of everything for so long.

-

thestryker Reply

JRStern said: "Y'know, in normal times, in the marketing textbooks, you're not supposed to show future products, it suppresses demand for current products. Especially when you have just about zero competitors nipping at your heels (Qualcomm and Broadcom and AMD and Intel and Huawei aside). So apparently these are not normal times."

Word is that if a company was putting in orders for nvidia AI hardware today, it's a year out for delivery. When the delivery schedule is so far behind, announcing products this early also serves to entice customers buying now to put in orders early for what's next, but it also means they can't afford to just wait to buy.

-

bit_user Reply

Tanakoi said: "Circular logic."

LOL, wut?

Tanakoi said: "unless you want to define something like a snippet of physics code in a game as 'graphics', I can't imagine anything else would qualify."

I can't say definitively why it's needed, but it seems to me that it's probably used in things like setting up viewing transforms. Transforming from homogeneous to normalized device coordinates seems like an especially fraught stage. Also, dealing in world coordinates can pose precision issues in large worlds, since epsilon grows pretty big for fp32 as you reach larger scales. Lastly, matrix inversion is another case where you'd definitely want it, due to numerical stability issues.

Edit: Here's a video interview with a game developer, explaining why they took the trouble of porting their CryEngine fork to use 64-bit world coordinates:

https://www.youtube.com/watch?v=OB_AI9ukSp8

One choice quote I picked up on: "There was stuff in the engine that was already 64-bit. What we really needed was physics and positioning to be changed."

It would be interesting to know where the engine was already using it.
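To illustrate the fp32 precision point about large world coordinates, here is a minimal Python sketch that measures the gap between adjacent fp32 values at different distances from the origin. The helper `float32_ulp` and the sample distances are illustrative assumptions, not taken from any engine, and the helper is only meant for positive finite inputs.

```python
import struct


def float32_ulp(x: float) -> float:
    """Gap between x (rounded to fp32) and the next larger fp32 value.

    Only valid for positive, finite x.
    """
    # Round x to fp32 and reinterpret its bit pattern as an unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounded = struct.unpack("<f", struct.pack("<I", bits))[0]
    # Adding 1 to the bit pattern yields the next representable fp32 value.
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - rounded


for distance in (1.0, 1_000.0, 1_000_000.0, 10_000_000.0):
    print(f"{distance:>12,.0f} units -> fp32 step {float32_ulp(distance):g}")
```

At 1,000,000 units from the origin the smallest representable step is 0.0625 units, and at 10,000,000 units it is a full 1.0 unit, so positions stored in fp32 get visibly coarse in very large worlds.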

Tanakoi said: "Intel brought back FP64 to at least pretend Battlemage could compete in the HPC sector."

Nope. For HPC, you'd need vector fp64. We haven't seen a client GPU with vector fp64 support since 2019's Radeon VII. Battlemage only has scalar fp64. Here, you can clearly see that their implementation is on par with other client GPUs:

Source: https://www.phoronix.com/review/intel-arc-b580-gpu-compute

And it's not only their Xe2 dGPU that has it - the iGPUs have it, too.

Source: https://www.phoronix.com/review/intel-xe2-lunarlake-compute

Tanakoi said: "With a $5T valuation and upcoming products like this one, they're not having to 'sell investors' on anything."

Sure they do. They need to keep investors in their seats, and convince them not to get up and head for the exits! Over and above that, they need to motivate new share purchases, to maintain some momentum in the share price.

Tanakoi said: "It'll never happen. What might happen, though, is spinning off FP64 capable processors to a separate product line, with their AI specific model optimized for FP8/16."

Making a HPC-oriented accelerator is one scenario I mentioned.

But, how big is the HPC sector, really? When these chips are costing $Billions to design and bring to market, could they really even justify making a dedicated chip just for HPC? Would they make a chip they might lose money on, just to "stay in the game"? Nvidia can only juggle so many balls at a time. They've been struggling in pretty much all major product introductions they've done over the past year, from the server Blackwell to RTX 5000 series, N1/N1X, and most recently Spark.