New 3D-stacked memory tech seeks to dethrone HBM for AI inference — d-Matrix claims 3DIMC will be 10x faster and 10x more efficient

HBM may soon be edged out in some use cases

Memory startup d-Matrix claims its 3D-stacked memory will be up to 10x faster and up to 10x more efficient than HBM. The company's 3D digital in-memory compute (3DIMC) technology is its bid for a memory type purpose-built for AI inference.

High-bandwidth memory, or HBM, has become an essential part of AI and high-performance computing. HBM stacks memory dies on top of each other to connect them more efficiently and deliver higher memory performance. However, while its popularity and use continue to expand, HBM may not be the best solution for every computational task; it is key for AI training, but it can be outmatched in AI inference.

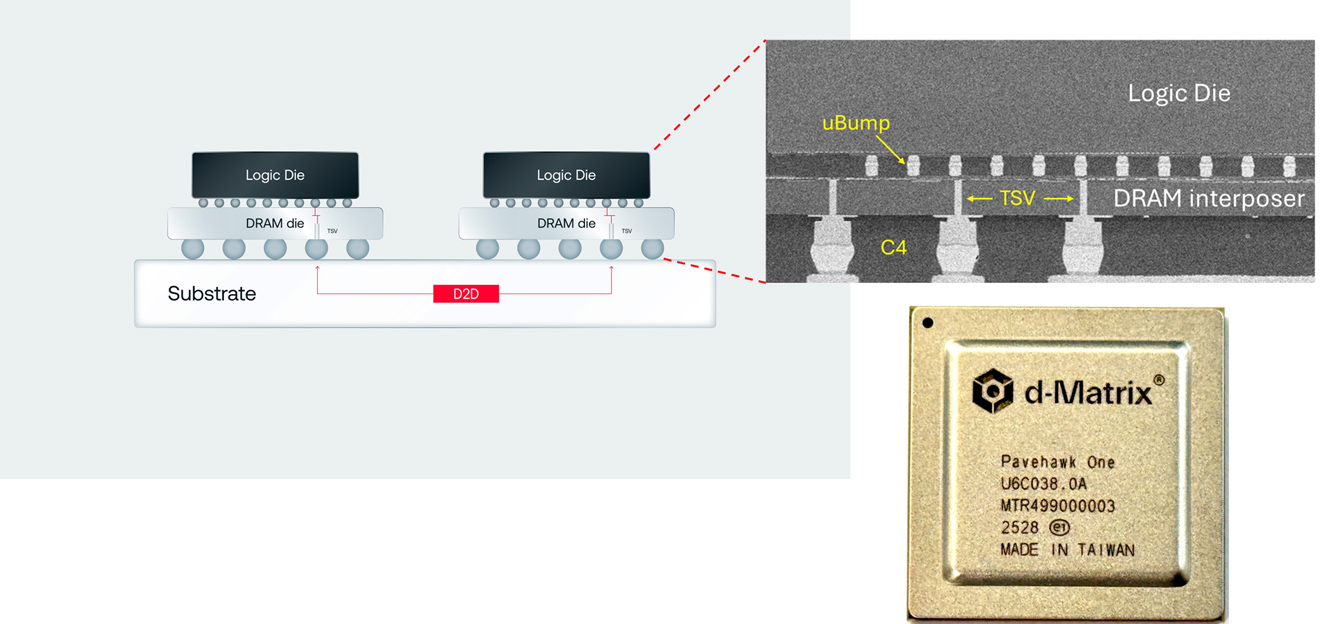

To this end, d-Matrix has just brought its d-Matrix Pavehawk 3DIMC silicon online in the lab. Digital in-memory compute hardware currently looks like LPDDR5 memory dies with DIMC chiplets stacked on top, attached via an interposer. This setup allows the DIMC hardware to perform computations within the memory itself. The DIMC logic dies are tuned for matrix-vector multiplication, a common calculation used by transformer-based AI models in operation.
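To see why matrix-vector multiplication tends to be limited by memory rather than raw compute, consider a rough sketch of the arithmetic. The Python below is an illustration only and does not describe d-Matrix's hardware: the function names, the 4096 x 4096 matrix size, and the 16-bit values are all assumptions chosen for the example. It counts roughly two operations per weight read, which works out to about one operation per byte moved.

```python
def matvec(weights, vector):
    """Plain-Python matrix-vector product: one multiply-add per weight."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


def arithmetic_intensity(rows, cols, bytes_per_value=2):
    """FLOPs per byte moved for y = W @ x.

    Assumes every value (weights, input, output) is read or written
    exactly once and stored in bytes_per_value bytes (2 = 16-bit).
    """
    flops = 2 * rows * cols  # one multiply and one add per weight
    bytes_moved = bytes_per_value * (rows * cols + cols + rows)  # W, x, y
    return flops / bytes_moved


# Tiny worked example of the operation itself.
y = matvec([[1, 2], [3, 4]], [10, 1])

# Hypothetical layer size, picked only for illustration.
intensity = arithmetic_intensity(4096, 4096)
print(y, round(intensity, 3))
```

Because every weight is used only once per input vector, there is little opportunity to reuse data after it has been fetched, which is the motivation for moving the compute closer to the memory.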

"We believe the future of AI inference depends on rethinking not just compute, but memory itself," said Sid Sheth, founder and CEO of d-Matrix, in a recent LinkedIn post. "AI inference is bottlenecked by memory, not just FLOPs. Models are growing fast, and traditional HBM memory systems are getting very costly, power hungry, and bandwidth limited. 3DIMC changes the game. By stacking memory in three dimensions and bringing it into tighter integration with compute, we dramatically reduce latency, improve bandwidth, and unlock new efficiency gains."

With Pavehawk currently running in d-Matrix's labs, the firm is already looking ahead to its next generation, Raptor. In a company blog post as well as Sheth's LinkedIn announcement, the firm claims that Raptor, also built on a chiplet design, will be the one to outpace HBM by 10x in inference tasks while using 90% less power.

The d-Matrix DIMC project follows a pattern we've seen from recent startups and tech theorists: the idea that distinct computational tasks, such as AI training and AI inference, should each have hardware designed to handle that task efficiently. d-Matrix holds that AI inference, which now makes up 50% of some hyperscalers' AI workloads, is different enough from training that it deserves a memory type built for it.

A replacement for HBM is also attractive from a financial standpoint. HBM is produced by only a handful of companies worldwide, including SK hynix, Samsung, and Micron, and it is anything but cheap. SK hynix recently estimated that the HBM market will grow by 30% every year until 2030, with price tags rising to follow demand. An alternative to this giant may be attractive to thrifty AI buyers, though a memory made exclusively for certain workflows and calculations may also seem a bit too narrow for bubble-fearing potential clients.


Sunny Grimm is a contributing writer for Tom's Hardware. He has been building and breaking computers since 2017, serving as the resident youngster at Tom's. From APUs to RGB, Sunny has a handle on all the latest tech news.


ejolson: What's the difference between a processor with built-in memory versus memory with a built-in processor?