SK hynix reveals DRAM development roadmap through 2031 — DDR6, GDDR8, LPDDR6, and 3D DRAM incoming

No, HBF will not replace DRAM.

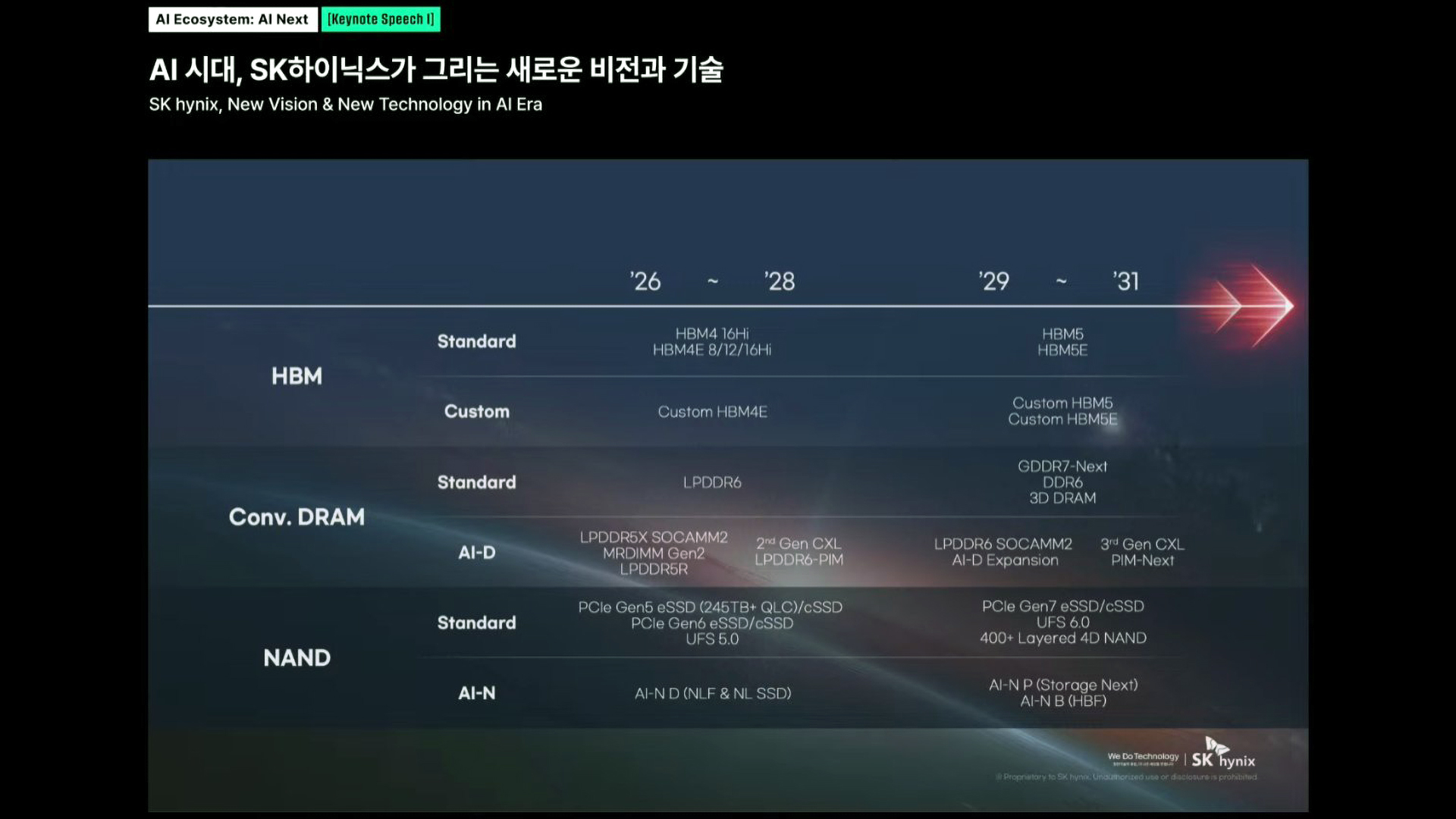

SK hynix has published its DRAM roadmap at its SK AI Summit 2025. While the plans are displayed in a very general way and do not reveal important specifics, they still show the direction of DRAM technology evolution and approximate timelines of the emergence of new technologies. Naturally, since SK hynix demonstrated the roadmap at an AI event, it has a clear bias towards AI servers.

Conventional types of DRAMs — such as DDR, GDDR, and LPDDR — will continue to serve the memory needs of AI servers for the foreseeable future, albeit for different applications. Meanwhile, HBM memory will continue to serve the bandwidth-hungry AI and HPC processors. SK hynix lists 3D DRAM as something that is expected to come in 2030, but details are scant, so we can only speculate what the technology will offer at its inception early next decade.

DDR5 will continue to offer a balance between cost, density, and performance for years to come, albeit in newer form factors such as MRDIMM Gen2 modules, which are set to arrive in 2026 – 2027 and support data transfer rates of 12,800 MT/s, and 2nd Generation CXL memory expanders, which are expected to hit the market in 2027 – 2028. As reported multiple times, DDR6 will only arrive in 2029 or 2030; before that, DDR5 will keep evolving.
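To put the 12,800 MT/s figure in perspective, theoretical peak bandwidth is simply the transfer rate multiplied by the width of the data bus. The sketch below assumes a 64-bit data path per module (ECC bits excluded), which is the usual DDR5 DIMM arrangement but is not stated in SK hynix's roadmap; the function name is illustrative.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in decimal GB/s for one module.

    transfer_rate_mts: data rate in mega-transfers per second.
    bus_width_bits: data bus width (assumed 64 bits, ECC excluded).
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Compare a common DDR5 speed grade with the MRDIMM Gen2 rate from the roadmap.
for name, rate in [("DDR5-5600", 5600), ("MRDIMM Gen2 (12,800 MT/s)", 12800)]:
    print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s")
```

Under these assumptions, 12,800 MT/s works out to 102.4 GB/s per module, versus 44.8 GB/s for DDR5-5600. Sustained bandwidth in practice is lower than this theoretical ceiling.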

LPDDR6, which now features a host of data center-oriented capabilities at the silicon level, is projected to combine high capacity, high performance, and lower power consumption towards the end of the decade. SK hynix expects SOCAMM2 modules based on LPDDR6 to arrive in the late 2020s, possibly when Nvidia rolls out its post-Vera CPUs, which will need a new memory subsystem. Interestingly, SK hynix plans to release LPDDR6-PIM (processing-in-memory) solutions for specialized applications sometime in 2028.

As for GDDR7, it will remain a niche solution for inference accelerators, such as Nvidia's Rubin CPX, as it combines very high performance and relatively low cost (compared to HBM), but lacks much-needed capacity. SK hynix lists 'GDDR7-Next,' which probably means GDDR8, as the company is not known for developing Nvidia-specific solutions, unlike Micron, which has developed GDDR5X and GDDR6X for Nvidia.

The highest-performing DRAM solutions from SK hynix in the coming years will be HBM4, HBM4E, HBM5, and HBM5E, which will be released on a cadence of 1.5 to 2 years from now through 2031. Interestingly, it does not look like HBM5, which will presumably power Nvidia's Feynman (set to land in late 2028), will show up until 2029 or even 2030. For customers that need customized memory solutions, SK hynix will also offer custom HBM4E, HBM5, and HBM5E modules, though it remains to be seen how its customers plan to customize such devices.

As for high-bandwidth flash (HBF) products that promise to offer performance comparable to HBM and capacity comparable to 3D NAND, SK hynix does not expect them to arrive before 2030, as the company must develop all-new media as well as agree on the final specification with other makers of NAND memory, particularly SanDisk, which proposed the technology earlier this year.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

usertests

I can't wait for 3D DRAM so that 128 GB looks like the new 8 GB.

Actually, I can wait 8 years.

bit_user

usertests said: "I can't wait for 3D DRAM so that 128 GB looks like the new 8 GB. Actually, I can wait 8 years."

I often wonder at just how it is that we're using so much RAM. I get that higher speeds require larger buffers, more threads mean more stacks, etc. Still, modern machines really do have an awful lot of RAM, and yet software does seem to gobble it up!

My current desktop has 64 GiB (2x 32), not that I realistically think I'll ever need that much.

usertests

bit_user said: "I often wonder at just how it is that we're using so much RAM. I get that higher speeds require larger buffers, more threads mean more stacks, etc. Still, modern machines really do have an awful lot of RAM, and yet software does seem to gobble it up! My current desktop has 64 GiB (2x 32), not that I realistically think I'll ever need that much."

There's no downside to caching as much as possible, as long as you don't spill over into a page file on the SSD/HDD. Right now I have ~24 GB used, ~35 GB cached. Mostly from some browser windows. Usage may be high but we want the whole thing filled somehow.

I think we're in a good place (as long as you bought the dip on memory). We have specialized distros (e.g. Batocera or LibreELEC) that can scale down to 1-2 GB, most people can cope with 8-16 GB on modern desktops, but you get a better experience with 32-64 GB.

Next-gen consoles could drive PC gaming memory usage up, but only with games not supported on current-gen. It could be 5+ years until that happens. 32 GB RAM and 16 GB VRAM will likely be fine at a minimum (1080p), with 64 GB RAM and 24-36 GB VRAM for enthusiasts (4K). Adoption of AI features in gaming could drive up memory requirements of one of these pools, but it's very uncertain right now.

Part of the promise of 3D DRAM is not just capacity increases, but $/GB decreases. If everyone ends up with large amounts of cheap RAM, LLMs and other AI models are an obvious place to waste it on. Run a 70 billion parameter model on top of everything else the desktop is doing? Maybe you want 128 GB. The sky's the limit. If you can get 512 GB for $100 in the mid-2030s, run a 300B model instead.
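As a rough check on those capacity figures, the memory needed just to hold a model's weights is the parameter count times the bytes per parameter. The sketch below is a back-of-the-envelope estimate only: the 16-, 8-, and 4-bit widths are assumed quantization levels not mentioned in the comment, the function name is illustrative, and the KV cache, activations, and runtime overhead are ignored.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Decimal gigabytes needed to hold the weights alone.

    Ignores KV cache, activations, and runtime overhead, so real usage
    will be higher than this figure.
    """
    total_bits = params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


# Model sizes from the comment; bit widths are assumed quantization levels.
for params in (70, 300):
    for bits in (16, 8, 4):
        size = weight_footprint_gb(params, bits)
        print(f"{params}B parameters at {bits}-bit: {size:.0f} GB")
```

On these assumptions a 70B model needs about 140 GB at 16-bit, 70 GB at 8-bit, and 35 GB at 4-bit, while a 300B model at 4-bit needs about 150 GB.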

If you don't use AI, 64 GB could be fine for the next 15 years. But that's a long time in the tech industry. Some of us will be dead too, preemptive RIP.

bit_user

usertests said: "I think we're in a good place (as long as you bought the dip on memory)."

Not quite. I got ECC memory and grabbed it when it was on the rise, in June of last year. I paid $295 for 64 GiB of DDR5-5600 (Kingston).

The seller is currently out-of-stock, but now has it listed for $620. I guess I didn't do as badly as I thought, at the time?

usertests said: "If you can get 512 GB for $100 in the mid-2030s, run a 300B model instead."

You will need lots of bandwidth, though. I guess they could do some sort of on-package memory with a wide interface, sort of like Apple's M-series.
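The bandwidth point can be made concrete with a common rule of thumb: when generating one token at a time with a dense model, roughly all of the weights are read once per token, so memory bandwidth divided by weight size gives an upper bound on tokens per second. The sketch below applies that rule; the 150 GB weight size (a 300B model at an assumed 4 bits per weight), the 89.6 GB/s dual-channel DDR5-5600 figure, and the 800 GB/s "wide interface" figure are illustrative assumptions, not numbers from the thread.

```python
def decode_tokens_per_second(bandwidth_gbs: float, weights_gb: float) -> float:
    """Upper bound on single-stream decode speed for a dense model.

    Assumes every weight is read once per generated token and that
    memory bandwidth is the only bottleneck (a simplification).
    """
    if weights_gb <= 0:
        raise ValueError("weights_gb must be positive")
    return bandwidth_gbs / weights_gb


weights_gb = 150.0  # assumed: 300B parameters at 4 bits per weight
for label, bandwidth in [
    ("dual-channel DDR5-5600 (89.6 GB/s peak)", 89.6),
    ("hypothetical wide on-package interface (800 GB/s)", 800.0),
]:
    rate = decode_tokens_per_second(bandwidth, weights_gb)
    print(f"{label}: at most {rate:.2f} tokens/s")
```

Even at theoretical peak, a conventional dual-channel desktop would stay below one token per second on a model of that size, which is why capacity alone does not make such models practical.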

I also think 3D DRAM won't reduce prices as much as you think, since each die will take a lot longer to make.

usertests said: "If you don't use AI, 64 GB could be fine for the next 15 years. But that's a long time in the tech industry. Some of us will be dead too, preemptive RIP."

16 GB lasted me a dozen years, in my last PC. I thought it was too much, when I bought it, and I was almost right.

usertests

bit_user said: "I also think 3D DRAM won't reduce prices as much as you think, since each die will take a lot longer to make."

It's like 3D NAND, but a harder problem. I am confident it will lower cost per bit just as 3D NAND did.

Samsung has done 16 layers in the lab. They might commercialize at 16-32 layers and slowly climb from there.

https://www.tomshardware.com/pc-components/dram/samsung-outlines-plans-for-3d-dram-which-will-come-in-the-second-half-of-the-decade

https://www.tomshardware.com/pc-components/ram/samsung-reveals-16-layer-3d-dram-plans-with-vct-dram-as-a-stepping-stone-imw-2024-details-the-future-of-compact-higher-density-ram

https://www.prnewswire.com/news-releases/samsung-reportedly-achieves-technical-breakthrough-stacking-3d-dram-to-16-layers-302164563.html

abufrejoval

For me VMs have been the main RAM capacity drivers, yet I distinctly remember running Windows NT 3.51 or 4.0 with VMware 1.0 around 1999 and some Linux inside a VM on a 32MB Socket 7 K6 system (the max capacity on most Socket 7 boards).

I've pretty near always simply put the maximum RAM in all my systems since, at least when the price curve wasn't exponential per DIMM capacity. And I would sometimes add extra DIMMs later, making sure I'd be able to by choosing modules accordingly.

These days my workstations typically have 128GB, µ-servers 64GB and notebooks the maximum I can fit (16-64GB).

Is that necessary? Mostly not.

Is it useful? Definitely, when I run e.g. a nested-virtualization 3-5 node Proxmox/Ceph cluster for functional QA, which isn't all that often, but that's part of what pays the bills.

Where I tend to draw the line is that I really don't go beyond entry level hardware: it's classic PC stuff, never went with a dual socket in the home-lab or any CPU or mainboard that was near or beyond €1000 when I bought it. Because it doesn't run production loads in the home-lab, it's all about functional and failure testing: I want to save time, not go broke.

A Xeon or two would have been way too expensive when new, but those I bought when they became available cheap from China: 128GB of ECC RAM were then split into two chunks for better digestion, X99 boards were cheap!

Mostly prices for the extra RAM just were less than what I make in an hour or perhaps a few, so long deliberations on RAM capacity made no sense. And it's somewhat similar now with 8 vs 16 cores on Ryzens: not spending much time thinking about it almost closes the price gap! At least when you don't buy on launch day.

And I simply don't need top clocks, because gaming only becomes important when I pass my machines on to my kids. At that point they are still tons better than anything they could afford on their own, so few complaints there.

I'm writing this on one of my 24x7 workstations, 128GB ECC and a 16-core Ryzen.

It shows 80GB used... without a single VM running.

And no, it's not a memory leak or a huge application: Windows has just started to behave a lot like Linux or Solaris: they'll keep anything they come across in cache, because that is just a tad better than leaving it unused in case somebody might want that data again.

It will purge that once somebody else has a better use for that RAM, but you can also force those buffers empty, e.g. with Sysinternals RAMMap.
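On Linux, the split between memory that is truly in use and memory the kernel is merely holding as cache can be read from `/proc/meminfo`. The sketch below parses that format and separates the two; the sample values are made up for illustration, and the simple "used" formula (total minus free minus buffers and page cache) is one common approximation rather than the exact calculation of any particular tool. Windows exposes the equivalent counters through other interfaces.

```python
# Hypothetical /proc/meminfo excerpt; values are in KiB (the file labels them "kB").
SAMPLE_MEMINFO = """\
MemTotal:       65536000 kB
MemFree:         4096000 kB
MemAvailable:   40960000 kB
Buffers:          512000 kB
Cached:         36864000 kB
"""


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse 'Key:   value kB' lines into a {key: value} mapping."""
    fields = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        if rest.strip():
            fields[key.strip()] = int(rest.split()[0])
    return fields


def summarize(fields: dict[str, int]) -> dict[str, int]:
    """Separate memory held by applications from reclaimable cache."""
    cache = fields["Buffers"] + fields["Cached"]
    used = fields["MemTotal"] - fields["MemFree"] - cache
    return {"used_kib": used, "cache_kib": cache,
            "available_kib": fields["MemAvailable"]}


summary = summarize(parse_meminfo(SAMPLE_MEMINFO))
for name, kib in summary.items():
    print(f"{name}: {kib / 1024**2:.1f} GiB")
```

To run it against a live system, the sample string can be replaced with the contents of `/proc/meminfo`. A large cache figure alongside a large "available" figure is the benign situation described above.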

With ECC RAM I feel somewhat more comfortable with all that data potentially growing stale in RAM, ECC on storage seems much stronger and thus safer. But let's not wake that monster again!

bit_user

abufrejoval said: "I've pretty near always simply put the maximum RAM in all my systems since, at least when the price curve wasn't exponential per DIMM capacity. And I would sometimes add extra DIMMs later, making sure I'd be able to by choosing modules accordingly."

I stopped filling the second DIMM slot per channel, once it started affecting the memory clock speed. I now either buy more RAM up front, or I buy less and plan for a mid-life upgrade, where I just replace the current DIMMs with new ones.

I actually wish 2-DIMM motherboards were more common, because I'd use them if they were. However, like you, I value ECC RAM and so I must limit myself to boards which support that. 2-DIMM boards are even rarer, among that set (unless you go down to mini-ITX).

Another argument against big RAM configurations that I've recently run across is that it increases refresh times. I need to see some real data on that.

The main reason I have 64 GB in my desktop is due to 32 GB DIMMs being the smallest dual-rank capacity DDR5 is available in. Anandtech benchmarked single-rank vs. dual-rank DDR5 and found the dual-rank were faster, at the same clock speed & timings. Since Anandtech did application benchmarks, their results should account for any loss by longer refresh times from higher capacities, so it should still be a net win. But, I do wonder to what extent that's a measurable effect.

The main argument for single-rank seems to be overclocking and trying to reach higher speeds or tighter timings. As I don't overclock, this seemed to be the way to get the highest performance out of my memory.

abufrejoval said: "With ECC RAM I feel somewhat more comfortable with all that data potentially growing stale in RAM,"

Indeed. It gives me the confidence simply to use "sleep", instead of hibernating or powering down, unless there's some reason I have to.

abufrejoval

bit_user said: "I stopped filling the second DIMM slot per channel, once it started affecting the memory clock speed. I now either buy more RAM up front, or I buy less and plan for a mid-life upgrade, where I just replace the current DIMMs with new ones."

Actually I had already forgotten I had to do the same on my Ryzen 7950X3D with DDR5... It's only at 96GB with dual 48GB modules, because with four DIMMs speeds were atrocious enough to actually notice. Dual 64GB was exponential in cost so it got stuck with 96, which is a good compromise.

bit_user said: "I actually wish 2-DIMM motherboards were more common, because I'd use them if they were. However, like you, I value ECC RAM and so I must limit myself to boards which support that. 2-DIMM boards are even rarer, among that set (unless you go down to mini-ITX)."

I'd stick with quad just for the flexibility and because they won't pass any savings on to you. But then again, my four most recent mainboards have all been Mini-ITX, all dual DIMMs with three of them SO-DIMMs.

Since I've just retired, I'll probably stick a bit longer to what I already have around, because it's no longer a money maker nor tax deductible. Perhaps a Zen 6 upgrade just for kicks, not that I'd probably ever need it now.

bit_user said: "Another argument against big RAM configurations that I've recently run across is that it increases refresh times. I need to see some real data on that."

RAM refresh is measurable, but not noticeable: single percent less bandwidth between 'default' and 'barely still working' is what I remember. Andrei was excellent at making those tiny differences measurable with his synthetic benchmarks: real world impact wasn't his focus. If high frequency trading hadn't moved to FPGA or even ASICs long ago, perhaps that's a niche where it might be important. Since you already use ECC for peace of mind, I can only recommend you stick with that and never worry about refresh again since it's likely much less impact than a single speed rank.

bit_user said: "The main reason I have 64 GB in my desktop is due to 32 GB DIMMs being the smallest dual-rank capacity DDR5 is available in. Anandtech benchmarked single-rank vs. dual-rank DDR5 and found the dual-rank were faster, at the same clock speed & timings. Since Anandtech did application benchmarks, their results should account for any loss by longer refresh times from higher capacities, so it should still be a net win. But, I do wonder to what extent that's a measurable effect. The main argument for single-rank seems to be overclocking and trying to reach higher speeds or tighter timings. As I don't overclock, this seemed to be the way to get the highest performance out of my memory."

By far the biggest portion of CPU transistor budgets goes into caches, because locality on general purpose computing is so poor. But those caches make it much harder to still achieve any gains via coding or tuning. And the scientific HPC workloads, which used to be more sensitive to RAM, have long moved to GPGPU compute, where kernels are tuned to HBM or VRAM if register files do spill over. Both make worrying about RAM tuning much less of an issue, except perhaps for some games, where V-cache then comes to the rescue. You're at drag racing levels of engineering with your worries, I'd say, and actually driving compacts to work. If you still worry, I'd call it a hobby. I'd go along for choosing between iso price alternatives, because nobody wants to just leave free performance on the table, but I think it's not enough to actually worry or bench systematically.

Energy consumption may perhaps be a bit more of a concern: my old Haswell/Broadwell E5-2696 Xeons consistently reported much more Wattage being consumed by those 128GB in eight DDR4-2133 ECC DRAM DIMMs than the 18/22 Xeon cores. Not that both ever consumed as much as the GPUs it was carrying. It never really gets hot, but it's far from Mini-ITX idle power, while compute performance on say a Hawk-Point APU is actually better at much lower idle. Some progress was actually made between 14 (Intel) and 6 nm (TSMC).

bit_user

abufrejoval said: "I'd stick with quad just for the flexibility and because they won't pass any savings on to you."

Eh, it's really not about savings, but rather that dual-DIMM motherboards have better DRAM signal quality. In the early days of LGA1700, IIRC there was some guidance about 2-slot boards reaching higher memory speeds, even if you only populated 2 slots of a 4-slot board.

ASUS devised a new DIMM connector which supposedly mitigates the reflections caused by having an unpopulated DIMM slot. However, I read someone claiming that the precise benefits of the connector weren't accurately characterized by ASUS. Maybe it was Der8auer who discovered this?

https://www.tomshardware.com/pc-components/ram/empty-ram-slots-can-harm-dram-performance-asus-nitropath-slots-curb-electrical-interference-gain-400-mt-s-and-are-40-percent-shorter

abufrejoval said: "Perhaps a Zen 6 upgrade just for kicks, not that I'd probably ever need it now."

I got a 9600X because it was cheap and lets me play with Zen 5, while leaving lots of options. With AVX-512 being more of a thing, I'm glad to have a chance to do some tinkering. Also, since I didn't want another Intel CPU before Nova Lake, it scratched the upgrade itch for now.

abufrejoval said: "RAM refresh is measurable, but not noticeable: single percent less bandwidth between 'default' and 'barely still working' is what I remember."

For me, it's mostly just a matter of curiosity. A little bit about wanting to know the tradeoffs of going higher capacity.

abufrejoval said: "those caches make it much harder to still achieve any gains via coding or tuning."

Well, the benefits that X3D CPUs have for gaming and other choice workloads stand as a testament to what one can gain with some clever, cache-aware optimizations.

I do think it's funny when people seem to assume linked-list following will automatically blow out of cache. If you have relatively low heap fragmentation, I've found that substantial chains of list elements tend to be contiguous! You do want to take care to allocate them in one shot, rather than adding an element here, and a little while later getting another one.
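The "allocate them in one shot" idea can be sketched as a linked list whose nodes all live in a single preallocated pool, so that consecutive nodes sit next to each other in memory. The version below is only an illustration in Python using contiguous `array` storage with indices standing in for pointers; the class and its names are hypothetical, and in C or C++ the same idea would be an array of node structs allocated with one call.

```python
from array import array


class PooledList:
    """Singly linked list backed by two contiguous, preallocated arrays.

    Nodes are identified by their index in the pool instead of by pointer,
    so nodes appended back to back occupy adjacent slots.
    """

    NIL = -1  # sentinel index meaning "no node"

    def __init__(self, capacity: int) -> None:
        # One-shot allocation of storage for every node the list may hold.
        self.values = array("q", [0] * capacity)
        self.next = array("q", [self.NIL] * capacity)
        self.head = self.NIL
        self.tail = self.NIL
        self.used = 0

    def append(self, value: int) -> int:
        """Place value in the next free slot and link it at the tail."""
        if self.used == len(self.values):
            raise MemoryError("node pool exhausted")
        idx = self.used
        self.used += 1
        self.values[idx] = value
        self.next[idx] = self.NIL
        if self.tail == self.NIL:
            self.head = idx
        else:
            self.next[self.tail] = idx
        self.tail = idx
        return idx

    def __iter__(self):
        idx = self.head
        while idx != self.NIL:
            yield self.values[idx]
            idx = self.next[idx]


lst = PooledList(capacity=4)
slots = [lst.append(v) for v in (10, 20, 30)]
print(list(lst), slots)
```

Traversal still follows the `next` links, but because the links point to neighbouring slots, the access pattern is close to a linear scan, which is the cache-friendly case described above.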

abufrejoval said: "nobody wants to just leave free performance on the table, but I think it's not enough to actually worry or bench systematically."

Sometimes, I like to measure things just to shine a light into what was previously a dark corner of my knowledge (and often others').

I've gotten behind at posting some of my findings, but more of that should be forthcoming, in the next couple months.

abufrejoval said: "Energy consumption may perhaps be a bit more of a concern: my old Haswell/Broadwell E5-2696 Xeons consistently reported much more Wattage being consumed by those 128GB in eight DDR4-2133 ECC DRAM DIMMs than the 18/22 Xeon cores. Not that both ever consumed as much as the GPUs it was carrying. It never really gets hot, but it's far from Mini-ITX idle power, while compute performance on say a Hawk-Point APU is actually better at much lower idle. Some progress was actually made between 14 (Intel) and 6 nm (TSMC)."

FWIW, I replaced a Sandy Bridge i7-2600K with an Alder Lake i5-12600 (4-core -> 6-core). Both using iGPU for graphics, with one SSD and an optical drive. Idle power on the new system is maybe 20% lower. The old system had 2x 8 GB DDR3-1600 DIMMs, while the new one uses 2x 32 GB DDR5-5600.

The biggest efficiency improvement seems to be in the area of the iGPU. Running the same OpenGL benchmark, the self-reported iGPU power is like half as much on the new system, even though its iGPU is an order of magnitude higher performance!

thestryker

Put me in the "I really wish there were more 1DPC boards" camp. When I was building my current system I really wanted a 2 DIMM board, but the price premium over what I got was $100/200/220 for ASRock/MSI/Asus and Gigabyte hadn't released theirs. The only other options tend to be entry level boards with nothing in between.

When I was looking to get higher speed memory I almost went with a 32GB kit instead of 48GB due to default refresh timings. Most of the 32GB kits were 1.45V instead of 1.4V so I figured I could go to the minor effort of manually adjusting subtimings.

I would really like to see a test of single rank and dual rank modules with 16/24/32Gb IC density at the same speed. I know 16Gb refresh timings are lower than 24Gb so it's reasonable to assume the same would be true with 32Gb. I was really hoping to test a dual rank kit on my system, but none of them ship at both high clock speed and low latency. With the way the market is looking now I don't see that really changing as I'd imagine the enterprise market is eating up most of the new IC designs.
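A first-order way to reason about the refresh question raised in this thread is the ratio of the refresh cycle time (tRFC) to the average refresh interval (tREFI), which approximates the fraction of time a rank is busy with all-bank refresh. The sketch below computes that ratio; the timing values are placeholders chosen for illustration, not figures from the thread, so real numbers should come from the module's SPD data or the DRAM datasheet. The ratio is an upper bound on lost availability, since memory controllers can schedule traffic around refresh, which fits the much smaller measured bandwidth differences mentioned above.

```python
def refresh_busy_fraction(trfc_ns: float, trefi_ns: float) -> float:
    """Fraction of time a rank spends in all-bank refresh (tRFC / tREFI)."""
    if trefi_ns <= 0 or trfc_ns < 0:
        raise ValueError("timings must be positive")
    return trfc_ns / trefi_ns


# Placeholder timings (assumptions for illustration only).
TREFI_NS = 3900.0
examples = {
    "lower-density IC (assumed tRFC 295 ns)": 295.0,
    "higher-density IC (assumed tRFC 410 ns)": 410.0,
}

for label, trfc in examples.items():
    fraction = refresh_busy_fraction(trfc, TREFI_NS)
    print(f"{label}: {fraction:.1%} of time in refresh")
```

With these placeholder values the higher-density part spends a few percentage points more time refreshing, which shows why IC density, and not just module capacity, is the variable worth isolating in a test.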