Nvidia contributes Blackwell platform design to the Open Compute Project — members can build their own custom designs for Blackwell GPUs

Nvidia open-sources Blackwell data center architecture.

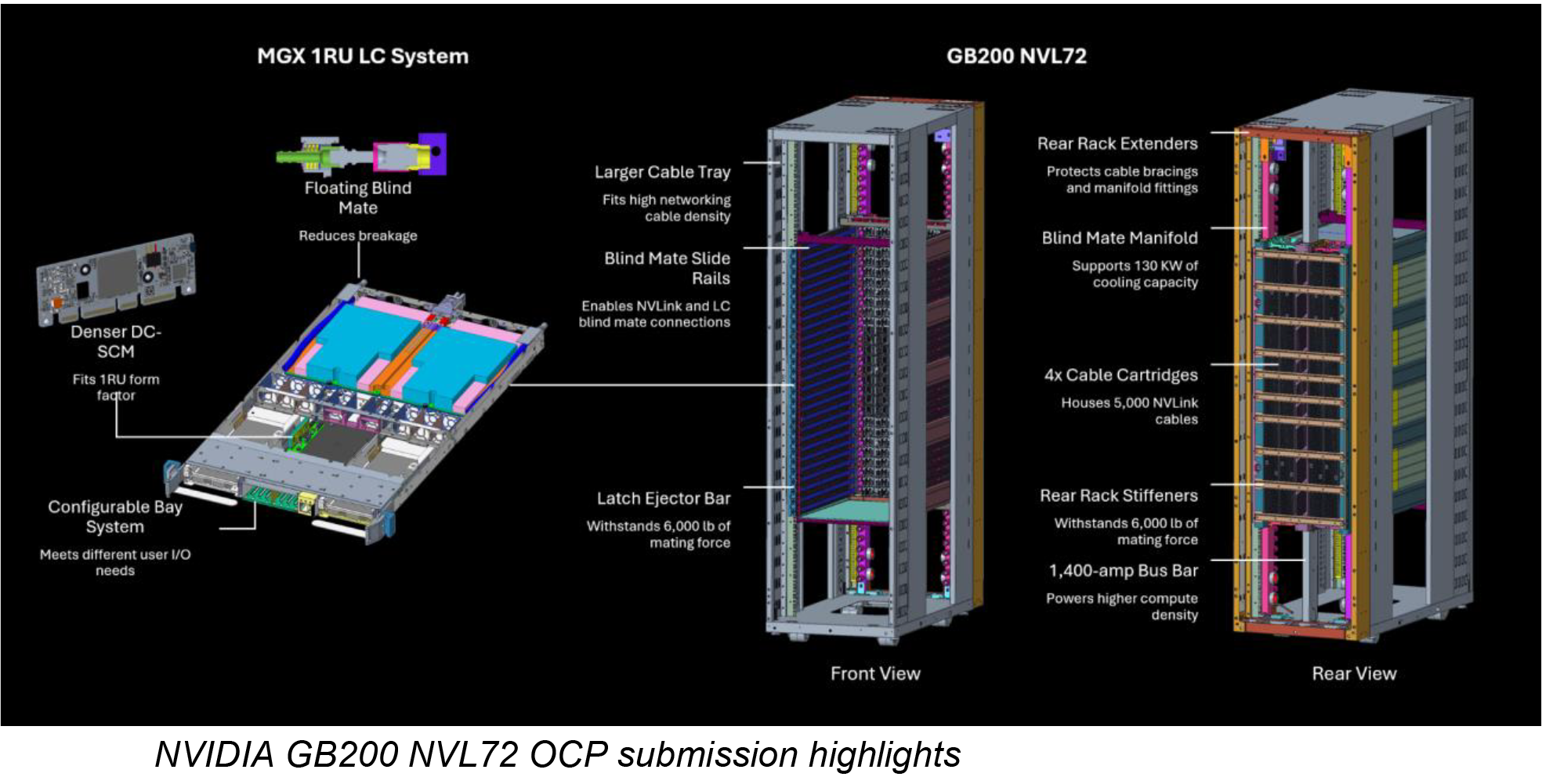

Nvidia has contributed its GB200 NVL72 rack and compute/switch tray designs to the Open Compute Project (OCP), enabling OCP members to build their own designs based on Nvidia Blackwell GPUs. The company is sharing key design elements of its high-performance server platform to accelerate the development of open data center platforms that can support Nvidia's power-hungry next-generation GPUs and its networking hardware.

The GB200 NVL72 system is at the heart of this contribution. Nvidia is sharing critical electro-mechanical designs, including details of the rack architecture, cooling system, and compute tray components. The GB200 NVL72 features a modular design based on Nvidia's MGX architecture that connects 36 Grace CPUs and 72 Blackwell GPUs in a rack-scale configuration. This setup provides a 72-GPU NVLink domain, allowing the system to act as a single massive GPU.

At the OCP event, Nvidia also introduced a new GB200 NVL72 reference design developed jointly with Vertiv, a power and cooling specialist known for its expertise in high-density data centers. The reference design is intended to reduce deployment time for cloud service providers (CSPs) and data centers adopting the Nvidia Blackwell platform.

With this reference architecture, data centers no longer need to create custom power, cooling, or spacing designs specific to the GB200 NVL72. Instead, they can rely on Vertiv's solutions for space-saving power management and energy-efficient cooling. According to Nvidia, this approach lets data centers worldwide deploy 7MW GB200 NVL72 clusters faster, cutting implementation time by as much as 50%.

"Nvidia has been a significant contributor to open computing standards for years, including their high-performance computing platform that has been the foundation of our Grand Teton server for the past two years," said Yee Jiun Song, VP of Engineering at Meta. "As we progress to meet the increasing computational demands of large-scale artificial intelligence, Nvidia's latest contributions in rack design and modular architecture will help speed up the development and implementation of AI infrastructure across the industry."

In addition to its hardware contributions, Nvidia is expanding support for OCP standards in its Spectrum-X Ethernet networking platform. By aligning with OCP's community-developed specifications, Nvidia aims to speed up the buildout of AI data center networks while letting organizations keep their software consistent and preserve their existing investments.

Nvidia's networking advancements include the ConnectX-8 SuperNIC, which will be available in the OCP 3.0 form factor next year. These SuperNICs support speeds of up to 800Gb/s, and their programmable packet processing is optimized for large-scale AI workloads, which should help organizations build more flexible, AI-optimized networks.


More than 40 electronics manufacturers are working with Nvidia to build its Blackwell platform. Meta, the founder of OCP, is among the notable partners and plans to contribute its Catalina AI rack architecture, based on the GB200 NVL72 system, to OCP.

By collaborating closely with the OCP community, Nvidia is working to make its designs and specifications accessible to a wide range of data center developers. This should also make it easier for Nvidia to sell its Blackwell GPUs and ConnectX-8 SuperNICs to companies that build around OCP standards.

"Building on a decade of collaboration with OCP, Nvidia is working alongside industry leaders to shape specifications and designs that can be widely adopted across the entire data center," said Jensen Huang, founder and CEO of Nvidia. "By advancing open standards, we’re helping organizations worldwide take advantage of the full potential of accelerated computing and create the AI factories of the future."

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.