Nvidia could be readying B30A accelerator for Chinese market — new Blackwell chip reportedly beats H20 and even H100 while complying with U.S. export controls

Blackwell for China is in the works.

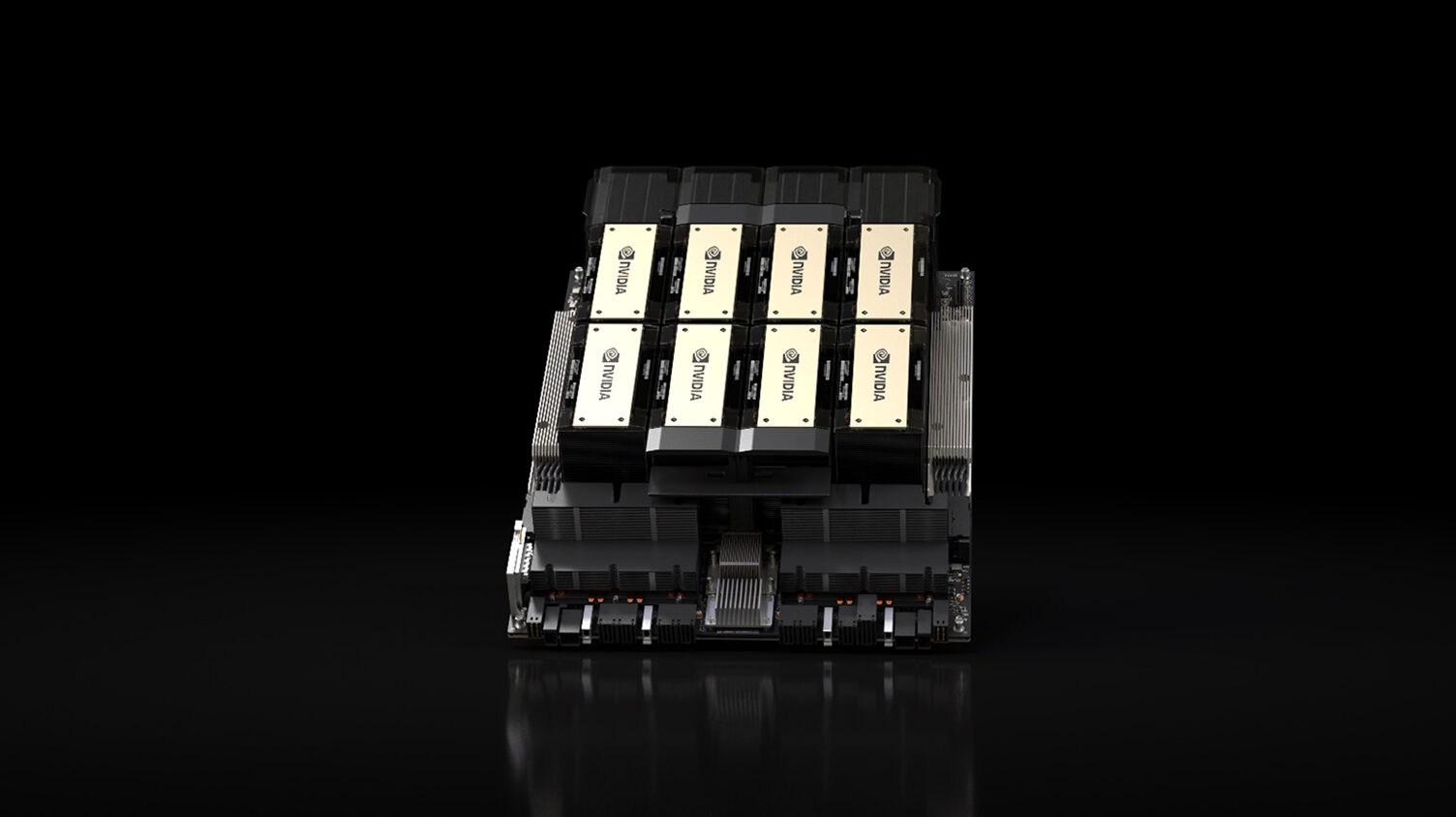

Nvidia is reportedly working on new AI accelerators based on the Blackwell architecture for the Chinese market: the B30A for AI training and the RTX 6000D for AI inference. Both are expected to outperform the existing HGX H20 and L20 PCIe products while still complying with U.S. export controls, Reuters reports, citing sources familiar with the matter. If the information is correct, Chinese customers may get two rather formidable products with performance on par with previous-generation flagships.

The Nvidia B30A is said to be based on the Blackwell Ultra microarchitecture, but it relies on only one compute chiplet and offers about half of the B300's compute performance and 50% of its HBM3E memory capacity (i.e., 144 GB of HBM3E). Previously, such a product was rumored to be called B300A and was intended for the general market, not just China. Since the B30A is said to be a China-oriented SKU, it is possible that Nvidia will introduce certain performance restrictions for this part. "We evaluate a variety of products for our roadmap, so that we can be prepared to compete to the extent that governments allow," an Nvidia spokesperson told Tom's Hardware in a statement. "Everything we offer is with the full approval of the applicable authorities and designed solely for beneficial commercial use."
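The "half of a B300" arithmetic can be sketched in a few lines of Python. This is only an illustration: the B300 figures are the per-package values listed in this article's comparison table, the helper `single_chiplet_estimate` is a hypothetical name, and uniform 50% scaling is an assumption taken from the report, not a confirmed specification.

```python
# B300 (Blackwell Ultra) per-package figures as listed in this article's table.
B300 = {
    "fp4_pflops": 15.0,
    "fp8_pflops": 10.0,
    "bf16_pflops": 5.0,
    "tf32_pflops": 2.5,
    "hbm3e_gb": 288,
    "mem_bw_tbs": 8.0,
    "hbm_stacks": 8,
}


def single_chiplet_estimate(dual_chiplet_specs, fraction=0.5):
    """Scale every figure by `fraction`.

    Hypothetical helper: assumes each spec scales linearly with the number
    of compute chiplets and HBM stacks, which the report implies but does
    not confirm.
    """
    return {name: value * fraction for name, value in dual_chiplet_specs.items()}


b30a_estimate = single_chiplet_estimate(B300)
for name, value in b30a_estimate.items():
    print(f"{name}: {value:g}")
```

Under that assumption, the estimate yields 144 GB of HBM3E across four stacks, 4 TB/s of memory bandwidth, and 7.5 FP4 PFLOPs, which matches the rumored B30A figures quoted in the article.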

If the information is correct and the GPU hits the reported performance targets, it will not only outpace the HGX H20 but also beat the previous-generation flagship, the H100. The unit will also feature NVLink for scale-up connectivity, though it is unclear whether Nvidia will limit rack-scale solutions or large clusters by cutting down the number of NVLink connections on this product.

| GPU | B30A (rumored) | HGX H20 | H100 | B200 | B300 (Ultra) |
| --- | --- | --- | --- | --- | --- |
| Packaging | CoWoS-S | CoWoS-S | CoWoS-S | CoWoS-L | CoWoS-L |
| FP4 PFLOPs (per Package) | 7.5 | - | - | 10 | 15 |
| FP8/INT6 PFLOPs (per Package) | 5 | 0.296 | 2 | 4.5 | 10 |
| INT8 PFLOPs (per Package) | 0.1595 | 0.296 | 2 | 4.5 | 0.319 |
| BF16 PFLOPs (per Package) | 2.5 | 0.148 | 0.99 | 2.25 | 5 |
| TF32 PFLOPs (per Package) | 1.25 | 0.074 | 0.495 | 1.12 | 2.5 |
| FP32 PFLOPs (per Package) | 0.0415 | 0.044 | 0.067 | 1.12 | 0.083 |
| FP64/FP64 Tensor TFLOPs (per Package) | 0.695 | 0.01 | 34/67 | 40 | 1.39 |
| Memory | 144 GB HBM3E | 96 GB HBM3E | 80 GB HBM3 | 192 GB HBM3E | 288 GB HBM3E |
| Memory Bandwidth | 4 TB/s | 4 TB/s | 3.35 TB/s | 8 TB/s | 8 TB/s |
| HBM Stacks | 4 | 4 | 5 | 8 | 8 |
| NVLink | ? | ? | NVLink 4.0, 50 GT/s | NVLink 5.0, 200 GT/s | NVLink 5.0, 200 GT/s |
| GPU TDP | 700 W (?) | 400 W | 700 W | 1200 W | 1400 W |
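To put the "beats H20 and even H100" claim into numbers, the following Python sketch computes the B30A's throughput relative to both parts. The figures are copied from the table above, the B30A values are rumored rather than confirmed, and the `speedup` helper is a hypothetical name used only for this illustration.

```python
# Tensor throughput in PFLOPs per package, copied from the table above.
# B30A values are rumored, not confirmed by Nvidia.
TABLE_PFLOPS = {
    "B30A (rumored)": {"FP8": 5.0, "BF16": 2.5, "TF32": 1.25},
    "HGX H20": {"FP8": 0.296, "BF16": 0.148, "TF32": 0.074},
    "H100": {"FP8": 2.0, "BF16": 0.99, "TF32": 0.495},
}


def speedup(candidate, baseline, table=TABLE_PFLOPS):
    """Return candidate/baseline throughput ratios for each listed format."""
    return {
        fmt: table[candidate][fmt] / table[baseline][fmt]
        for fmt in table[candidate]
    }


for baseline in ("HGX H20", "H100"):
    ratios = speedup("B30A (rumored)", baseline)
    summary = ", ".join(f"{fmt}: {ratio:.1f}x" for fmt, ratio in ratios.items())
    print(f"B30A vs {baseline} -> {summary}")
```

On these table figures, the rumored B30A comes out at roughly 17 times the HGX H20 and about 2.5 times the H100 in FP8, BF16, and TF32. Peak-throughput ratios like these say nothing about real workloads, where memory bandwidth and interconnect limits also matter.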

Building the B30A (or B300A) should be fairly easy for Nvidia: one compute chiplet and four HBM3E memory stacks can likely be packaged using TSMC's proven CoWoS-S technology, at least according to SemiAnalysis. CoWoS-S is also cheaper than the CoWoS-L used by the B200 and B300 processors, which contain two compute chiplets and eight HBM3E stacks.

In addition to the B30A, Nvidia reportedly plans to offer an RTX 6000D product, designed for AI inference and possibly for professional graphics applications. The unit will be cut down compared to the fully-fledged RTX 6000. Reuters suggests that its memory bandwidth will be around 1.398 TB/s, though details are unknown.

Nvidia's customers are expected to get the first samples of B30A and RTX 6000D in September, so if the products are greenlit by the U.S. government, Nvidia will be able to ship commercial B30A modules and RTX 6000D cards by the end of 2025 or in early 2026.

The rumor about new Nvidia AI accelerators for China comes after President Donald Trump suggested he might permit sales of next-generation Nvidia parts in China, provided a new arrangement is met under which Nvidia and AMD would give the U.S. government 15% of their revenue from China sales. Still, legislators from both parties continue to question whether even cut-down versions of advanced AI hardware should be accessible to Chinese companies.



Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


popatim: I wonder how much longer before China will be able to make better stuff than we allow for export.

nookoool: Tencent just announced in their Q2 that they would not be buying any more H20 since they have enough for training and they will be buying domestic for inference. Likely these Chinese companies have been overbuying everything through legal and illegal channels for the past 4 years. Nvidia has to keep on offering better products as those other domestic firms slowly catch up as well.