HBM roadmaps for Micron, Samsung, and SK hynix: To HBM4 and beyond

HBM3E production is in full swing, so what's next?

High Bandwidth Memory (HBM) is the unsung hero behind the AI revolution. As the industry seeks to extract the most performance from frontier AI models, HBM powers the world's fastest GPUs and AI accelerators by keeping the intense computational engines fed with data at breakneck speed. This critical technology has rapidly matured over the past several years, and lately the pace of innovation has quickened, as industry behemoths like Nvidia and AMD look to facilitate the creation of more advanced artificial intelligence models.

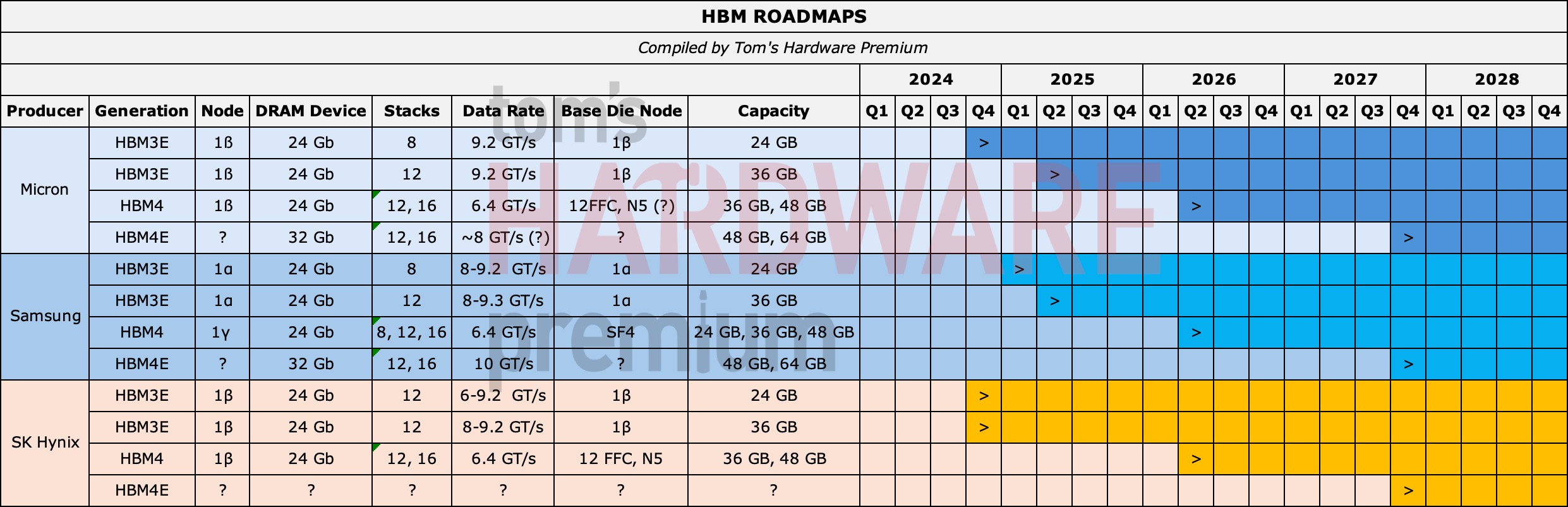

All three major DRAM makers (Micron, Samsung, and SK hynix) have started volume production of 8-Hi HBM3E stacks, the newest form of the technology. And more robust and powerful forms of HBM memory are already in development.

Unfortunately, shortages of sophisticated HBM memory have hampered the supply of AI GPUs, which has manufacturers racing to add more production capacity to address any shortfalls. Meanwhile, the development of next-gen HBM products continues apace, hand-in-hand with new performance-enhancing technologies, which will power the next wave of AI accelerators.

Let's take a look at what's next on the HBM roadmaps for Micron, Samsung, and SK hynix based on official information and other sources.

Speeds and feeds

Memory bandwidth is a critical bottleneck in AI systems — AI models, particularly deep learning models, ingest tremendous amounts of data as they chew through workloads. But most forms of modern memory can't satiate AI's ravenous appetite for more data. That's where HBM steps in.

Traditional memory based on DDR, LPDDR, or GDDR uses 128-bit to 512-bit wide interfaces and employs high data transfer rates to provide bandwidth from 100 GB/s to 2 TB/s.

Unlike traditional memory, HBM uses a very wide interface: 1024 bits per stack for HBM2, HBM3, and HBM3E, or 2048 bits with HBM4. Spread across the several stacks on a modern accelerator, this pushes aggregate bandwidth to 4 TB/s to 8 TB/s. Given the bandwidth-constrained nature of high-intensity parallel computation in GPUs and accelerators, this increased bandwidth translates directly into more performance.
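To make the width-versus-speed trade-off concrete, here is a minimal Python sketch of peak per-stack bandwidth, computed as interface width times per-pin data rate divided by 8 bits per byte. The function names are hypothetical, the 6.4 GT/s rate is applied to both HBM3 and HBM4 for comparison, and the eight-stack accelerator is an assumption for illustration; real devices deliver somewhat less than these theoretical peaks.

```python
# Hypothetical helpers: peak bandwidth = interface width (bits) x per-pin rate (GT/s) / 8.
def stack_bandwidth_gbps(width_bits: int, rate_gtps: float) -> float:
    """Peak bandwidth of a single HBM stack in GB/s."""
    return width_bits * rate_gtps / 8


def aggregate_bandwidth_tbps(stacks: int, width_bits: int, rate_gtps: float) -> float:
    """Peak bandwidth summed over several stacks in TB/s (1 TB = 1000 GB)."""
    return stacks * stack_bandwidth_gbps(width_bits, rate_gtps) / 1000


# Same 6.4 GT/s per-pin rate, interface width doubled from HBM3 to HBM4.
hbm3 = stack_bandwidth_gbps(1024, 6.4)
hbm4 = stack_bandwidth_gbps(2048, 6.4)
print(f"HBM3 (1024-bit): {hbm3:.1f} GB/s per stack")
print(f"HBM4 (2048-bit): {hbm4:.1f} GB/s per stack")
# Assumed eight-stack accelerator, for illustration only.
print(f"8 x HBM4: {aggregate_bandwidth_tbps(8, 2048, 6.4):.2f} TB/s")
```

Doubling the width at an unchanged per-pin rate doubles per-stack bandwidth, which is why HBM4 can raise throughput without relying solely on faster signaling.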

However, the wide interface makes HBM difficult to produce: each stack consists of multiple specialized DRAM devices stacked on top of a base die and interconnected using through-silicon vias (TSVs). HBM makers also vary the number of stacked memory dies to scale capacity, using terms such as 8-Hi for eight stacked dies or 12-Hi for 12 stacked dies.

Due to its huge bandwidth, HBM is, and will continue to be, the de facto memory standard for AI systems, HPC ASICs, and GPUs.

12-Hi HBM3E is almost here

Today's highest-end AI accelerators, such as Nvidia's H200 (141 GB) and B200 (192 GB), use 24 GB 8-Hi HBM3E stacks based on 24 Gb DRAM devices, while AMD's Instinct MI300X reaches 192 GB using HBM3. The next step for the industry is to adopt higher-capacity 36 GB 12-Hi HBM3E stacks built from 24 Gb memory dies. These will be used by Nvidia's upcoming B300-series and AMD's next-gen MI325X AI accelerators.
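The capacity figures above follow from simple arithmetic: a stack's raw capacity is its layer count times the density of each DRAM die, divided by 8 bits per byte. Assuming eight stacks per accelerator (as the B200's 192 GB of 24 GB stacks implies), a back-of-the-envelope sketch of the move to 12-Hi stacks looks like this:

```latex
\begin{aligned}
C_{\text{stack}} &= \frac{N_{\text{layers}} \times D_{\text{die}}}{8\ \text{bits/byte}} \\
\text{8-Hi, 24 Gb dies:}\quad C_{\text{stack}} &= \frac{8 \times 24\ \text{Gb}}{8} = 24\ \text{GB}, \qquad 8 \times 24\ \text{GB} = 192\ \text{GB} \\
\text{12-Hi, 24 Gb dies:}\quad C_{\text{stack}} &= \frac{12 \times 24\ \text{Gb}}{8} = 36\ \text{GB}, \qquad 8 \times 36\ \text{GB} = 288\ \text{GB}
\end{aligned}
```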

SK hynix has begun mass production of 36 GB 12-Hi HBM3E chips, whereas Micron has been sampling similar products since September, with mass production of the new packages understood to be imminent.

Samsung, on the other hand, was late to the party with its 8-Hi HBM3E certification, and its 12-Hi HBM3E stacks have also been slightly delayed. Samsung's delay likely stems from its decision to stick with its 1α fabrication technology, whereas Micron and SK hynix use a 1β (5th Gen, 10nm-class) DRAM process to make their HBM3E DRAM ICs. By the time Nvidia's B300 enters mass production, Samsung will likely be able to compete with 36 GB 12-Hi HBM3E offerings of its own.

HBM4: 2048-bit I/O and up to 16 layers

While manufacturers are still getting to grips with the 12-Hi HBM3E rollout, HBM4 and HBM4E are both already on the horizon.

The preliminary HBM4 specification (unveiled in July 2024) introduces a wider 2048-bit interface for HBM stacks. It also specifies 24 Gb and 32 Gb DRAM layers at up to 6.40 GT/s. The spec supports 4-Hi, 8-Hi, 12-Hi, and 16-Hi configurations, providing greater flexibility and potentially enabling stacks as large as 64 GB (16 layers of 32 Gb).
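The stack heights and die densities in the preliminary spec combine into a small capacity matrix. The Python sketch below enumerates it under the simple assumption that raw stack capacity equals layer count times die density divided by 8; the constant and function names are illustrative, and the final spec or vendor products may not use every combination.

```python
# Stack heights and die densities named in the preliminary HBM4 spec.
STACK_HEIGHTS = (4, 8, 12, 16)   # 4-Hi through 16-Hi
DIE_DENSITIES_GBIT = (24, 32)    # gigabits per DRAM layer


def stack_capacity_gb(layers: int, die_gbit: int) -> int:
    """Raw capacity of one stack in GB: total gigabits divided by 8 bits per byte."""
    return layers * die_gbit // 8


# Print one row per die density, one column per stack height.
for die in DIE_DENSITIES_GBIT:
    row = ", ".join(f"{h}-Hi: {stack_capacity_gb(h, die)} GB" for h in STACK_HEIGHTS)
    print(f"{die} Gb dies -> {row}")
```

The largest entry, 16-Hi with 32 Gb dies, is the 64 GB ceiling; with only 24 Gb dies available, a 16-Hi stack tops out at 48 GB.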

Meanwhile, HBM4E may push interface speeds to around 9 GT/s, as Rambus' HBM4 memory controller IP already exceeds the 6.40 GT/s that JEDEC announced for HBM4.
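For a sense of scale, the same width-times-rate arithmetic applied to a 2048-bit interface shows what a jump from 6.40 GT/s to roughly 9 GT/s would mean per stack. The 9 GT/s figure is the speculative HBM4E speed mentioned above, not a ratified number, and the results are theoretical peaks:

```latex
\begin{aligned}
B_{\text{HBM4}} &= \frac{2048\ \text{bits} \times 6.4\ \text{GT/s}}{8\ \text{bits/byte}} = 1638.4\ \text{GB/s} \\
B_{\approx 9\ \text{GT/s}} &= \frac{2048\ \text{bits} \times 9\ \text{GT/s}}{8\ \text{bits/byte}} = 2304\ \text{GB/s} \\
\frac{B_{\approx 9\ \text{GT/s}}}{B_{\text{HBM4}}} &= \frac{2304}{1638.4} \approx 1.41
\end{aligned}
```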

With HBM4E, memory makers will be able to customize the base dies of their stacks (something SK hynix envisioned in early 2024) with additional functions, potentially including enhanced caches, custom interface protocols, and more.

All three leading memory manufacturers, Micron, Samsung, and SK hynix, have confirmed their intentions to produce HBM4 and HBM4E memory, but their overall rollout strategies may differ.

HBM4: 16-Hi stacks, but no 32 Gb devices on the horizon

For now, none of the prominent DRAM producers have HBM4 or HBM4E stacks based on 32 Gb memory devices on their roadmaps. As such, all HBM4 and HBM4E products are expected to utilize smaller 24 Gb DRAM dies when they first roll out.

Micron is expected to keep using its proven 1β (5th Gen, 10nm-class) process technology to make 24 Gb memory ICs for HBM4 stacks; however, Samsung plans to transition to 24 Gb DRAM dies made on its 1γ (6th Gen, 10nm-class) process with HBM4 and HBM4E. This will likely offer Samsung substantial performance, power efficiency, and cost advantages. SK hynix also intends to use 1β for HBM4 DRAM ICs and may transition to a 1γ process for the HBM4E offering.

When it comes to layers, Micron lists 12-Hi and 16-Hi versions of its HBM4 and HBM4E offerings, whereas Samsung and SK hynix may go straight to 16-Hi HBM4 stacks. With 24 Gb DRAM devices set to be the norm, 16-Hi HBM4 stacks will raise per-stack capacity to 48 GB, a noticeable leap over 12-Hi HBM3E's 36 GB.

It's possible that by the time HBM4E finally arrives, the number of supported layers will exceed 16; rumors suggest South Korean manufacturers could adopt a 20-layer design, though these should be taken with a grain of salt. By then, DRAM makers may also have adopted 32 Gb dies for high-bandwidth offerings.

HBM4 & HBM4E production nodes

It should be no surprise that HBM4 and HBM4E memory stacks will rely on base dies made by logic manufacturers on logic process technologies, which should improve transfer speeds and signal integrity. TSMC and SK hynix were the first to disclose plans to use TSMC's 12FFC+ and N5 base dies for HBM4. Micron will likely also use TSMC's base dies (the two companies are partners), though this has not been officially confirmed.

Samsung is expected to use its own Samsung Foundry nodes for HBM4 and HBM4E base dies. There is still uncertainty around the exact node it will employ, though it is reasonable to expect similar process technologies to TSMC's 12FFC+ and N5 processes.

HBM4 is coming in 2026 & HBM4E expected a year later

Samsung and SK hynix are rumored to introduce their first HBM4 offerings around Q3 2025, whereas Micron is projected to follow in Q4 2025. In all cases, 'introductions' likely means the delivery of the first working samples to partners like AMD and Nvidia, not high-volume manufacturing.

Considering that the mass production of actual processors that support HBM4 is not expected until 2026, Micron's slight delay does not seem like a major issue.

Curiously, Micron began discussing HBM4E around a year before HBM4 mass production is set to begin. Typically, 'extended' variants of HBM specifications arrive years after the original standard. According to Micron's official roadmap, HBM4E is due in late 2027.

HBM4E is likely to debut in the generation that follows Nvidia's upcoming Rubin architecture and AMD's MI400 AI accelerator, both of which are slated to support HBM4 in 2026.

If the industry requires customizable memory, HBM4E might land earlier than expected, but don't hold your breath — HBM development is notoriously challenging.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.