The tale of Nvidia's HGX H20: How an AI GPU became a political lightning rod

When silicon turns strategic.

Nvidia's HGX H20 AI GPU represents a fraction of the company's revenue, but references to it in the business media far outpace mentions of the company's much more powerful and lucrative H100 or B200 processors. In fact, this specific GPU model has gained a fair bit of notoriety over the last few months as it has become a lightning rod in the heated U.S.-China trade war.

To a large degree, this happened because the HGX H20 was one of only a few AI GPUs that the Biden administration allowed Nvidia to ship to China without an export license. The current Trump administration has instead chosen to use the HGX H20 as a geopolitical tool, turning it into a source of federal revenue.

Meanwhile, China reportedly wants to play a similar game: it is investigating whether Nvidia GPUs contain U.S.-mandated tracking features or backdoors, and has even asked Chinese companies to halt H20 imports in the meantime, potentially hurting Nvidia's revenue. This is unfolding while the Trump administration considers letting Nvidia sell Blackwell-based AI processors to Chinese entities.

After more than a year of swirling reports amid the intensifying U.S.-China trade war, the HGX H20 has earned a rare degree of international notoriety. Here's how the situation has unfolded so far, and where it may be headed.

Why Nvidia's HGX H20 stands out

Nvidia released the A800 and H800, its first cut-down versions of its flagship GPUs for the Chinese market, after Joe Biden's administration restricted shipments of supercomputer-grade hardware to the People's Republic of China on national security grounds in 2022. The A800 and H800 had their NVLink bandwidth cut to 400 GB/s and 450 GB/s, respectively, and their interconnect topology options were restricted, which hampered multi-GPU scaling and reduced their efficiency in supercomputers running communication-heavy workloads. In raw compute performance, however, the A800 and H800 were not far behind their full-featured brethren.

By late 2023, it had become clear that China-based entities had managed to work around the restrictions and use the H800 for AI training without significant problems, benefiting from the performance and efficiency of Nvidia's Hopper architecture, which was years ahead of anything China-based developers could offer.

In response, in October 2023 the Biden administration updated the Export Administration Regulations (EAR) with export control classifications 3A090.a (covering processors designed or marketed for use in data centers) and 3A090.b (covering those not designed or marketed for data centers), which introduced Total Processing Performance (TPP) and Performance Density (PD) limits on compute hardware shipped to China.

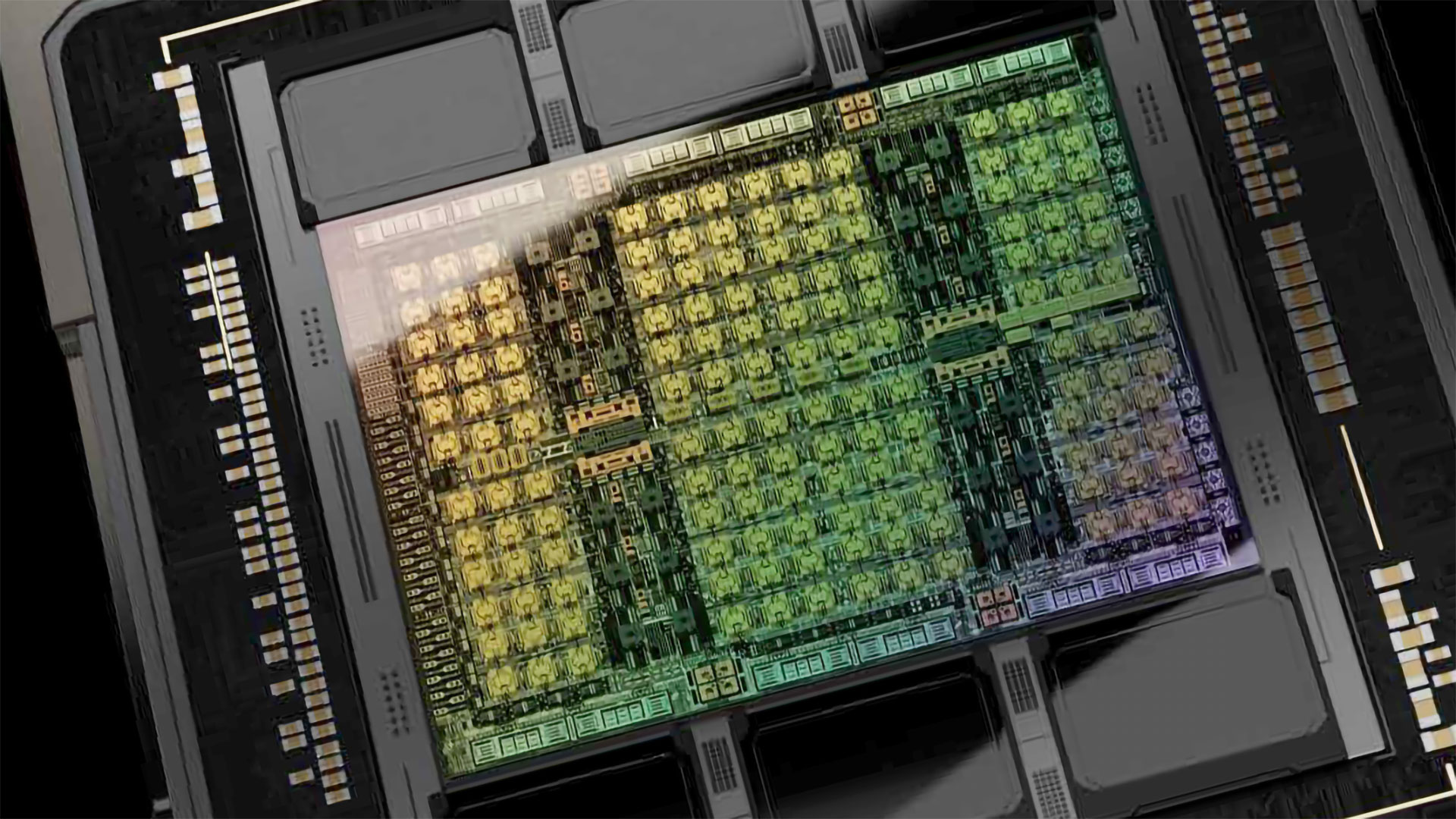

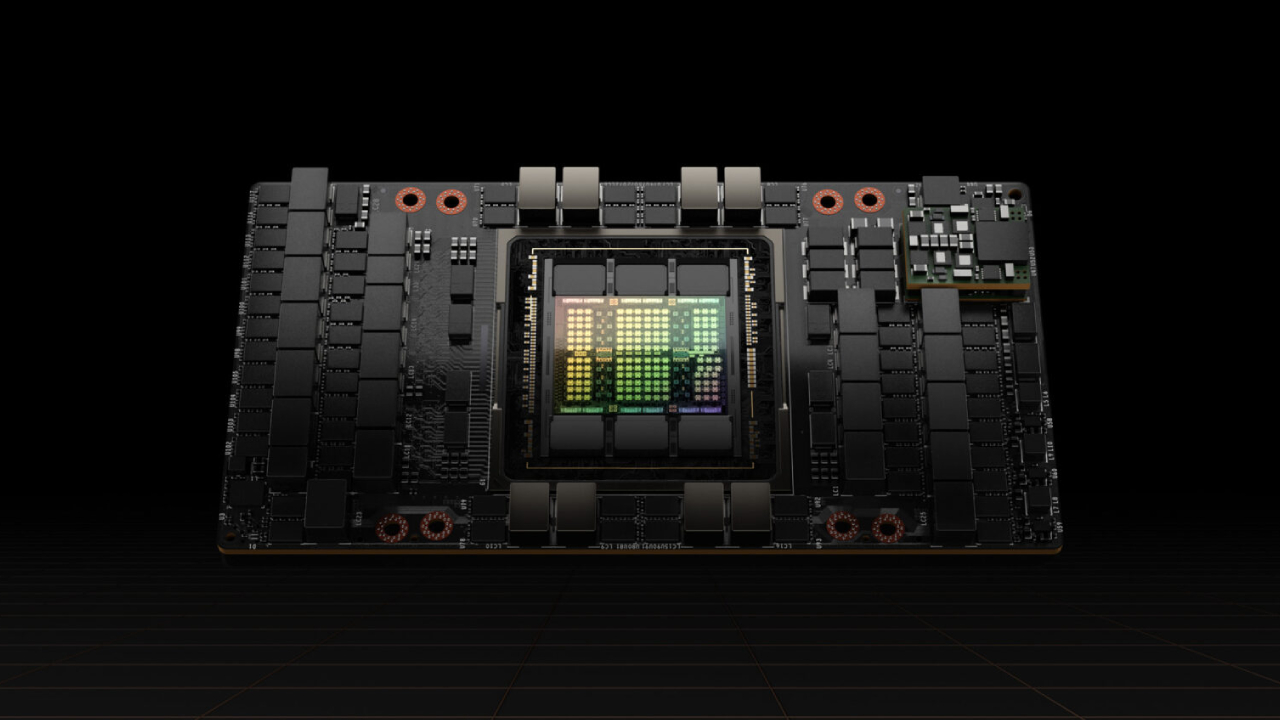

As a result, Nvidia had to cut down its GH100, AD102, and AD104 silicon to build its HGX H20, L20 PCIe, and L2 PCIe products that met both TPP and PD restrictions imposed by the U.S. government. Consequently, Nvidia's HGX H20 was 3.3 – 6.69 times slower than H100 in AI workloads and 34 – 67 times slower than H100 in HPC workloads that require FP64 precision. AMD followed suit with the Instinct MI308 processor.
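The TPP and PD metrics are simple enough to sketch. Under the EAR, TPP is defined as 2 × MacTOPS × the bit length of the operation, and PD is TPP divided by the applicable die area in mm². The Python sketch below applies that formula to the dense FP8 figures from the table that follows, together with the 3A090.a thresholds (TPP of 4,800 or more, or TPP of 1,600 or more with PD of 5.92 or more) as we read the October 2023 rule. Treat those thresholds, the roughly 814 mm² GH100 die area, and the helper names as assumptions to check against the regulation itself.

```python
# Sketch of the EAR "total processing performance" (TPP) and
# "performance density" (PD) metrics. The 3A090.a thresholds below are our
# reading of the October 2023 rule (assumption: verify against the EAR).

def tpp(dense_tops: float, bit_length: int) -> float:
    """TPP = 2 x MacTOPS x bit length. Vendor dense TOPS/TFLOPS figures
    already count one multiply-accumulate as two operations, so
    TPP = dense TOPS x bit length."""
    return dense_tops * bit_length


def performance_density(tpp_value: float, die_area_mm2: float) -> float:
    """PD = TPP divided by the applicable die area in mm^2."""
    return tpp_value / die_area_mm2


def meets_3a090a(tpp_value: float, pd_value: float) -> bool:
    """Assumed 3A090.a test: TPP >= 4800, or TPP >= 1600 with PD >= 5.92."""
    return tpp_value >= 4800 or (tpp_value >= 1600 and pd_value >= 5.92)


# Assumption: ~814 mm^2 is the widely reported GH100 die area.
GH100_DIE_MM2 = 814.0

# Dense FP8 TFLOPS as listed in the article's table.
for name, fp8_tflops in [("HGX H20", 296.0), ("H100 SXM", 1980.0)]:
    t = tpp(fp8_tflops, 8)
    pd = performance_density(t, GH100_DIE_MM2)
    print(f"{name}: TPP={t:.0f}, PD={pd:.2f}, 3A090.a={meets_3a090a(t, pd)}")
```

With these inputs the HGX H20 lands at a TPP of 2,368 with a PD of about 2.9, well under the 4,800 line, whereas the H100 SXM comes in at 15,840.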

| GPU | HGX H20 | H100 SXM | Difference |
|---|---|---|---|
| Architecture / GPU | Hopper / GH100 | Hopper / GH100 | - |
| Memory | 96 GB HBM3 | 80 GB HBM3 | 0.83X |
| Memory Bandwidth | 4.0 TB/s | 3.35 TB/s | 0.84X |
| INT8 / FP8 Tensor (dense) | 296 TFLOPS | 1980 TFLOPS | 6.69X |
| BF16 / FP16 Tensor (dense) | 148 TFLOPS | 495 TFLOPS | 3.34X |
| TF32 Tensor (dense) | 74 TFLOPS | 495 TFLOPS | 6.69X |
| FP32 | 44 TFLOPS | 67 TFLOPS | 1.52X |
| FP64 | 1 TFLOPS | 34 TFLOPS | 34X |
| RT Core | N/A | N/A | - |
| MIG | Up to 7 MIG | Up to 7 MIG | - |
| L2 Cache | 60 MB | 60 MB | - |
| Media Engine | 7 NVDEC, 7 NVJPEG | 7 NVDEC, 7 NVJPEG | - |
| Power | 400W | 700W | 1.75X |
| Form Factor | 8-way HGX | 8-way HGX | - |
| Interface | PCIe Gen5 x16: 128 GB/s | PCIe Gen5 x16: 128 GB/s | - |
| NVLink | 900 GB/s | 900 GB/s | - |
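The Difference column is simply the H100 SXM figure divided by the HGX H20 figure. A short Python sketch that recomputes it from the listed values (taken as given from the table, not independently verified) shows where the "3.3 to 6.69 times slower" range for tensor math comes from:

```python
# Recompute the "Difference" column: H100 SXM value divided by HGX H20 value,
# using the figures exactly as listed in the table.
specs = {
    # metric: (HGX H20, H100 SXM)
    "Memory (GB)": (96, 80),
    "Memory bandwidth (TB/s)": (4.0, 3.35),
    "INT8/FP8 dense (TFLOPS)": (296, 1980),
    "BF16/FP16 dense (TFLOPS)": (148, 495),
    "TF32 dense (TFLOPS)": (74, 495),
    "FP32 (TFLOPS)": (44, 67),
    "FP64 (TFLOPS)": (1, 34),
    "Power (W)": (400, 700),
}


def ratio(h20: float, h100: float) -> float:
    """How many times larger the H100 SXM figure is than the HGX H20 figure."""
    return h100 / h20


differences = {metric: ratio(*values) for metric, values in specs.items()}

for metric, x in differences.items():
    print(f"{metric:26s} {x:6.2f}X")
```

Running it gives 6.69X for FP8 and TF32, 3.34X for BF16/FP16, 34X for FP64, and 1.75X for power.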

But while the HGX H20 is massively slower than its full-fat H100 counterpart, the unit is still quite competitive with AI processors designed in China (even though Huawei has rack-scale AI systems that beat Nvidia's flagship GB200 NVL72).

| GPU | HGX H20 | Ascend 910B | Ascend 910C | H100 SXM |
|---|---|---|---|---|
| Architecture / GPU | Hopper / GH100 | ? | ? | Hopper / GH100 |
| Memory | 96 GB HBM3 | 64 GB HBM2E | 128 GB HBM2E | 80 GB HBM3 |
| Memory Bandwidth | 4.0 TB/s | 1.6 TB/s | 3.2 TB/s | 3.35 TB/s |
| INT8 / FP8 Tensor (dense) | 296 TFLOPS | ? | ? | 1980 TFLOPS |
| BF16 / FP16 Tensor (dense) | 148 TFLOPS | 390 TFLOPS | 780 TFLOPS | 495 TFLOPS |
| TF32 Tensor (dense) | 74 TFLOPS | ? | ? | 495 TFLOPS |
| FP32 | 44 TFLOPS | - | - | 67 TFLOPS |
| FP64 | 1 TFLOPS | - | - | 34 TFLOPS |

Furthermore, because most hyperscale cloud service providers (CSPs) in China rely on Nvidia's highly efficient CUDA software stack, they have been eagerly buying billions of dollars' worth of HGX H20 processors, whose real-world performance was higher than that of domestic solutions, according to SemiAnalysis.

As a result, Nvidia's AI GPUs have dominated not only the global AI hardware market but also China's, which has turned these processors into geopolitical instruments.

How the HGX H20 is being used as a political tool

Earlier this year, the Biden administration introduced a policy called the AI Diffusion Rule, which would have barred the export of advanced GPUs to China, Russia, and several other countries, while imposing less restrictive, though still significant, supply limits on other nations. The rule was set to take effect on May 15.

Although the Trump administration scrapped the AI Diffusion Rule, Nvidia’s hardware and related export controls remained a key bargaining tool in U.S.–China trade negotiations.

The AI Diffusion Rule would have divided the world into three licensing tiers. The first tier, made up of the U.S. and 18 close allies, would retain unrestricted access to advanced chips like Nvidia's H100. The second tier, covering over 100 nations (including close allies like the Baltic States, Israel, and Poland), would face a limit of roughly 50,000 H100-class GPUs over several years, unless they secure verified end user (VEU) approval, which would require direct negotiations with the U.S. government.

However, buyers in these Tier 2 countries could still import up to 1,700 high-end AI processors annually without a license, and these would not count toward the quota. The third tier, which included China, Russia, and Macau, would be entirely prohibited from acquiring advanced processors because of existing arms embargoes. Under this rule, AMD and Nvidia would have lost the ability to sell the HGX H20 and Instinct MI308 GPUs to Chinese entities.
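The three-tier structure above amounts to a small decision procedure. The following Python sketch models it in deliberately simplified form: the tier labels, the roughly 50,000-GPU national cap, and the 1,700-GPU annual license-free allowance come from the description above, while the function name `review_order` and its parameters are hypothetical, and the logic is an illustration rather than the regulatory text.

```python
from enum import Enum

# Simplified, hypothetical model of the AI Diffusion Rule's tiers as described
# in the article. Not the regulatory text; thresholds are approximations.


class Tier(Enum):
    TIER1 = 1  # U.S. plus 18 close allies: unrestricted
    TIER2 = 2  # 100+ countries: national cap unless VEU-approved
    TIER3 = 3  # arms-embargoed countries such as China, Russia, Macau


TIER2_NATIONAL_CAP = 50_000     # ~H100-class GPUs over the multi-year period
LICENSE_FREE_PER_YEAR = 1_700   # per buyer, does not count toward the cap


def review_order(tier: Tier, gpus: int, buyer_gpus_this_year: int,
                 country_cap_used: int, has_veu: bool = False) -> str:
    """Return a hypothetical outcome for an order of `gpus` H100-class chips."""
    if tier is Tier.TIER1:
        return "allowed"
    if tier is Tier.TIER3:
        return "prohibited"
    # Tier 2: small annual volumes per buyer need no license and skip the cap.
    if buyer_gpus_this_year + gpus <= LICENSE_FREE_PER_YEAR:
        return "allowed without license"
    # Verified end users negotiate directly and are not bound by the cap.
    if has_veu:
        return "allowed under VEU"
    if country_cap_used + gpus <= TIER2_NATIONAL_CAP:
        return "licensed, counts toward cap"
    return "denied: national cap exhausted"


print(review_order(Tier.TIER2, 1_000, 0, 0))
print(review_order(Tier.TIER2, 10_000, 0, 45_000))
print(review_order(Tier.TIER3, 8, 0, 0))
```

In this model, a Tier 2 order for 10,000 GPUs in a country that has already used 45,000 of its cap is denied unless the buyer holds VEU status, while any order bound for a Tier 3 country is refused outright.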

Recently, Trump admitted that he had been unaware of Nvidia's existence until a conversation with an advisor revealed the company's dominance of the AI training hardware market. When told that Nvidia effectively had 100% market share, he initially proposed regulatory action to break up the company and make room for potential competitors.

However, he was told that even if the U.S. assembled top-tier talent and formed a company large enough to survive and compete, it would still take at least a decade to match Nvidia's capabilities, even assuming poor management on Nvidia's part. He also recognized that Nvidia's technological lead makes it a dominant force globally, which could be instrumental on the geopolitical stage.

As a result, while the Trump administration annulled the AI Diffusion Rule and let U.S. companies ship their hardware to China, it could not resist using export controls on several leading American companies, including AMD, Nvidia, and Electronic Design Automation (EDA) makers Cadence, Synopsys, and Siemens EDA, as bargaining chips in its negotiations with China. In mid-April, the U.S. administration banned sales of the HGX H20 and Instinct MI308 to Chinese entities, and in May it followed up by banning sales of EDA tools to Chinese clients. Because of the export ban on its HGX H20, Nvidia had to write down $4.5 billion worth of inventory (consisting of both ready-to-ship silicon and purchase commitments with production partner TSMC), whereas AMD wrote off $800 million.

However, after China agreed to sign a trade deal with the U.S. and eased exports of some rare earth metals, the U.S. allowed EDA companies to work with China-based customers, and said it would grant export licenses for HGX H20 and Instinct MI308 processors. When China hardliners criticized the move to let Nvidia sell the remainder of the H20 stock to its clients in China, Commerce Secretary Howard Lutnick reportedly argued that the approved processors would be instrumental in tying Chinese AI developers to Nvidia's CUDA ecosystem.

But because the H20 is a cut-down version of Nvidia's former flagship AI GPU, it does not give Chinese entities the ability to develop AI models comparable to those built in America.

China responds

The U.S. government's attempts to use leading-edge hardware, including Nvidia's HGX H20, as bargaining chips in geopolitical negotiations certainly did not go unnoticed by the Chinese government. In recent weeks, the Cyberspace Administration of China (CAC) ordered major tech companies, including Alibaba, ByteDance, and Tencent, to pause new purchases of Nvidia H20 GPUs while it investigates potential security risks, citing fears of U.S.-mandated tracking features and possible backdoors. Nvidia rebuffed these concerns in a statement given to Tom's Hardware:

"As both governments recognize, the H20 is not a military product or for government infrastructure. China has ample supply of domestic chips to meet its needs. It won't and never has relied on American chips for government operations, just like the U.S. government would not rely on chips from China. Banning the sale of H20 in China would only harm U.S. economic and technology leadership with zero national security benefit."

Large AI and HPC data centers can be spotted from space with infrared (IR) sensors, and virtually all of the chips inside them could theoretically be tracked via their drivers. Built-in tracking hardware would therefore add little, which suggests the Chinese authorities are treating this as a political matter.

"You can see data centers with IR sensors from space," says Jon Peddie, the President of Jon Peddie Research, in a statement to Tom's Hardware Premium. "GPUs and CPUs have had telemetry capabilities via the driver for a long time. Presumably, it is a bi-directional path, thereby leading to the Chinese speculation that Nvidia or the U.S. government could shut down the chips remotely. That would be suicide: who would ever buy an Nvidia or any American chip if you thought the supplier or government might shut it down?"

Nvidia has denied that its hardware contains any backdoors or kill switches, but the halt on H20 purchases is nonetheless a blow to Nvidia from the Chinese authorities. It is also a testament to the fact that AI, on both the hardware and software fronts, is not only a strategically important technology but also a crucial new sector of the global economy.

"Cybersecurity is critically important to us. NVIDIA does not have 'backdoors' in our chips that would give anyone a remote way to access or control them," a statement by Nvidia sent to Tom's Hardware reads.

The economic impact of H20

Because Nvidia earns tens of billions of dollars from its AI data center hardware, the U.S. government wanted a piece of that success. Just weeks after the U.S. government announced that it would grant export licenses for AMD's Instinct MI308 and Nvidia's HGX H20 processors bound for China, it emerged that the Trump administration had essentially imposed a sales tax on these export licenses, requiring the companies to share 15% of their China revenue from these chips with the U.S. government.

"It is a sales tax, nothing strategic or technical," Peddie continues. "It represents double taxation, something Republicans used to get very upset about. This now opens the door to sales tax on export licenses for everything, which is counterintuitive, as the current administration aims to alter the balance of trade."

Imposing export duties is prohibited by the U.S. Constitution, but this did not stop President Donald Trump from proposing the deal and then having the Department of Commerce devise a legal basis for it. Some China hawks in Congress have argued that even HGX H20 shipments to China pose national security risks, but the current administration evidently does not share that view.

"This pretty much shows the national security issue is a red herring," Peddie said. "Is it not a security issue if a tax can be collected?"

Interestingly, shortly after the U.S. government said that it would grant export licenses to ship Nvidia's HGX H20 to China, unofficial sources close to the company said that Nvidia would not restart production of these processors and would instead focus on a Blackwell-based product. However, Nvidia placed an order for a further 300,000 H20 GPUs shortly afterward.

The production cycle of a 4nm-class processor at TSMC is around three months, and a data center GPU then needs to be packaged, which also takes time. This means that Nvidia could theoretically receive a fresh batch of H20s by mid-October at best. It likely makes little sense for Nvidia to produce more GH100/H20 silicon at this point, though the company probably has enough defective GH100/GH200 dies that could be repurposed as H20s. Whether Nvidia can ship them to Chinese companies, however, is now a political question.

Chinese firms had already ordered around 700,000 HGX H20 AI accelerators (which are believed to be priced between $12,000 and $14,000 per unit), and it is unclear if the freeze affects these shipments, according to The Information.

If it does, Nvidia stands to lose $8.4 billion to $9.8 billion in GPU revenue alone, and more once the data center hardware it sells alongside its GPUs is counted, while the U.S. government would forgo $1.26 billion to $1.47 billion in federal revenue.
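These figures are straightforward multiplication: roughly 700,000 units at $12,000 to $14,000 each, with 15% of that revenue going to the U.S. government under the export-license arrangement. A minimal Python sketch that counts GPU revenue only (the unit count and price range are the reported figures; the helper name `revenue_at_risk` is hypothetical):

```python
# Revenue at stake from ~700,000 reported H20 orders, using the reported price
# range, plus the 15% share owed to the U.S. government. GPU revenue only.
UNITS = 700_000
PRICE_LOW, PRICE_HIGH = 12_000, 14_000  # USD per unit (reported range)
GOV_SHARE = 0.15                        # revenue share attached to the licenses


def revenue_at_risk(units: int, price: int, share: float) -> tuple[int, float]:
    """Return (Nvidia GPU revenue, U.S. government cut) in USD."""
    revenue = units * price
    return revenue, revenue * share


for price in (PRICE_LOW, PRICE_HIGH):
    revenue, cut = revenue_at_risk(UNITS, price, GOV_SHARE)
    print(f"${price:,}/unit: revenue ${revenue / 1e9:.2f}B, "
          f"government share ${cut / 1e9:.2f}B")
```

For the low and high prices it prints $8.40B and $9.80B in revenue, with government shares of $1.26B and $1.47B.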

Beyond HGX H20: Blackwell-based AI accelerators for China?

Nvidia has supplied samples of a modified Blackwell chip for the Chinese market and is developing another, potentially faster model pending U.S. export clearance. Donald Trump recently confirmed that the U.S. government may grant an export license for a Blackwell-based GPU for China with 30% to 50% of its full-fat performance stripped out.

Even with a 30% to 50% performance cut, Nvidia's B100, B200, or B300 GPUs would still deliver far more performance than anything available in China. Currently, the top option for Chinese firms is the HGX H20, rated at 148 FP16/BF16 TFLOPS and 296 FP8 TFLOPS. A B100 cut down by half would still offer about 900 FP16/BF16 TFLOPS, 1.75 FP8 PFLOPS, and 3.5 FP4 PFLOPS, unmatched by any domestic Chinese AI chip.

The HGX H20 is already 3.3 to 6.69 times slower than a full H100, having been deliberately cut down to comply with Biden-era export controls on advanced AI and HPC GPUs. Yet even a B100 with half its performance removed would offer performance close to, or better than, that of the H100.
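To see why even a heavily cut-down Blackwell part would outpace the H20, one can scale full-chip throughput by the reported 30% or 50% reduction. The Python sketch below assumes dense B100 figures of 1,750 TFLOPS for FP16/BF16, 3,500 TFLOPS for FP8, and 7,000 TFLOPS for FP4, consistent with the rounded halved figures above, and takes the H100 SXM and HGX H20 values from this article's tables. The uniform-scaling model is itself a simplification: a real China-specific part could be cut unevenly across precisions.

```python
# Assumption: full-chip dense B100 throughput in TFLOPS.
B100_DENSE_TFLOPS = {"FP16/BF16": 1750.0, "FP8": 3500.0, "FP4": 7000.0}

# Dense TFLOPS for the comparison GPUs, as listed in the article's tables.
H100_SXM = {"FP16/BF16": 495.0, "FP8": 1980.0}
HGX_H20 = {"FP16/BF16": 148.0, "FP8": 296.0}


def cut_down(full: dict[str, float], cut: float) -> dict[str, float]:
    """Scale every throughput figure uniformly by (1 - cut); cut=0.5 halves it."""
    return {fmt: tflops * (1.0 - cut) for fmt, tflops in full.items()}


for cut in (0.3, 0.5):
    chip = cut_down(B100_DENSE_TFLOPS, cut)
    # Compare only the formats listed for both reference GPUs.
    for fmt in ("FP16/BF16", "FP8"):
        print(f"{cut:.0%} cut, {fmt}: {chip[fmt]:.0f} TFLOPS = "
              f"{chip[fmt] / H100_SXM[fmt]:.2f}x H100, "
              f"{chip[fmt] / HGX_H20[fmt]:.1f}x H20")
```

With these inputs, a 50% cut leaves about 1,750 TFLOPS of FP8, roughly 0.88 times the H100 SXM figure and about 5.9 times the HGX H20, while FP16/BF16 lands at 875 TFLOPS, above the 495 TFLOPS listed for the H100.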

| GPU | Hypothetical cut-down Blackwell GPU | H100 SXM | HGX H20 | Ascend 910B | Ascend 910C |
|---|---|---|---|---|---|
| Architecture / GPU | Blackwell / GB100 | Hopper / GH100 | Hopper / GH100 | ? | ? |
| Memory | 96 GB or 192 GB HBM3E | 80 GB HBM3 | 96 GB HBM3 | 64 GB HBM2E | 128 GB HBM2E |
| Memory Bandwidth | 2 TB/s or 4.10 TB/s | 3.35 TB/s | 4.0 TB/s | 1.6 TB/s | 3.2 TB/s |
| INT8 / FP8 Tensor (dense) | 1750 TFLOPS | 1980 TFLOPS | 296 TFLOPS | ? | ? |
| BF16 / FP16 Tensor (dense) | 900 TFLOPS | 495 TFLOPS | 148 TFLOPS | 390 TFLOPS | 780 TFLOPS |
| TF32 Tensor (dense) | ? | 495 TFLOPS | 74 TFLOPS | ? | ? |
| FP32 | ? | 67 TFLOPS | 44 TFLOPS | - | - |
| FP64 | ? | 34 TFLOPS | 1 TFLOPS | - | - |

Developing China-specific Blackwell silicon might not be a good idea for Nvidia. While such a chip would be cheaper to produce than the B100, B200, or B300, it might not comply with the performance density limits of the 2023 U.S. export controls. Then again, since shipments of the HGX H20 to China have become a political issue, the previous formal guidelines may become irrelevant.

"I think it would be a difficult task to try to apply logic and economics to a political issue," said Peddie. "[Export control rules] will change every day and depend on the latest political issues."

For now, it makes sense to wait and see what Nvidia and the U.S. government come up with regarding Blackwell-based products for China. But one thing is for sure: such a product is coming, and China may gain considerably stronger AI capabilities once units land in the country, whenever that might be.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.