DGX B200 Blackwell node sets world record, breaking over 1,000 TPS/user

Nvidia breaks another world record in the AI space

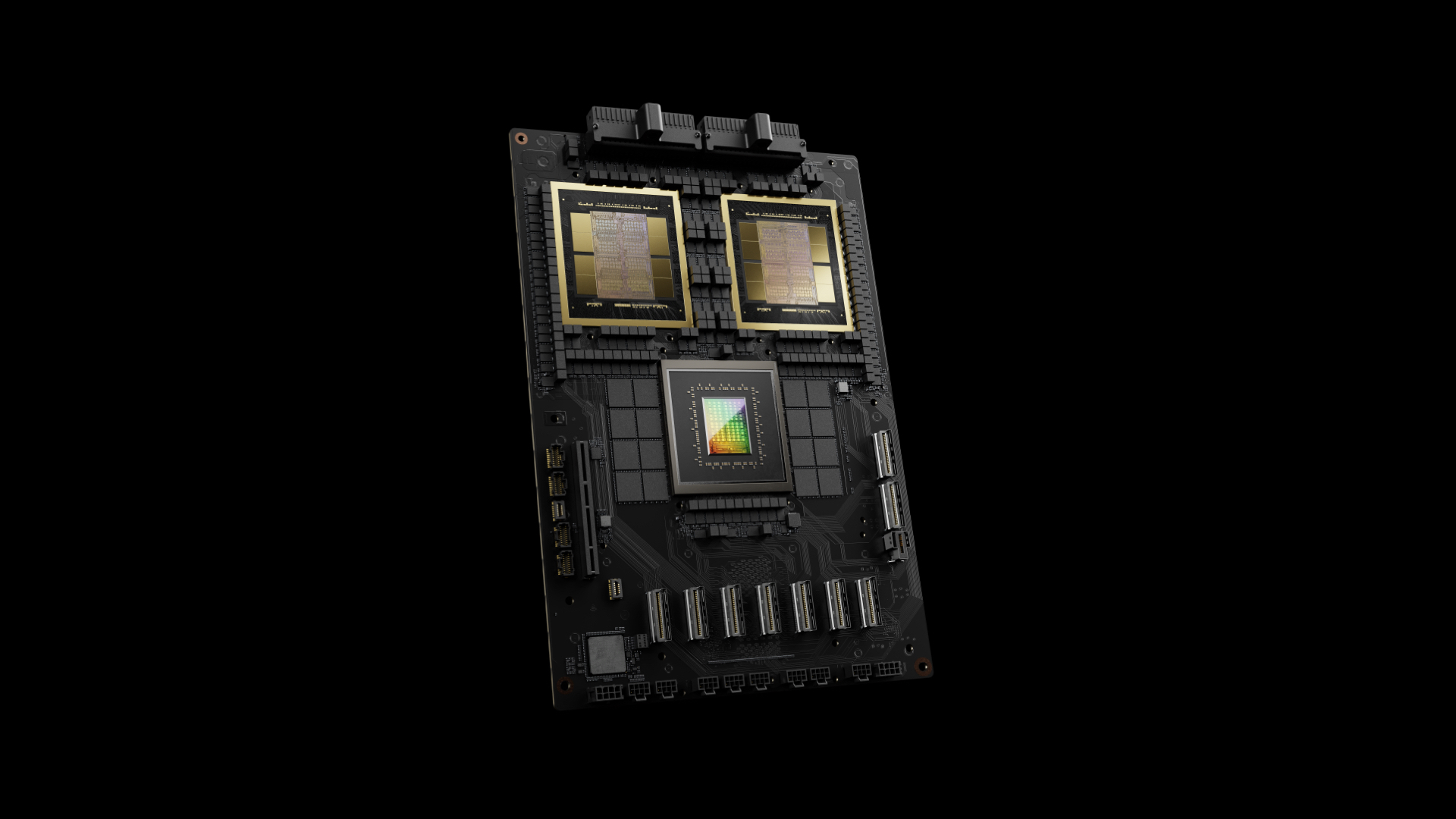

Nvidia has reportedly set another AI world record, breaking the barrier of 1,000 tokens per second (TPS) per user with Meta's Llama 4 Maverick large language model, according to a LinkedIn post from Artificial Analysis. The result was achieved with Nvidia's latest DGX B200 node, which features eight Blackwell GPUs.

Nvidia achieved 1,038 TPS/user, beating the previous record of 792 TPS/user, held by AI chipmaker SambaNova, by 31%. According to Artificial Analysis's benchmark report, Nvidia and SambaNova are well ahead of everyone else in this metric. Amazon and Groq achieved scores just shy of 300 TPS/user, while the rest (Fireworks, Lambda Labs, Kluster.ai, CentML, Google Vertex, Together.ai, Deepinfra, Novita, and Azure) all scored below 200 TPS/user.
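For readers who want to check the 31% figure, here is a minimal Python sketch of the arithmetic. The function name `percent_uplift` is our own; the two TPS/user values are the ones reported by Artificial Analysis above.

```python
def percent_uplift(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, as a percentage."""
    return (new - old) / old * 100.0


nvidia_tps = 1038.0     # TPS/user reported for the DGX B200 node
sambanova_tps = 792.0   # TPS/user reported for SambaNova's prior record

uplift = percent_uplift(nvidia_tps, sambanova_tps)
print(f"Uplift over previous record: {uplift:.1f}%")
```

Rounded to the nearest whole number, this matches the 31% lead quoted above.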

Blackwell's record-breaking result was achieved using a range of performance optimizations tailored to the Llama 4 Maverick architecture. Nvidia says it made extensive software optimizations using TensorRT and trained a speculative decoding draft model using Eagle-3 techniques. Speculative decoding accelerates LLM inference by having a small, fast draft model predict several tokens ahead, which the larger model then verifies. These two optimizations alone achieved a 4x performance uplift compared to Blackwell's best prior results.
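To illustrate the general idea behind speculative decoding, here is a toy Python sketch of greedy draft-and-verify decoding. It is not Nvidia's implementation and does not reproduce Eagle-3. The "models" are stand-in functions over integer tokens, and all names (`speculative_decode`, `toy_target`, `toy_draft`) are hypothetical.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[Token], num_new: int,
                       k: int = 4) -> Tuple[List[Token], int]:
    """Greedy draft-and-verify decoding. Returns (tokens, target_passes)."""
    out = list(prompt)
    goal = len(prompt) + num_new
    passes = 0
    while len(out) < goal:
        # 1. The cheap draft model proposes k tokens ahead.
        proposal: List[Token] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target model checks every proposed position. A real system
        #    scores all k positions in one batched forward pass, which is
        #    what `passes` counts; this toy calls the target per position.
        passes += 1
        accepted: List[Token] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # first miss: keep the target's token
                break
            accepted.append(tok)
        else:
            # Every draft token matched, so the target's next token is free.
            accepted.append(target(out + accepted))
        out.extend(accepted)
    return out[:goal], passes


def toy_target(ctx: List[Token]) -> Token:
    # Stand-in for the large model: counts upward modulo 10.
    return (ctx[-1] + 1) % 10


def toy_draft(ctx: List[Token]) -> Token:
    # Stand-in for the draft model: agrees with the target except at
    # every fourth context length, where it guesses 0.
    return 0 if len(ctx) % 4 == 0 else (ctx[-1] + 1) % 10


tokens, passes = speculative_decode(toy_target, toy_draft, [0], num_new=12)

# Baseline: ordinary greedy decoding, one target pass per new token.
baseline = [0]
for _ in range(12):
    baseline.append(toy_target(baseline))

print(tokens == baseline, passes)
```

In this toy setup the output is identical to plain greedy decoding, but the target model runs far fewer passes than the 12 that token-by-token generation would need. The real-world speedup depends on how often the draft model's guesses are accepted.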

Nvidia says accuracy was preserved while using FP8 data types (rather than BF16) for Attention operations and Mixture of Experts (MoE), the AI technique that took the world by storm with the DeepSeek R1 model, although it predates that model. Nvidia also shared a variety of other optimizations its software engineers made to its CUDA kernels to improve performance further, including techniques such as spatial partitioning and GEMM weight shuffling.

TPS/user is an AI performance metric that stands for tokens per second per user. Tokens are the foundation of LLM-powered software such as Copilot and ChatGPT; when you type a question into ChatGPT or Copilot, your text is split into tokens, which are small chunks such as words, parts of words, or individual characters. The LLM takes these input tokens and generates its answer as a sequence of output tokens.

The user part (of TPS/user) means the benchmark measures the speed a single user experiences, rather than the total throughput of many requests batched together. This method of benchmarking is important for AI chatbot developers who want to create a better experience for people. The more tokens per second a GPU cluster can generate for each user, the faster an AI chatbot will respond to you.
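To make the metric concrete, the following Python sketch estimates how long one user would wait for a complete answer at the two record speeds mentioned above. The 500-token answer length is a hypothetical value chosen for illustration, and the calculation ignores the time before the first token appears.

```python
def generation_time_s(num_tokens: int, tps_per_user: float) -> float:
    """Seconds needed to stream `num_tokens` at a given per-user token rate."""
    return num_tokens / tps_per_user


ANSWER_TOKENS = 500  # hypothetical answer length, not from the benchmark

# TPS/user figures reported by Artificial Analysis.
rates = {"Nvidia DGX B200": 1038.0, "SambaNova": 792.0}

times = {name: generation_time_s(ANSWER_TOKENS, tps) for name, tps in rates.items()}
for name, seconds in times.items():
    print(f"{name}: {seconds:.2f} s for {ANSWER_TOKENS} tokens")
```

Under these assumptions, both systems would deliver the full answer in well under a second, with the higher TPS/user figure translating directly into a shorter wait.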

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.