Fragmented ecosystems and limited supply: Why China cannot break free from Nvidia hardware for AI

Can China's attempts to use homegrown chips work for the country's AI ambitions?

Last week saw major twists in China's AI landscape: Trump imposed a 15% sales tax on AMD and Nvidia hardware sold to China, Beijing froze new Nvidia H20 GPU purchases over security concerns, and DeepSeek dropped plans to train its R2 model on Huawei’s Ascend NPUs — raising doubts about China's ability to rely on domestic hardware for its AI sector.

As part of its recurring five-year strategic plans, China's long-stated goal has been to achieve technological independence, particularly in new and emerging segments that it sees as key to its national security. However, after years of plowing billions into fab startups and its own nascent chip industry, the country still lags behind its Western counterparts and has struggled to build a truly insulated supply chain that can produce AI accelerators. Additionally, the country lacks an effective software ecosystem to rival Nvidia's CUDA, creating even more challenges. Here's a closer look at how this is impacting the country's AI efforts.

China wants to rely on its own hardware

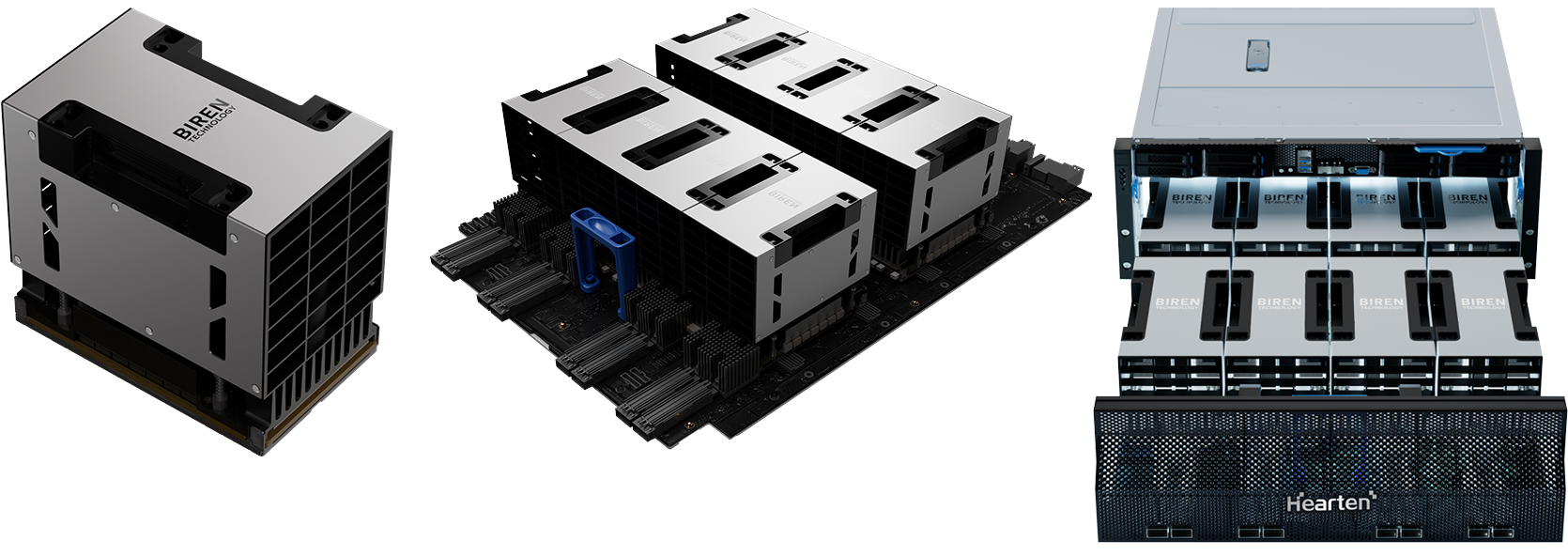

China has had a self-sufficiency plan for its semiconductor industry in general since the mid-2010s. Over time, as the U.S. imposed sanctions against the People's Republic's high-tech sectors, the plan evolved to address supercomputers (including those capable of AI workloads) and fab tools. As of 2025, Chinese companies have created several domestic AI accelerators, and Huawei has even managed to develop its rack-scale CloudMatrix 384.

However, ever since the AI Diffusion Rule was canned, and the incumbent Trump administration banned sales of AMD's Instinct MI308 and Nvidia's HGX H20 to Chinese entities, the PRC has doubled down on its efforts to switch crucially important AI companies to domestic hardware.

Subsequently, when the U.S. government announced plans to grant AMD and Nvidia export licenses to sell their China-specific AI accelerators to clients in the People's Republic, U.S. President Trump announced an unprecedented 15% sales tax on AMD's and Nvidia's hardware sold to China.

China's government then made shipments of Nvidia's HGX H20 hardware strategic, and instructed leading cloud service providers to halt new purchases of Nvidia’s H20 GPUs while it examines alleged security threats, a move that could potentially bolster demand for domestic hardware. This may be good news for companies like Biren Technology, Huawei, Enflame, and Moore Threads.

There's a twist in this tale, though: DeepSeek reportedly had to abandon training of its next-generation R2 model on Huawei's domestically developed Ascend platforms because of unstable performance, slower chip-to-chip connectivity, and limitations of Huawei's Compute Architecture for Neural Networks (CANN) software toolkit. This all raises the question: can China rely on its homegrown hardware for AI development?

Nvidia is dominating

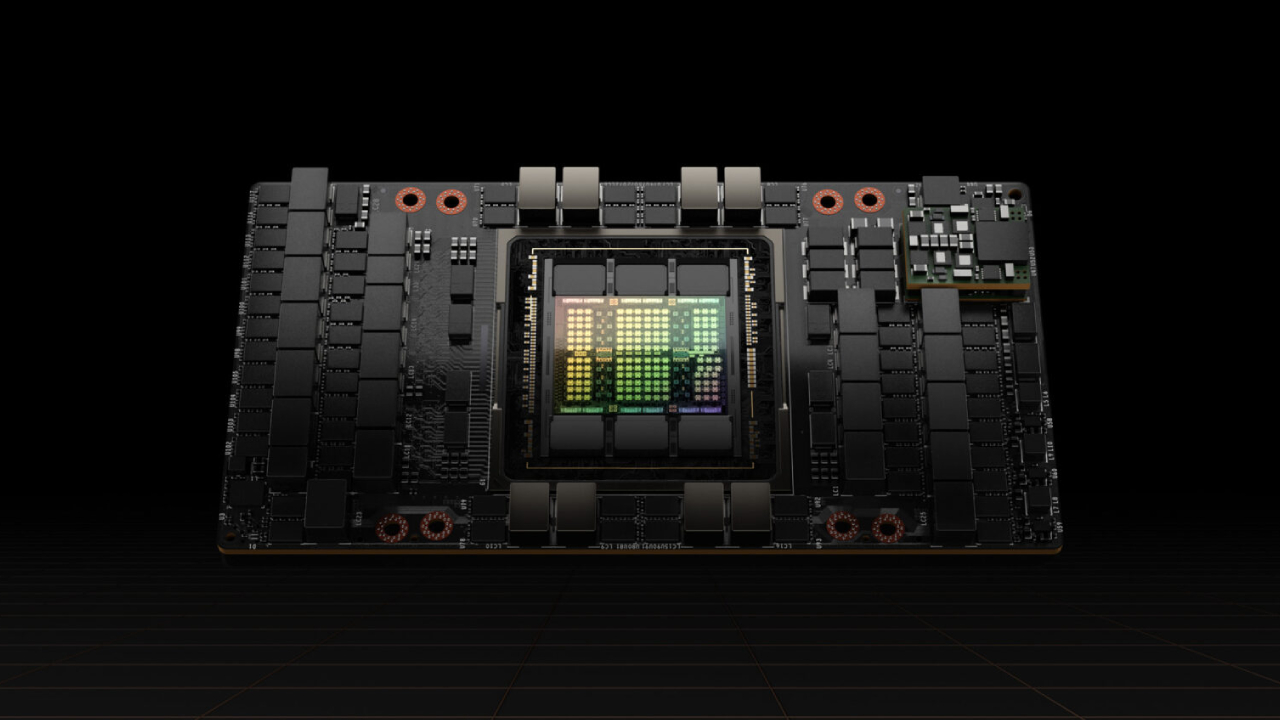

Nvidia has been supplying high-performance AI GPUs fully supported by a stable and versatile CUDA software stack for a decade, so it's not surprising that many, if not all, of the major Chinese AI players (hyperscalers Alibaba, Baidu, and Tencent, as well as smaller companies like DeepSeek) currently use Nvidia's hardware and software. Although Alibaba and Baidu develop their own AI accelerators (primarily for inference), they still procure large volumes of Nvidia's HGX H20 processors.

SemiAnalysis estimated that Nvidia produced around a million HGX H20 processors last year, and almost all of them were purchased by Chinese entities. No other company in China supplied a comparable number of AI accelerators in 2024. That said, analyst Lennart Heim believes that Huawei managed to illegally obtain around three million Ascend 910B dies from TSMC in 2024. Since the Ascend 910C reportedly packages two such dies, that is enough to build around 1.4 to 1.5 million Ascend 910C chips in 2024 and 2025, which is comparable to what Nvidia supplied to China in the same period. Yet while Huawei may have enough Ascend processors to train its Pangu AI models, it appears that other companies have other preferences.
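The die-to-chip arithmetic behind that estimate can be checked with a few lines of Python. This is only a rough sketch: the two-dies-per-chip figure is an assumption based on reports that the Ascend 910C is a dual-die package, and the "implied yield" is simply what the quoted range would mean if every shortfall were due to losses during packaging. Neither figure comes from Huawei or from the analyst directly.

```python
# Figures quoted in the article
DIES_OBTAINED = 3_000_000                        # estimated Ascend 910B dies obtained in 2024
CHIPS_LOW, CHIPS_HIGH = 1_400_000, 1_500_000     # estimated Ascend 910C output

# Assumption (not stated in the article): each 910C package uses two 910B dies
DIES_PER_910C = 2


def max_chips(dies: int, dies_per_chip: int) -> int:
    """Upper bound on finished chips if no die is lost along the way."""
    return dies // dies_per_chip


def implied_yield(chips: int, dies: int, dies_per_chip: int) -> float:
    """Fraction of dies that must end up in working chips to reach `chips`."""
    return chips * dies_per_chip / dies


upper_bound = max_chips(DIES_OBTAINED, DIES_PER_910C)
low_yield = implied_yield(CHIPS_LOW, DIES_OBTAINED, DIES_PER_910C)
high_yield = implied_yield(CHIPS_HIGH, DIES_OBTAINED, DIES_PER_910C)

print(f"Upper bound: {upper_bound:,} chips")
print(f"Implied die utilization: {low_yield:.1%} to {high_yield:.1%}")
```

Under that assumption, the top of the quoted range is the theoretical maximum, and the bottom of the range leaves room for a few percent of dies being lost.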

DeepSeek trained the R1 model on a cluster of 50,000 Hopper-series GPUs. This consisted of 30,000 HGX H20s, 10,000 H800s, and 10,000 H100s. These chips were reportedly purchased by DeepSeek's investor, High-Flyer Capital Management. As a result, it's logical that the whole software stack of DeepSeek — arguably China's most influential AI software developer — is built around Nvidia's CUDA.

However, when the time came to assemble a supercluster to train DeepSeek's upcoming R2 model, the company was reportedly persuaded by the authorities to switch to Huawei's Ascend 910-series processors. After it encountered unstable performance, slower chip-to-chip connectivity, and limitations of Huawei's CANN software toolkit, it decided to switch back to Nvidia's hardware for training, but to use Ascend 910 AI accelerators for inference. As for the exact accelerators involved, we do not know whether DeepSeek used Huawei's latest CloudMatrix 384, based on the Ascend 910C, or something else.

Since DeepSeek has not disclosed these challenges officially, we can only rely on a report from the Financial Times. The publication claims that Huawei's Ascend platforms did not work well for DeepSeek. Why they were deemed to be unstable is another question. One distinct possibility is that DeepSeek only began to work with CANN this spring and simply has not had enough time to port its programs from Nvidia's CUDA to Huawei's CANN toolkit.

Steps in the right direction

It is extremely complicated to analyze high-tech industries in China, as companies tend to keep secrets closely guarded and fly under the U.S. government's radar. However, two important factors that may have a drastic effect on the development of AI hardware in China occurred this summer. Firstly, the Model-Chip Ecosystem Innovation Alliance was formed, and secondly, Huawei made its CANN software stack open source.

The Model-Chip Ecosystem Innovation Alliance includes Huawei, Biren Technology, Enflame, Moore Threads, and others. The group aims to build a fully localized AI stack, linking hardware, models, and infrastructure, which is a clear step away from Nvidia or any other foreign hardware. Its success depends on achieving interoperability through shared protocols and frameworks to reduce ecosystem fragmentation. While low-level software unification may be difficult due to varied architectures (e.g., Arm, PowerVR, custom ISAs), mid-level standardization is more realistic.

By aligning around common APIs and model formats, the group hopes to make models portable across domestic platforms. Developers could write code once (e.g., in PyTorch) and run it on any Chinese accelerator. This would strengthen software cohesion, simplify innovation, and help China build a globally competitive AI industry using its own hardware. There is also an alliance called the Shanghai General Chamber of Commerce AI Committee, which focuses on applying AI in real-world industries; this also unites hardware and software makers.
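To picture what mid-level standardization could mean in practice, here is a minimal Python sketch of the write-once idea. Everything in it is hypothetical: the `Backend` class, the operator names in `REQUIRED_OPS`, and the vendor names are invented for illustration and do not reflect any actual alliance specification, CANN, or PyTorch API. The point is only that model code targets shared operator names, while each vendor supplies its own kernels behind them.

```python
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical shared operator definition: the ops every backend must provide
REQUIRED_OPS = ("relu", "dot")


class Backend:
    """Made-up accelerator backend that registers kernels under shared op names."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.kernels: Dict[str, Callable] = {}

    def register(self, op: str, kernel: Callable) -> None:
        self.kernels[op] = kernel

    def missing_ops(self) -> List[str]:
        return [op for op in REQUIRED_OPS if op not in self.kernels]


def make_full_backend(name: str) -> Backend:
    """Build a backend that implements the whole shared operator set."""
    backend = Backend(name)
    backend.register("relu", lambda x: [max(0.0, v) for v in x])
    backend.register("dot", lambda x, w: sum(a * c for a, c in zip(x, w)))
    return backend


def run_model(backend: Backend, x: Vector, w: Vector) -> float:
    """Model code written once against op names, not against a vendor API."""
    missing = backend.missing_ops()
    if missing:
        raise NotImplementedError(f"{backend.name} lacks ops: {missing}")
    hidden = backend.kernels["relu"](x)
    return backend.kernels["dot"](hidden, w)


# The same model runs unchanged on two (hypothetical) vendors' backends
for vendor in ("vendor_a", "vendor_b"):
    print(vendor, run_model(make_full_backend(vendor), [-1.0, 2.0, 3.0], [1.0, 0.5, 2.0]))
```

A backend that implements only part of the operator set fails up front with a clear error, which is roughly the fragmentation problem such standards aim to remove.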

Either as part of its commitment to the new alliance, or as part of a general attempt to make its Ascend 910-series the platform of choice among China-based companies, Huawei in early August open-sourced CANN, which is specifically optimized for AI workloads on its Ascend hardware.

Until this summer, Huawei's AI toolkit for its Ascend NPUs was distributed in a restricted form. Developers had access to precompiled packages, runtime libraries, and bindings, which allowed TensorFlow, PyTorch, and MindSpore to run on the hardware. These pieces worked well enough to allow users to train and deploy models, but the underlying stack, such as compilers or libraries, remained closed.

CANN goes open-source

Now, this barrier has been removed. The company released the source code for the CANN toolchain, but it did not formally confirm exactly what it has unsealed, so what follows is an educated guess rather than an official list. The opened-up technologies likely include compilers that convert model instructions into commands that Ascend NPUs understand, low-level APIs, libraries of AI operators that accelerate core math functions, and a system-level runtime that manages memory, scheduling, and communication.

By opening up CANN, Huawei can attract a broad community of developers from academia, startups, and other enterprises to its platform, and enable them to experiment with performance tuning or framework integration (beyond TensorFlow and PyTorch). This will inevitably speed up CANN's evolution and bug fixing. Eventually, these efforts could bring CANN closer to what CUDA offers, which will be a useful string in Huawei's bow.

For Huawei, opening up CANN ahead of other Model-Chip Alliance members was beneficial, as it already had the most mature AI hardware platform in production, and needed to position its Ascend platform as the baseline software ecosystem others could rely on. This move makes CANN the default foundation for domestic models and hardware developers (at least for now). By taking this first step, Huawei set a reference point for interoperability and signalled a commitment to shared standards, which could help reduce fragmentation in China's AI software stack.

What about hardware availability?

But while unification of the software stack is a step in the right direction, there is an elephant in the room regarding China's AI hardware self-reliance. The People's Republic still cannot produce hardware that is on par with AMD or Nvidia in volume domestically. The hardware that can be made in China is years behind the processors developed on U.S. soil.

All leading developers of AI accelerators in China, like Biren, Huawei, and Moore Threads, are on the U.S. Department of Commerce's Entity List. This means that they do not have access to the advanced fabrication capabilities of TSMC. As a result, they have to produce their chips at China-based SMIC, whose process technologies cannot match those offered by TSMC. While SMIC can produce chips on its 7nm-class fabrication process, Huawei had to obtain the vast majority of silicon for its Ascend 910B and Ascend 910C processors by deceiving TSMC. Companies like Biren or Moore Threads do not disclose which foundry they use, but they do not have the luxury of choice.

Of course, neither Huawei nor SMIC stands still. The two companies are working to advance China's semiconductor industry and build a local fab tools supply chain that will replace the leading-edge equipment that SMIC cannot acquire. Before this happens, SMIC is expected to start building chips on its 6nm-class process technology and even 5nm-class production node, so it may well build advanced AI processors for Huawei and other players. But the big question is whether volumes will manage to meet the demands of AI training and inference, especially if Nvidia hardware is largely unobtainable in China.

China's chicken-and-egg dilemma

The maturity of Huawei's CANN (and competing stacks) lags behind Nvidia's CUDA largely because there has not been a broad, stable installed base of Ascend processors outside Huawei's own projects. Developers follow scale, and CUDA became dominant because millions of Nvidia GPUs were shipped and widely available, which justified investment in tuning, libraries, and community support. In contrast, Huawei and other Chinese developers have their proprietary software stacks and cannot ship millions of Ascend NPUs or Biren GPUs due to sanctions from the U.S. government.

On the other hand, even if Huawei and others managed to flood the market with Ascend NPUs or Moore Threads GPUs, a weak software stack makes them unattractive for developers. DeepSeek's attempt to train R2 on Ascend is a good example: performance instability, weaker interconnects, and CANN's immaturity reportedly made the project impractical, forcing a return to Nvidia hardware for training. Hardware volume alone will not change that.

The new Model-Chip Ecosystem Innovation Alliance is attempting to address the issue by setting common mid-level standards: things like shared model formats, operator definitions, and framework APIs. The idea is that developers could write code once in PyTorch or TensorFlow and then run it on any Chinese AI accelerator, whether it is from Huawei, Biren, or another vendor. However, until these standards are actually in place, fragmentation means every company has to fight on several fronts at once, as both its hardware and its software face competition in a saturated market.

As a result, the low volume of China-developed AI accelerators, a lack of common standards, and competition on various fronts will make it very hard for Chinese companies to challenge Nvidia's already dominant ecosystem.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.