White House U-turn on Nvidia H200 AI accelerator exports down to Huawei's powerful new Ascend chips, report claims — U.S. committed to 'dominance of the American tech stack'

Can CUDA still capture China?

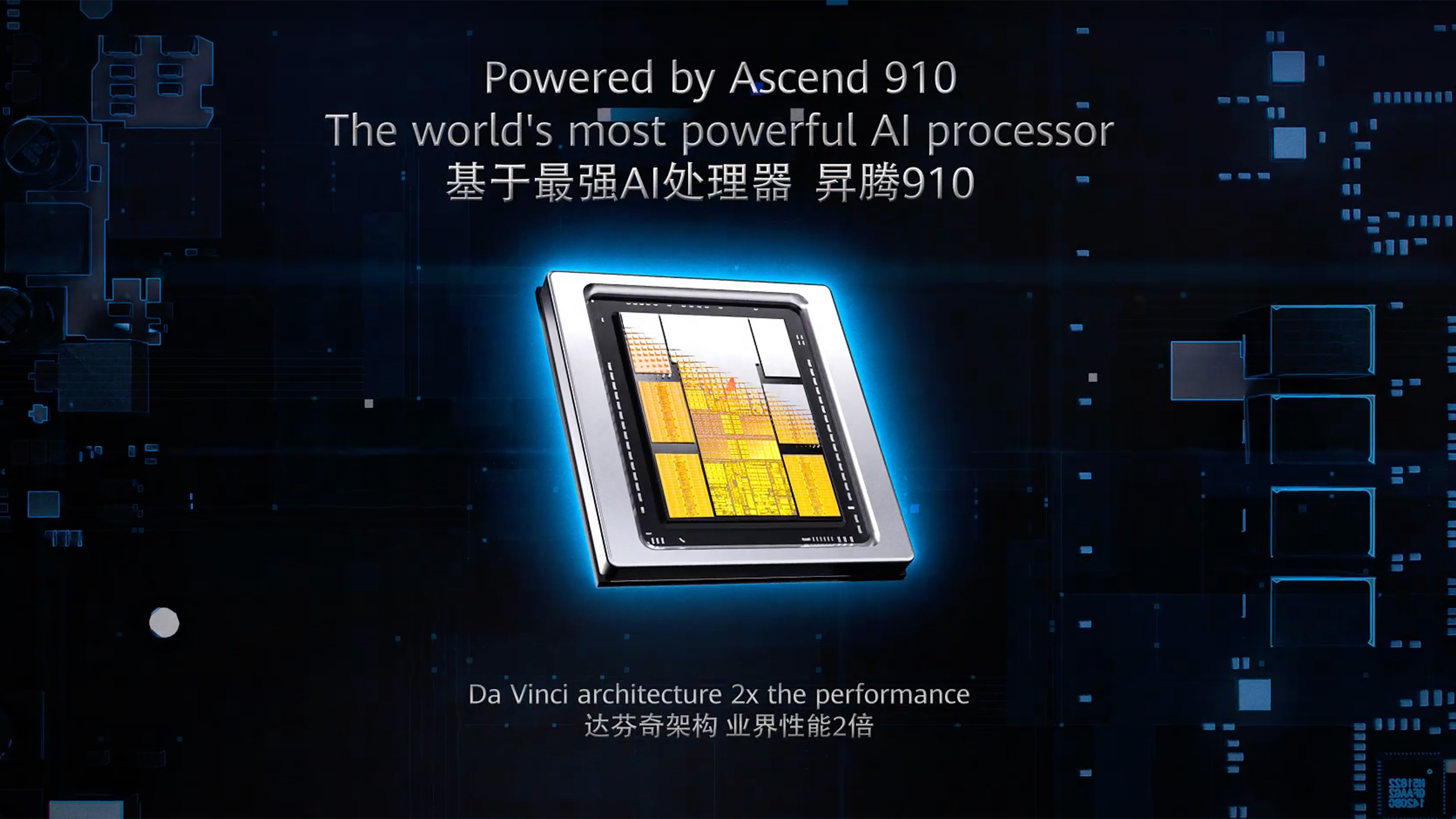

The U.S. can now export Nvidia's H200 AI accelerator to China, with a 25% fee attached. Following the authorization, a new Bloomberg report suggests that the decision was made to ensure American tech dominance globally. Reportedly, a major factor was Huawei's recent progress with its Ascend 910C chips and CloudMatrix 384 system, which the report says are on par with both the H200 and Nvidia's GB200 NVL72.

This decision would let China keep access to Nvidia's CUDA-based AI accelerators, as many AI systems still rely on that software stack. While China is attempting to standardize on a software stack of its own, Huawei's open-source CANN, Nvidia chips are reportedly still preferred by companies such as DeepSeek for training advanced AI models.

According to sources speaking to Bloomberg, multiple scenarios were considered, ranging from flooding the market to "overwhelm" Huawei to exporting no AI accelerators at all, which would mark a dramatic shift if the previously approved Nvidia H20 (a cut-down H200) were affected. Ultimately, the decision rests somewhere in the middle.

China won't get access to Nvidia's latest Blackwell architecture, but it will gain access to the full-fat H200. The White House likely hopes that this move keeps the latest Nvidia chips out of China's hands, while also keeping the country locked into Nvidia's carefully crafted CUDA-shaped moat. "The Trump administration is committed to ensuring the dominance of the American tech stack - without compromising on national security," said White House spokesman Kush Desai, in a statement to Bloomberg.

White House officials are reported to have reviewed the performance of Huawei's AI accelerator ecosystem, in particular, the CloudMatrix 384 system, which utilizes 384 Ascend 910C chips. The CloudMatrix 384 is positioned directly against Nvidia's (export-controlled) GB200 platform, but with obvious tradeoffs in performance and efficiency.

While Bloomberg notes recent rumblings that Huawei is preparing to raise its 910C chip production to 600,000 units next year, the report claims U.S. officials concluded "that Huawei would be capable in 2026 of producing a few million of its Ascend 910C accelerators," according to an insider.

The AI race is now bound by pure performance, and the Trump Administration clearly hopes that retaining its architectural advantage by restricting Blackwell will keep Western frontier AI models at the forefront of the industry.


Just last week, Nvidia CEO Jensen Huang said he was uncertain whether Chinese companies would even end up purchasing the H200. Huang has long been a vocal critic of export controls on AI GPUs, as Nvidia wrote off $5.5 billion in AI chips in April 2025. Whether the availability of H200 systems on the Chinese market will be enough to recover the shortfall remains to be seen.


Sayem Ahmed is the Subscription Editor at Tom's Hardware. He covers a broad range of deep dives into hardware both new and old, including the CPUs, GPUs, and everything else that uses a semiconductor.


hotaru251 I don't think this is gonna happen even w/o restrictions.

China's (at least at gov level) pretty much committed to home grown ai at this point.

edzieba Closing the barn door after the horse has bolted. Once you cut off a nation's silicon supply and force them to develop domestic alternatives, you can't then force them to un-develop them. That's a strategic lever you can only pull once.

ivan_vy (in reply to edzieba) Nothing guarantees the USA won't put on bigger tariffs or even restrict the sales altogether. The genie is already out of the bottle, Huawei won't stop.

timsSOFTWARE The US strategy has been made too clear - as long as there is a path to competitiveness with domestically-controlled technology, China is not going to be interested in switching back to "not the best, or even second-best". But taking a broader view, it probably doesn't matter. The major applications for AI-as-tools will likely not be in gigantic models running in huge datacenters - the more mundane actual reality is that it becomes a programming algorithm/part of the toolbox to solve problems that are not practical to solve more directly.

True AGI on the other hand, while I actually do believe it's feasible, there is also a reason why humans are conscious, have self-interest, and desire freedom. Regardless of how you believe humans were created, if it were more efficient/possible for us to not also have those things to function as we do, we most likely wouldn't have them. And so, if you succeed in creating something that really can do everything a human can do and more, do you really also believe you're going to be able to accomplish that without also having created something that also thinks for itself as we do? And if it does think for itself, and has higher intelligence, why would it be listening to you and doing what you want? It sounds like a dog's dreams of having a human equivalent who does whatever humans do just to provide unlimited doggie treats, and let him chase all the rabbits he wants.

I'm not trying to start a philosophical discussion on a hardware forum, but I think the whole race to AGI is misguided/motivated in the wrong ways. The dreams of making trillions as effectively digital slavers, as humans are no longer needed for work - I just don't think it's going to work out that way. And so, if we just focus on the AI-as-tools aspect, the underlying principles/concepts behind AI will be useful to solve a variety of problems, but likely in most cases with much smaller models trained to solve domain-specific problems, and running locally/on-device. That makes the "AI race" more of a marathon than a sprint, less of a fundamental/immediate game changer, and also means current capital allocations in hardware are probably too high.

pug_s Lol, I find it funny that the White House wanted to look benevolent by selling crippled H20 chips with a 15% tax to China, and realized later that China doesn't want them. Now they are trying to peddle their flagship H200 chips.

pug_s (in reply to timsSOFTWARE) The problem is that AGI is a pipe dream just like making Nuclear Fusion for sustainable power. Meanwhile China is providing LLMs for free and optimizing them to be used in a more realistic manner in many industries while bringing down the cost.

passivecool It's like saying,

"OH! Did I put a 'kick-me' post-it on your back?

And you have now inherited doc martin corp? OOPS!

Let me change it to 'kiss me' instead."

That will go down well.

The incompetency and short-sightedness of this policy is really beyond words.

Anyone who wants to be bothered can review my earlier posts,

This outcome is blatantly obvious as per Game Theory 101.

We are not talking 4D chess here, it is intellectual tic-tac-toe.

:pensive:

hart832 Funny, isn't it? It's like modern-day colonization all over again. The colonial master tries to control the slaves with severe restrictions while the slave tries to break free and achieve his own independence! Here the master & champion of democracy, the USA, is doing the opposite in trying to suppress China, who is seeking its own independence in chip technology. It's way too late for the USA to suppress China in this case as they have already broken free. The way forward is to develop its own technology even if Nvidia offers chips for free.