GPU depreciation could be the next big crisis coming for AI hyperscalers — after spending billions on buildouts, next-gen upgrades may amplify cashflow quirks

Even hyperscalers risk becoming unprofitable.

Managing asset depreciation is a core part of running most modern businesses. But while the cost of some assets can be spread over a decade or less, modern GPUs threaten a far more aggressive lifecycle. The gains made from one generation to the next, particularly in AI performance and chip efficiency, threaten to accelerate asset depreciation beyond what even some of the largest companies can handle. Analysts now worry that the pace of these advances could overwhelm companies riding the AI train.

Most corporations operate with an understanding that their servers will remain relevant for between three and five years, but in the world of "AI factories," where the speed and efficiency of your data center may equate to how much you can earn, even falling one generation behind could be terminal. What happens when the pace of innovation accelerates beyond the potential profitability of the hardware you spent so much time and money investing in?

Keeping up with the times

For many familiar with the industry, the cycle of new CPU and GPU upgrades is nothing new, and beyond the FOMO, there's nothing problematic about it. The optimization equation is different for hyperscalers, however.

The business world has never had to contend with this kind of upgrade cycle before. Sure, some industries could always benefit from new workstations for their staff, but with AI, there's the potential for your competitors to upgrade, making your hardware less profitable, and therefore your services far less desirable.

A next-gen GPU that can offer, for example, 50% greater performance and/or 30% efficiency savings (some generational upgrades can be greater than that), could make a data center running last-generation hardware decidedly unprofitable. Suddenly, your competitors' services are faster and cheaper to run than your own.
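To put rough numbers on that example, here is a minimal sketch of how a 50% performance gain and a 30% efficiency saving might combine into cost per unit of work. It rests on assumptions that are not in the reporting: the function name and the 30% energy share of running costs are hypothetical, non-energy costs per GPU-hour are taken to be the same for both generations, and the efficiency saving is read as 30% less energy per unit of work.

```python
def relative_cost_per_unit_of_work(perf_gain, energy_saving, energy_share):
    """Cost per unit of work on next-gen hardware, relative to last-gen (= 1.0).

    perf_gain:     fractional throughput gain, e.g. 0.5 for 50% faster
    energy_saving: fractional cut in energy per unit of work, e.g. 0.3
    energy_share:  share of last-gen running cost that is energy (assumed)
    """
    fixed_share = 1.0 - energy_share
    # Non-energy costs per GPU-hour are assumed unchanged, so they are
    # spread over (1 + perf_gain) times as much work.
    fixed_part = fixed_share / (1.0 + perf_gain)
    # Energy is modelled per unit of work, so the saving applies directly.
    energy_part = energy_share * (1.0 - energy_saving)
    return fixed_part + energy_part


# Illustrative inputs only: 50% faster, 30% less energy, 30% energy share.
ratio = relative_cost_per_unit_of_work(0.5, 0.3, energy_share=0.3)
print(f"Next-gen cost per unit of work: {ratio:.0%} of last-gen")
```

Under these assumed inputs, the newer hardware does the same work for about 68% of the cost. In practice, newer GPUs also cost more to buy, so the real gap depends on pricing that this sketch ignores.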

You can upgrade too, but you're competing for a limited pool of hardware, and if everyone else is trying to sell off their old GPUs at the same time, who's buying?

Maybe this wouldn't be so bad if upgrade cycles came once every half-decade, but Nvidia is pushing for annual GPU releases, with "Ultra" versions of those architectures often following later. For companies investing tens of billions of dollars in hardware, that could prove entirely unsustainable.

Throw in spiking electricity costs, increasing pressure for eco-conscious data center design, and an ongoing lack of clear profitability for AI in the first place, and it's a disaster waiting to happen.

Napkin math loans

Unfortunately, the problem is far more serious and immediate than the looming GPU depreciation threat. The AI industry's financing has been decidedly circular for some time, prompting many warnings of a looming bubble that could have serious fallout if it bursts. But it could be the confluence of these financing arrangements and the limited lifespan of the GPUs that the deals secured that presents the most pressing danger.

GPU purchases provide material value to a company as an asset that can be used as collateral for loans. The "Neocloud" companies, like CoreWeave, which offer cloud services without their own large software or hardware businesses, have made enormous outlays to secure the hardware they need to serve clients over the coming years. CoreWeave spent over $14 billion in 2025, and plans to spend double that in 2026, Bloomberg reports.

That won't be a problem if CoreWeave can maintain strong profitability through continued business for its cloud services. But that rests on a wide range of assumptions: that the AI bubble won't burst, that no major change in the way AI works will require a retooling of hardware or software, that hyperscalers won't develop their own ASIC designs, that international trade blocks won't hamper expansion or service access, and that the company won't fall behind in the AI hardware arms race.

While larger companies like Google, Amazon, Microsoft, and Meta are more insulated from these problems because of their diverse business interests and large cash stockpiles, they aren't immune. Indeed, they could end up falling foul of many of the same GPU depreciation issues, accelerating any domino effects that hit the AI industry.

Michael Burry, of Big Short fame, recently warned that these kinds of hyperscale companies have all extended the "useful years" rating for their servers in recent years. This lets them spend heavily on infrastructure expansion up front while spreading the depreciation expense over more years, so reported profits look higher even if revenue doesn't catch up.

Moving from three years to between five and six years is an enormous change, and it underestimates how rapidly AI hardware could advance in the coming years. Although there will be a place for older hardware, and it won't be immediately obsolete a year after deployment, its value may fall dramatically.
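The effect of a longer "useful years" rating is easy to see with simple straight-line depreciation. The sketch below is an illustration only: the $12 billion outlay is a hypothetical round number, the function name is made up for this example, and actual company accounting may use different methods and salvage values.

```python
def straight_line_schedule(cost, useful_years, salvage=0.0):
    """Return (annual_expense, book_values) under straight-line depreciation.

    book_values[n] is the remaining book value at the end of year n,
    with book_values[0] equal to the original cost.
    """
    annual = (cost - salvage) / useful_years
    book_values = [cost - annual * year for year in range(useful_years + 1)]
    return annual, book_values


outlay = 12.0  # hypothetical GPU spend, in billions of dollars

for years in (3, 6):
    annual, book = straight_line_schedule(outlay, years)
    print(
        f"{years}-year life: ${annual:.1f}B expense per year, "
        f"book value after year 2: ${book[2]:.1f}B"
    )
```

With these illustrative figures, doubling the useful life halves the annual depreciation expense, from $4 billion to $2 billion, and leaves $8 billion rather than $4 billion on the books after two years. If the hardware's market value falls faster than that schedule, the gap between book value and real value widens.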

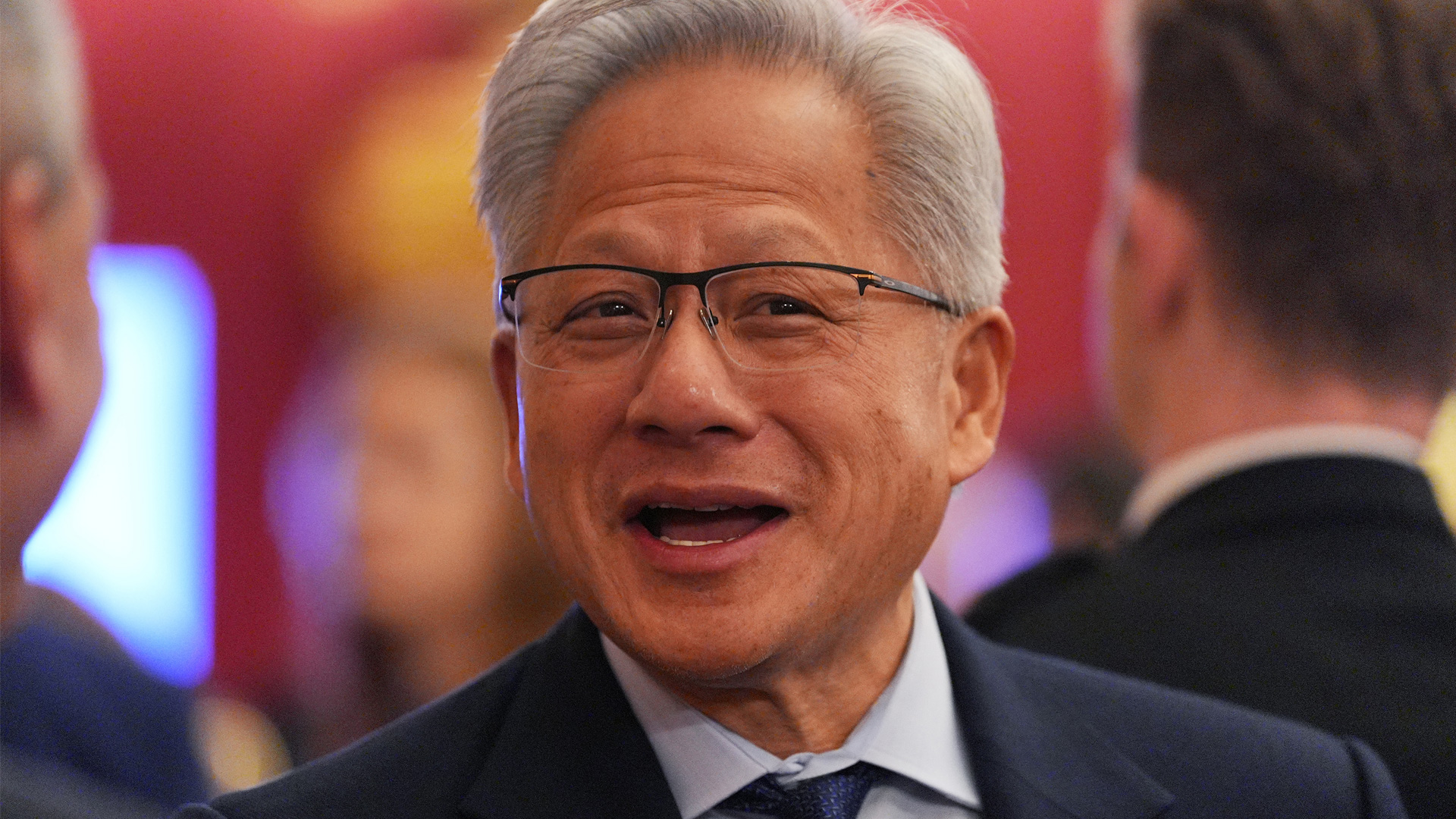

Nvidia CEO Jensen Huang said in March this year that when Blackwell GPUs were readily available, you "couldn't give Hoppers away." Although that's facetious, and Hopper GPUs are still incredibly popular, they are far less desirable than they were a year ago.

That will happen with Blackwell, in due course, and its next-generation successor, Vera Rubin, beyond that. So, companies may be forced back to new financing arrangements sooner than expected, with assets that have depreciated faster than they projected, within an industry that hasn't yet proven a credible business model for profit.


Jon Martindale is a contributing writer for Tom's Hardware. For the past 20 years, he's been writing about PC components, emerging technologies, and the latest software advances. His deep and broad journalistic experience gives him unique insights into the most exciting technology trends of today and tomorrow.