Samsung reportedly slashes HBM3E prices to woo Nvidia: cuts could put the heat on rivals SK hynix and Micron as company attempts to spur AI turnaround

High bandwidth memory could be the savior.

Samsung’s semiconductor business is coming off a rough quarter, with profits plunging nearly 94% year-over-year. It’s the weakest the chip division has looked in six quarters, and the culprits aren’t hard to identify. Between U.S. export restrictions limiting the sale of advanced chips to China and persistent inventory corrections, the company’s Device Solutions division recorded just 400 billion won ($287 million) in profit for Q2 2025, a steep fall from the 6.5 trillion won ($4.67 billion) it pulled in during the same period last year. However, Samsung is betting big that artificial intelligence will flip that narrative by the end of the year.

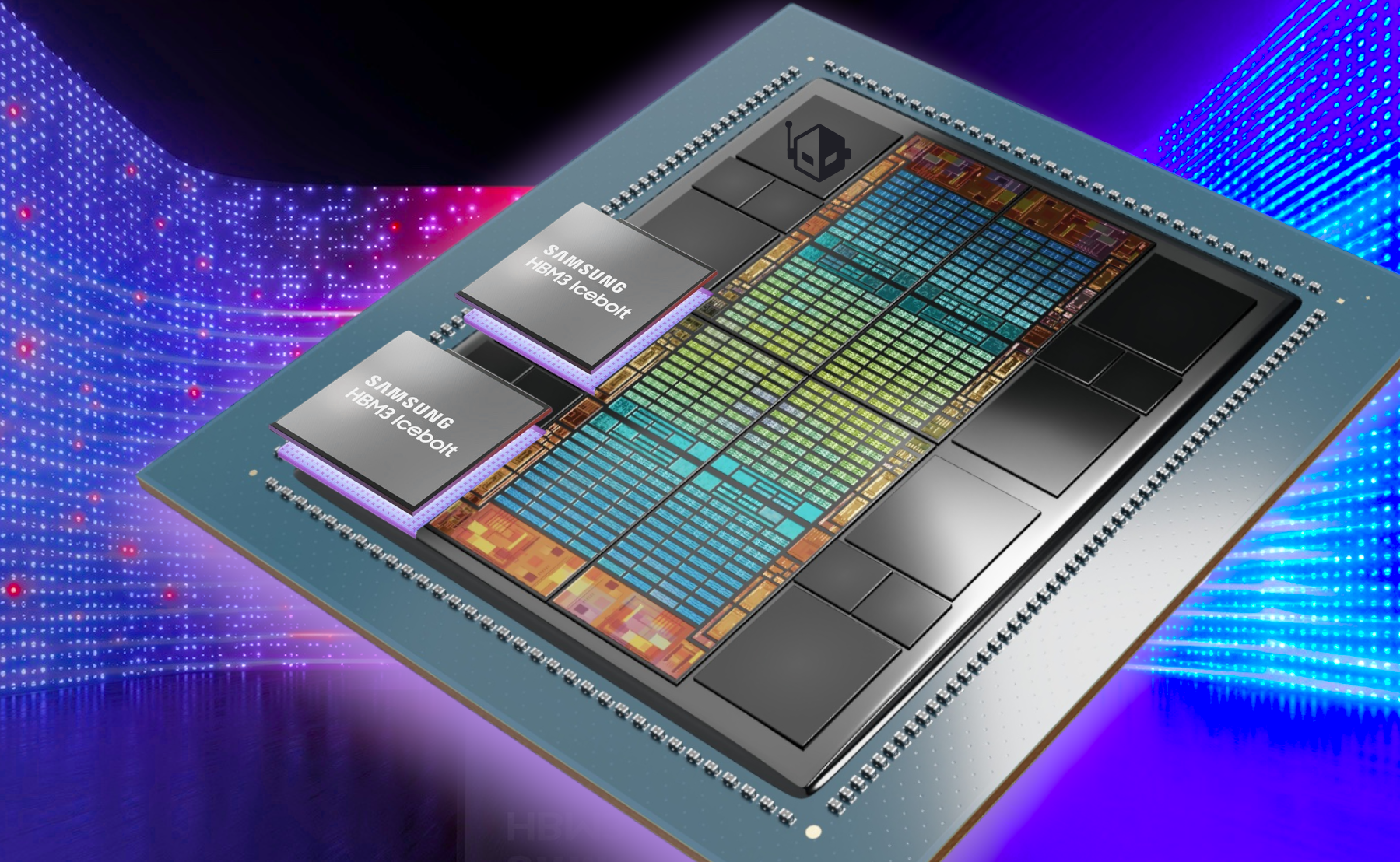

The recovery, as Samsung sees it, lies in HBM3E—the latest generation of High Bandwidth Memory used to feed data to AI accelerators at blazing speeds. According to a ZDNet Korea report, the company is actively working to lower the production costs of HBM3E in an effort to court Nvidia, which has so far leaned heavily on SK hynix for its AI GPU supply chain.

Samsung’s strategy is simple: make HBM3E more affordable and more widely available than anyone else does, and become indispensable to the future of AI computing. For context, HBM3E (High Bandwidth Memory Gen 3E) is critical in modern AI accelerators, especially for large language model training, where bandwidth and capacity bottlenecks limit performance. It’s the memory found on Nvidia’s top-end AI GPUs like the B300.

Quarterly sales at the company’s memory division rose 11% from Q1, reaching 21.2 trillion won ($15.2 billion), thanks in part to the expansion of HBM3E and high-density DDR5 for servers. NAND inventory is also being cleared out faster, with server SSD sales picking up. For the second half, Samsung plans to ramp up its production of 128GB DDR5, 24Gb GDDR7, and 8th-gen V-NAND, particularly for AI server deployments.

Adding a boost to its long-term outlook is Tesla’s recently confirmed $16.5 billion partnership with Samsung, which will see the South Korean firm manufacture next-gen AI6 chips at its Texas foundry through 2033. This could inject much-needed scale and stability into Samsung’s foundry operations, especially as the company contends with stiff competition from TSMC as well as geopolitical pressures.

Just this week, U.S. President Donald Trump slapped a 15% tariff on South Korean goods (reduced from 25%), clouding the second-half recovery narrative with fresh uncertainty. Samsung is walking a tightrope: betting on AI to pull it out of its slump while navigating volatile trade dynamics and trying to claw back market share in the fiercely competitive high-end memory business.

If Samsung can pull off cheaper, high-yield HBM3E production, it could change this narrative. Winning back Nvidia, which is reportedly testing Samsung’s HBM3E but still skeptical of thermals and power efficiency, would be a turning point. And with Meta, Microsoft, and Amazon all scaling their in-house AI silicon, memory suppliers like Samsung are in a new race to prove not just capability but value.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.