Nvidia's next-gen B300 GPUs have 1,400W TDP, deliver 50% more AI horsepower: Report

At a 200W higher TDP.

Nvidia's rollout of its first-gen Blackwell B200-series processors faced a roadblock due to yield-killing issues, and several unconfirmed reports of overheating servers have also emerged. However, as reported by SemiAnalysis, it looks like Nvidia's second-gen Blackwell B300-series processors are just around the corner. They will not only feature increased memory capacity but also offer 50% higher performance with just 200W of additional TDP.

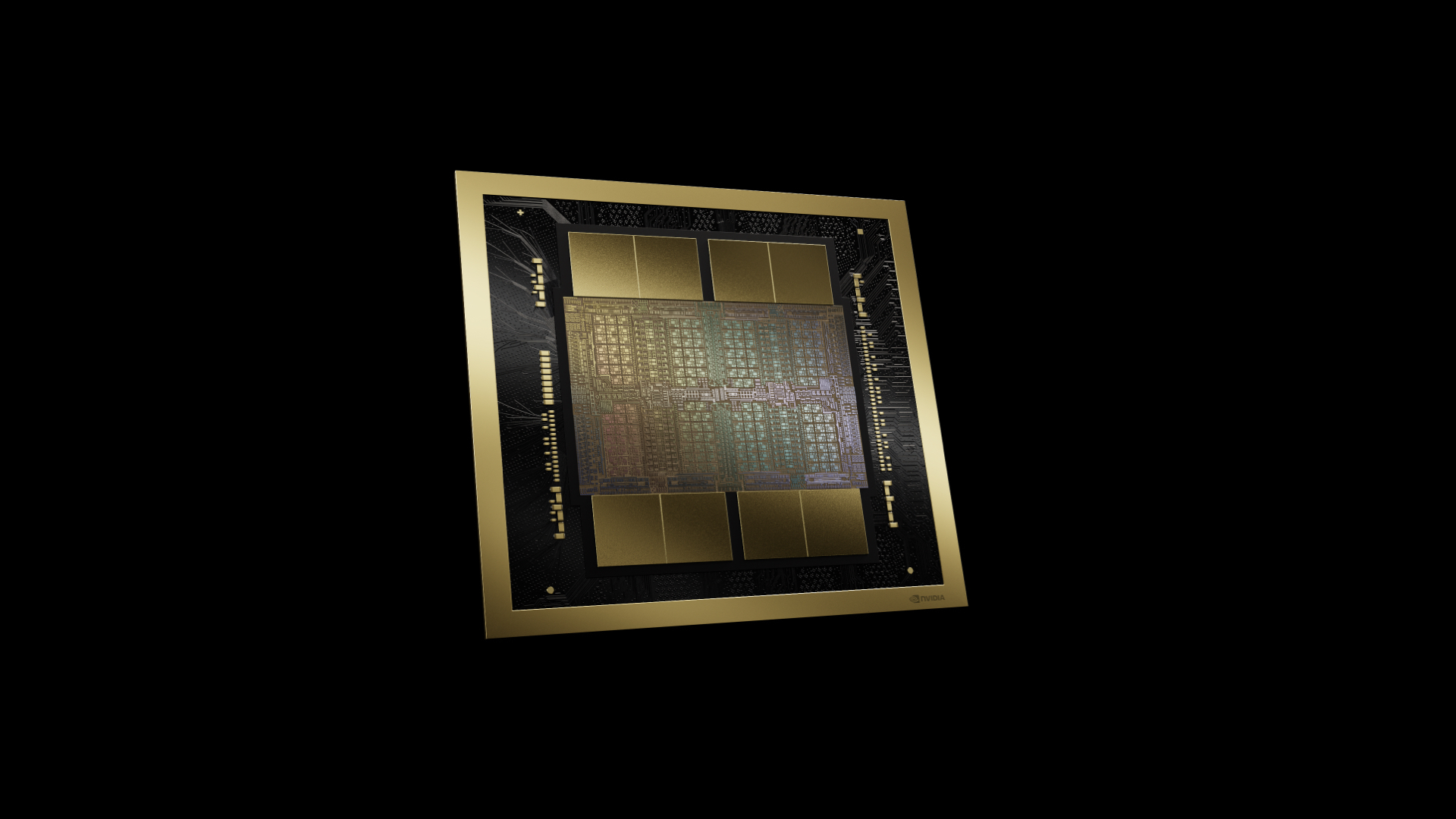

Nvidia's B300-series processors feature a significantly tweaked design but will still be made on TSMC's 4NP fabrication process (a 4nm-class node with performance enhancements tuned for Nvidia). According to the report, they will offer 50% more compute performance than the B200-series processors. That increase comes with a 1,400W TDP, which is only 200W higher than the GB200's. The B300 will become available roughly half a year after the B200, SemiAnalysis claims.
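As a rough back-of-the-envelope check, assume the 50% figure is measured against a GB200-class part running at 1,200W (the stated 1,400W minus 200W), and let P stand for that part's performance. Under that assumption, the implied change in performance per watt is:

$$
\frac{\text{perf/W}_{\text{B300}}}{\text{perf/W}_{\text{GB200}}} = \frac{1.5P / 1400\,\text{W}}{P / 1200\,\text{W}} = 1.5 \times \frac{1200}{1400} \approx 1.29
$$

If both reported figures hold, B300 would deliver roughly 29% more work per watt, even though raw performance rises by 50%.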

The second significant improvement of Nvidia's B300-series is its use of 12-Hi HBM3E memory stacks, which will provide 288 GB of memory with 8 TB/s of bandwidth. The larger memory capacity and higher compute throughput should enable faster training and inference. The report also claims up to three times lower inference cost, because B300 can handle larger batch sizes and longer sequence lengths while also addressing latency in user interactions.
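Why does more memory translate into larger batches and longer sequences? During inference, each active sequence keeps a key/value (KV) cache in HBM that grows with its length, so the memory left over after the model weights caps how many sequences can run at once. The sketch below illustrates this under loudly hypothetical assumptions: a 70B-class model with 80 layers, 8 KV heads of dimension 128, an FP16 KV cache, and about 70 GB of FP8 weights. It compares 288 GB against 192 GB, the capacity commonly quoted for B200 (a figure not stated in this report). The function names are illustrative, not from any Nvidia software.

```python
# Hypothetical sketch: how extra HBM capacity turns into more concurrent
# inference sequences. All model dimensions are illustrative assumptions,
# not figures from the report. Ignores activations and framework overhead.

def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_elem: int) -> int:
    """Bytes of key/value cache one token occupies across all layers."""
    # Factor of 2: one key vector and one value vector per KV head per layer.
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_concurrent_sequences(hbm_gb: int, weights_gb: int, seq_len: int,
                             per_token_bytes: int) -> int:
    """How many full-length sequences fit in the HBM left after the weights."""
    free_bytes = (hbm_gb - weights_gb) * 10**9  # decimal GB, as on spec sheets
    per_sequence = seq_len * per_token_bytes
    return free_bytes // per_sequence


# Hypothetical 70B-class model: 80 layers, 8 KV heads of dimension 128,
# FP16 KV cache (2 bytes per element), FP8 weights (~70 GB).
per_token = kv_cache_bytes_per_token(layers=80, kv_heads=8, head_dim=128,
                                     bytes_per_elem=2)

for hbm in (192, 288):
    n = max_concurrent_sequences(hbm, weights_gb=70, seq_len=32_768,
                                 per_token_bytes=per_token)
    print(f"{hbm} GB HBM -> {n} concurrent 32K-token sequences")
# prints:
# 192 GB HBM -> 11 concurrent 32K-token sequences
# 288 GB HBM -> 20 concurrent 32K-token sequences
```

With these assumed numbers, 50% more HBM nearly doubles the number of long sequences that fit, because the weights take a fixed slice off the top and all the added capacity goes to the KV cache.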

In addition to higher compute performance and more memory, Nvidia's second-gen Blackwell machines may also adopt the company's 800G ConnectX-8 NIC. This NIC has twice the bandwidth of the current 400G ConnectX-7 and 48 PCIe lanes compared to 32 on its predecessor. This will provide significant scale-out bandwidth improvements for new servers, which is a win for large clusters.

Another major change with B300 and GB300 is that Nvidia will reportedly rework its supply chain relative to B200 and GB200. The company will no longer attempt to sell the whole reference motherboard or the whole server pod. Instead, Nvidia will sell only the B300 on an SXM Puck module, the Grace CPU, and a host management controller (HMC) from Axiado. As a result, more companies will be able to participate in the Blackwell supply chain, which is expected to make Blackwell-based machines more accessible.

With B300 and GB300, Nvidia will give its partners among hyperscalers and OEMs much more freedom to design Blackwell machines, which will affect their pricing and perhaps performance.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


JRStern: Sounds largely credible.

However, this is veeeeeeeery dangerous for NVidia. This is like Marketing Sin #0: NEVER announce the superior next-gen product until you've exhausted the market for the present generation.

This could cause a huge wave of cancellations for B200.*

Wait and see.

*unless that's actually what NVidia would like to happen, say if they can't actually deliver on promised volumes.

thestryker:

JRStern said: *unless that's actually what NVidia would like to happen, say if they can't actually deliver on promised volumes.

Given the changes to the overall deployment design, I think you might be right on here.

JRStern:

stanic said: Sorry wut? You ran out of keys on the keyboard or something?

Fixed In Next Releases