Microsoft unveils Azure Cobalt 200 CPU, in-house chip targets higher performance and deeper integration — Arm-based chip is equipped with 132 cores and manufactured using TSMC's 3nm process

New in-house processor targets higher performance, lower power, and deeper integration across Azure’s cloud stack.

Microsoft has revealed its next major in-house server processor, the Azure Cobalt 200, a 132-core Arm-based CPU built on TSMC’s 3nm process and designed to raise the performance ceiling across Azure’s general-purpose compute tiers.

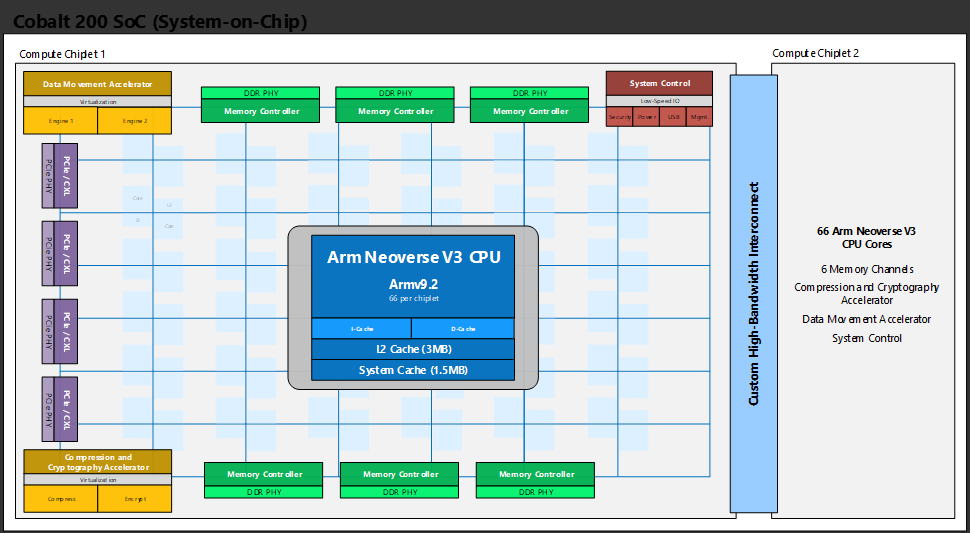

Like the Cobalt 100 before it, the new processor is built on an Arm Neoverse platform. This generation, however, moves to Arm’s latest Neoverse CSS V3 subsystem and combines two 66-core chiplets for a total of 132 cores. Microsoft describes the chip as its most efficient data center CPU to date and says its internal telemetry shows more than 50% higher performance than Cobalt 100 across a blended set of real-world workloads.

3nm silicon and a rebuilt compute complex

As detailed in the Azure Infrastructure blog, Cobalt 200 is the first Azure processor built on TSMC’s 3nm node, and the larger transistor budget shows throughout the design. Each of the 132 cores is an Arm Neoverse V3-class CPU with 3MB of L2 cache. The processor also has 12 memory channels, and Microsoft has customized the memory controller to enable always-on memory encryption and to support Arm’s Confidential Compute Architecture (CCA). CCA lets the chip isolate tenant memory from the host or hypervisor while keeping performance overhead low enough for multitenant cloud environments.
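The per-core and per-socket figures reported for Cobalt 200 lend themselves to some quick arithmetic. The Python sketch below derives aggregate L2 capacity and the ratio of cores to memory channels from the numbers in this article. The `SocketConfig` class is a hypothetical helper for illustration, not anything Microsoft publishes; it treats each core’s L2 as separate, as the per-core figure implies, and ignores any shared cache and memory bandwidth, which the article does not specify.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SocketConfig:
    """Hypothetical container for per-socket figures quoted in the article."""
    chiplets: int
    cores_per_chiplet: int
    l2_mb_per_core: int
    memory_channels: int

    @property
    def total_cores(self) -> int:
        return self.chiplets * self.cores_per_chiplet

    @property
    def total_l2_mb(self) -> int:
        # Each core has its own L2, so the socket-wide figure is a simple sum.
        return self.total_cores * self.l2_mb_per_core

    @property
    def cores_per_memory_channel(self) -> float:
        # How many cores share each memory channel on average.
        return self.total_cores / self.memory_channels


cobalt_200 = SocketConfig(chiplets=2, cores_per_chiplet=66, l2_mb_per_core=3, memory_channels=12)

print(cobalt_200.total_cores)
print(cobalt_200.total_l2_mb)
print(cobalt_200.cores_per_memory_channel)
```

Running it gives 132 cores, 396MB of aggregate L2 cache, and an average of 11 cores per memory channel.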

One of the clearer differences between Cobalt 200 and the previous generation lies in how Microsoft has moved certain common data center tasks into hardware. Compression and cryptography engines are now part of the SoC. These blocks sit alongside the CPU cores and take over compression, encryption, and decryption work that previously consumed a noticeable share of compute time on database and analytics workloads. Microsoft has highlighted SQL Server as an early beneficiary of these offloads, with I/O encryption handled directly on the silicon.
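To get a feel for the kind of work these engines take off the cores, the Python sketch below times software compression with the standard-library `zlib` module, measuring CPU time rather than wall-clock time. It is an illustration only: the test payload is made up, it covers compression but not encryption (which would need a third-party library), and it does not touch Cobalt 200’s compression engine, whose programming interface this article does not describe.

```python
import os
import time
import zlib


def cpu_seconds_for_compression(payload: bytes, level: int = 6, rounds: int = 10) -> float:
    """Return the CPU time (not wall-clock time) spent compressing `payload` in software."""
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    start = time.process_time()
    for _ in range(rounds):
        compressed = zlib.compress(payload, level)
    elapsed = time.process_time() - start
    # Verify the round trip so the timed work is real, lossless compression.
    if zlib.decompress(compressed) != payload:
        raise RuntimeError("zlib round trip failed")
    return elapsed


# Hypothetical, semi-compressible test data: random bytes mixed with repetitive text,
# loosely mimicking database pages or telemetry records.
payload = b"".join(os.urandom(64) + b"INSERT INTO telemetry VALUES " * 4 for _ in range(4096))
print(f"{cpu_seconds_for_compression(payload):.3f} CPU-seconds of software compression")
```

Every CPU-second reported here is core time unavailable to application threads; the point of on-die compression and crypto engines is to move much of that work off the cores.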

Thermals and power remain a focus with Cobalt 200, too. Microsoft says the chip delivers its performance gains while remaining the most power-efficient platform in Azure. Each core supports dynamic voltage and frequency scaling, so the processor can adjust its power use to match workload demand.

Together with the 3nm process and the dedicated compression and encryption accelerators, per-core voltage and frequency scaling lets the chip maximize throughput without running every core at peak frequency simultaneously. Microsoft has not published TDP or detailed thermal specifications, however, so for now the company is positioning Cobalt 200 chiefly on its performance gains.
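The basic trade-off behind per-core voltage and frequency scaling can be sketched with the standard approximation that dynamic CMOS power scales with V²·f when switched capacitance and activity are held fixed. The Python example below pairs that model with a simple governor that picks the slowest operating point able to meet a core’s demand. The voltage/frequency table, the `pick_operating_point` policy, and all numbers are hypothetical; Microsoft has not published Cobalt 200’s operating points or described how its power management chooses among them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_ghz: float
    volts: float


# HYPOTHETICAL voltage/frequency table, sorted slowest to fastest.
# Cobalt 200's real operating points have not been published.
OPERATING_POINTS = [
    OperatingPoint(1.5, 0.65),
    OperatingPoint(2.2, 0.75),
    OperatingPoint(3.0, 0.90),
]


def relative_dynamic_power(op: OperatingPoint, ref: OperatingPoint) -> float:
    """Dynamic power of `op` relative to `ref`, using P ~ V^2 * f (capacitance held fixed)."""
    return (op.volts ** 2 * op.freq_ghz) / (ref.volts ** 2 * ref.freq_ghz)


def pick_operating_point(demand_ghz: float) -> OperatingPoint:
    """Hypothetical policy: the slowest (lowest-power) point that covers the demand."""
    for op in OPERATING_POINTS:
        if op.freq_ghz >= demand_ghz:
            return op
    return OPERATING_POINTS[-1]  # demand exceeds the table, so saturate at the top point


peak = OPERATING_POINTS[-1]
for demand in (0.8, 2.0, 3.5):
    op = pick_operating_point(demand)
    print(f"demand {demand} GHz -> {op.freq_ghz} GHz, "
          f"{relative_dynamic_power(op, peak):.2f}x peak dynamic power")
```

With these made-up points, dropping a core from the top point to the middle one (2.2GHz at 0.75V) roughly halves its dynamic power, because the voltage reduction counts twice through the squared term.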

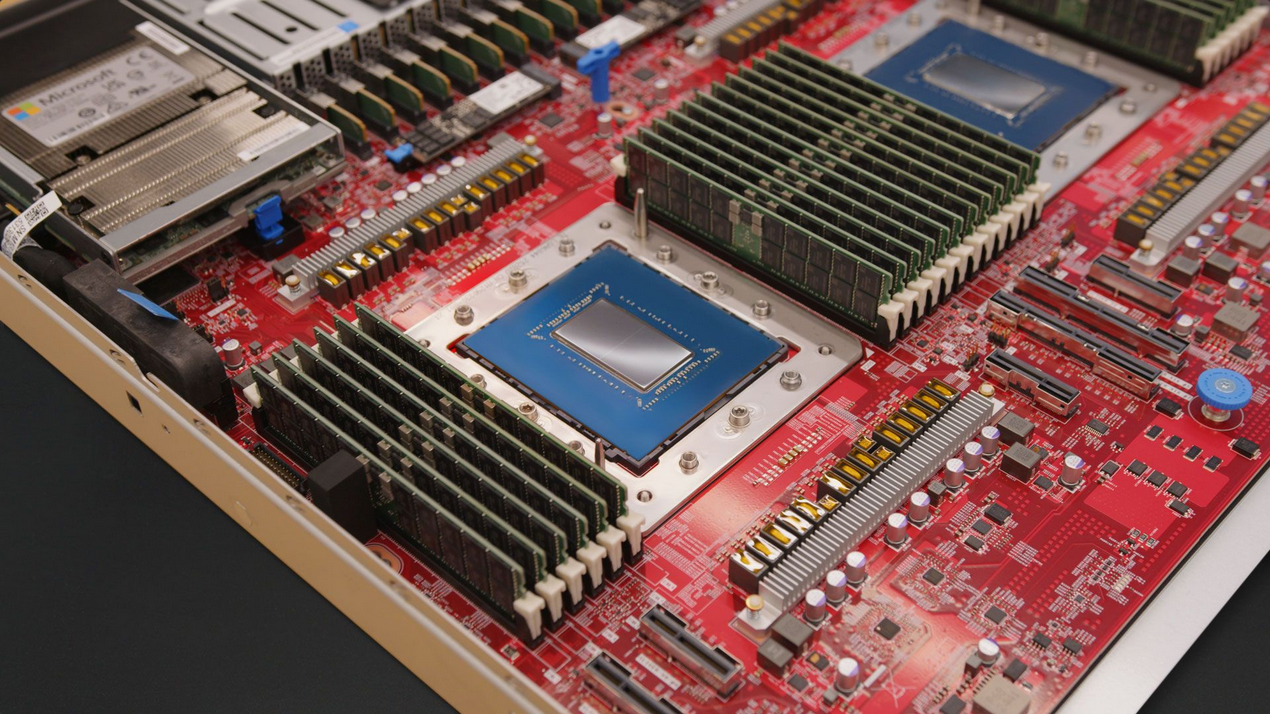

Cobalt 200 is delivered as part of a broader hardware stack that combines Microsoft’s own CPUs, its networking and storage offload engines, and a new hardware security module. Each server node pairs the processor with Azure Boost, the DPU that handles software-defined networking and remote storage. This offload moves packet processing and I/O scheduling entirely off the CPU, leaving its cores free for application and service workloads.

A general-purpose chip in a world chasing AI

The cloud silicon market is moving quickly. Amazon has its Graviton line for general compute and the Trainium and Inferentia families for training and inference. Google continues to expand its TPU offerings with high-bandwidth pods tuned for transformers. NVIDIA’s H100 and H200 GPUs remain the dominant accelerators for large-model training, and AMD is pushing MI300 in the HPC and AI segments.

Microsoft’s Cobalt 200 sits in a different part of this spectrum. It’s not an accelerator and is not meant to compete with H200 or Trainium2 clusters. Its job is to deliver high sustained throughput on the services that form the foundation of Azure’s cloud business, such as web front ends, microservices, transactional databases, streaming ingestion, and the fast-growing category of CPU-bound AI inference. In these categories, core count, cache size, and memory bandwidth matter most.

A good parallel is Amazon’s Graviton3, which significantly improved AWS’s price-performance for web and database workloads. The difference is that Microsoft has pushed further into customization. The 132-core layout and 3nm node give Cobalt 200 a larger execution footprint than Graviton3’s 64 cores on 5nm, while the compression and crypto blocks target specific Azure workloads such as SQL Server, Cosmos DB, and large-scale telemetry processing. Arm’s own figures for the CSS V3 platform indicate performance-per-socket gains of up to 50% over N2-based designs, in line with Microsoft’s internal benchmark results.

Where Cobalt 200 appears most competitive is in the blend of general compute and secure multitenancy. Always-on memory encryption and CCA support allow Microsoft to extend its confidential computing offerings without relying solely on x86 features such as AMD SEV-SNP. These features carry substantial weight in virtual machine selection for regulated enterprises, particularly as more organizations shift toward Arm instances to reduce costs and power consumption.

Cobalt 200 is now live in production servers inside Microsoft’s own data centers, with broader rollout and customer availability planned for 2026. While the company has not yet detailed which VM families will use the chip, it has confirmed that internal workloads — including parts of Office, Teams, and Azure SQL — are already moving to the platform. Given the scale of those services, Cobalt 200 is poised to become one of the most widely deployed Arm-based CPUs in any cloud, rivaled only by AWS’s Graviton line.

Cobalt’s arrival also marks a deeper shift inside Microsoft. After decades of relying on Intel and AMD, the company has moved decisively into custom design, not just with CPUs, but across accelerators, networking, and memory infrastructure. That gives Azure tighter vertical control over power, performance, and cost, and lets Microsoft tune silicon around its own telemetry.

As the cloud infrastructure layer reconfigures itself to meet the demands of large models and global inference, hyperscalers are turning inward to their own chips, stacks, and tooling. Cobalt 200 is the latest indication that the future of the cloud will be built on in-house silicon.


Luke James is a freelance writer and journalist. Although his background is in legal, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.