AMD’s record quarter shows strength in client and AI, as Ryzen leads the charge — but data center dominance could be out of reach

Ryzen 7 9800 and MI300X sales drive growth, while new risks to AMD’s EPYC foothold emerge from the Nvidia-Intel partnership.

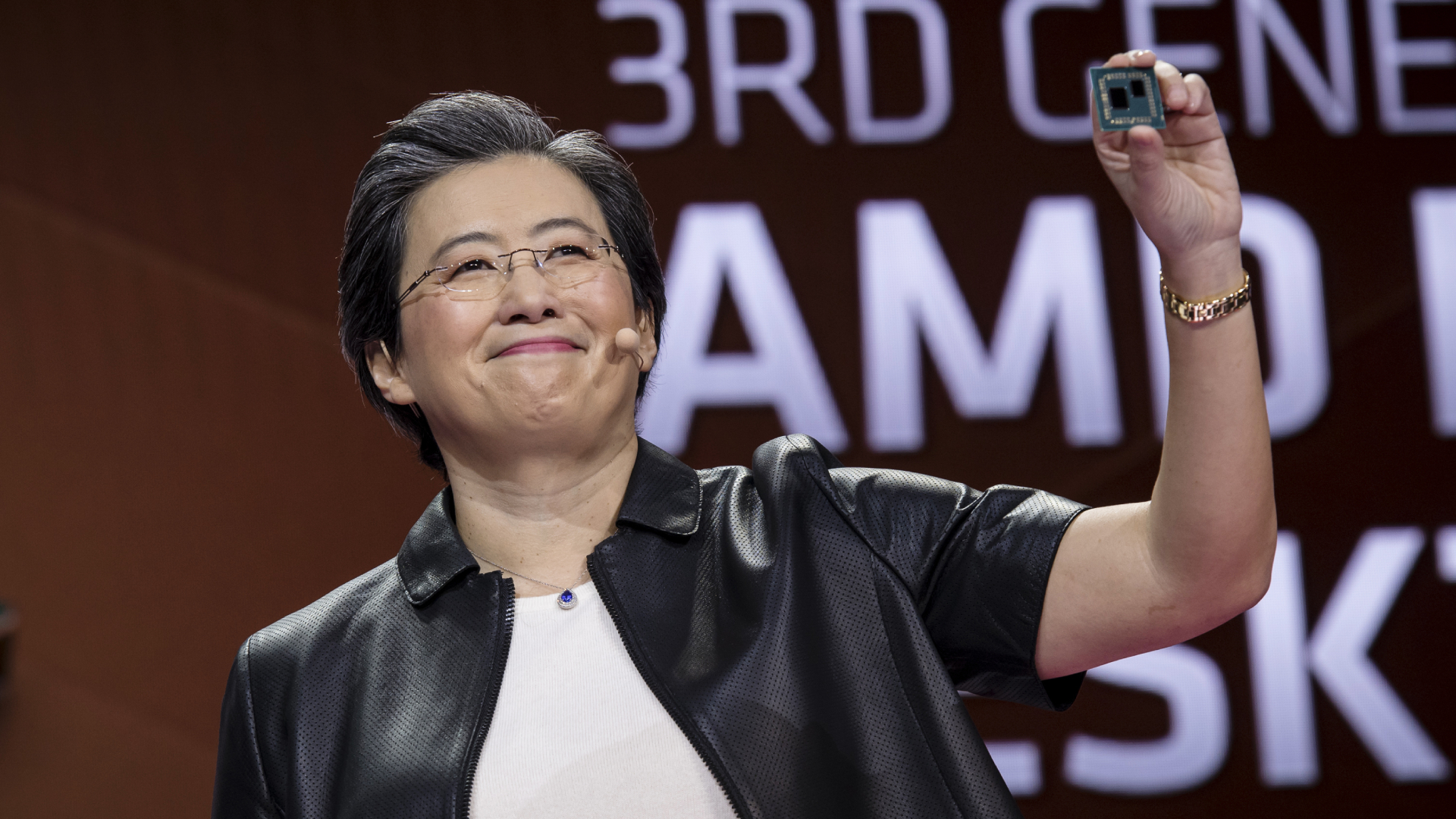

AMD has posted its highest quarterly revenue to date, booking $9.2 billion for Q3 2025. The company broke records in both its client and data center segments, with the Ryzen 7 9800X3D powering a rebound in consumer sales, and the Instinct MI300 series finally achieving meaningful volume.

But while the company’s margins are up and guidance looks stable, AMD also flagged major risks in its data center business — from export constraints and packaging bottlenecks, to a potentially seismic shift in how Nvidia builds its next-generation platforms.

Ryzen drives client revenue to new heights

The biggest surprise in AMD’s earnings print wasn’t the record-breaking top line itself, but the $2.8 billion of it that came from client processors. That figure includes strong sell-through of the Ryzen 7 9800 and 9800X3D, two chips that have outperformed expectations in gaming laptops and compact desktops. The Zen 5-based 9800X3D in particular has been noted for its aggressive efficiency profile and broad adoption by OEMs.

More revealing is what this rebound says about AMD’s design focus. While Intel’s Meteor Lake platform continues to find traction on the strength of its integrated NPU, AMD has leaned into gaming-first, performance-per-watt silicon. As a result, AMD has clawed back meaningful share in premium laptop designs.

On the data center side, AMD reported $4.3 billion in revenue (a 22% year-over-year increase), driven by stronger EPYC adoption and meaningful Instinct MI300X shipments. This is the first quarter in which AMD has made a serious dent in the accelerator market, where it has long trailed Nvidia. That growth came without any revenue from China-bound MI308 shipments, which were blocked by ongoing U.S. export restrictions and domestic bans in China. AMD confirmed that its Q3 and Q4 guidance excludes those shipments entirely.

The company’s focus isn’t just on GPUs; it’s also building a modular, open rack-scale platform. Announced back in June and dubbed Helios, AMD’s rack reference design combines MI400-class accelerators, EPYC “Venice” CPUs, and Pensando “Vulcano” AI NICs, all connected via Ultra Ethernet. The goal is to win over hyperscalers and HPC customers looking for a coherent, Ethernet-native alternative to Nvidia’s NVLink- and InfiniBand-centric products.

AMD’s Helios pitch is part of a long-term strategy to offer a full-stack, open alternative to Nvidia’s vertically integrated systems. While Helios is built around the upcoming MI400-series GPUs, AMD’s current MI355X — its top-end CDNA 4 part for 2025 — already reflects the shift toward inference-first workloads.

With 288GB of HBM3E, support for FP4 and INT4, and an aggressive focus on cost-per-token rather than peak FLOPs, MI355X is optimized for large-scale inference, where memory bandwidth and low-precision throughput matter most. AMD is explicitly targeting the economics of production AI, not just training benchmarks.

Oracle, one of AMD’s largest cloud customers, is already rolling out MI300- and MI350-based infrastructure, and plans to adopt MI400-series platforms starting in 2026.

Nvidia-Intel puts pressure on EPYC

The most significant long-term threat to AMD’s data center business may not be in GPUs at all. In September, Nvidia and Intel announced an unexpected partnership that includes a custom x86 CPU built on Intel 18A, designed specifically for future Nvidia platforms. If it becomes the default attach CPU for Nvidia’s Rubin or GB300-class AI systems, AMD’s share of the server CPU footprint inside Nvidia-designed racks could shrink.

This matters because EPYC currently benefits from Nvidia’s lack of an x86 license. If that dependency disappears, AMD risks losing high-margin CPU slots inside third-party AI deployments, even if Instinct wins elsewhere.

AMD also faces growing competition from in-house silicon at hyperscalers and AI labs. Broadcom is currently working on a custom chip project with OpenAI that could scale to 10 gigawatts of compute by 2029. Amazon already uses its Trainium chips in production, and Microsoft’s Maia program, though delayed, is still expected to launch. Each of these efforts eats into the total addressable market that AMD and Nvidia compete over.

Further challenges for AMD’s outlook include pending litigation. Hybrid bonding, the foundational tech behind its 3D V-Cache stack, is now the subject of two patent suits filed by Adeia. While neither is likely to impact short-term shipments, both could introduce licensing costs or design constraints if successful.

A record quarter with real headwinds

AMD’s Q3 was both strong and coherent. Ryzen’s momentum validated Zen 5’s efficiency push and helped balance a market tilted toward AI. Instinct MI300X found traction. Data center revenue reached new highs. And yet, it was also a map of AMD’s next challenges.

Nvidia’s vertical integration and its new x86 CPU partnership with Intel could erode EPYC’s share in high-value racks. In addition, supply chain bottlenecks will slow Instinct ramps no matter how strong the architecture, and custom silicon from hyperscalers and AI labs such as OpenAI is beginning to absorb budget that once went to merchant GPUs. Finally, unresolved geopolitical tensions between the U.S. and China add further uncertainty.

AMD is executing well despite all these factors, but it’s operating against a backdrop where the rules and alliances keep changing.

Luke James is a freelance writer and journalist. Although his background is in legal, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.