Google deploys new Axion CPUs and seventh-gen Ironwood TPU — training and inferencing pods beat Nvidia GB300 and shape 'AI Hypercomputer' model

Chips complete Google's portfolio of custom silicon.

Today, Google Cloud introduced new AI-oriented instances powered by its own Axion CPUs and Ironwood TPUs. The instances target both training and low-latency inference of large-scale AI models. Their key feature is efficient scaling of those models, enabled by the very large scale-up world size of Google's Ironwood-based systems (up to 9,216 TPUs in a single pod).

Millions of Ironwood TPUs for training and inference.

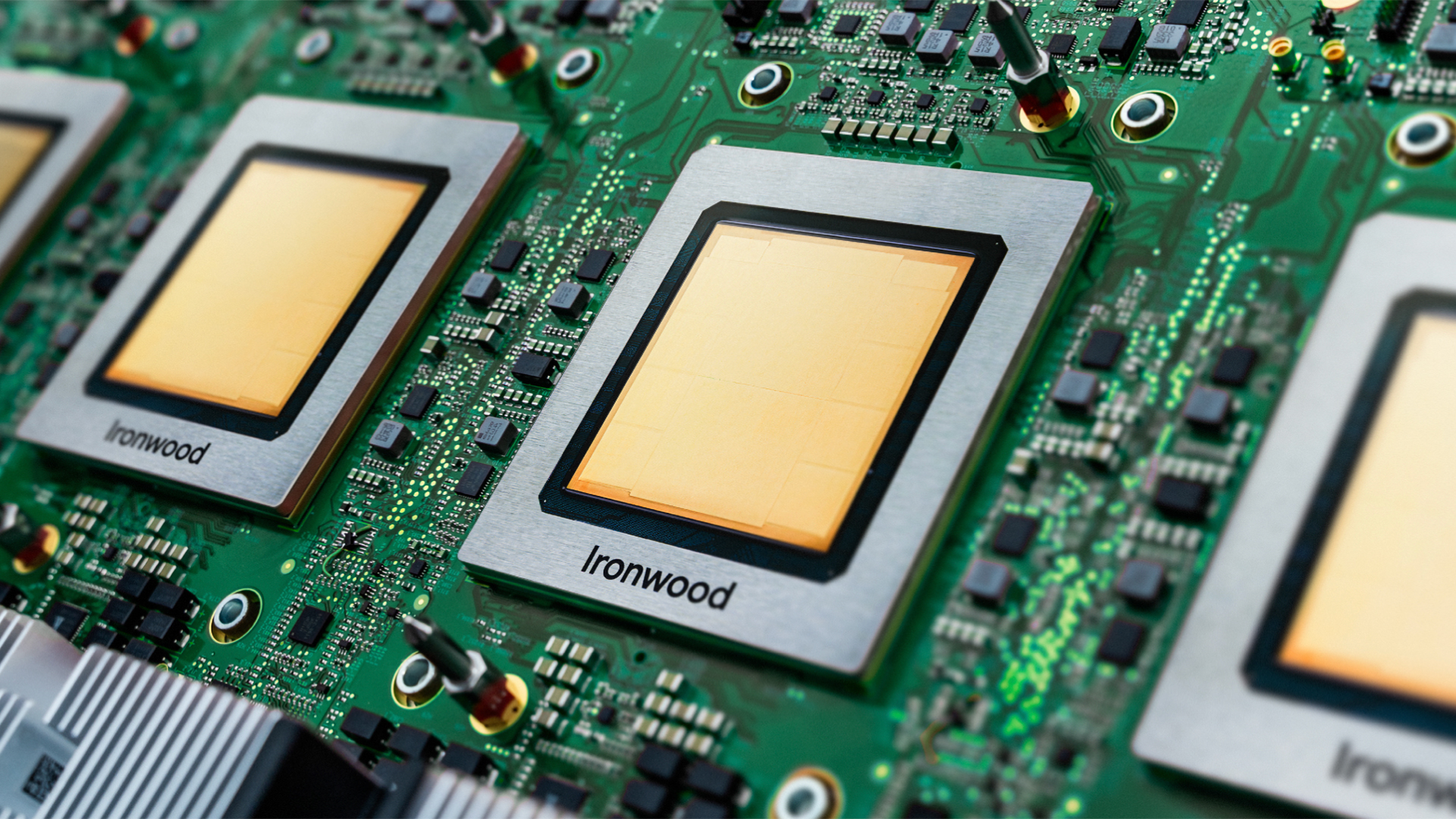

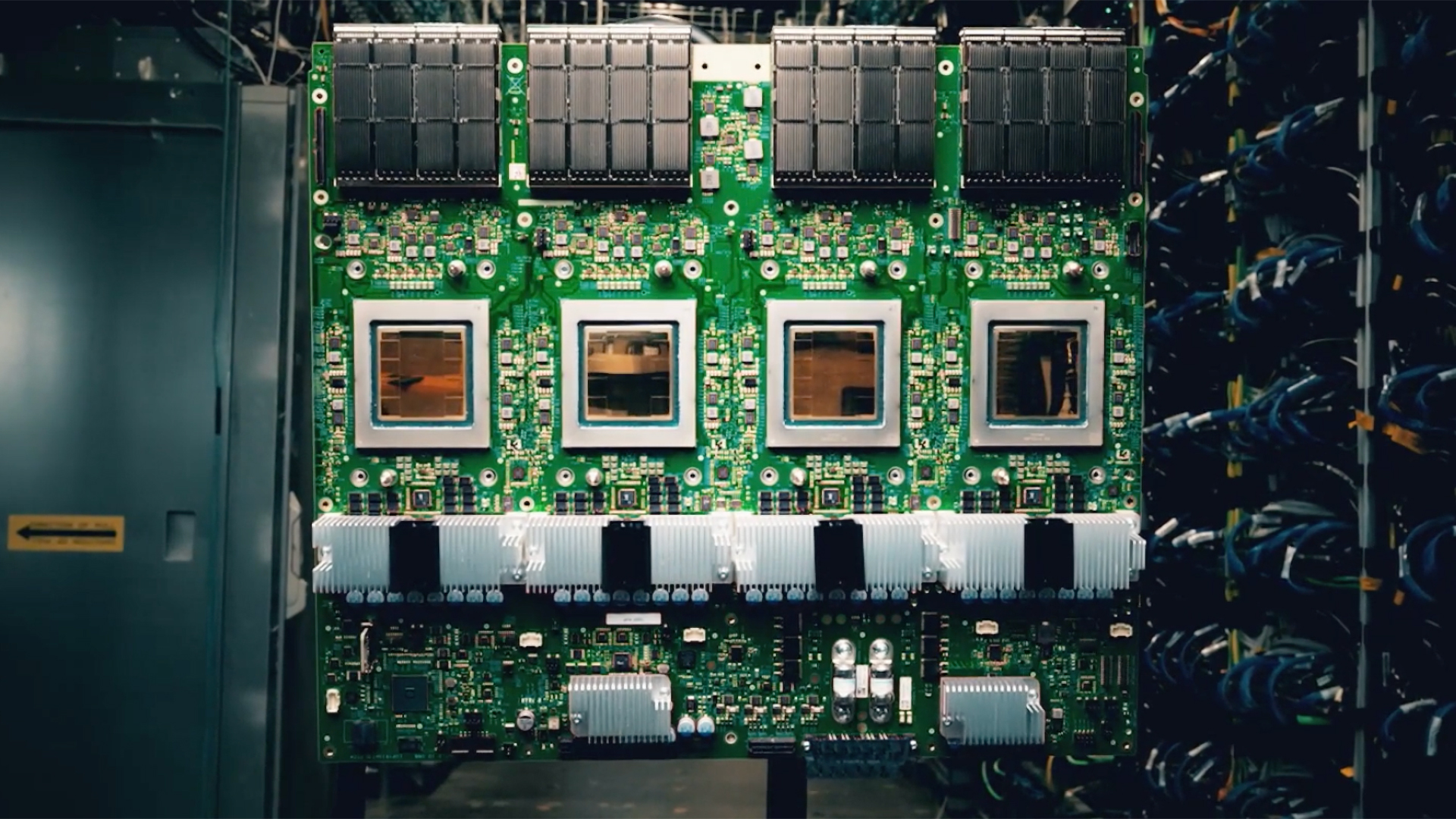

Ironwood is Google's seventh-generation tensor processing unit (TPU). Each chip delivers 4,614 FP8 TFLOPS and carries 192 GB of HBM3E memory with up to 7.37 TB/s of bandwidth. Ironwood pods scale up to 9,216 accelerators, delivering a total of 42.5 FP8 ExaFLOPS for training and inference. That far exceeds the 0.36 FP8 ExaFLOPS of Nvidia's GB300 NVL72 system, although the comparison pits a 9,216-chip pod against a 72-GPU rack. The pod is linked by a proprietary 9.6 Tb/s Inter-Chip Interconnect network and carries roughly 1.77 PB of HBM3E in total, again exceeding what Nvidia's competing platform offers.
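As a quick sanity check on the pod-level figures, the short Python sketch that follows multiplies the per-chip numbers quoted above by the 9,216-chip pod size. It assumes decimal units (1 ExaFLOPS = 10^6 TFLOPS, 1 PB = 10^6 GB) and simply reuses the article's 0.36 ExaFLOPS figure for the GB300 NVL72; the `pod_totals` helper is a hypothetical name used only for illustration.

```python
# Per-chip Ironwood figures as quoted in the article
CHIP_FP8_TFLOPS = 4_614
CHIP_HBM_GB = 192
POD_CHIPS = 9_216
GB300_NVL72_FP8_EXAFLOPS = 0.36  # figure quoted in the article, not independently verified


def pod_totals(chips: int) -> tuple[float, float]:
    """Return (FP8 ExaFLOPS, HBM capacity in PB) for a pod of `chips` Ironwood TPUs."""
    exaflops = chips * CHIP_FP8_TFLOPS / 1_000_000  # assumption: 1 EFLOPS = 1e6 TFLOPS
    hbm_pb = chips * CHIP_HBM_GB / 1_000_000        # assumption: decimal units, 1 PB = 1e6 GB
    return exaflops, hbm_pb


exaflops, hbm_pb = pod_totals(POD_CHIPS)
ratio = exaflops / GB300_NVL72_FP8_EXAFLOPS

print(f"{exaflops:.1f} EFLOPS FP8, {hbm_pb:.2f} PB HBM3E")
print(f"~{ratio:.0f}x the quoted GB300 NVL72 figure")
```

The roughly 118x ratio compares an entire 9,216-chip pod with a single 72-GPU rack, so it reflects the size of the scale-up domain rather than per-chip throughput.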

Ironwood pods, which pair Axion CPUs with Ironwood TPUs, can be joined into clusters of hundreds of thousands of TPUs that form part of Google's aptly named AI Hypercomputer, an integrated supercomputing platform that unites compute, storage, and networking under one management layer. To improve the reliability of both the ultra-large pods and the AI Hypercomputer as a whole, Google uses a reconfigurable fabric called Optical Circuit Switching, which instantly routes around hardware interruptions to sustain continuous operation.

IDC data credits the AI Hypercomputer model with an average 353% three-year ROI, 28% lower IT spending, and 55% higher operational efficiency for enterprise customers.

Several companies are already adopting Google's Ironwood-based platform. Anthropic plans to use as many as one million TPUs to operate and expand its Claude model family, citing major cost-to-performance gains. Lightricks has also begun deploying Ironwood to train and serve its LTX-2 multimodal system.

Axion CPUs: Google finally deploys in-house designed processors

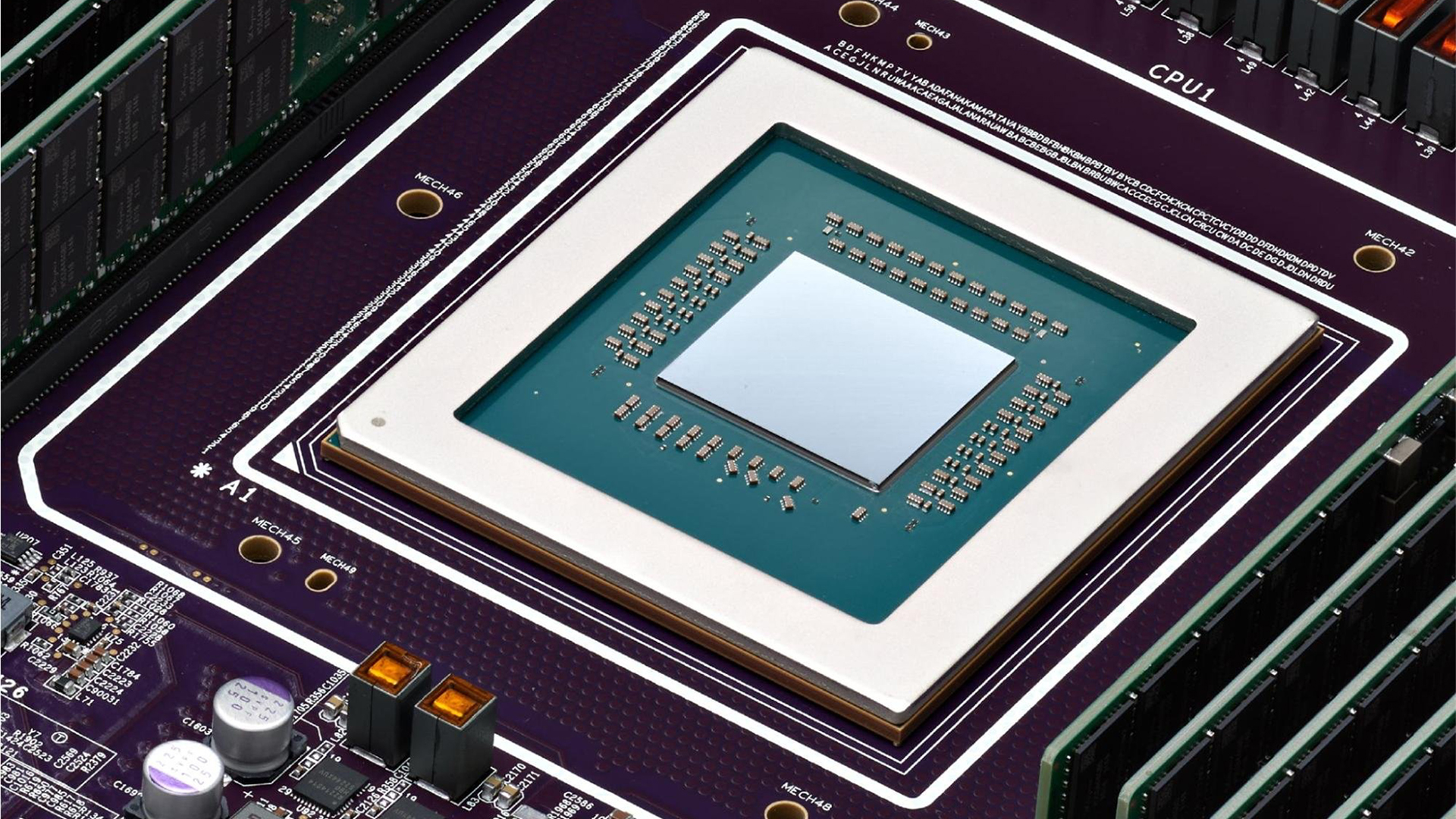

Although AI accelerators like Google's Ironwood tend to steal the thunder in the AI era of computing, CPUs remain crucial for application logic and service hosting, as well as for parts of AI workloads such as data ingestion. So, alongside its seventh-generation TPUs, Google is also deploying its first Armv9-based general-purpose processors, called Axion.

Google has not published full die specifications for its Axion CPUs: there is no confirmed core count per die (beyond the C4A Metal instance's maximum of 96 vCPUs and 768 GB of DDR5 memory), no disclosed clock speed, and no publicly detailed process node. What we do know is that Axion is built on the Arm Neoverse V2 platform and is designed to offer up to 50% higher performance and up to 60% better energy efficiency than modern x86 CPUs, as well as 30% higher performance than 'the fastest general-purpose Arm-based instances available in the cloud today.' Reports also indicate that the CPU offers 2 MB of private L2 cache per core and 80 MB of L3 cache, supports DDR5-5600 memory, and provides Uniform Memory Access (UMA) for nodes.

Servers running Google's Axion CPUs and Ironwood TPUs are equipped with the company's custom Titanium-branded controllers, which offload networking, security, and storage I/O processing from the host CPU. This simplifies management and leaves more host resources for customer workloads, improving performance.

In general, Axion CPUs can serve both AI servers and general-purpose servers for a variety of tasks. For now, Google offers three Axion configurations: C4A, N4A, and C4A Metal.

C4A is the first and primary offering in Google's family of Axion-powered instances, and the only one that is generally available today. It provides up to 72 vCPUs, 576 GB of DDR5 memory, and 100 Gbps networking, paired with up to 6 TB of local Titanium SSD storage. The instance is optimized for sustained high performance across a wide range of applications.

Next up is N4A, currently in preview, which also targets general workloads such as data processing, web services, and development environments. It scales up to 64 vCPUs, 512 GB of DDR5 memory, and 50 Gbps networking, making it the more affordable option.

The other preview model is C4A Metal, a bare-metal configuration that presumably exposes the full Axion hardware stack directly to customers, with up to 96 vCPUs, 768 GB of DDR5 memory, and 100 Gbps networking. The instance is meant for specialized or license-restricted applications, or for Arm-native development.
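Because the three Axion shapes differ mostly in size, it can help to normalize them per vCPU. The Python sketch that follows does that using only the maximum configurations listed above; the `SHAPES` table and `per_vcpu` helper are hypothetical names, and smaller shapes within each family may not follow the same ratios.

```python
# Maximum shapes per Axion instance family, as listed in the article:
# name -> (max vCPUs, max DDR5 memory in GB, max networking in Gbps)
SHAPES: dict[str, tuple[int, int, int]] = {
    "C4A": (72, 576, 100),
    "N4A": (64, 512, 50),
    "C4A Metal": (96, 768, 100),
}


def per_vcpu(shapes: dict[str, tuple[int, int, int]]) -> dict[str, tuple[float, float]]:
    """Return {name: (GB of memory per vCPU, Gbps of networking per vCPU)}."""
    return {
        name: (mem_gb / vcpus, net_gbps / vcpus)
        for name, (vcpus, mem_gb, net_gbps) in shapes.items()
    }


ratios = per_vcpu(SHAPES)
for name, (gb, gbps) in ratios.items():
    print(f"{name:<10} {gb:.0f} GB/vCPU, {gbps:.2f} Gbps/vCPU")
```

With these maximum figures, all three shapes land at 8 GB of memory per vCPU, so the main per-vCPU difference is networking: about 0.78 Gbps per vCPU on N4A versus about 1.39 on C4A and 1.04 on C4A Metal.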

A complete portfolio of custom silicon

These launches build on a decade of Google's custom silicon development, which began with the original TPU and continued with YouTube's VCUs, Tensor mobile processors, and the Titanium infrastructure. The Axion CPU, Google's first Arm-based general-purpose server processor, completes the company's portfolio of custom chips, while the Ironwood TPU sets the stage for competition with the best AI accelerators on the market.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user According to this, Google's Axion CPUs are made on TSMC N3:

https://www.trendforce.com/news/2025/10/21/news-googles-axion-cpu-reportedly-built-on-tsmcs-3nm-set-to-drive-foundrys-data-center-revenue-growth/

The article said: It provides up to 72 vCPUs, 576 GB of DDR5 memory

Probably 96 GiB/channel @ 6-channel (384-bit). It works out to 8 GiB/core, which is probably enough for most general-purpose server workloads.

jp7189 I don't see any data about power usage which is just as important as performance numbers these days.

bit_user

jp7189 said: I don't see any data about power usage which is just as important as performance numbers these days.

The only time I ever saw any power data on a cloud CPU is when Amazon claimed Graviton 3 used only 100W for 64 Neoverse V1 cores + PCIe 5 + DDR5.

https://newsletter.semianalysis.com/p/amazon-graviton-3-uses-chiplets-and

In general, I wouldn't be surprised if the cloud operators considered it sensitive information. The more a big customer knows about what their costs are, the more negotiating leverage they have over pricing.