Acer unveils Project Digits supercomputer featuring Nvidia's GB10 superchip with 128GB of LPDDR5x

Acer joins Asus, Lenovo, and Dell with its third-party Project Digits variation.

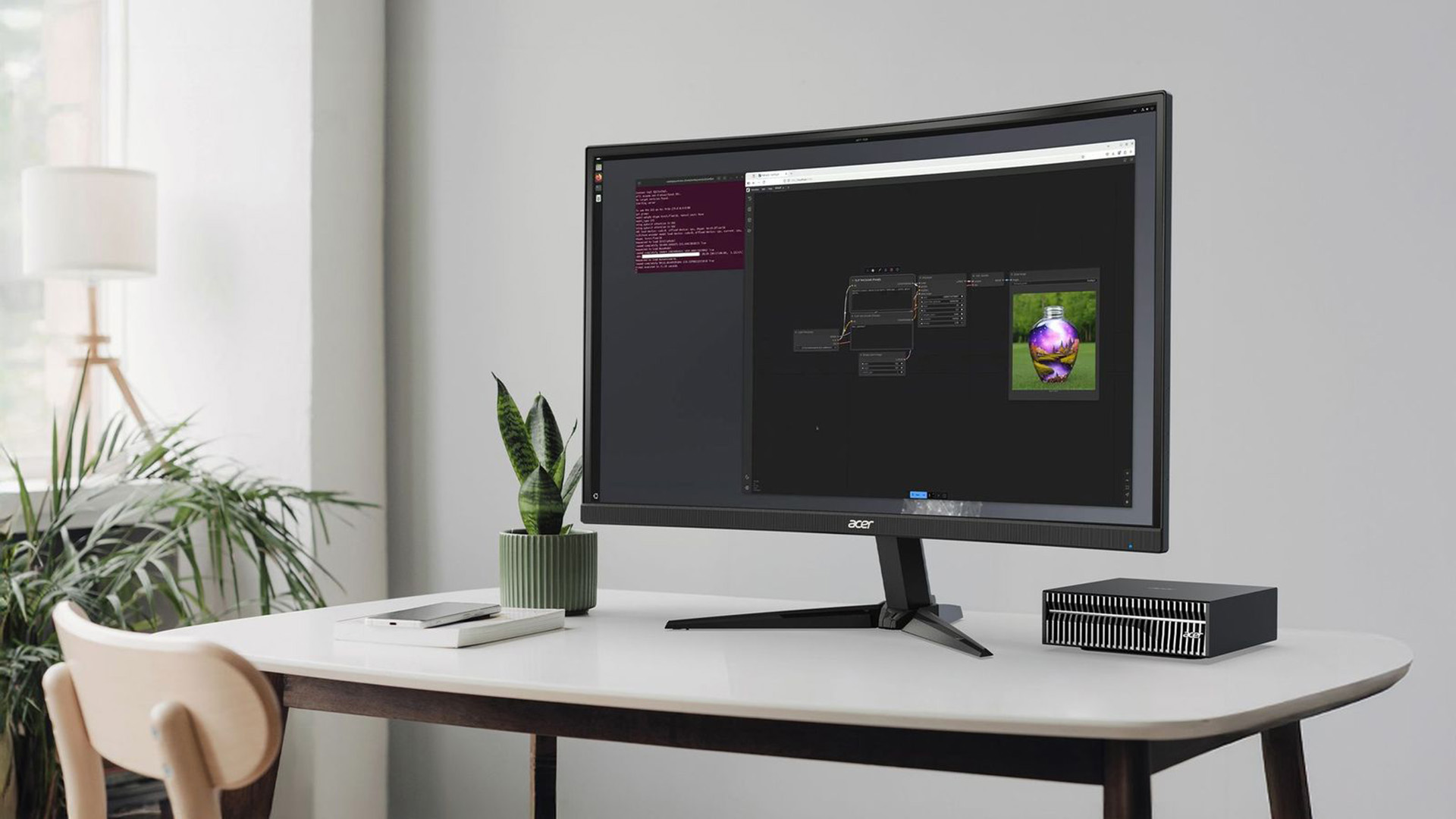

Acer has unveiled its own version of Nvidia's Project Digits mini-supercomputer, the Acer Veriton GN100 AI Mini Workstation, which is geared toward developers, universities, data scientists, and researchers who need a compact and high-speed AI system. North American pricing starts at $3,999.

The Veriton GN100 is a compact mini PC (measuring 150 x 150 x 50.5mm) housed in a black chassis with a silver grille on the front. The system features Nvidia's GB10 Grace Blackwell Superchip, which has 20 Arm CPU cores (10 Cortex-X925 and 10 Cortex-A725 cores) and a Blackwell-based GPU rated for up to one petaFLOP of FP4 performance. The GB10 is paired with 128GB of LPDDR5x memory, and the system supports up to 4TB of self-encrypting M.2 NVMe storage.

I/O includes four USB 3.2 Type-C ports, one HDMI 2.1b port, an RJ-45 Ethernet connector, and a proprietary Nvidia ConnectX-7 NIC that allows two GN100 units to work in tandem — similar to SLI on older Nvidia graphics cards. The Veriton GN100 also supports Wi-Fi 7 and Bluetooth 5.1 for wireless connectivity.

Because it is built on Nvidia's GB10, the GN100 can use Nvidia's AI software stack, which gives developers the tools to build and deploy large language models and other AI applications. The stack includes the CUDA Toolkit, cuDNN, and TensorRT, and supports popular AI frameworks such as TensorFlow, PyTorch, MXNet, and JAX.

Acer's Veriton GN100 is one of several third-party variants of Nvidia's Project Digits mini-supercomputer. Acer, Lenovo, Asus, and Dell have each built their own versions of Project Digits with different chassis designs. This mirrors the way Nvidia works with partners on its graphics cards: Nvidia's own Project Digits system is the "Founders Edition," while Acer, Lenovo, Asus, and Dell will offer variants with identical specs and performance. Third-party versions can offer benefits such as better warranties and extra software support, and they are often cheaper than Nvidia's own version.

These new Nvidia-powered mini PCs are designed to provide fast, local AI compute for users who don't want to deal with the footprint or complexity of a full-blown AI supercluster. A local AI system can be useful for keeping sensitive data offline, minimizing latency, and optimizing performance.

Some might argue that an RTX 5090-powered gaming or workstation build would be a better choice, and in raw compute, that's probably true. But the GB10 has 128GB of memory, four times the RTX 5090's 32GB of GDDR7, and capacity is a decisive factor for dedicated AI work: the extra memory allows users to run AI models that would not fit on a single RTX 5090. Both chips are Blackwell-based and natively support NVFP4, Nvidia's 4-bit floating-point format, which can significantly improve processing efficiency in AI workloads with accuracy that approaches BF16.

This makes Nvidia's Project Digits architecture much more attractive for dedicated, professional AI developers. As of this writing, Acer has yet to announce an exact release date for the Veriton GN100, though it has said that availability will vary by region.
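To put the memory-capacity argument in rough numbers: a model's weights take about (parameter count × bits per weight ÷ 8) bytes. The Python sketch below is a back-of-envelope check under stated assumptions, not a sizing tool. It counts weights only (no KV cache, activations, or runtime overhead), treats the advertised capacities as GiB, models NVFP4 as 4 bits per value plus one 8-bit scale per 16-value block (about 4.5 bits per weight), uses the RTX 5090's 32GB as the comparison point, and picks 30B, 70B, and 200B parameters as hypothetical model sizes.

```python
# Back-of-envelope check: do a model's weights alone fit in device memory?
# Assumptions (not from the article): weights only, no KV cache, activations,
# or runtime overhead; advertised capacities treated as GiB.

# Effective bits per weight for each format (NVFP4 assumed to be 4-bit values
# plus one 8-bit scale per 16-value block, i.e. 4 + 8/16 = 4.5 bits).
FORMATS = {"NVFP4": 4 + 8 / 16, "BF16": 16}

# Memory capacities in GiB.
DEVICES = {"GB10 (128GB)": 128, "RTX 5090 (32GB)": 32}


def weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits(params_billion: float, bits_per_weight: float, capacity_gib: float) -> bool:
    """True if the weights alone are smaller than the given capacity."""
    return weight_gib(params_billion, bits_per_weight) < capacity_gib


if __name__ == "__main__":
    # Hypothetical model sizes in billions of parameters.
    for params in (30, 70, 200):
        for fmt, bits in FORMATS.items():
            size = weight_gib(params, bits)
            for device, cap in DEVICES.items():
                verdict = "fits" if fits(params, bits, cap) else "does not fit"
                print(f"{params}B {fmt}: ~{size:.1f} GiB, {verdict} in {device}")
```

Under these assumptions, a 70B-parameter model at NVFP4 needs roughly 37 GiB for its weights, which fits in the GB10's 128GB but not in 32GB, while the same model in BF16 (about 130 GiB) would not fit in either. Real deployments need headroom beyond these figures.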

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

kealii123: My M4 MacBook from work, even with all the IT bloatware on it, is as fast as my 4090 laptop in tokens per second when running smaller models, but has triple the RAM. For coding, are all the Nvidia toolkits and accelerators worth anything? For $4k I can get a 128GB MacBook.

checksinthemail: Superkewl, give us Project 50900 with a couple of 5090s and 320GB of GDDR7, because the CPU memory bandwidth of DDR5 is crap in comparison.

bit_user:

checksinthemail said: "the bandwidth to cpu for ddr5 is crap in comparison"

But Apple managed to scale up LPDDR5X to comparable levels simply by adding more channels (which also leads to higher capacity). The top-end M-series processors use a 1024-bit memory interface, which can equal GDDR6 at 384-bit. It's true that GDDR7 ups the ante, but you won't get enough compute to exploit that bandwidth in a processor like the one in these Digits boxes.

In Digits, Nvidia played it fairly safe with a 256-bit data bus. The next gen will undoubtedly use higher-bandwidth memory and probably go to 384 or 512-bit.

The main problem with GDDR memory is that it's relatively low-density. It's also quite energy-intensive. That's why Nvidia didn't use it here, and I don't foresee that changing. I'm sure price is another issue.
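The bus-width comparison in this thread follows from a simple formula: peak bandwidth in GB/s equals bus width in bits ÷ 8 × data rate in MT/s ÷ 1000. The Python sketch below applies it to the bus widths discussed above plus a 512-bit GDDR7 configuration for reference. The data rates are assumptions chosen for illustration (neither the article nor the comments give them), and the results are theoretical peaks, not measured throughput.

```python
# Peak theoretical memory bandwidth from bus width and data rate.


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer x million transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mtps / 1000  # MB/s -> GB/s


# All data rates below are illustrative assumptions, not figures from the article.
CONFIGS = [
    ("256-bit LPDDR5x (Digits-class), assumed 8533 MT/s", 256, 8533),
    ("1024-bit LPDDR5/5X (Apple Ultra-class), assumed 6400 MT/s", 1024, 6400),
    ("384-bit GDDR6, assumed 16000 MT/s", 384, 16000),
    ("512-bit GDDR7 (RTX 5090-class), assumed 28000 MT/s", 512, 28000),
]

if __name__ == "__main__":
    for label, width, rate in CONFIGS:
        print(f"{label}: ~{peak_bandwidth_gbs(width, rate):.0f} GB/s")
```

With these assumed rates, the 256-bit configuration lands around 273 GB/s, while 1024-bit LPDDR and 384-bit GDDR6 both land at roughly 770 to 820 GB/s, which is the parity bit_user describes; a 512-bit GDDR7 bus at the assumed rate roughly doubles that.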

thaddeusk: An important note is that the 5090 also supports NVFP4 and has about 3.3x the raw AI performance of the DGX. You can fit a pretty large LLM into 32GB if you're using a 4-bit quant, and that extra performance is great for diffusion models.