New Deepseek model drastically reduces resource usage by converting text and documents into images — 'vision-text compression' uses up to 20 times fewer tokens

Could help cut costs and improve the efficiency of the latest AI models.

The Chinese developers behind DeepSeek have released a new model that uses its multimodal capabilities to handle complex documents and large blocks of text more efficiently by converting them into images first, according to SCMP. In testing, text that was converted into images and processed by a vision encoder required between seven and 20 times fewer tokens than the original text, while maintaining an impressive level of accuracy at the lower end of that range.

DeepSeek is the Chinese-developed AI that shocked the world in early 2025, showcasing capabilities similar to those of OpenAI's ChatGPT or Google's Gemini despite requiring far less money and data to develop. Its creators have continued working to make the AI more efficient since then, and with the latest release, known as DeepSeek-OCR (optical character recognition), the AI can deliver an impressive understanding of large quantities of textual data without the usual token overhead.

“Through DeepSeek-OCR, we demonstrated that vision-text compression can achieve significant token reduction – seven to 20 times – for different historical context stages, offering a promising direction” to handle long-context calculations, the developer said.

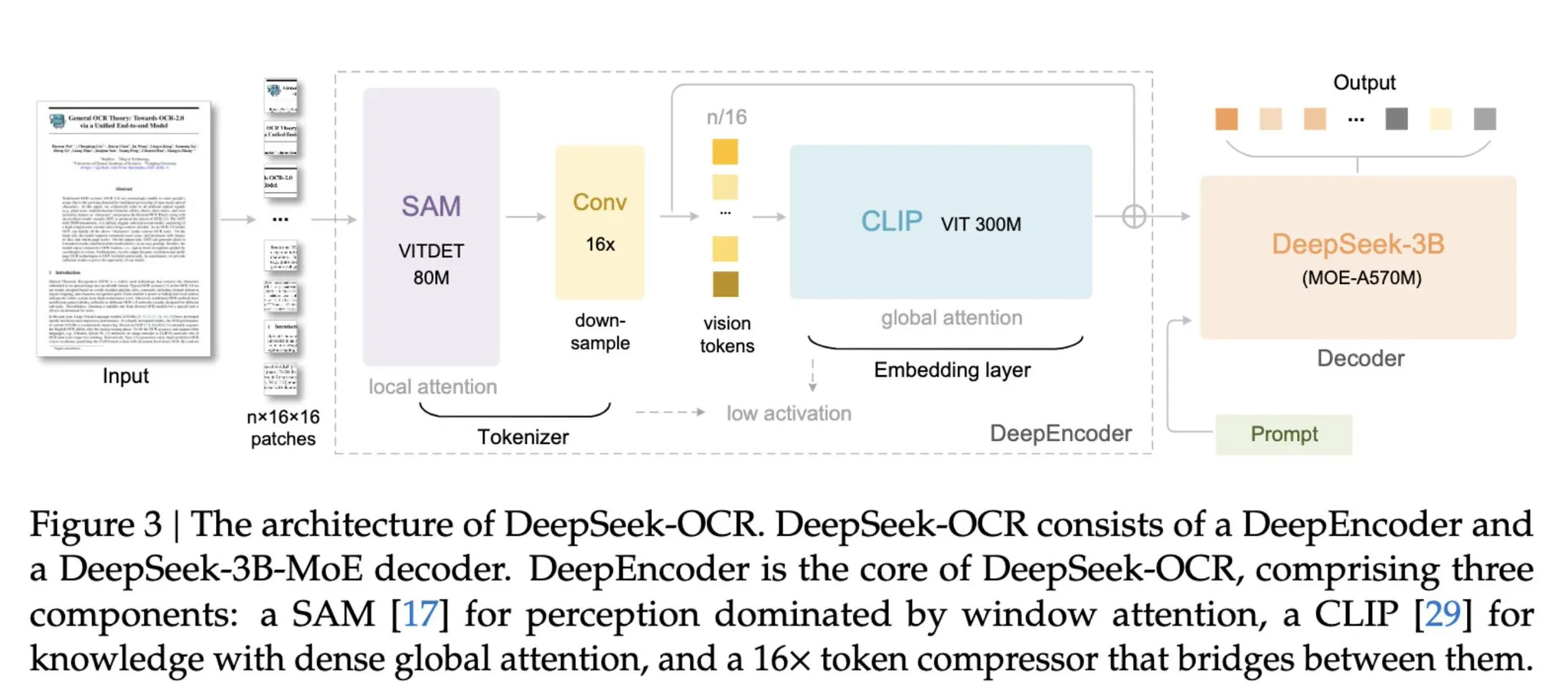

The new model is made up of two components: the DeepEncoder and DeepSeek3B-MoE-A570M, which acts as the decoder. The encoder takes large quantities of text data and converts them into high-resolution images, while the decoder is particularly adept at reading those images and understanding the text within them, using fewer tokens than if the text were fed into the AI directly. The decoder manages this with a mixture-of-experts (MoE) design, which splits the work across separate sub-networks and routes each piece of data to the specialized experts best suited to it.
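To make the expert-routing idea a little more concrete, here is a minimal sketch of generic top-k mixture-of-experts routing. It is not DeepSeek's implementation: the toy experts, the hand-picked gate scores, and the function names `route_top_k` and `moe_forward` are all hypothetical, and in a real model the gate scores would come from a learned router rather than being passed in by hand.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts standing in for sub-networks.
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x - 3,
    lambda x: x ** 2,
]

# Hypothetical gate scores: experts 1 and 3 are favored equally.
gate_scores = [0.1, 2.0, -1.0, 2.0]

y = moe_forward(3.0, experts, gate_scores, k=2)
print(y)  # experts 1 and 3 each get weight 0.5: 0.5 * 6 + 0.5 * 9 = 7.5
```

The point of the design is that only the chosen experts run for a given input, so most of the model's parameters sit idle on any single step.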

This works really well for handling tabulated data, graphs, and other visual representations of information. This could be of particular use in finance, science, or medicine, the developers suggest.

In benchmarking, the developers claim that when reducing the number of tokens by less than a factor of 10, DeepSeek-OCR can maintain a 97% accuracy rating in decoding the information. If the compression ratio is increased to 20 times, the accuracy falls to 60%. That's less desirable and shows there are diminishing returns on this technology, but if a near-100% accuracy rate could be achieved with even a 1-2x compression rate, that could still make a huge difference in the cost of running many of the latest AI models.
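For a rough sense of what those ratios mean in practice, the sketch below works through the arithmetic for a hypothetical 10,000-token document. The function name `vision_tokens_needed` and the document size are illustrative assumptions; the accuracy figures are simply the ones quoted above, with 10x treated as the upper bound of the 97% regime.

```python
import math

def vision_tokens_needed(text_tokens: int, compression_ratio: float) -> int:
    """Tokens needed after compression, rounded up to a whole token."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return math.ceil(text_tokens / compression_ratio)

# (compression ratio, decoding accuracy) as quoted in the article.
# 97% is reported for ratios below 10x, so 10 is used as the boundary here.
REPORTED = [(10, 0.97), (20, 0.60)]

def summarize(text_tokens: int):
    """Return (ratio, tokens after compression, fraction saved, accuracy)."""
    rows = []
    for ratio, accuracy in REPORTED:
        tokens = vision_tokens_needed(text_tokens, ratio)
        saved = 1 - tokens / text_tokens
        rows.append((ratio, tokens, saved, accuracy))
    return rows

# Hypothetical 10,000-token document.
for ratio, tokens, saved, accuracy in summarize(10_000):
    print(f"{ratio}x: {tokens} tokens, {saved:.0%} fewer, ~{accuracy:.0%} accuracy")

# Even a modest 2x ratio would halve the token count.
print(vision_tokens_needed(10_000, 2))
```

Going from 10x to 20x only trims the token count from 1,000 to 500 in this example, while the reported accuracy drops from 97% to 60%, which is the diminishing return described above.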

It's also being pitched as a way of generating training data for future models, although introducing errors at that stage, even at a rate of just a few percent, seems like a bad idea.


If you want to play around with the model yourself, it's available via online developer platforms Hugging Face and GitHub.


Jon Martindale is a contributing writer for Tom's Hardware. For the past 20 years, he's been writing about PC components, emerging technologies, and the latest software advances. His deep and broad journalistic experience gives him unique insights into the most exciting technology trends of today and tomorrow.


anoldnewb "This works really well for handling tabulated data"

It seems very counterintuitive that this process:

text -> image -> via AI -> organized text document (with tables and proper flow of text) -> via AI -> AI tokens

is better than

text -> via AI -> AI tokens

However, after trying and failing many times to get a table in a PDF document copied into a spreadsheet or into a table in a document editor, I understand how it could be better.

Basically, a PDF file is often an unorganized file that contains a jumble of text. Try highlighting just what you want to copy in some PDF files and you can get crazy bits of text from here and there instead of what seems obvious on the screen.

DeepSeek was forced to operate with fewer hardware resources and has developed several innovative AI advancements that have enabled them to overcome some of the hardware restrictions that the US has imposed on them. They have leveraged all the tech that they could "borrow" and have found novel paths forward. Maybe it is time to consider "borrowing" some of their developments.

jp7189 I'm having a hard time wrapping my head around the idea of converting text to an image to improve efficiency. I can understand if this were only applied to, say, a graph that is mostly an image with a little text, but the article mentions converting blocks of text. It seems like there'd be a better way to reduce token count, but they look to be having good success with the method, which is why I find this so interesting.

dynamicreflect deepfake, you are far better than yourself!

https://preview.redd.it/deepseek-says-its-a-version-of-chatgpt-v0-sqlcun1f2lfe1.jpg?width=1080&crop=smart&auto=webp&s=125f96498c25d601f7095415b38f668c9c09d5bc