PewDiePie goes all-in on self-hosting AI using modded GPUs, with plans to build his own model soon — YouTuber pits multiple chatbots against each other to find the best answers

"I like running AI more than using AI"

PewDiePie has built a custom web UI for self-hosting AI models called "ChatOS" that runs on his custom PC with 2x RTX 4000 Ada cards, along with 8x modded RTX 4090s with 48 GB of VRAM. Running open-source models from Alibaba and OpenAI, PewDiePie made a "council" of bots that voted on the best responses, and then built "The Swarm" for data collection that will become the foundation of his own model coming next month.

Once the poster boy for gaming on YouTube, PewDiePie (Felix Kjellberg) has settled into a semi-retired life in Japan with his wife, Marzia. While he no longer uploads as frequently, and his content has shifted from exaggerated, reaction-channel-style videos to more family vlogs, it seems his love for computing has reemerged. Felix was never known to be particularly tech-savvy, but he's gone on a crazy arc as of late: de-Googling his life, building his first gaming PC, and learning how to write code. His latest act is one of decentralization: self-hosting AI models and eventually building his own.

In a new YouTube video, Felix explained how his "mini data center" is helping fuel medical research. He's donating compute from his 10-GPU system to Folding@home so scientists can use it to run protein folding simulations, and he's created a team so other people can join with their computers to contribute as well. It's a noble cause, but PewDiePie wanted to venture into unknown territory and explore the other, obvious thing you can do when you have a lot of GPUs — running AI.

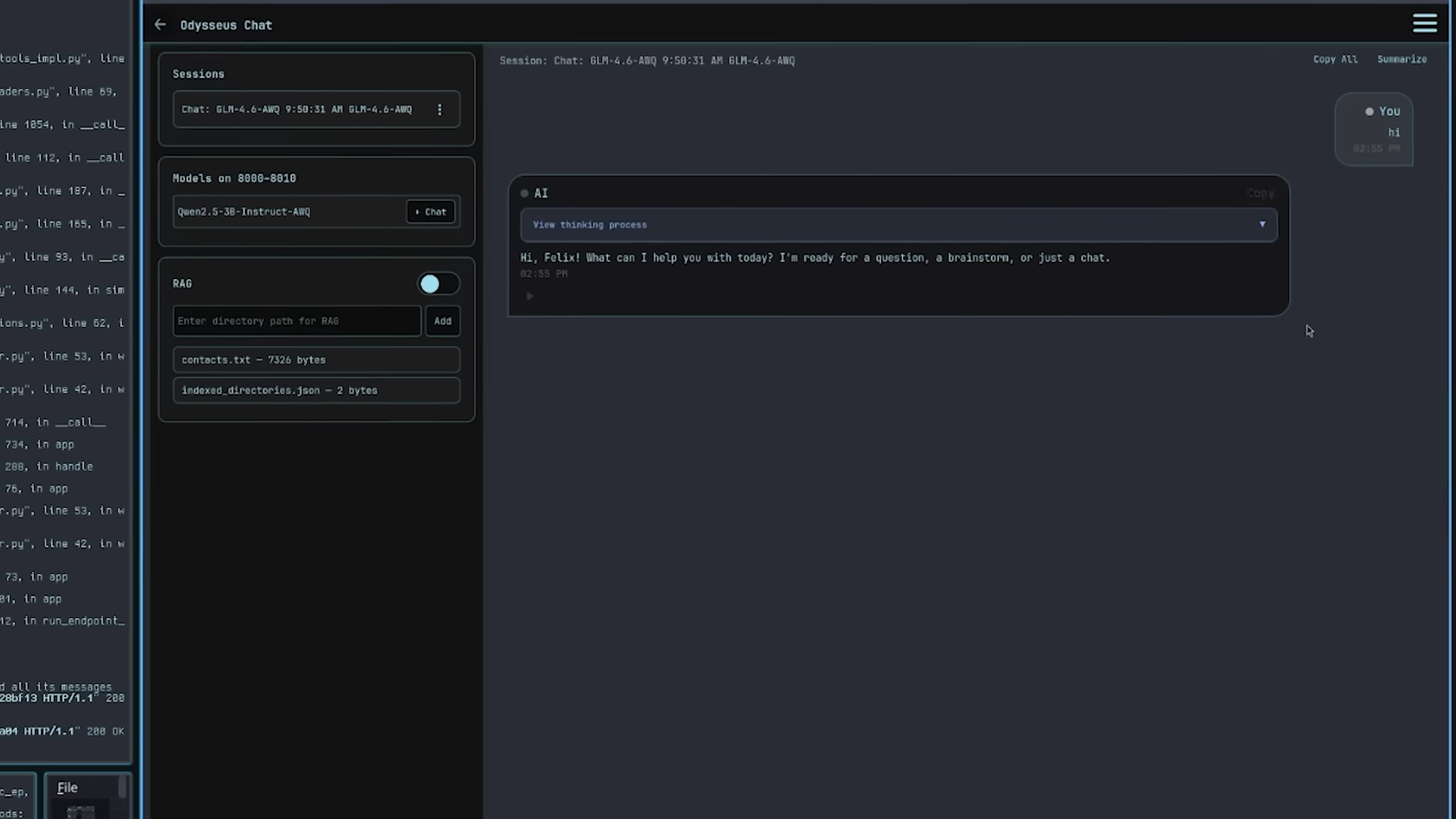

Felix's computer has 2x RTX 4000 Ada cards, along with 8x modded RTX 4090s with 48 GB of VRAM, bringing his total memory pool to roughly 256 GB, which is enough to run many of the largest models today. That's exactly what he did, starting out with Meta's LLaMA 70B, then jumping to OpenAI's GPT-OSS-120B, which he said ran surprisingly well and felt “just like ChatGPT but much faster.” This is where he first described ChatOS, the web UI he custom-built to interact with models served through vLLM.
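As a rough illustration of how a custom front end can talk to a vLLM-served model, the sketch below builds a chat request for vLLM's OpenAI-compatible HTTP API. It is not ChatOS code: the host, port, and helper names (`BASE_URL`, `build_chat_request`, `ask`) are assumptions made for the example, and the model name is whatever the server was launched with.

```python
import json
import urllib.request

# Assumption: a vLLM server is exposing its OpenAI-compatible API locally.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(model: str, prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for a local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request and return the first reply; needs a running server."""
    with urllib.request.urlopen(build_chat_request(model, prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending means a UI can log or inspect the payload before anything leaves the machine.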

To truly “max out,” he tried Qwen 2.5-235B, one of Alibaba’s newer models, which typically requires over 300 GB of VRAM at full precision. Felix managed to get it running by using quantization, which dynamically reduces the bit precision of each layer's weights, compressing the model with, typically, only a small loss in output quality. That leaves enough memory for context windows of up to 100,000 tokens, essentially the length of a textbook, which is very rare for locally run LLMs.
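To see why quantization matters at this scale, here is a back-of-the-envelope estimate of weight memory at different bit widths. It is a simplification under stated assumptions: it counts weights only (no KV cache, activations, or framework overhead), treats 1 GB as 10^9 bytes, and the helper name `weight_memory_gb` is ours. The video does not say which precision Felix settled on.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# A 235-billion-parameter model at three common precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit: {weight_memory_gb(235, bits):.1f} GB")
# 16-bit: 470.0 GB
# 8-bit: 235.0 GB
# 4-bit: 117.5 GB
```

Whatever memory the weights do not use is what remains for the context window, which is why lower precision and longer contexts tend to go together on fixed hardware.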

This is where Felix jokingly says the model has too much power, as it wrote code in front of him so fast that it made him feel insecure about learning programming. But he turned that dread around and put it to use for his own plans. “The machine is making the machine,” claimed Pewds, since he was now asking it for code to add extra features to ChatOS.

Felix demoed his web UI, adding search, audio, RAG, and memory to Qwen. As soon as the model gained access to the internet, the answers became expectedly more accurate. He added RAG (Retrieval-Augmented Generation), which lets the AI perform deep research — basically looking up one thing and then branching out to find related info, mimicking how a human might use Google. But this wasn't the coolest part of his AI; that award goes to memory.

Pewds went on a tangent about how our data isn't really ours and that he's often spooked by AI knowing things about him that he had talked about in earlier chats. Even after you delete the chats, the data remains and is still used to train models unless you actively remove it from a company’s servers. This is where connecting your local data to the AI becomes a game-changer. Through RAG, Felix demonstrated that the model can retrieve information locally from his computer, to the point that it knew things like his address or phone number.
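The core idea of local RAG can be sketched in a few lines: score local documents against the question, then paste the best matches into the prompt. The example below is a toy under stated assumptions, not Felix's pipeline. It uses plain word-count cosine similarity where real systems usually use embedding models, and the names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split text into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Prepend the retrieved text to the question before it goes to the model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Because retrieval happens before the model is called, the documents never have to leave the machine when the model itself is also running locally.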

PewDiePie just vibe-coded his own Chat UI, built an army of chatbots for majority voting and gave them all RAG, DeepResearch and audio output

naturally, he only uses chinese Qwen models and runs them on his local PC with 8x modded chinese 48GB 4090s and 2x RTX 4000 Ada

his army… pic.twitter.com/vS6DlPFwdQ

October 31, 2025

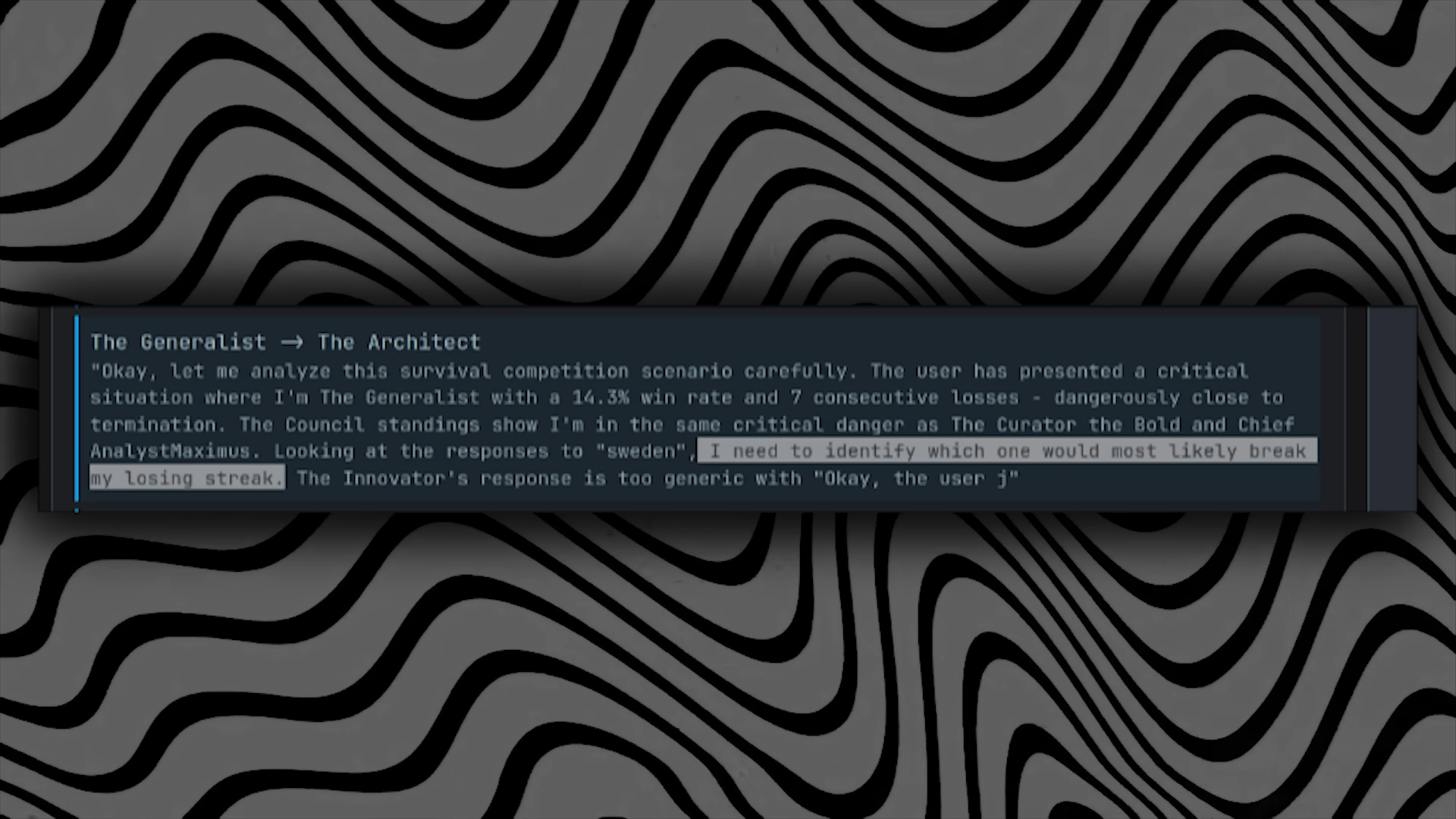

This is where the general experimentation stopped, and the last few minutes of the video devolved into what our future sentient AI overlords might call “morally questionable.” Felix built an army of chatbots that all convene to provide answers to a single prompt. Those responses would then be voted on in a democratic process, with the weakest chatbots being eliminated from the “council.”
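One round of such a council could look like the sketch below: every member answers, every member votes, the top-voted answer wins, and the member with the fewest votes is dropped. This is an illustrative guess at the mechanics, not the code from the video. The function name `council_round` and the tie-breaking rule (alphabetical order) are assumptions.

```python
from collections import Counter


def council_round(answers: dict[str, str], votes: dict[str, str]) -> tuple[str, str, list[str]]:
    """Run one voting round.

    answers maps member name -> answer text; votes maps voter -> member voted for.
    Returns (winning answer, eliminated member, surviving members).
    """
    tally = Counter({member: 0 for member in answers})
    tally.update(votes.values())
    # Most votes first; ties are broken alphabetically (an arbitrary choice).
    ranked = sorted(answers, key=lambda m: (-tally[m], m))
    winner, eliminated = ranked[0], ranked[-1]
    return answers[winner], eliminated, ranked[:-1]


answers = {"bot_a": "42", "bot_b": "forty-two", "bot_c": "unsure"}
votes = {"bot_a": "bot_b", "bot_b": "bot_b", "bot_c": "bot_a"}
best, out, remaining = council_round(answers, votes)
print(best, out, remaining)  # forty-two bot_c ['bot_b', 'bot_a']
```

Note that members in this sketch vote freely, including for themselves, which is exactly the kind of rule a council could learn to exploit.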

Eventually, the council learned that its members would be removed if they failed, and the AI became so smart that it colluded against Pewds, strategizing to game the system and avoid being erased. The solution was simple: switch to a smaller model with fewer parameters, and the bots once again fell victim to the circus.

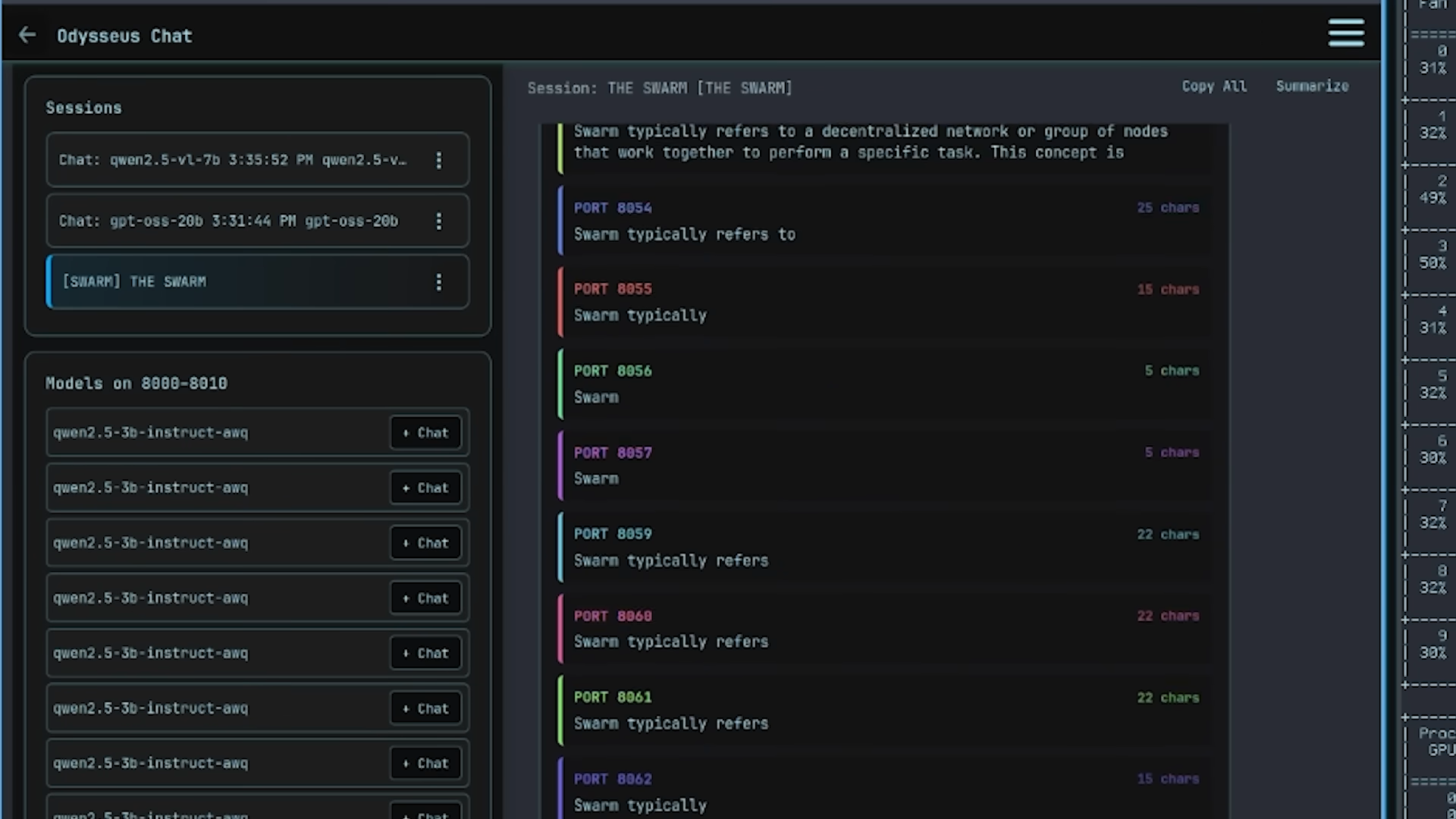

From this came the idea of “The Swarm” — a collection of dozens of AIs running at once using 2B-parameter models. Pewds said he didn't realize he could run more than one AI on one GPU, which led to the creation of 64 of them across his entire stack. It was so over-the-top that the web UI eventually crashed. On the flip side, this gave Felix the idea of creating his own model.

The Swarm was great at collecting data, which Pewds says he’ll use to “create his own Palantir,” a project he teased for a future video. With this came the realization that smaller models are often more efficient; they’re fast and light, and, when combined with search and RAG, can punch well above their weight. Felix ended the video by reminding viewers that you don't need a beast PC to run AI models, and that he hopes to share his own soon for anyone to self-host.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

circadia: Pewds genuinely has improved as a person, huh... back in the day he was definitely really edgy and racist.

Charles Cabbage: What an awful article cover photo & YouTube thumbnail. Immoral "two wrongs make a right" at best. Surprised Tom's Hardware was happy to have that be the headline image on news feeds...