Two GTX 580s in SLI are responsible for the AI we have today: Nvidia's Huang revealed that the modern deep learning boom began with two flagship Fermi GPUs in 2012

Back then, Nvidia had no idea GPUs could be used to accelerate deep learning at scale.

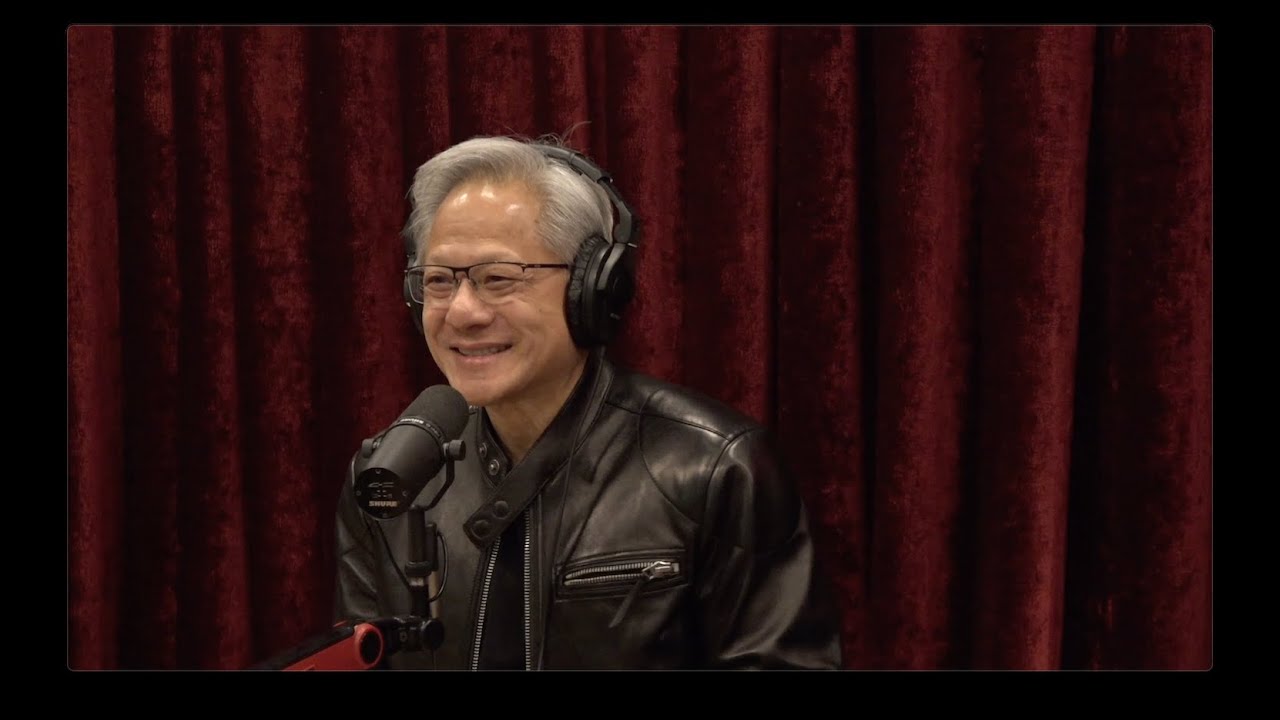

Deep learning is the powerhouse behind today's AI industry. It lets neural networks with many layers learn patterns directly from data, and it is powered by GPUs that can run machine learning workloads at scale. However, the breakthrough that kicked off the modern deep learning era ran on hardware that was never intended for this type of computing. Nvidia CEO Jensen Huang revealed on the Joe Rogan podcast that the researchers behind that breakthrough did it all on a pair of 3GB GTX 580s in SLI back in 2012.

The breakthrough came from researchers at the University of Toronto who were trying to improve image recognition in computer vision. In 2011, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton were researching better ways to build image recognition tools. At the time, neural networks were far from the mainstream approach; most developers instead relied on manually designed algorithms that detected edges, corners, and textures.

The three researchers built AlexNet, a convolutional neural network with eight learned layers (five convolutional and three fully connected) totaling around 60 million parameters. What made this architecture so special was its ability to learn useful image features on its own, directly from raw pixels, instead of relying on hand-designed ones. It was so effective that it won the 2012 ImageNet image recognition challenge with a top-5 error rate of 15.3%, versus 26.2% for the runner-up (meaning the runner-up made about 71% more errors), immediately capturing the industry's attention.
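To see where AlexNet's "around 60 million parameters" comes from, the Python sketch below tallies the weights and biases of its eight layers. The kernel shapes follow the two-GPU layout described in the original AlexNet paper, in which some convolutional layers only see the half of the feature maps stored on their own GPU. The dictionary names and the `layer_params` helper are hypothetical, written purely for illustration.

```python
# Hypothetical helper: tallies AlexNet's parameters from the layer shapes
# in the original two-GPU AlexNet paper (weights plus one bias per unit).

# Convolutional layers: (kernels, kernel height, kernel width, input maps each kernel sees)
CONV_LAYERS = {
    "conv1": (96, 11, 11, 3),
    "conv2": (256, 5, 5, 48),   # each kernel sees only the 48 maps on its own GPU
    "conv3": (384, 3, 3, 256),  # each kernel sees all 256 maps from both GPUs
    "conv4": (384, 3, 3, 192),  # same-GPU connections only
    "conv5": (256, 3, 3, 192),  # same-GPU connections only
}

# Fully connected layers: (inputs, outputs); fc6 reads the 6x6x256 pooled conv5 output
FC_LAYERS = {
    "fc6": (6 * 6 * 256, 4096),
    "fc7": (4096, 4096),
    "fc8": (4096, 1000),  # one output per ImageNet class
}


def layer_params() -> dict:
    """Return a {layer name: parameter count} mapping in network order."""
    counts = {}
    for name, (kernels, h, w, in_maps) in CONV_LAYERS.items():
        counts[name] = kernels * h * w * in_maps + kernels  # weights + biases
    for name, (n_in, n_out) in FC_LAYERS.items():
        counts[name] = n_in * n_out + n_out  # weights + biases
    return counts


if __name__ == "__main__":
    counts = layer_params()
    for name, n in counts.items():
        print(f"{name}: {n:,}")
    print(f"total: {sum(counts.values()):,}")
```

Run as a script, it reports a total of 60,965,224 parameters. More than 58 million of them sit in the three fully connected layers, while the five convolutional layers need only about 2.3 million.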

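Jensen Huang revealed that the AlexNet developers built and trained their network on a pair of GTX 580s in SLI. Because a single GTX 580 had only 3GB of memory, too little for the network the researchers wanted to train, they split it across both GPUs, with half of each layer's kernels on each card. The two GPUs exchanged data only in certain layers, which kept communication between them to a minimum. GPUs had already been used to train neural networks a few years earlier, but AlexNet is what turned the GTX 580 into the graphics card that launched the modern deep learning era.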
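The idea of two GPUs exchanging data only when needed can be illustrated with a toy model. In the AlexNet paper, the second, fourth, and fifth convolutional layers read only the feature maps stored on their own GPU, while the third convolutional layer and the fully connected layers read activations from both GPUs. The pure-Python sketch below is a simplified, hypothetical illustration, not the researchers' actual CUDA code: each "GPU" holds half of a layer's activations as a plain list, a layer is reduced to a matrix-vector product, and the made-up functions `same_gpu_layer` and `cross_gpu_layer` report how many values would have to be copied between the two cards.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output unit


def matvec(w: Matrix, x: Vector) -> Vector:
    """Each output unit is a weighted sum of the inputs it can see."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def same_gpu_layer(x0: Vector, x1: Vector, w0: Matrix, w1: Matrix) -> Tuple[Vector, Vector, int]:
    """Layer whose units only read activations on their own GPU (like conv2, conv4, conv5).

    Returns GPU 0's outputs, GPU 1's outputs, and the number of values copied
    between GPUs, which is always zero here.
    """
    return matvec(w0, x0), matvec(w1, x1), 0


def cross_gpu_layer(x0: Vector, x1: Vector, w0: Matrix, w1: Matrix) -> Tuple[Vector, Vector, int]:
    """Layer whose units read every activation (like conv3 and the fully connected layers).

    Each GPU must first receive the other GPU's half of the activations.
    """
    full = x0 + x1  # list concatenation: both halves gathered on each GPU
    copied = len(x0) + len(x1)  # x1 copied to GPU 0, x0 copied to GPU 1
    return matvec(w0, full), matvec(w1, full), copied


if __name__ == "__main__":
    x0, x1 = [1.0, 2.0], [3.0, 4.0]  # toy activations, two values per GPU
    # Same-GPU layer: each GPU applies its own 2x2 weights, nothing is transferred.
    a0, a1, t1 = same_gpu_layer(x0, x1, [[1, 0], [0, 1]], [[1, 1], [1, -1]])
    # Cross-GPU layer: each GPU's single unit sees all four activations.
    b0, b1, t2 = cross_gpu_layer(a0, a1, [[1, 1, 1, 1]], [[1, -1, 1, -1]])
    print(a0, a1, t1)
    print(b0, b1, t2)
```

In the toy run, the same-GPU layer copies nothing, while the cross-GPU layer must move all four activations before either card can compute its outputs. A same-GPU layer gives the same result as a full layer whose weight matrix is block-diagonal, so restricting connections to the local GPU saves transfers at the cost of fewer cross-connections.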

Ironically, this milestone came at a time when Nvidia had very little invested in AI. Most of its research and development went into 3D graphics and gaming, along with CUDA, its platform for general-purpose GPU computing. The GTX 580 was designed for gaming and had no dedicated hardware for accelerating deep learning. It turned out, however, that training a neural network boils down to enormous numbers of independent multiply-add operations, so the inherent parallelism of a GPU like the GTX 580, with its 512 CUDA cores, was exactly what neural networks needed to run fast.

Jensen Huang further revealed that AlexNet, and the fact that it ran on GTX 580s, is how Nvidia got into developing AI hardware. Huang stated that once the company realized deep learning could be used to solve the world's problems, it invested all its money, development, and research in deep learning technology in 2012. That bet gave birth to the original DGX-1 in 2016, the first of which Huang delivered to Elon Musk at OpenAI; the Volta architecture with first-generation Tensor cores; and DLSS. If it were not for a pair of GTX 580s running AlexNet, Nvidia might not be the AI giant it is today.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.