Nvidia CEO Jensen Huang says "the future is neural rendering" at CES 2026, teasing DLSS advancements — RTX 5090 could represent the pinnacle of traditional raster

"The way graphics ought to be"

For the first time in five years, Nvidia, the largest GPU manufacturer in the world, didn't announce any new GPUs at CES. The company instead brought the next-gen Vera Rubin AI supercomputer to the party. Gaming wasn't entirely sidelined, though: DLSS 4.5 and MFG 6X both made their debut, major upgrades to AI-powered rendering that seem even more crucial given the comments that followed their announcement.

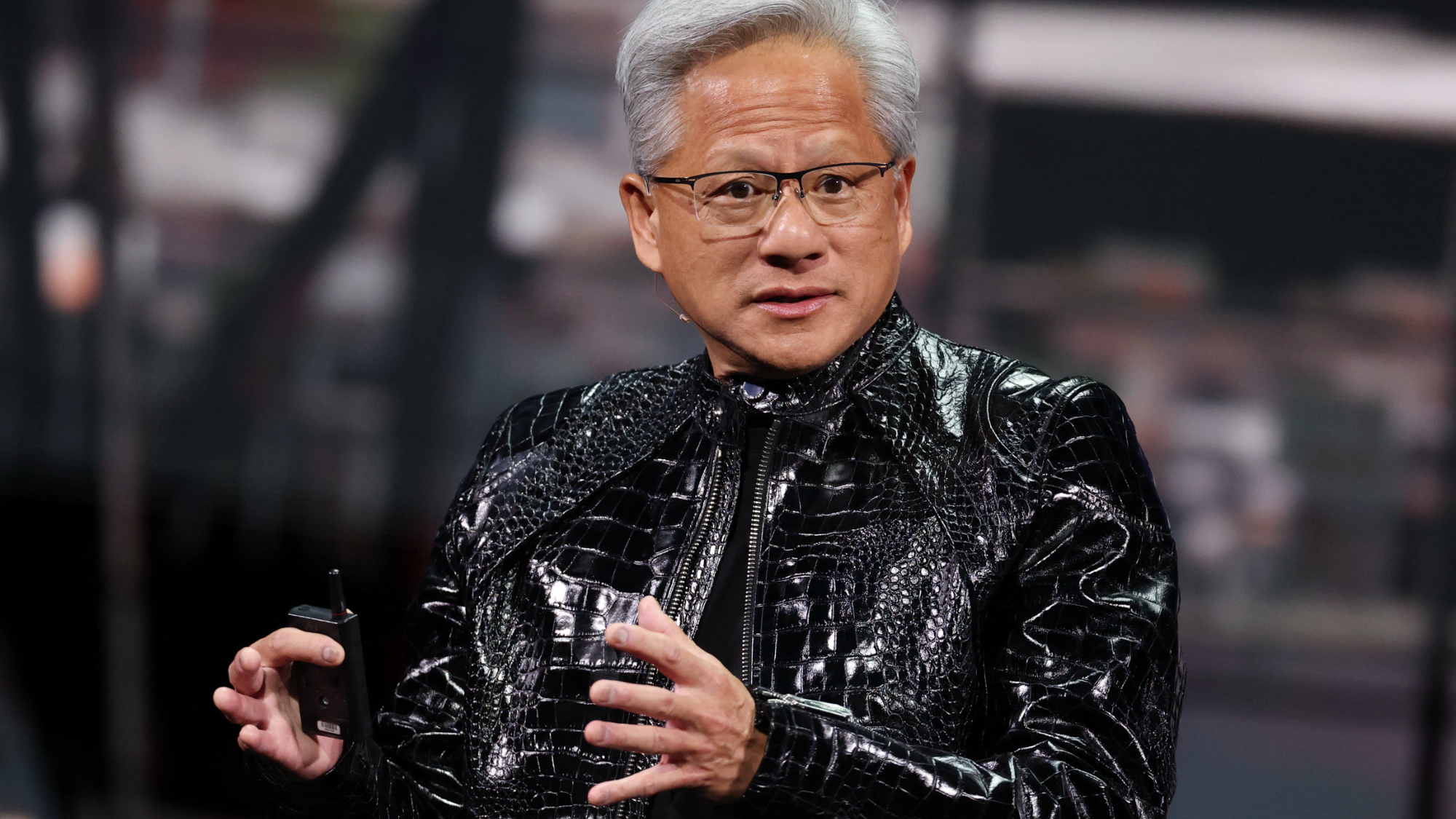

At a Q&A session with Nvidia CEO Jensen Huang, attended by Tom's Hardware in Las Vegas, the executive offered his thoughts on the future of AI in gaming in response to PC World's Adam Patrick Murray, who asked: "Is the RTX 5090 the fastest GPU that gamers will ever see in traditional rasterization, and what does AI gaming look like in the future?" Jensen responded by saying:

"I think that the answer is hard to predict. Maybe another way of saying it is that the future is neural rendering. It is basically DLSS. That's the way graphics ought to be. I think you're going to see more and more advances of DLSS... I would expect that the ability for us to generate imagery of almost any style (from photo realism, extreme photo realism, basically a photograph interacting with you at 500 frames a second, all the way to cartoon shading if you want), that entire range is going to be quite sensible to expect."

Huang further speculated that the future of rendering likely involves more AI operations on fewer, extremely high quality pixels, and shared that "we're working on things in the lab that are just utterly shocking and incredible."

With the way games are optimized (or not) these days, upscaling and even frame-gen are expected parts of the performance equation at this point. Developers often count DLSS as part of the default system requirements now, so Jensen's enthusiasm for the tech is timely and, of course, characteristic.

Going as far as to say that the "future is neural rendering" is a strong indication that the raster race might be over, and that it's "basically DLSS" that will push us past the finishing line now. As companies experiment with more and more neural techniques for operations like texture compression and decompression, neural radiance fields, frame generation, and even an entire neural rendering replacement for the traditional graphics pipeline, it's clear that matrix math acceleration and purpose-built AI models will play ever larger roles in real-time rendering going forward.

The CEO extended his enthusiasm for AI to in-game characters, which he said will also be taken over by AI: built from scratch with neural networks at their center, making NPCs lifelike. It's not just photorealism, but also emotional realism, perhaps taking a load off the CPU that would otherwise compute logic for random characters. Nvidia's ACE platform has been working toward this for a while now and is already present across the landscape.


"You should also expect that future video games are essentially AI characters within them. Every character will have their own AI, and every character will be animated robotically using AI. The realism of these games is going to really, really climb in the next several years, and it’s going to be quite extraordinary."

Beyond the photorealism, this aspect of AI can actually help cut development times since no studio will tirelessly animate and breathe life into every single NPC. We'd walk away with a more polished outcome, but it will still lack that human touch, the sheer creativity that many put up as the argument against generative AI today.

It's important to note that Jensen himself never said that the RTX 5090 represents the peak of traditional raster, but he didn't push back on that comment. The 5090 is a ludicrously powerful GPU, and it will still be a while before that kind of performance trickles down to the masses.

But it seems like the traditional shader compute ceiling may no longer grow as much or as fast, and AI-reliant features like DLSS are likely to become the new frontier of innovation. We're already seeing this happen, and it might become our permanent reality if the AI boom doesn't cool off soon.

For now, though, there's no escaping AI when it comes to computer hardware, both in a literal and metaphysical sense. The very thing that caused the current component crisis is being touted as its antidote. Jensen ended his answer to this question by saying, "I think this is a great time to be in video games."


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

- Jeffrey Kampman, Senior Analyst, Graphics

- Paul Alcorn, Editor-in-Chief

hotaru251

Yes, the future Nvidia wants, just like they wanted RTX to kick off, and how long did that take to actually have a handful of titles?

Or DLSS 1.0, which was absolute trash?

I live in the present and the future has no impact on the now we live in.

thestryker

This huge push for shifting the rendering pipeline has everything to do with margins and that's about it. Potentially being able to use less silicon and lower memory capacities on client video cards not only increases margins, but lowers the chance of them being used in place of enterprise parts.

It seems to me that if they'd used a fixed size tensor core setup for client parts they'd have more room for raster/RT. Of course this would increase costs most likely, and as we've seen the last couple of generations, Nvidia is hyper focused on margins.

mynamehear

The future is going to be wearing a barrel as your 401k and retirement fund is tapped out to the biggest fraud the world has ever seen: Huang and the AI grift.

ekio

This guy will say anything that raises the stock price and cash of his company.

"Stop learning how to code, AI will do it for you"

"Stop rendering with cgi, AI will do it better"

"Stop buying decently priced gaming gpus, it is only good margin, I love extremely huge margins only, so I prefer to sell them to Microsoft who doesn't have enough electricity to even turn them on"

Ok, the last one he didn't say it, he just thought it.

bit_user

hotaru251 said: "I live in the present and the future has no impact on the now we live in."

People buy GPUs with the expectation of keeping them for several years. So, one way to read his comments is that he's trying to sell people on buying Nvidia GPUs, since they have such a dominance over AI. If you believe that AI will become so fundamental to games & game performance, then buying an Nvidia card would be the logical outcome.

bit_user

thestryker said: "This huge push for shifting the rendering pipeline has everything to do with margins and that's about it."

Eh, but the DPI on monitors is higher than it used to be. Even going from a 27" 1440p monitor to a 32" 4k monitor left me with much smaller pixels. I absolutely don't need them rendered with the same degree of fidelity, especially at high framerates that are way beyond what monitors were capable of, back when every pixel was lovingly rendered as a special and unique snowflake!

The way I look at DLSS is basically just better scaling. I don't need PS5 games rendered at 4k, for my TV, but I sure don't want to see conventional scaling artifacts, either.

thestryker said: "It seems to me that if they'd used a fixed size tensor core setup for client parts they'd have more room for raster/RT. Of course this would increase costs most likely and as we've seen the last couple of generations nvidia is hyper focused on margins."

I don't understand this point.

thestryker

bit_user said: "I don't understand this point."

Tensor cores take up die space and, unlike RT cores, don't scale on client workloads. A 5090 has over 5x the Tensor cores of a 5060, which doesn't really do anything for it when it comes to gaming. I just assume a design with a fixed Tensor core count would end up being more expensive, at the very least design wise, than just scaling up the same SM across the board.

bit_user

thestryker said: "Tensor cores take up die space and unlike RT cores don't scale on client workloads. A 5090 has over 5x the Tensor cores of a 5060 which doesn't really do anything for it when it comes to gaming."

But more tensor cores should mean you can run DLSS at higher resolutions & frame rates, in less time?

Also, the more they lean on neural rendering (e.g. neural texture compression), the more fundamental Tensor cores will become for gaming performance.

Finally, I believe Tensor cores are now used in their raytracing pipeline. So, you also want their number to scale with RT cores.

Hence, their current architecture pretty much makes sense to me. Sure, the ratio of CUDA to Tensor cores might not be optimal, but I think it's not off by a factor that would massively impact other GPU functions.

thestryker

bit_user said: "But more tensor cores should mean you can run DLSS at higher resolutions & frame rates, in less time?"

Maybe? There's no uplift difference between any card so it would be hard to say unless one could read the specific load.

bit_user said: "Finally I believe Tensor cores are now used in their raytracing pipeline. So, you also want their number to scale with RT cores."

It's not part of the RT pipeline itself, but it's used for Ray reconstruction if that's turned on. This isn't a particularly heavy workload and has only been around for a couple of years or so.

bit_user said: "Also, the more they lean on neural rendering (e.g. neural texture compression), the more fundamental Tensor cores will become for gaming performance."

Which is of questionable benefit to people playing games. The only reason they'd keep putting more and more on Tensor cores is if they're not being fully utilized. The Tensor to shader ratio has been the same since the 30 series.

bit_user

thestryker said: "Maybe? There's no uplift difference between any card so it would be hard to say unless one could read the specific load."

You might consider this interesting. It shows the difference in DLSS execution time for specific resolutions, on different GPUs of the same and different generations.

https://forums.developer.nvidia.com/t/frame-generation-performance-execution-time/263821

There are marked improvements between tiers, within a given generation (as well as between the same tier of different generations). I think that cements the idea that more/better Tensor cores reduce DLSS execution time, thereby enabling higher resolution/framerate combinations.

thestryker said: "It's not part of the RT pipeline itself, but it's used for Ray reconstruction if that's turned on. This isn't a particularly heavy workload and has only been around for a couple of years or so."

How do you know how heavy it is?

FWIW, AMD made it clear how much AI can aid in not only ray sampling but also denoising.

Source: https://www.techpowerup.com/review/amd-radeon-rx-9070-series-technical-deep-dive/3.html

thestryker said: "Which is of questionable benefit to people playing games. The only reason they'd keep putting more and more on Tensor cores is if they're not being fully utilized. The Tensor to shader ratio has been the same since the 30 series."

I'll admit that I was skeptical of neural texture compression, when Nvidia first started talking about it. However, upon digging into the research, I was quickly convinced of the benefits in reducing the memory footprint & bandwidth requirements of textures. You might be right that Nvidia is only doing it because they have these otherwise idle resources at hand, but that doesn't mean there's not value in harnessing them.

While you can argue over Nvidia's die allocation between CUDA cores and Tensor cores, what's a lot harder to argue is the economics of increasing memory capacity and bandwidth. If neural texture compression helps there (and I think it's clear that it does), then it's another way the tensor cores are adding real value in gaming.

I actually liked AMD's approach of trying to harness as much of the shading pipeline as possible, in their WMMA implementation. However, we're at a crossroads where even AMD can't deny how critical AI will be to the future of gaming. Hence, its collaboration with Sony, which we know has already resulted in the creation of a new class of compute unit for both PS6 and next-gen AMD GPUs: Neural Arrays.

https://www.tomshardware.com/video-games/console-gaming/sony-and-amd-tease-likely-playstation-6-gpu-upgrades-radiance-cores-and-a-new-interconnect-for-boosting-ai-rendering-performance