CPU scaling with DLSS — investigating CPU performance in the age of upscaling

In a time of ubiquitous upscaling, your CPU can quickly become a performance bottleneck.

Like it or not, upscaling is a cornerstone of a modern gaming PC. Much to the chagrin of vocal commenters, tools like DLSS and FSR are the backbone of PC gaming today. Upscaling is ubiquitous among AAA (and many AA and indie) releases, and it's essential for feeding cutting-edge ultra-high-refresh-rate monitors in the most demanding games. Nvidia claims that over 80% of RTX users turn on DLSS, and even if you believe that metric is hogwash, there's clearly demand.

Over the past two generations, Nvidia has been defined more by what DLSS can do than by what its silicon can do, and AMD has cut down its lineup of graphics hardware while continuing to double down on FSR. Buy a new AAA game, and it's almost guaranteed to support at least one upscaling feature, and usually several.

Because upscalers are GPU features, their performance is (rightfully) framed around graphics cards, but upscaling has a lot of implications for your CPU as well. With the upscaler turned on, your GPU spends much less time rendering each frame, which means it needs the CPU to prepare frames faster. As the render resolution drops and your GPU churns through frames more quickly, your CPU has to keep it fed. Push your upscaler to its most aggressive settings, and you can easily run into a complete CPU bottleneck, because the CPU simply can't keep pace with the number of frames your GPU is able to render.
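A rough way to picture this is a pipelined model in which each frame's cost is set by whichever processor is slower. The sketch below assumes, purely for illustration, that GPU frame time scales with the number of rendered pixels (the square of the render scale) and that CPU frame time stays fixed regardless of resolution. Real games deviate from both assumptions, and the millisecond figures are hypothetical, not measurements from our testing.

```python
def effective_fps(cpu_ms: float, gpu_ms_native: float, render_scale: float) -> float:
    """Estimate frame rate when CPU and GPU work overlap (pipelined).

    Simplifying assumptions: GPU time scales with pixel count
    (render_scale squared), and CPU time does not change with resolution.
    """
    gpu_ms = gpu_ms_native * render_scale ** 2  # fewer pixels -> less GPU time
    frame_ms = max(cpu_ms, gpu_ms)  # the slower side sets the pace
    return 1000.0 / frame_ms


# Hypothetical costs: 8 ms of CPU work per frame, 20 ms of GPU work at native resolution.
modes = [("Native", 1.0), ("Quality", 2 / 3), ("Performance", 0.5), ("Ultra Performance", 1 / 3)]
for label, scale in modes:
    print(f"{label:>17}: {effective_fps(8.0, 20.0, scale):.1f} fps")
```

With these made-up numbers, Performance and Ultra Performance land at the same frame rate: once the GPU finishes its work faster than the CPU can prepare frames, further drops in render resolution buy nothing.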

That's the floor, but I wanted to investigate the ceiling. As you climb in resolution with DLSS enabled, at what point does CPU scaling disappear? All but the most CPU-bound games show almost no CPU scaling at native 4K due to the massive workload placed on the GPU. But in the age of upscalers, native 4K isn't how everyone plays games. In fact, according to Nvidia at least, the vast majority of players don't play that way.

That’s what we’re going to look at here, using five recent games from our test suite to see how CPU scaling works with DLSS in the equation. In addition to providing insight into one of the most important tools for modern PC gaming, we’ll also answer the question of how much CPU performance really matters for gaming above 1080p. If this is the way most PC gamers are playing their games — and that seems to be the case — it’s worth looking into. After all, if you assume CPU performance doesn’t matter at 1440p or 4K, you can easily create a bottleneck with DLSS enabled.

We're mainly concerned with resolution here, and with how CPU performance comes into play as render resolution drops. As our testing reveals, however, resolution isn't the only factor: upscaling models carry some overhead of their own, which seems particularly pronounced with DLSS. We didn't test FSR, though there's a good chance we'd see less overhead with older, algorithmic versions of FSR than with DLSS.
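One simple way to account for that overhead is to add an upscaling pass to the GPU's frame time. In the sketch below, the 6 ms render cost and the 1.5 ms upscaling cost are hypothetical placeholders, not measured DLSS figures. The point is only that 4K output with DLSS Performance, which renders internally at 1920 x 1080, should come in below native 1080p even though both render the same number of pixels. In practice the upscaling cost generally grows with output resolution as well.

```python
def gpu_fps(render_ms: float, upscale_ms: float = 0.0) -> float:
    """Frame rate from GPU time alone: rendering plus an optional upscaling pass."""
    return 1000.0 / (render_ms + upscale_ms)


# Hypothetical costs for rendering 1920 x 1080 internally.
native_1080p = gpu_fps(render_ms=6.0)  # no upscaler
dlss_perf_4k = gpu_fps(render_ms=6.0, upscale_ms=1.5)  # same internal res, plus upscaler cost
print(f"Native 1080p: {native_1080p:.0f} fps, 4K DLSS Performance: {dlss_perf_4k:.0f} fps")
```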

| DLSS mode | Scale factor | Render scale | Input at 1920 x 1080 output | Input at 2560 x 1440 output | Input at 3840 x 2160 output |
|---|---|---|---|---|---|
| Quality | 1.5x | 66.7% | 1280 x 720 | 1706 x 960 | 2560 x 1440 |
| Balanced | 1.7x | 58% | 1129 x 635 | 1506 x 847 | 2259 x 1270 |
| Performance | 2x | 50% | 960 x 540 | 1280 x 720 | 1920 x 1080 |
| Ultra Performance* | 3x | 33.3% | 640 x 360 | 854 x 480 | 1280 x 720 |

*Optional; not included in every game

The table above illustrates why we're so concerned with resolution. The highest internal render resolution you can get with DLSS turned on (short of native resolution with DLAA) is 1440p, from Quality mode at 4K output. Unless you have a 4K output, turning on DLSS means your internal render resolution is always below 1080p. As resolution drops, your CPU should become the performance bottleneck, and here we're looking at how that dynamic plays out in real-world tests.
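The input resolutions in the table come from dividing each output dimension by the mode's scale factor. This minimal sketch reproduces that arithmetic with simple rounding; games and the DLSS SDK may round differently, so a result can differ from the table by a pixel (for example, 2560 / 3 is 853.3, which this rounds to 853 while the table lists 854).

```python
# Scale factors per DLSS mode, as listed in the table above.
SCALE_FACTORS = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}

OUTPUTS = [(1920, 1080), (2560, 1440), (3840, 2160)]


def internal_resolution(width: int, height: int, mode: str) -> tuple[int, int]:
    """Divide each output dimension by the mode's scale factor and round to whole pixels."""
    factor = SCALE_FACTORS[mode]
    return round(width / factor), round(height / factor)


for mode in SCALE_FACTORS:
    for w, h in OUTPUTS:
        iw, ih = internal_resolution(w, h, mode)
        print(f"{mode:>17} {w}x{h} -> {iw}x{ih}")
```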

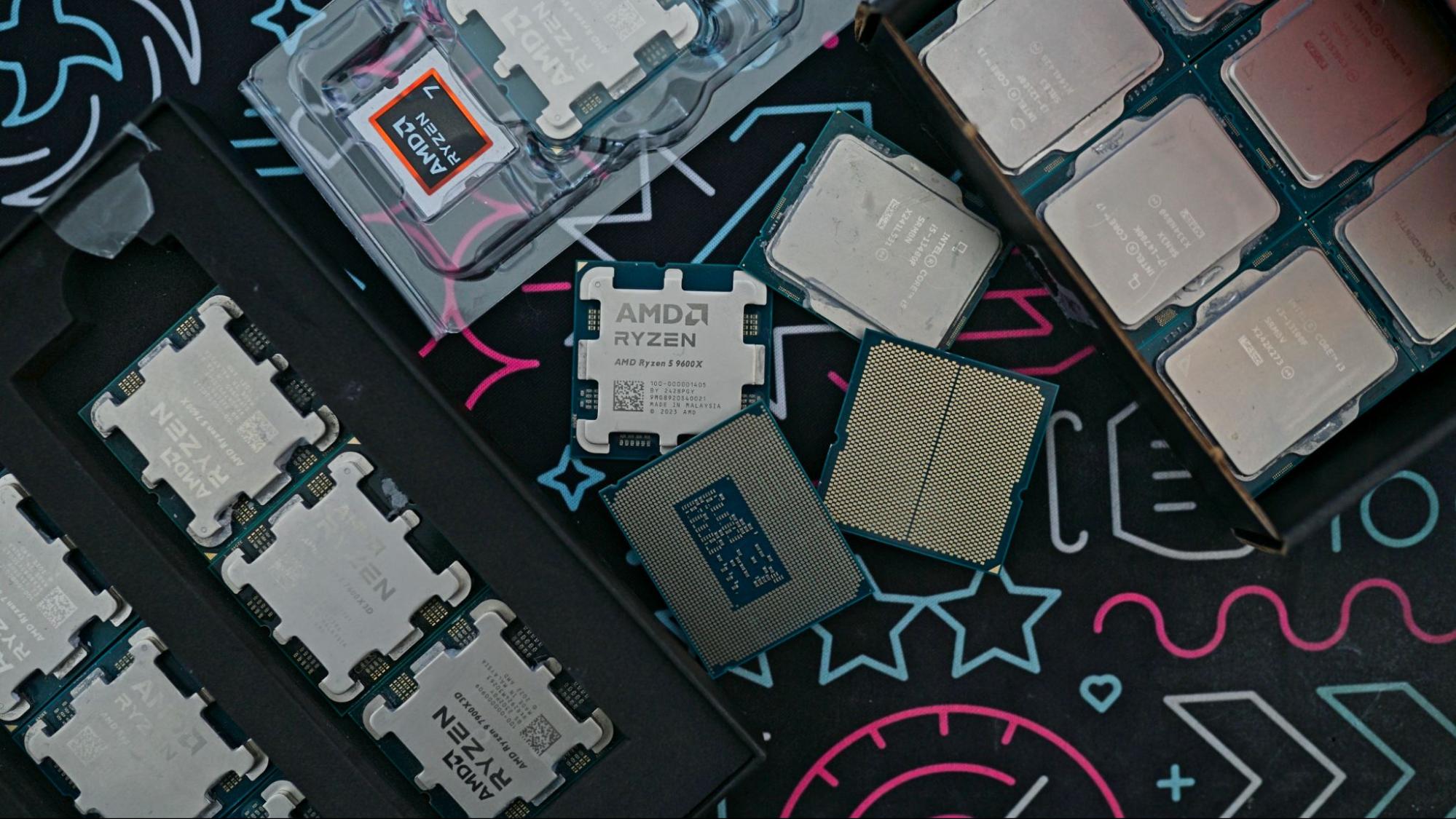

| Component | Details |
|---|---|
| CPUs | Core i5-14400, Core i7-14700K, Ryzen 5 9600X, Ryzen 7 9850X3D |
| GPU | RTX 4080 Super |
| Motherboards | MSI MPG Z790 Carbon Wi-Fi (LGA1700), MSI MPG X870E Carbon Wi-Fi (AM5) |
| Cooler | Corsair iCUE Link H150i Elite Capellix |
| Storage | 2TB Sabrent Rocket 4 Plus |
| RAM | 2x16GB G.Skill Trident Z Neo RGB DDR5-6000 |
| PSU | MSI MPG A1000GS |
| Game settings | Mixture of High/Ultra |
| DLSS settings | Quality (66.7%), Performance (50%) |

To set a baseline, we first need a GPU-bound scenario so we can see at what point CPU scaling starts to show up. To keep testing focused, we tested DLSS Quality (66.7% render scale) and Performance (50% render scale). Ultra Performance is an optional mode that isn't available in every game with DLSS, while Quality and Performance are.
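Because the scale factor $s$ applies to both axes, the share of output pixels the GPU actually renders falls with its square. For the two modes we tested:

```latex
\text{rendered pixel share} = \left(\frac{1}{s}\right)^2, \qquad
s_{\text{Quality}} = 1.5 \;\Rightarrow\; \left(\tfrac{1}{1.5}\right)^2 \approx 0.444, \qquad
s_{\text{Performance}} = 2 \;\Rightarrow\; \left(\tfrac{1}{2}\right)^2 = 0.25
```

So Performance mode renders roughly 56% as many pixels as Quality mode ($0.25 / 0.444 \approx 0.56$), before accounting for the upscaler's own cost.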

| Game | DLSS version | DLSS preset |
|---|---|---|
| Cyberpunk 2077 | v310.1.0 | Preset J |
| Doom: The Dark Ages | v310.2.1 | Preset K |
| Flight Simulator 2024 | v310.1.0 | Preset E |
| Marvel's Spider-Man 2 | v310.1.0 | Preset J |
| The Last of Us Part One | v3.1.2 | Preset A |

DLSS has the same three (or four) quality modes across games, but under the hood it's governed by different versions and presets. Nvidia is constantly refining the model DLSS uses, which shows up in new versions, and it offers a list of presets that developers can choose from to optimize for quality, stability, performance, or some combination of the three. Above, you can see the DLSS version and preset we used for each test; these are the defaults in the latest version of each game. You can override the DLSS version and preset with tools like DLSS Swapper, and you can override the preset in the Nvidia App. We trusted that the developers chose correctly and stuck with their presets.

Except for The Last of Us Part One, all of the games we chose use recent versions of DLSS with access to the newer Transformer model. Presets A through F are for older versions of DLSS that use a Convolutional Neural Network (CNN) model. The Transformer model delivers higher quality at the cost of more compute overhead, while the CNN model is cheaper to run but lower in quality. In The Last of Us Part One, Preset A is the baseline preset for the CNN model. Other presets are optimized for different types of games, with some offering more stability or less ghosting depending on the pace of the game.

All the other games use a newer DLSS version, though Flight Simulator 2024 still defaults to the older Preset E, which is based on the CNN model. Otherwise, we're looking at Preset J or K, both of which use the first iteration of Nvidia's Transformer model. Preset K has better stability but is less sharp, while Preset J is sharper but less stable. Critically, none of our games use Nvidia's newest Transformer model, which covers Presets L and M.
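The preset-to-model groupings described above fit in a small lookup table. This sketch encodes only the mapping stated in this article (A through F for the CNN model, J and K for the first Transformer model, L and M for the second). It is a hypothetical helper, not an Nvidia API, and it rejects letters outside those ranges rather than guessing.

```python
# Preset groupings as described in this article; other letters are not covered.
CNN_PRESETS = set("ABCDEF")
TRANSFORMER_GEN1_PRESETS = {"J", "K"}
TRANSFORMER_GEN2_PRESETS = {"L", "M"}


def dlss_model(preset: str) -> str:
    """Return the DLSS model family a preset letter maps to."""
    p = preset.strip().upper()
    if p in CNN_PRESETS:
        return "CNN"
    if p in TRANSFORMER_GEN1_PRESETS:
        return "Transformer (1st gen)"
    if p in TRANSFORMER_GEN2_PRESETS:
        return "Transformer (2nd gen)"
    raise ValueError(f"Preset {preset!r} is not covered by this mapping")


# Default presets in the games we tested.
tested = {
    "Cyberpunk 2077": "J",
    "Doom: The Dark Ages": "K",
    "Flight Simulator 2024": "E",
    "Marvel's Spider-Man 2": "J",
    "The Last of Us Part One": "A",
}
for game, preset in tested.items():
    print(f"{game}: Preset {preset} -> {dlss_model(preset)}")
```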

DLSS 4.5, which includes the second Transformer model, leverages FP8 instructions, and the Tensor cores on RTX 20- and RTX 30-series GPUs don’t support FP8. Because of that, there’s a significant performance drop with Preset L or M on those two generations, despite the fact that the cards support the first Transformer model. Those presets are technically available to us with the RTX 4080 Super that we used for testing. However, we opted to stick with the default, first-gen Transformer model presets. We’re looking at CPU performance, after all.
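The FP8 caveat boils down to a simple rule of thumb: the big penalty applies when a second-gen Transformer preset (L or M) runs on Tensor cores without FP8 support. The sketch below encodes that rule with a hypothetical helper. Its generation table reflects the RTX 20- and RTX 30-series limitation described above, plus our assumption that RTX 40-series (Ada Lovelace) and RTX 50-series (Blackwell) Tensor cores support FP8. It predicts only whether a large penalty is likely, not how large it is.

```python
# FP8 support in Tensor cores by RTX series (assumption for 40 and 50 series; see lead-in).
FP8_TENSOR_CORES = {20: False, 30: False, 40: True, 50: True}
SECOND_GEN_PRESETS = {"L", "M"}


def expect_big_penalty(rtx_series: int, preset: str) -> bool:
    """True when a second-gen Transformer preset meets Tensor cores without FP8 support."""
    return preset.strip().upper() in SECOND_GEN_PRESETS and not FP8_TENSOR_CORES[rtx_series]


print(expect_big_penalty(30, "L"))  # RTX 30-series running Preset L
print(expect_big_penalty(40, "M"))  # RTX 4080 Super-class card running Preset M
```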

Let's see how performance looks on the following pages.

Jake Roach is the Senior CPU Analyst at Tom’s Hardware, writing reviews, news, and features about the latest consumer and workstation processors.