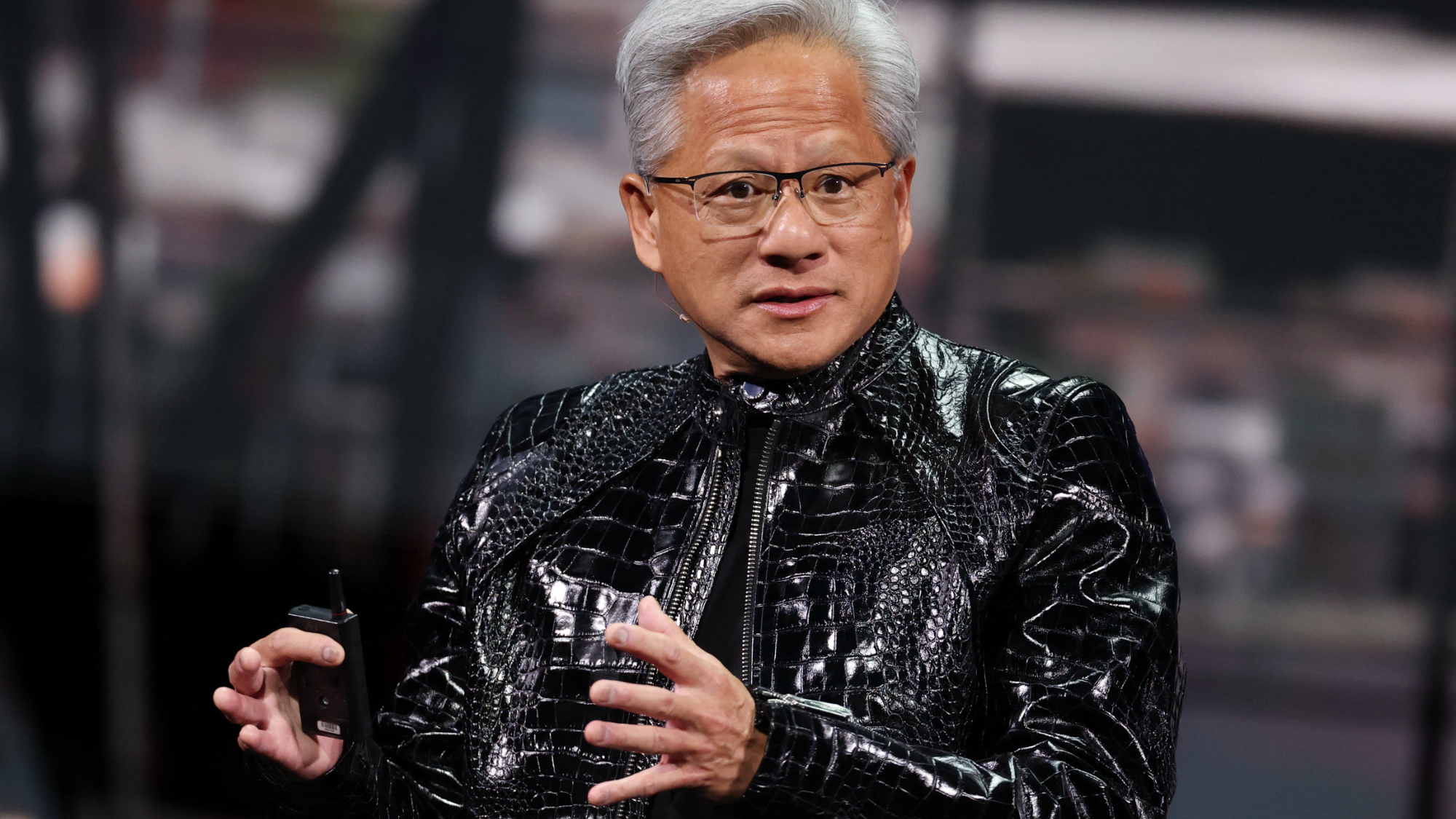

Jensen Huang claims that 'god AI' is a myth — Nvidia chief says 'doomer narrative' is 'extremely hurtful'

Jensen Huang doesn't want god AI in the world.

Nvidia CEO Jensen Huang went on the No Priors podcast to discuss the current advances in AI technology and to refute claims he believes are wrong about AI. The multi-billionaire made his feelings very clear about the current climate of AI, saying that doom-and-gloom influencers have negatively impacted the AI industry. He also claims that we are far away from "god AI" becoming a reality.

About a third of the way into the podcast, Huang said that we might someday have god AI, but clarified that "someday" is likely on a biblical or galactic timescale. Huang said, "I don't see any researchers having any reasonable ability to create god AI. The ability [for AI] to understand human language, genome language, and molecular language and protein language and amino-acid language and physics language all supremely well. That god AI just doesn't exist."

Huang further clarified that he does not believe god AI is coming "next week." In the meantime, before this prophetic god AI shows up, he holds that AI should be used to advance humanity as much as possible. He also does not want a god-level AI to exist: "I think that the idea of a monolithic, gigantic company/country/nation-state is just.. super unhelpful, it's too extreme. If you want to take it to that level, we should just stop everything..."

Moving on, Jensen fired shots at influencers who paint AI in a negative way: "..extremely hurtful frankly, and I think we've done a lot of damage lately with very well respected people who have painted a doomer narrative, end of the world narrative, science fiction narrative. And I appreciate that most of us grew up enjoying science fiction, but it's not helpful. It's not helpful to people, it's not helpful to the industry, it's not helpful to society, it's not helpful to the governments."

Huang wants AI to succeed as a tool that people can use to make their work more efficient. One use case that Huang addressed last week is the ongoing labor shortage, noting that robots can act as "AI immigrants". However, the real-world effectiveness of AI paints a different picture. Stanford University reported last year that job listings dropped 13% in three years due to AI. Furthermore, Fortune reported that 95% of AI implementations have no impact on P&L.

But that is not stopping the tech industry from increasing AI capacity worldwide. Meta just announced a 6-gigawatt-capable nuclear power plant aimed at powering AI datacenters, following in the footsteps of The Stargate Project from OpenAI.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

RoLleRKoaSTeR This "AI" that we have now is total garbage.

"Computers and programs will start thinking and the people will stop!" - Walter Gibbs -

bit_user

RoLleRKoaSTeR said: "Computers and programs will start thinking and the people will stop!" - Walter Gibbs -

This is already happening. Even with the "garbage" AI we currently have, a lot of people too easily accept whatever AI tells them.

waltc3 What should happen is that "AI" should be renamed to "IA" for Intelligence Assistant, because that is exactly what it is. The only intelligence in AI is the intelligence of the programmers who write the code. For instance, the computer doesn't know anything itself or comprehend anything, doesn't know what is true and what is false, never attended school and if it did, it would fail because it is the programmer who tells AI what is true and what is false. AI will assist the real intelligence of the user, assuming some exists...;) AI does not think or learn, but the human using it has those intelligence attributes and many more, hopefully. If the programmer lies, or errs, then AI will lie or err, etc. It's just another computer program--a search engine to be precise--and it could be a really helpful search engine or a propagandist. All depends on the coder.

bit_user

waltc3 said: The only intelligence in AI is the intelligence of the programmers who write the code.

Almost right. It's the people who determine its training set.

waltc3 said: doesn't know what is true and what is false, never attended school and if it did, it would fail because it is the programmer who tells AI what is true and what is false.

It knows based on what books and other training material it's read. Same as you, actually. If you spent your whole life being told the Earth was flat, you'd probably still believe it, even though it's wrong.

waltc3 said: AI does not think or learn,

For some definition of "think", it's a maybe. It doesn't learn on-the-fly, like we do. Recent models have a very large context window, which can give it the appearance of learning. However, it's not as if it's permanently learning new things, nor can it share what's in its context window across different users.

TheWerewolf

"extremely hurtful frankly, and I think we've done a lot of damage lately with very well respected people who have painted a doomer narrative, end of the world narrative, science fiction narrative."

First, to translate... "STOP WARNING PEOPLE ABOUT POTENTIAL DANGERS! YOU'RE AFFECTING OUR ABILITY TO MAKE MONEY!"

Second, he's technically correct as long as you pay attention only to the one scenario he mentions. The odds of AI taking over the world like Colossus are extremely low, and if that were the only possible outcome, he'd have a point.

The problem is that there are a massive number of possible outcomes that are almost as bad.

Here are just four extremely likely and world destructive outcomes:

1. The military replaces human operators on nuclear and autonomous weaponry (like drones) and because of an AI failure, WWIII is started.

2. The widespread replacement of human workers with AI in a very short time creates such massive unemployment that it collapses economies around the world (not hypothetical - this is more or less what happened in the US in the 1920s leading to the 1929 Stock Market crash and the Great Depression).

3. The use of AI in speculative financial markets results in rapid and unpredictable economies and currency flows, resulting in funds being diverted from useful projects to simply spinning around chasing stocks (which is already kind of happening with microtransactions - but even faster with more volatility).

4. The use of AI in vaccines and targeted treatments, oddly, leads to the very scenario everyone falsely accused the Chinese of having done with Covid-19: accidentally creating superviruses resulting in the death of millions.

bit_user

TheWerewolf said: The problem is that there are a massive number of possible outcomes that are almost as bad.

AI can cause chaos by empowering criminals, shady politicians, hostile state actors, and ruthless corporations with armies of amoral and increasingly clever bots to do things they could never find, retain, and motivate enough competent and knowledgeable humans to do.

It's already happening.