Nvidia CES 2026 keynote live blog — Jensen Huang takes the stage to reveal what's next for the AI company, but don't expect new consumer GPUs

Jensen Huang takes the stage to lay out Nvidia's upcoming plans at CES 2026.

Jensen Huang, Nvidia CEO, will take to the stage today in Las Vegas, Nevada, for the company's CES 2026 keynote. The keynote begins at 1 p.m. PT, and we'll be covering it live, with the latest updates.

To set the stage, Nvidia has had a record 2025, driven by demand for its data center business, which generated a staggering $51.25 billion in revenue in Q3 of FY2026, a rise of 66% year-on-year. For those looking for consumer products, such as its GeForce lineup of graphics cards, don't expect much: the company has already said that no new GeForce GPUs will appear during its later GeForce On community update, which is set to begin at 9 p.m. PT.

It's Jensen's world, and we're just living in it

In 2025, Nvidia had one of its best years to date. It launched the RTX 50 series, and its data center profits skyrocketed. However, 2026 could paint a very different picture. With the AI industry booming, and hyperscalers shovelling investment into new data centers like there's no tomorrow, the industry is waiting with bated breath to see exactly what course Huang might plot for 2026 and beyond. Needless to say, the entire industry will feel the reverberations.

— Sayem Ahmed

Nvidia preshow kicks off CES festivities

While Jensen Huang hasn't taken to the stage yet, Nvidia is running a 'pre-game' show, where it's showcasing a range of applications that use Nvidia tech, complete with an interview panel. While it's a neat warm-up, don't expect anything too staggering while things are still kicking off.

Huang is set to take the stage at 1 p.m. PT, so there's not too long to wait until we get to the real meat of today's keynote.

— Sayem Ahmed

The preshow is over: T-minus 3 minutes until the keynote begins

The preshow is now over, and Huang is expected to take the stage in three minutes to kick things off in earnest. A high-resolution screen is being rolled onto the stage to some semi-dramatic music, and we expect to hear more shortly. While this is fun, what are the odds that the music is AI-generated?

There may be some expectation that Huang will emerge carrying some kind of object. We've seen him roll out with robots and even a shield made of chips before; surely the company has the cash for more than just a new leather jacket.

— Sayem Ahmed

Nvidia is late to its own party

Despite a slick preshow, Nvidia has yet to officially commence its keynote, running around ten minutes later than anticipated. You'd think that with the vast resources available to Nvidia, they'd run on time. Perhaps an AI model running a calendar hallucinated somewhere?

Regardless, the industry is waiting with bated breath for things to kick off, and we'll be right here reporting the latest, as it happens.

— Sayem Ahmed

Jensen Huang takes to the stage

Slightly later than expected, the show has now officially begun, with Nvidia CEO Jensen Huang taking the stage to lay out what 2026 and beyond might hold for Nvidia. Expect Nvidia to focus on topics like AI, data centers, and robotics. While it might not be quite as exciting as the launch of a new consumer graphics card, whatever is announced over the course of the keynote will likely set the tone for the rest of the industry this year.

— Sayem Ahmed

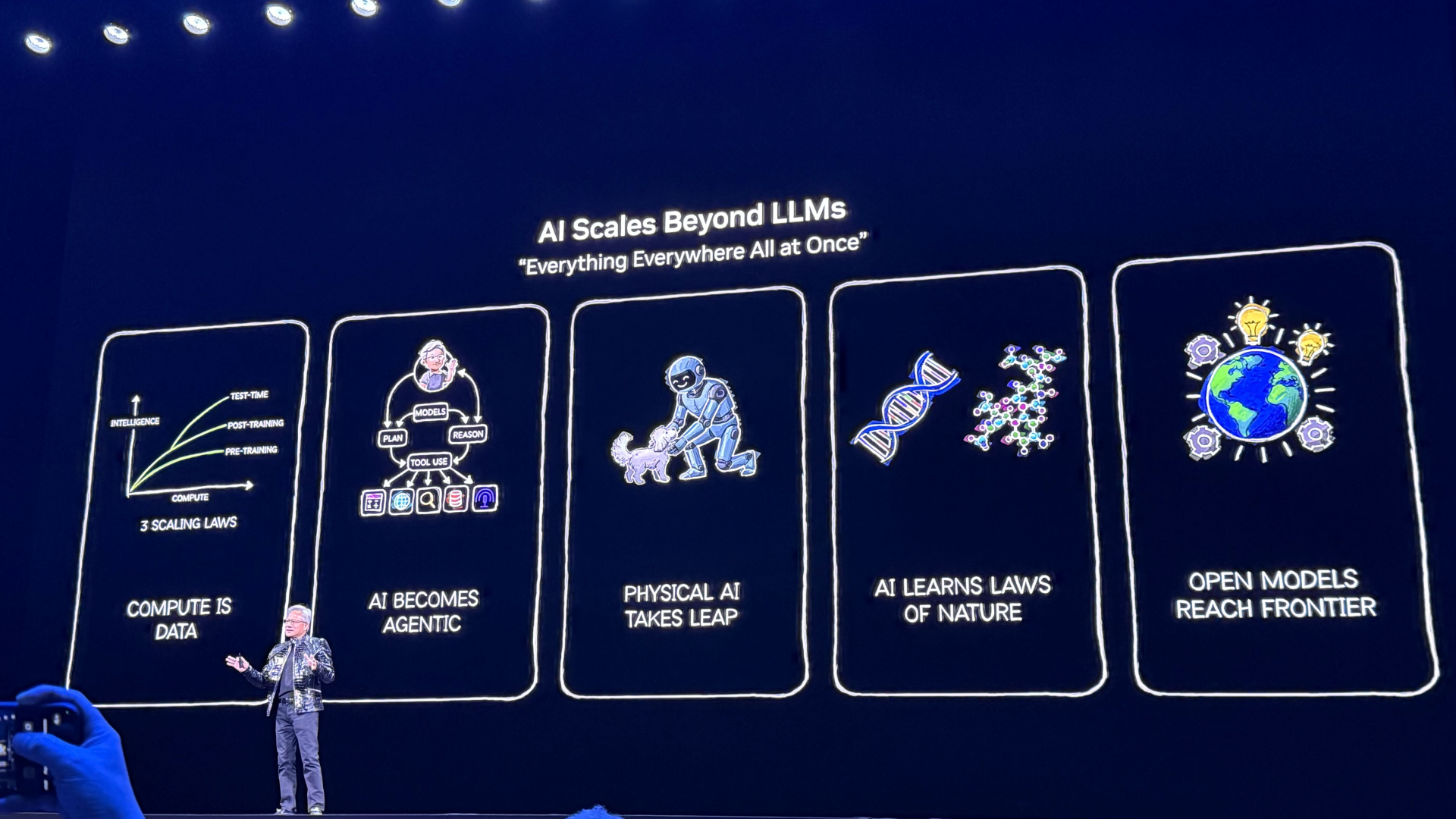

Inventing a new world with AI

100 trillion dollars of industry investment is shifting over to AI, or so Jensen Huang claims. Huang says R&D budgets are now pouring into AI, perhaps in an attempt to cool claims of circular funding in the industry. He is now turning the conversation into a history lesson on AI models, reiterating that agentic AI, such as Cursor, is a focus for 2026 and beyond.

— Sayem Ahmed

Nvidia's focus on frontier models across industries

Huang reiterated that Nvidia is not a cloud distribution service. With DGX Cloud winding down to R&D use, the company is now focused on developing frontier models, such as Earth-2, Cosmos (a world foundation model), GR00T for robotics and locomotion, and more.

Huang says Nvidia open-sources not only these models but also the datasets behind them, so developers can understand what makes them tick, with the aim of letting every company take part in the coming wave of AI development. And just like that, the lights go out, and Jensen makes a joke.

— Jeffrey Kampman

AI tops leaderboards across the industry

Nvidia's models are topping leaderboards, and the company hopes they will make it easier for developers to use and build AI agents for research, model development, and more. With reasoning capabilities, AI models no longer have to get everything right in a single pass.

Jensen cited Perplexity as an example of how an AI reasoning chain can combine multiple models, fitting just about any model to a given task, an approach that has empowered AI startups, all thanks to Nvidia's technology, of course.

— Jeffrey Kampman

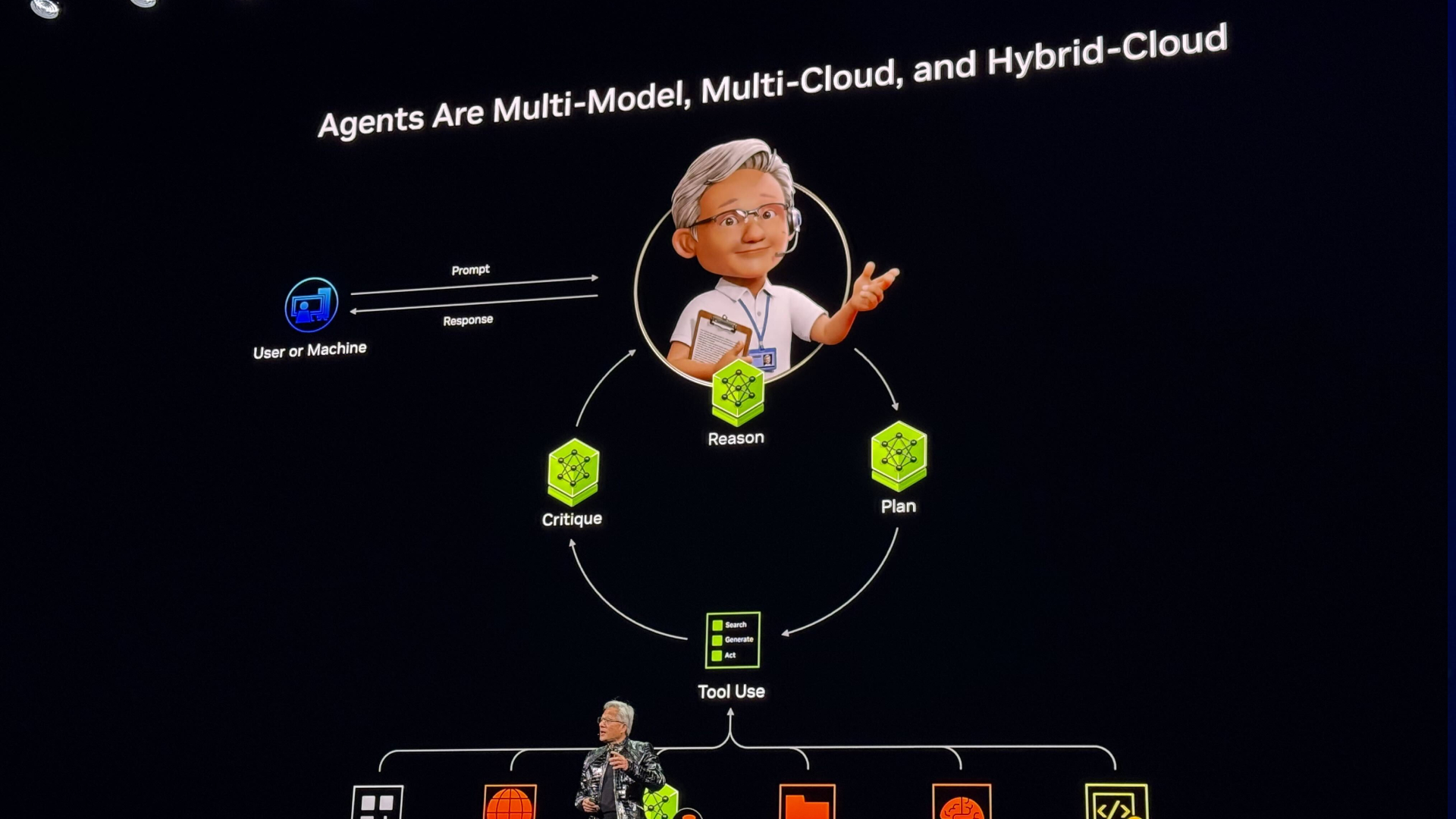

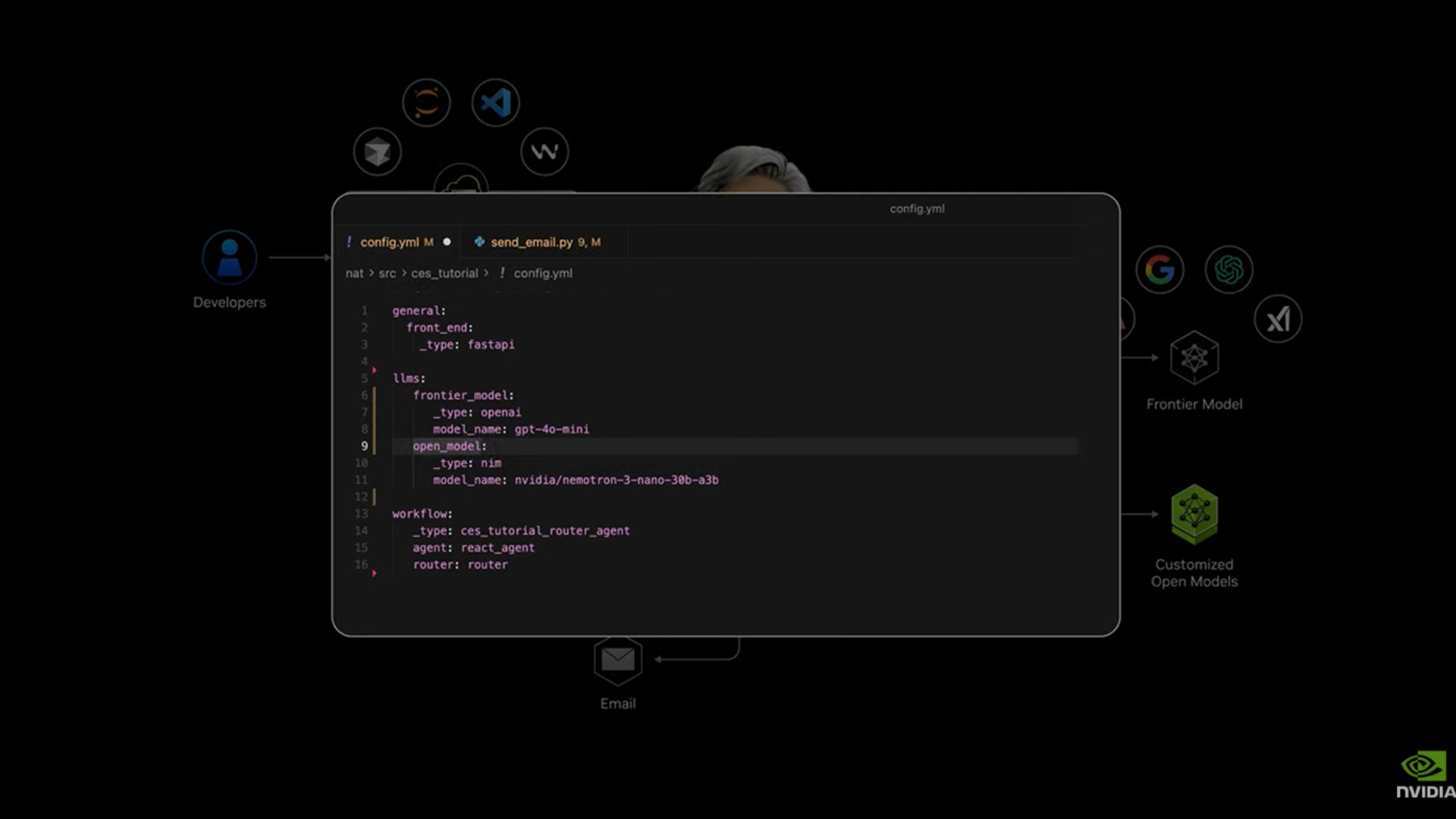

Nvidia Brev: Creating closed agentic sandboxes

Using a new tool called Nvidia Brev, the company demoed a setup that hooks up to a DGX Spark inside a closed environment for a variety of AI applications, or connects to frontier models via Hugging Face. A couple of examples were given, including integrating it with robotics.

"Isn't that incredible?" Jensen remarks, before noting that just a few years ago this would have been impossible. By combining a variety of applications into what Nvidia calls an agentic framework, Brev builds out the application architecture inside a closed sandbox.

— Sayem Ahmed

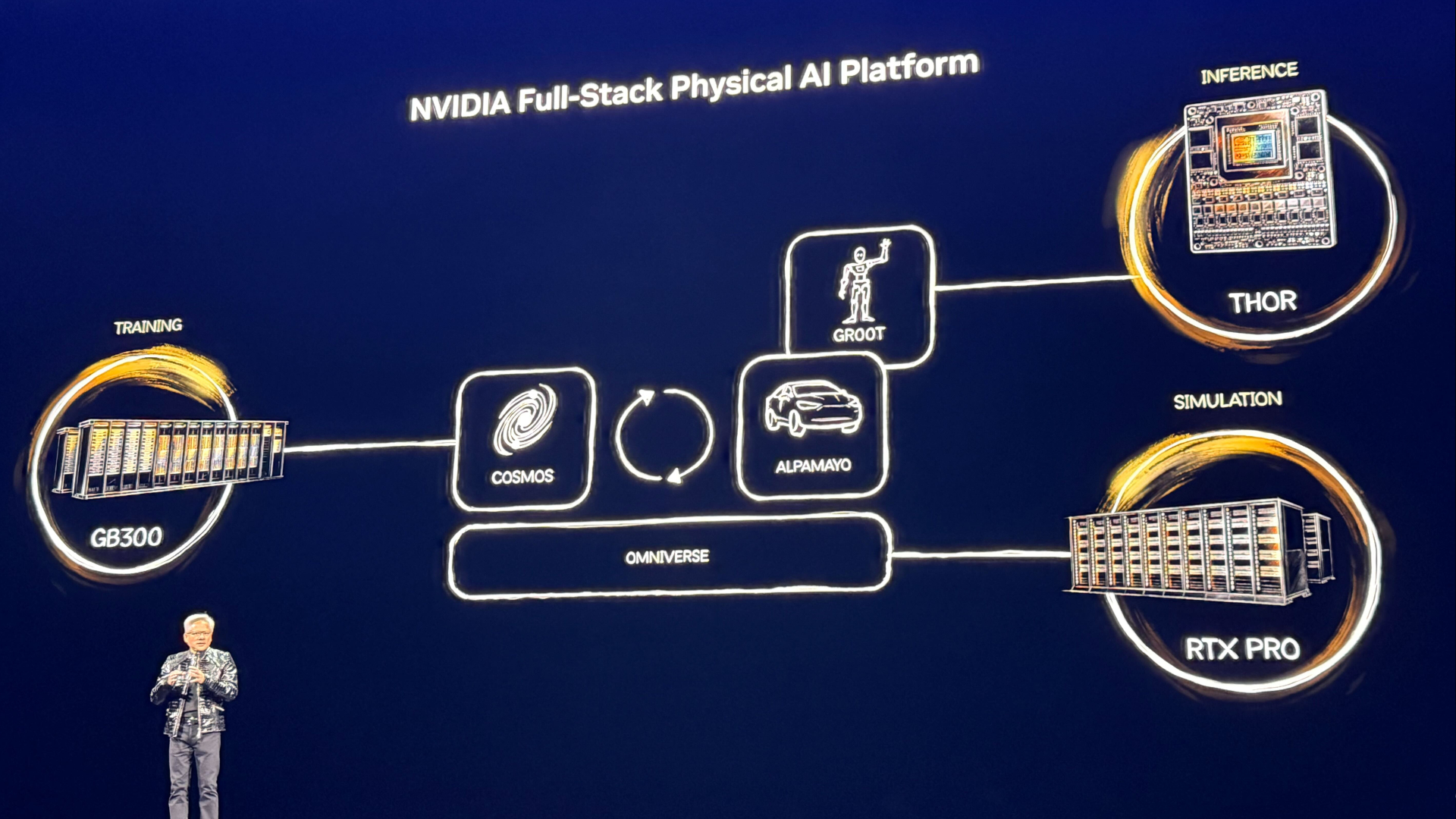

Full-stack Physical AI: Compute becomes data

Jensen is explaining how several computers, connected through Nvidia Omniverse, can create a full-stack AI platform spanning training (GB300), simulation (RTX Pro), and inference (labelled THOR). By using multiple models aligned with language, compute has effectively become data, Jensen says.

Synthetic data grounded in physics can be fed through a Cosmos foundation model to produce physically based, digestible data for training a new AI platform. This could reduce the need for real-world training data for physical AI platforms, turning elements like trajectory predictions into realistic, usable training data.

— Jeff Kampman

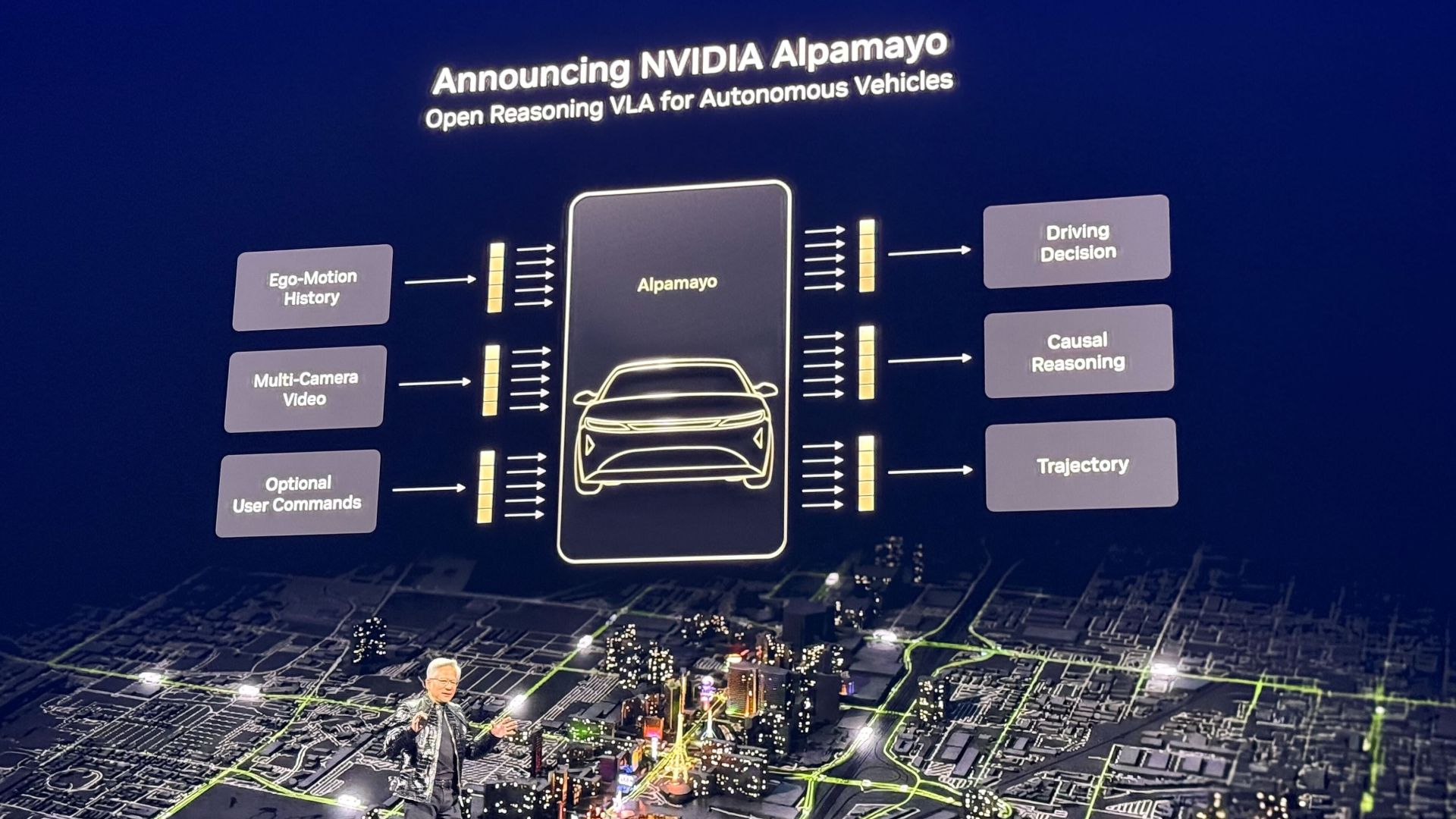

Nvidia Alpamayo: A thinking, reasoning AI model for vehicles

Nvidia has created Alpamayo, an AI model for autonomous vehicles powered by the Cosmos AI model. It can reason about every action: what it's about to do, how it reasoned its way to that action, and the trajectory it will then follow.

Huang says it's trained on a large amount of human driving data, supplemented by Cosmos' synthetic data. The keynote has now shifted to a real-world demo of the model in action, with a car (in San Francisco) taking turns and making decisions on the fly. Huang notes that it's impossible to capture every possible scenario, but if the model reasons through a situation and breaks it down into smaller tasks, it can understand it.

— Jeff Kampman

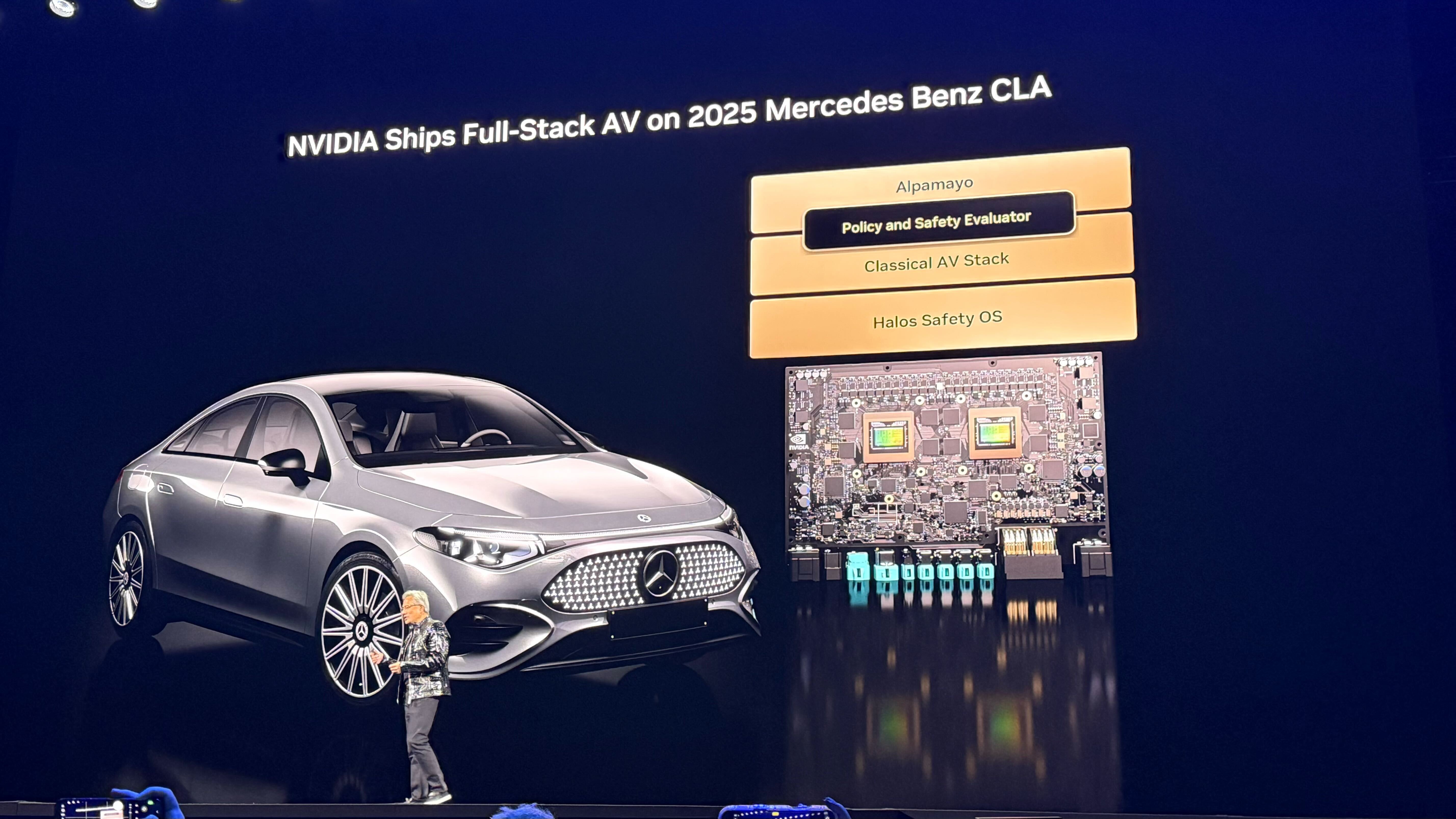

More Alpamayo: coming to the Mercedes-Benz CLA

"Not only does your car drive as you would expect it to drive, it reasons about every scenario and tells you what it's about to do."

The first vehicle powered by Alpamayo is the 2025 Mercedes-Benz CLA, shipping in the U.S. in Q1. It has been rated the world's safest car by NCAP.

How does Nvidia keep its car safe?

Nvidia says it has spent years building a separate AV stack that's fully traceable, and the car runs both stacks, that one and Alpamayo, at the same time as a guardrail against accidents and scenarios you can't legislate against. A real shot across Tesla's bow.

"Use some Nvidia wherever you can"

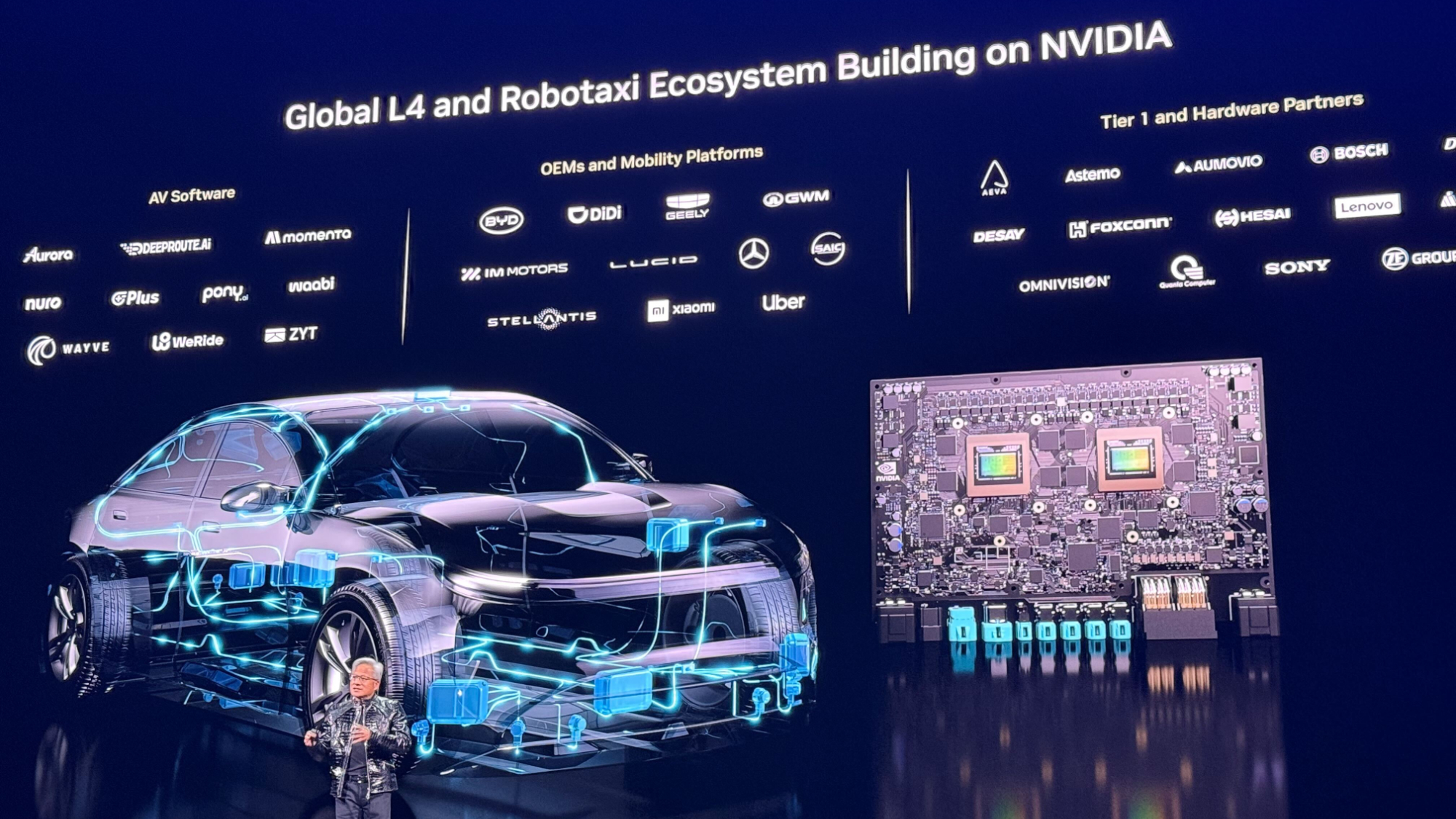

Jensen says that within the next 10 years, a high percentage of the world's cars will be autonomous, adding that we can all agree this market has very much arrived.

IT'S ROBOT TIME

It's the moment you've all been waiting for: Jensen's robot hour! It's not just for entertainment, either. Nvidia says the principles that underpin its Alpamayo stack for autonomous vehicles will also inform other AI industries, including robotics. This is great cinema.

"Nobody's as cute as you guys"

Caterpillar and Uber Eats, as well as Boston Dynamics, join Jensen on stage to showcase the different types of robots that Nvidia's AI stack is being used to train.

Industry acceleration

Jensen says that the full Cadence stack is going to be accelerated by Cuda-X, and that future robots will have their chips designed inside tools like Cadence and Synopsys.

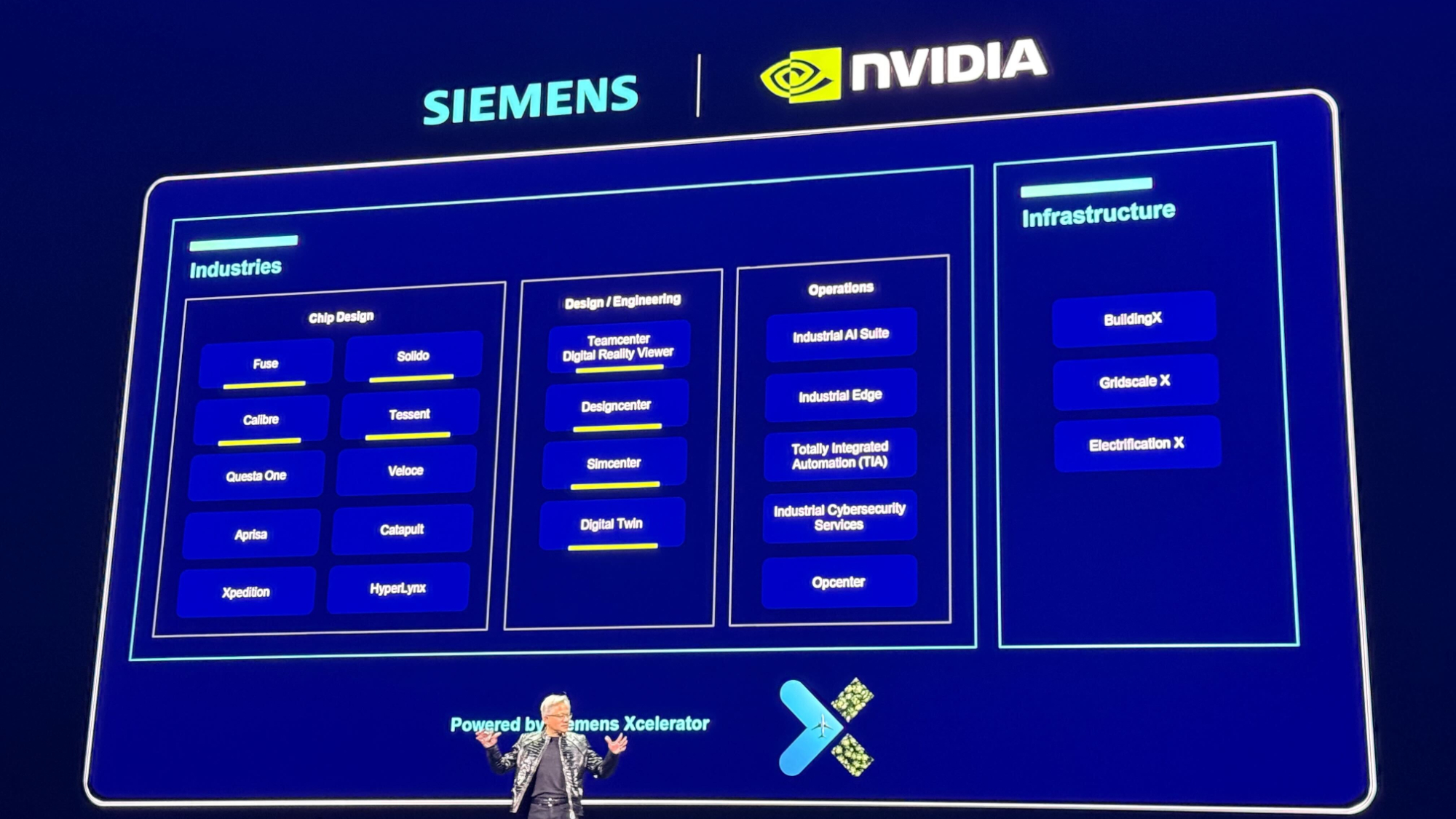

"We're going to integrate Cuda-x deeply into the world of Siemens." The robots are jumping up and down on the stage now.

Powering industry

Nvidia says that as global labor shortages worsen, we need AI more than ever to power industry. Siemens is integrating CUDA-X, Omniverse, and AI models into its whole portfolio of EDA, CAE, and digital twin tools and platforms, bringing physical AI to the full industrial lifecycle: design and production, simulation, and operations.

Insane demand for AI computing

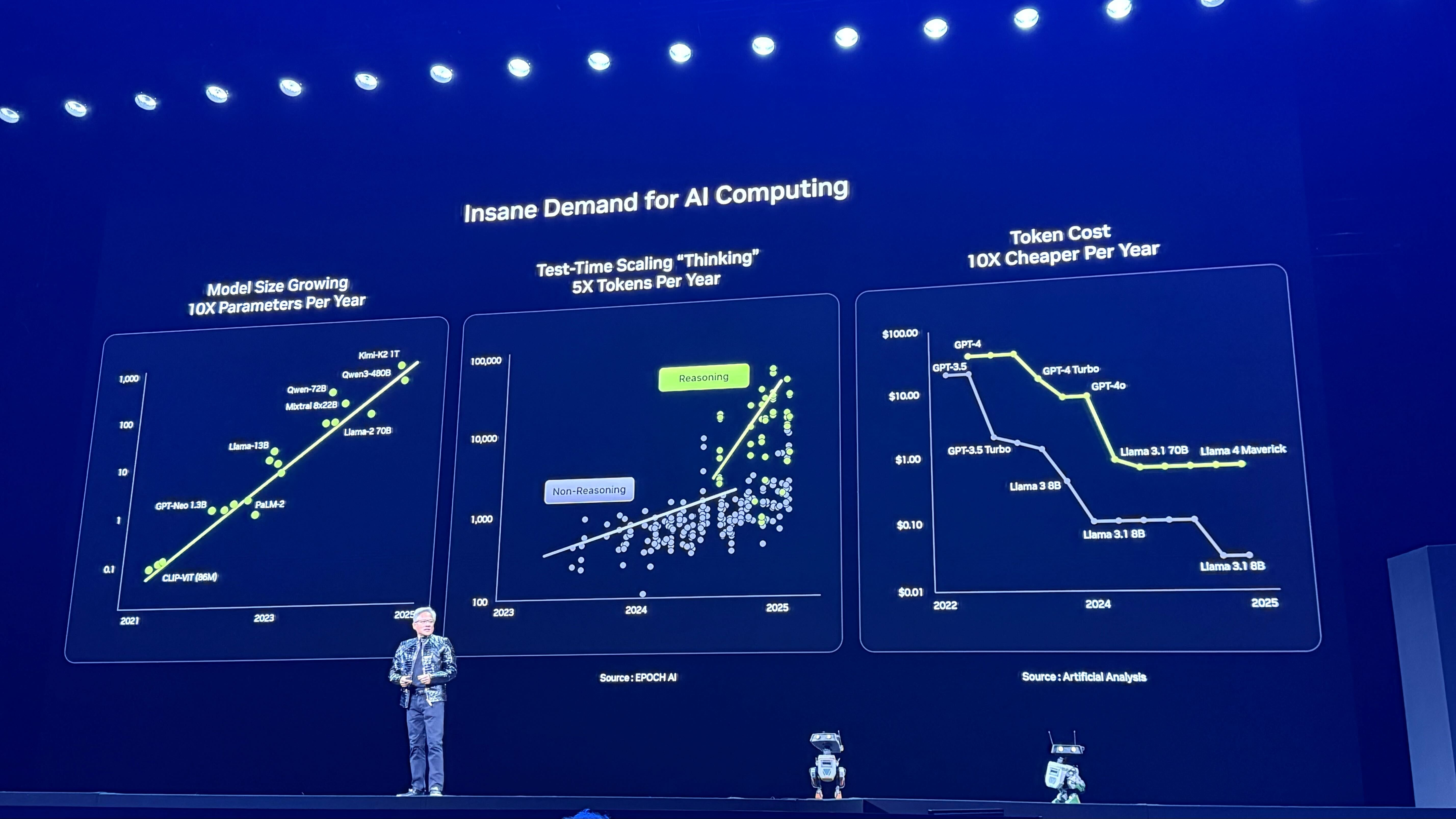

Jensen says the demand for AI is "skyrocketing," with model sizes growing 10x in parameters per year, test-time scaling needs increasing 5x per year, and token costs falling 10x per year. That last figure reflects a race in which everyone is trying to reach the next level, and that, Huang argues, makes it a computing problem.
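Those yearly rates compound quickly. The Python below is a rough back-of-envelope sketch that projects them a few years forward, assuming the three trends simply hold constant. It also assumes, as our own simplification rather than anything Nvidia stated, that compute per query scales with parameter count multiplied by tokens generated, so the two growth rates multiply. The function names are hypothetical.

```python
# Back-of-envelope sketch: compound the yearly rates quoted in the keynote.
# Assumption (ours, not Nvidia's): compute per query scales roughly with
# parameter count x tokens generated, so those two growth rates multiply.

PARAM_GROWTH = 10.0        # model size: 10x parameters per year (as quoted)
TEST_TIME_GROWTH = 5.0     # test-time scaling: 5x more tokens per year (as quoted)
TOKEN_COST_DECLINE = 10.0  # token cost: 10x cheaper per year (as quoted)


def compound(rate: float, years: int) -> float:
    """Return the total multiplier after `years` of constant yearly growth."""
    return rate ** years


def projection(years: int) -> dict[str, float]:
    """Project the quoted trends forward, assuming they hold constant."""
    params = compound(PARAM_GROWTH, years)
    test_time = compound(TEST_TIME_GROWTH, years)
    return {
        "parameters": params,
        "test_time_tokens": test_time,
        # Hypothetical combined multiplier under the assumption above.
        "compute_per_query": params * test_time,
        # Relative cost of one token compared with today.
        "cost_per_token": 1.0 / compound(TOKEN_COST_DECLINE, years),
    }


if __name__ == "__main__":
    for years in (1, 2, 3):
        p = projection(years)
        print(
            f"year {years}: {p['parameters']:,.0f}x params, "
            f"{p['test_time_tokens']:,.0f}x test-time tokens, "
            f"~{p['compute_per_query']:,.0f}x compute per query, "
            f"token cost {p['cost_per_token']:.3f}x"
        )
```

Under those assumptions, three years of the quoted trends works out to 1,000x the parameters and 125x the test-time tokens, or roughly 125,000x the compute per query, while each token costs a thousandth as much. Transistor counts alone don't grow anywhere near that fast, which is the gap Huang says Nvidia has to close every year.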

"We have to advance the state of computation every single year."

Vera Rubin is in full production

"I can tell you that Vera Rubin is in full production." Nvidia says it arrives just in time for the next frontier of AI.

"An order of magnitude every single year"

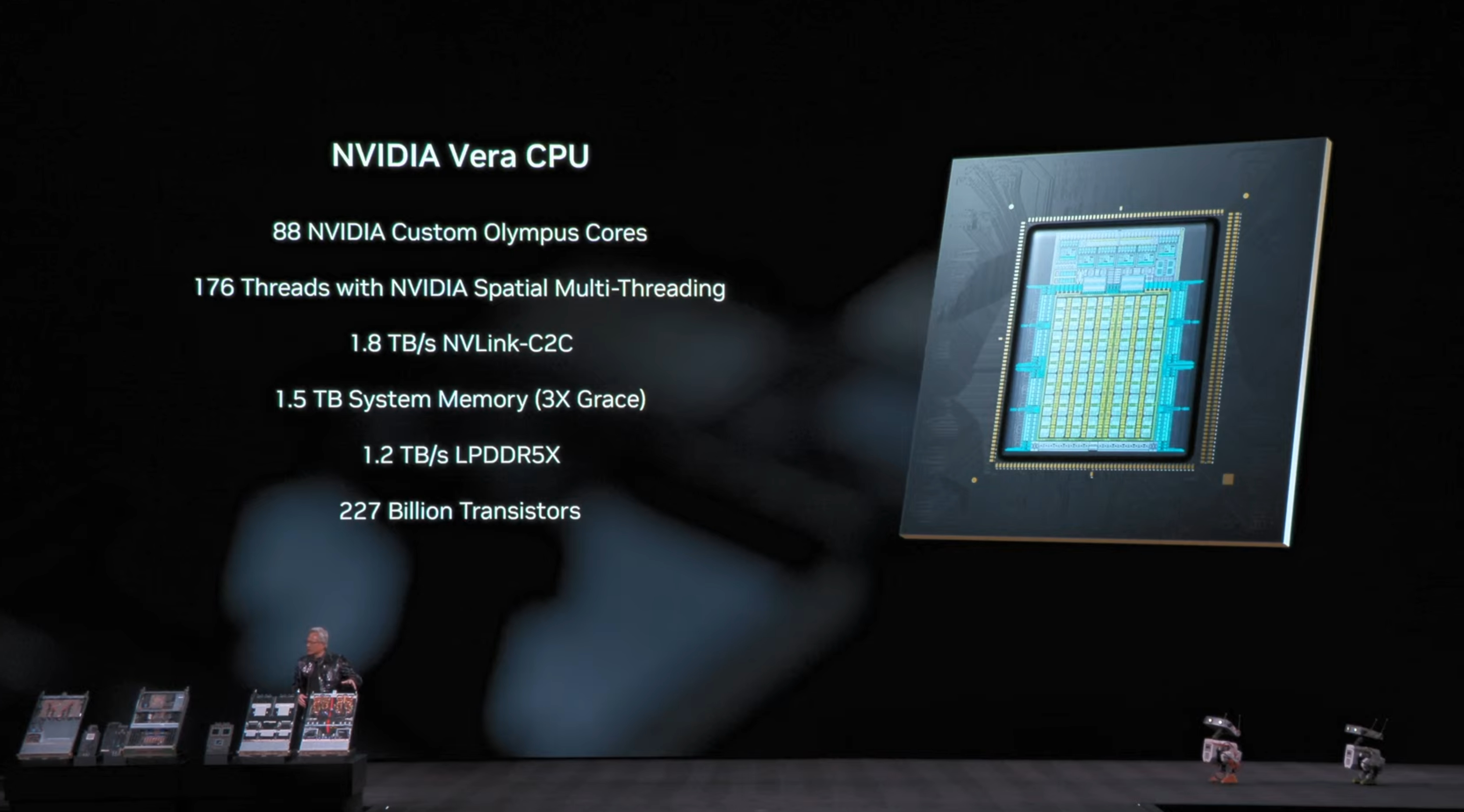

The Vera CPU in all its glory, with 88 custom Olympus cores and 227 billion transistors. In the bottom right, two dejected robots leave the stage, no longer the center of attention.

Jensen says Nvidia has a rule: "No new generation should have more than one or two chips change." However, "the number of transistors we get year after year after year can't possibly keep up with the models, with 5x more tokens per year generated, it can't keep up with the cost decline of the tokens." As a result, Nvidia can no longer keep up with the demands of AI unless it innovates across every chip, and the entire stack, at the same time.

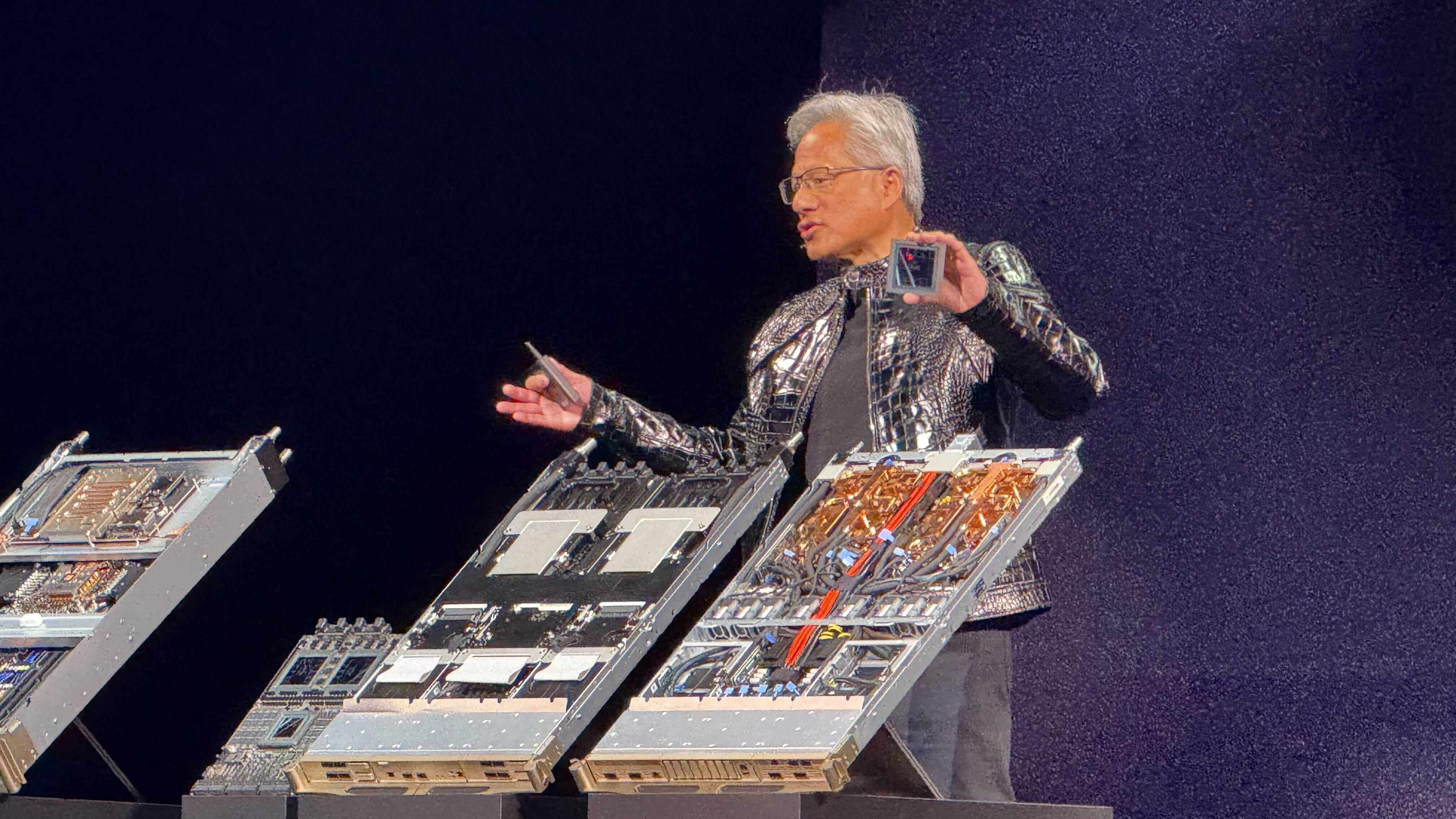

"I think this weighs a couple of hundred pounds"

Jensen cracks a joke about the weight of Vera Rubin that absolutely bombs. More pertinently, building the Rubin chassis takes just five minutes, down from two hours. And it's 80% liquid cooled.

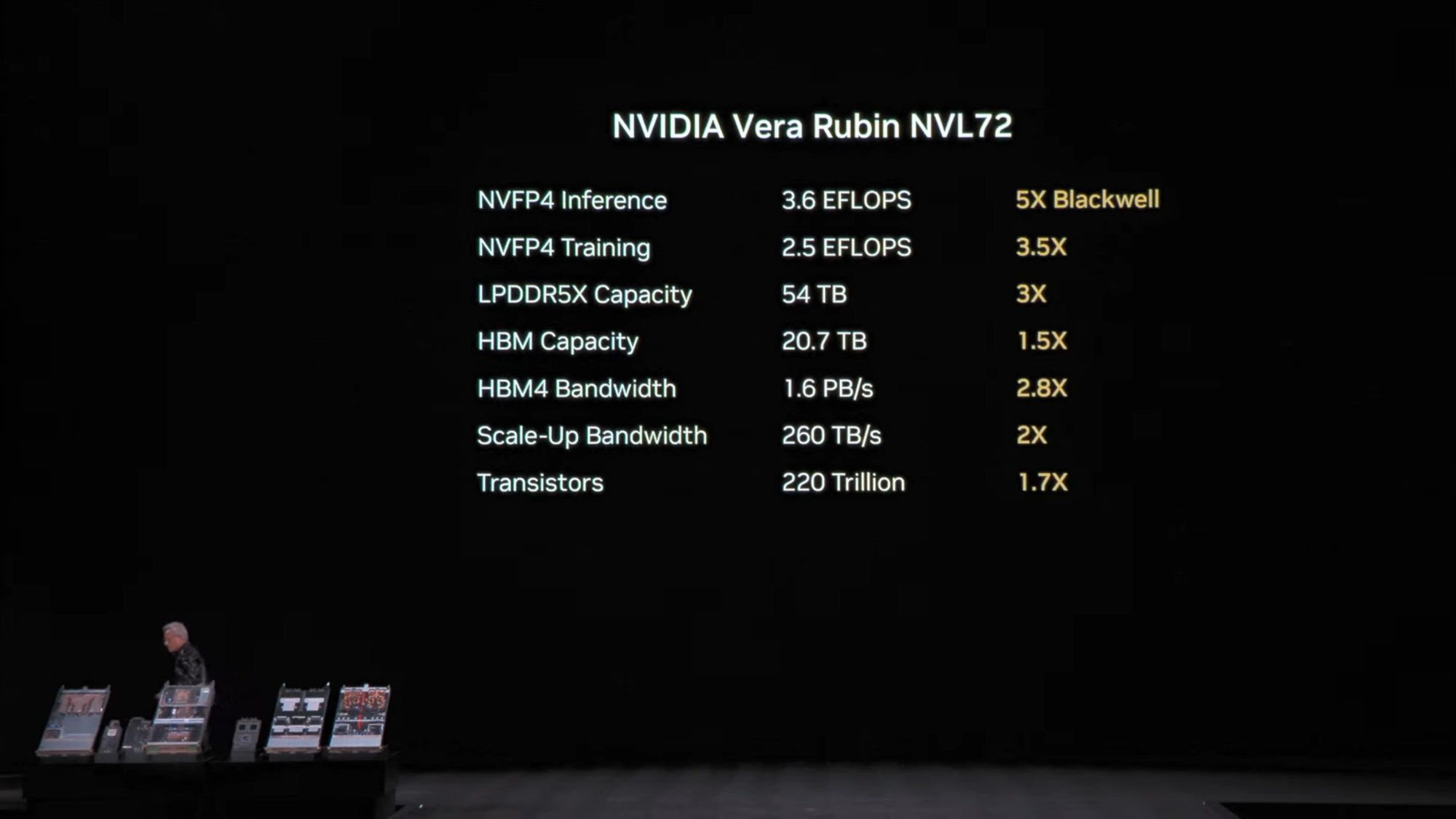

Vera Rubin vs Blackwell

Here's how Vera Rubin stacks up against Blackwell. Jensen tells another joke about the system's weight: "It weighs two and a half tons today, because we forgot to drain the water out of it." It lands much better than his previous attempt.

"The power of Vera Rubin is twice as high as Grace Blackwell"

Despite the huge increase in power, Vera Rubin's cooling requirements remain the same, with no water chillers required. "We're basically cooling this computer with hot water."

BlueField-4

"We created BlueField-4 so that we could have a very fast KV cache memory store right in the rack." Jensen says everyone is suffering from storage issues, crushed under the amount of KV cache traffic moving around.

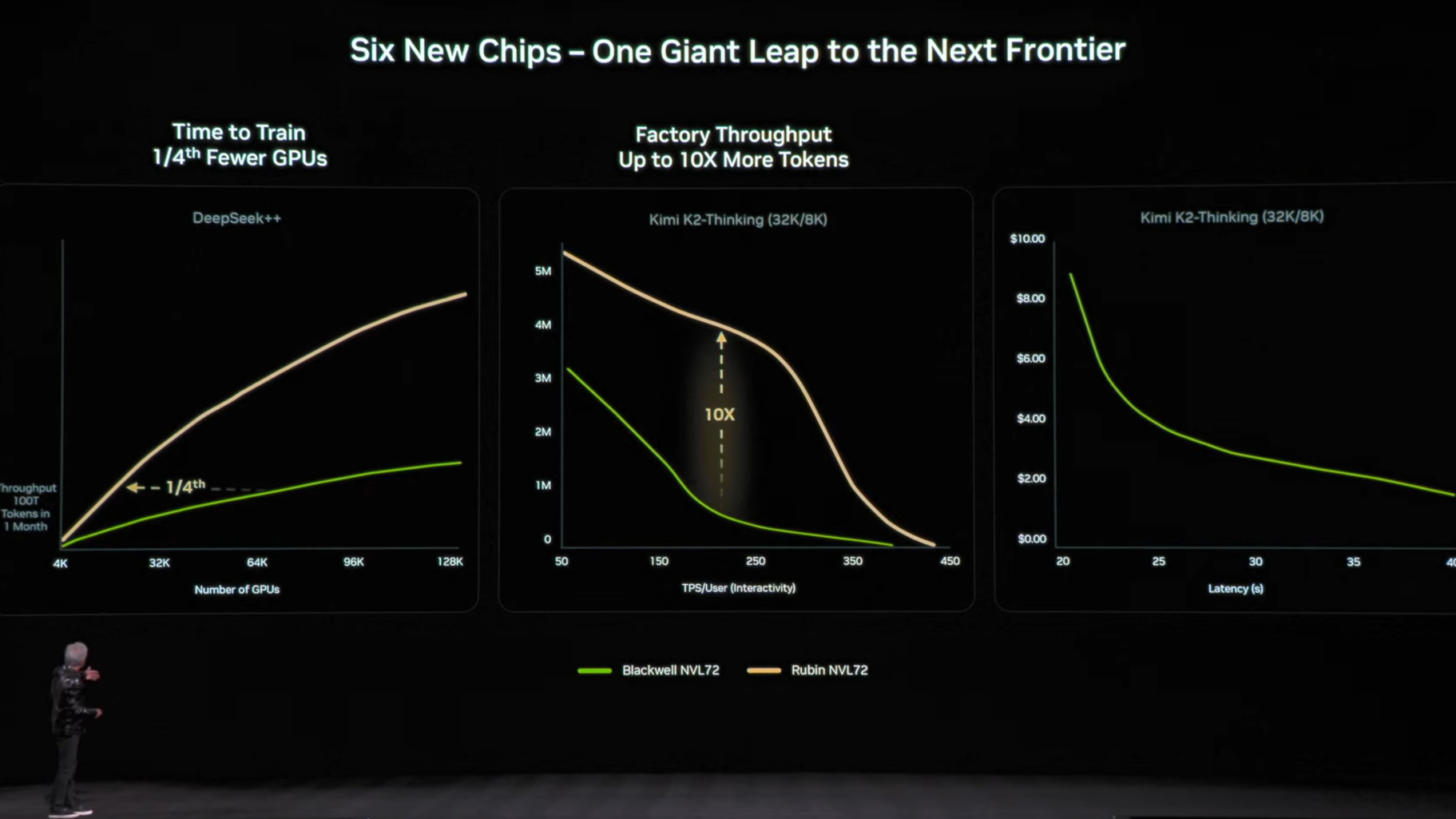

A giant leap

Nvidia says that Vera Rubin is "One Giant Leap to the Next Frontier."

With four BlueField-4 DPUs and 150TB of memory (presumably NVMe storage), each GPU gets an additional 16TB of context memory. "This is crazy sh*t" - Jeffrey Kampman.

So how well does Vera Rubin perform?

As you can see, quite well. According to Nvidia, Vera Rubin's throughput is through the roof compared to Blackwell: in a time-to-train test of 100T tokens in one month, Rubin needs only a quarter as many GPUs. Rubin's factory throughput is around 10x that of Blackwell, at just 1/10th the token cost.

And we are done!

Jensen leaves the stage, but says Nvidia will show us some outtakes of slides left on the cutting room floor. Yes, there are more robots.

And that is all for Nvidia! The keynote's biggest revelation was Vera Rubin, which is now in full production.
