Tom's Hardware Verdict

The DGX Spark is a well-rounded toolkit for local AI thanks to solid performance from its GB10 SoC, a spacious 128GB of RAM, and access to the proven CUDA stack. But it's a pricey platform if you don't intend to use its features to the fullest.

Pros

- GB10 SoC is efficient and reasonably fast for AI tasks
- 128GB of RAM makes it easy to run most local AI models
- Polished software and extensive docs get you up and running fast
- Proven CUDA ecosystem
- Unique ConnectX 7 connectivity options for local clustering

Cons

- Pricey if you don’t need everything it offers
- Doesn’t run Windows (yet)
- Gaming on GB10 is possible, but this isn’t a GeForce

The fruits of the AI gold rush thus far have frequently been safeguarded in proprietary frontier models running in massive, remote, interconnected data centers. But as more and more open models with state-of-the-art capabilities are distilled into sizes that can fit into the VRAM of a single GPU, a burgeoning community of local AI enthusiasts has been exploring what’s possible outside the walled gardens of Anthropic, OpenAI, and the like.

Today's hardware hasn’t entirely caught up to the rapid shift in resources that AI trailblazers demand, though. Thin and light x86-powered “AI PCs” largely consist of familiar x86 CPUs with lightweight GPUs and some type of NPU bolted on to accelerate machine learning features like background blur and replacement. These systems rarely come with more than 32GB of RAM, and their relatively anemic integrated GPUs aren’t going to churn through inference at the rates enthusiasts and developers expect.

Gaming GPUs bring much more raw compute power to the table, but they still aren't well-suited to running more demanding local models, especially large language models. Even the RTX 5090 "only" has 32GB of memory on board, and it’s trivial to exhaust that pool with cutting-edge LLMs. Getting the model into RAM is just part of the problem, too. As conversations lengthen and context lengths grow, the pressure on VRAM only increases.
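To put rough numbers on that pressure, here is a back-of-the-envelope sketch in Python. It is only an illustration: the 70B parameter count and the layer, head, and dimension figures describe a hypothetical model with grouped-query attention, not any specific product, and real runtimes add overhead this ignores.

```python
def weights_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    return params_billion * 1e9 * bits_per_weight / 8 / 2**30


def kv_cache_gib(layers: int, kv_heads: int, head_dim: int,
                 context_tokens: int, bytes_per_value: int = 2) -> float:
    """Approximate KV cache size for a single sequence, in GiB.

    Every token stores one key vector and one value vector per layer,
    hence the leading factor of 2.
    """
    per_token_bytes = 2 * layers * kv_heads * head_dim * bytes_per_value
    return per_token_bytes * context_tokens / 2**30


# Hypothetical 70B-parameter model shape (assumed for illustration only).
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weights_gib(70, bits):.1f} GiB")

for ctx in (8_192, 32_768, 131_072):
    size = kv_cache_gib(LAYERS, KV_HEADS, HEAD_DIM, ctx)
    print(f"{ctx} tokens of context: {size:.1f} GiB of KV cache")
```

Under these assumptions, even 4-bit weights land just past the 32GB mark, and the 16-bit KV cache grows from 2.5 GiB at 8K tokens to 40 GiB at 128K tokens, all on top of the weights.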

If you want to get more VRAM on a discrete GPU to hold larger AI models, or more of them at once, you're looking at professional products like an $8500+ RTX Pro 6000 Blackwell and its 96GB of GDDR7 (or two, or three, or four). And that’s not even counting the cost of the exotic host system you’ll need for such a setup. (Can I interest you in a Tinybox for $60K?)

The hunger for RAM extends to other common AI development tasks, like fine-tuning an already trained AI model for better performance on domain-specific data or quantizing an existing model to reduce its resource footprint for less powerful systems.

In the face of this endless hunger for RAM, systems with large pools of unified memory have become attractive platforms for those looking to explore the frontiers of local AI. Apple paved the way with its M-series SoCs, which in their latest and greatest forms pair as much as 512GB of LPDDR5 and copious memory bandwidth with powerful GPUs.

And AMD’s Ryzen AI Max+ 395 (aka Strix Halo) platform has found a niche as a somewhat affordable way to get 128GB of RAM alongside a relatively powerful GPU for local LLM tinkering.

But none of those systems natively support Nvidia’s CUDA, which remains the dominant software platform for AI development the world over. Enter the DGX Spark, first announced all the way back at CES 2025, which brings the combo of a high-performance Arm CPU and a Blackwell GPU to desktops for the first time alongside full support for the CUDA ecosystem.

The centerpiece of the DGX Spark is Nvidia’s GB10 SoC, which pairs a MediaTek-produced Arm CPU complex with a Blackwell GPU on one package. Both of these chiplets are fabricated on a TSMC 3nm-class node, and they’re joined together by Nvidia’s coherent, high-bandwidth NVLink C2C interconnect. The two chiplets share a coherent 128GB pool of LPDDR5X memory that offers a tantalizing canvas for pretty much any common AI workload you can think of.

Ports, external stuff, operating system and software

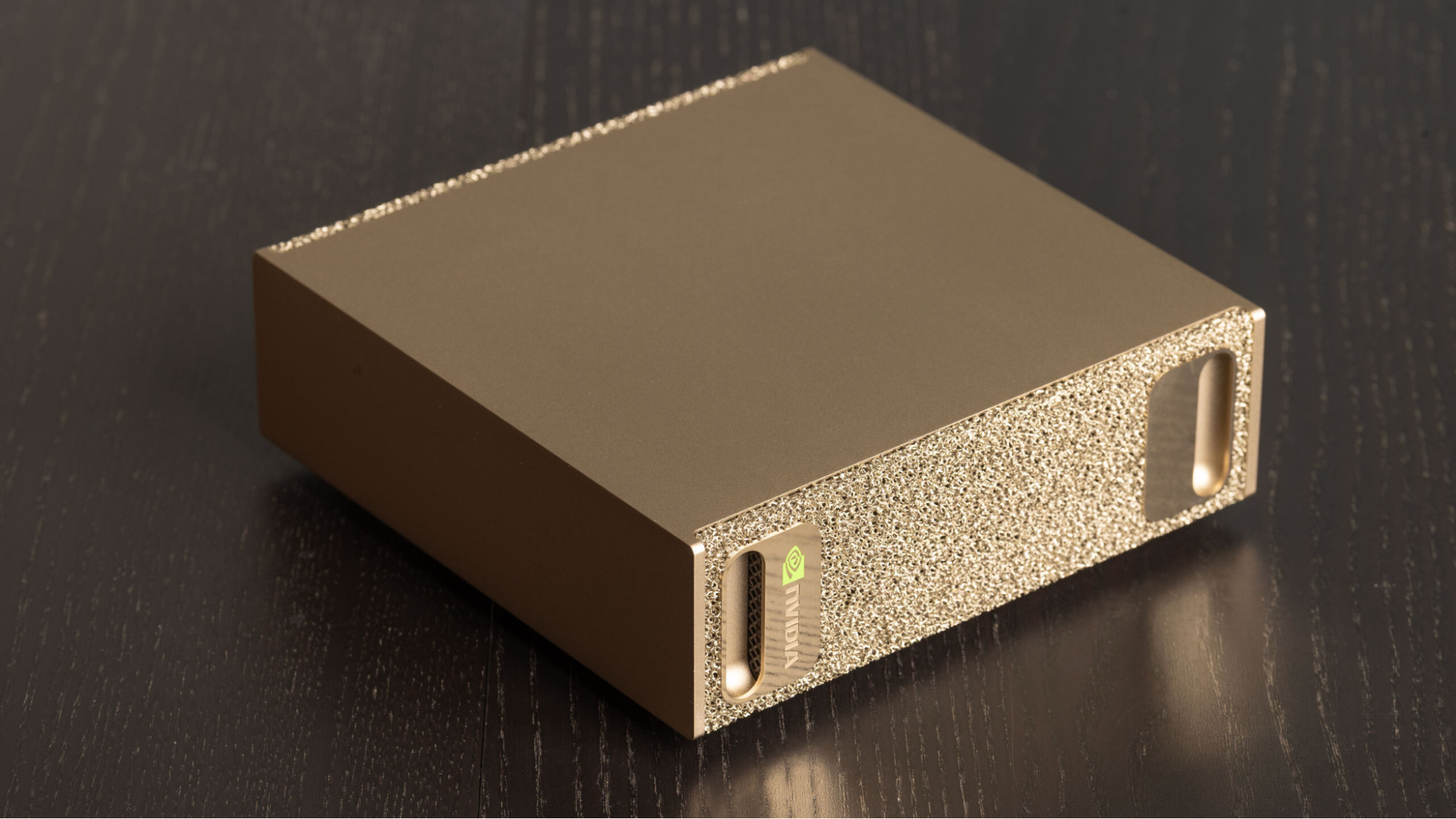

The Spark itself is a pretty straightforward mini PC. It measures 5.9” by 5.9” by 2” (150mm by 150mm by 50.5mm) for a volume of just 1.1 liters.

The Founders Edition version we’re reviewing today has a spiffy gold finish with a metal foam front and back panel for ventilation. The “rack handles” at the front of the device conceal air intakes, but the top and sides are otherwise featureless.

Flip the Spark over, and you see another air intake along the bottom edge, as well as a removable rubber foot that conceals the wireless networking antennas and allows for access to the user-replaceable M.2 2242 SSD. Our unit came with a 4TB drive.

Around back, you get a power button, one USB-C power input, three USB-C 20Gbps ports with DisplayPort alt mode support, an HDMI 2.1a port, a 10Gb Ethernet port, and two QSFP ports for the onboard ConnectX 7 NIC running at up to 200 Gbps. That exotic NIC lets you cluster a pair of Sparks together for experimentation with Nvidia’s NCCL distributed computing libraries.

If the Founders Edition Spark isn’t to your liking, Nvidia has made the GB10 platform available to its system partners with a bit of wiggle room for customization. Dell, Acer, Asus, Gigabyte, HP, Lenovo, and MSI have all created GB10 boxes of their own with small variations in power, cooling, storage, cosmetics, and remote management options. Those options are likely of most interest to corporate and institutional IT departments that already have preferred vendors and support contracts.

Nvidia makes it easy to integrate the Spark into your existing workflow in a number of ways. The preinstalled DGX OS is a lightly Nvidia-flavored version of Ubuntu 24.04 LTS. You can use it as a regular PC with keyboard, mouse, and monitor connected, or you can set it up headless and use the free Nvidia Sync app to SSH into the system from your Windows PC or Mac. Using tools like Tailscale, you can conceivably connect to your Spark from anywhere in the world.

Nvidia Sync is handy and easy to set up. As one example, I was able to leave ComfyUI running on the Spark and create a custom port forwarding rule that let me turn any PC in my house into a generative AI workstation with a single click. You can also set up Ollama for a private web chat interface or Cursor for AI-assisted coding in the same way.
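For readers who want to see what a port forwarding rule like that amounts to, here is a minimal Python sketch that builds the equivalent plain OpenSSH tunnel command. It makes no claim about how Nvidia Sync works internally. The user name and hostname are hypothetical placeholders, and `tunnel_command` is a helper invented for this example; 8188 is ComfyUI's default port.

```python
import shlex


def tunnel_command(user: str, host: str, local_port: int, remote_port: int) -> list[str]:
    """Build an OpenSSH command that forwards a local port to a service on the remote box."""
    return [
        "ssh",
        "-N",  # run no remote command; just hold the tunnel open
        "-L", f"{local_port}:localhost:{remote_port}",  # local port -> remote service
        f"{user}@{host}",
    ]


# Hypothetical account and hostname; substitute your own.
cmd = tunnel_command("me", "spark.local", 8188, 8188)
print(shlex.join(cmd))
```

Running the printed command on a client PC would make the Spark's ComfyUI instance reachable at `localhost:8188` on that client for as long as the SSH session stays open.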

As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.

Gururu UGLY. Should have wrapped it in snakeskin leather. I'm just going to say what everyone else is saying. Can you open it up and put a picture of the innards in the review?

Pierce2623 I noticed the headline mentions beating Strix Halo, but I’m not sure it’s much of an accomplishment for a $3000 mini pc to beat a $1500 mini pc. If it doesn’t nuke Strix Halo out of existence, then it’s pretty horrible value. Since it’s currently desktop only, it should be getting compared against ITX PCs of equivalent price. Personally, I’d be comparing it against a 9950x3d/5080 ITX system, since that’s equivalent pricing. Lastly, it’s a $3000 portable with no USB4/Thunderbolt? That’s next level greedy.

bit_user I'm pleasantly surprised by the analysis on Page 2. I expected to have some notes, but I think that analysis hit all of the main points. Memory bandwidth is indeed its Achilles heel. It's awesome for what was rumored to have been primarily a laptop chip, but it's got nothing on its true datacenter cousins.

The article said: ... the onboard ConnectX 7 NIC running at up to 200 Gbps. That exotic NIC ...

Yeah, it's where a big chunk of the cost comes from. I've seen street prices for that card running around $1500.

The article said: The preinstalled DGX OS is a lightly Nvidia-flavored version of Ubuntu 24.04 LTS.

Based on Nvidia's prior Jetson platforms, you're really stuck with this as the OS, whether you like it or not. I haven't heard how long Nvidia plans to support it, either. It's a pretty safe bet they'll move to 26.04, but who knows if they'll release anything beyond that for it?

The article said: The Spark idles at about 35 W as a headless system

Yes, and I think the ConnectX 7 NIC is a big part of that. Sad to see you didn't compare against the Ryzen AI Max 395+, here. Elsewhere, I've seen idle power of the Framework Desktop w/ Ryzen AI Max 395+ measured at a mere 12.5W.

It really is just a happy accident that AMD created Strix Halo (Ryzen AI Max), when they did. It wasn't designed to do local LLM workloads, but rather an answer to Apple's M-series Pro. The fact that it can hang so close to GB10 is mostly a testament to just how memory-bottlenecked both are, since the GB10 has way more AI compute horsepower.