
Gauging AI performance, especially for LLMs, is a broad discipline with a dizzying number of permutations thanks to the vast range of models, model runners, and possible workloads available.

We can’t possibly cover every one of those permutations in this article, but after extensive research and experimentation, we’re confident we can offer a useful perspective on what the Spark can do.

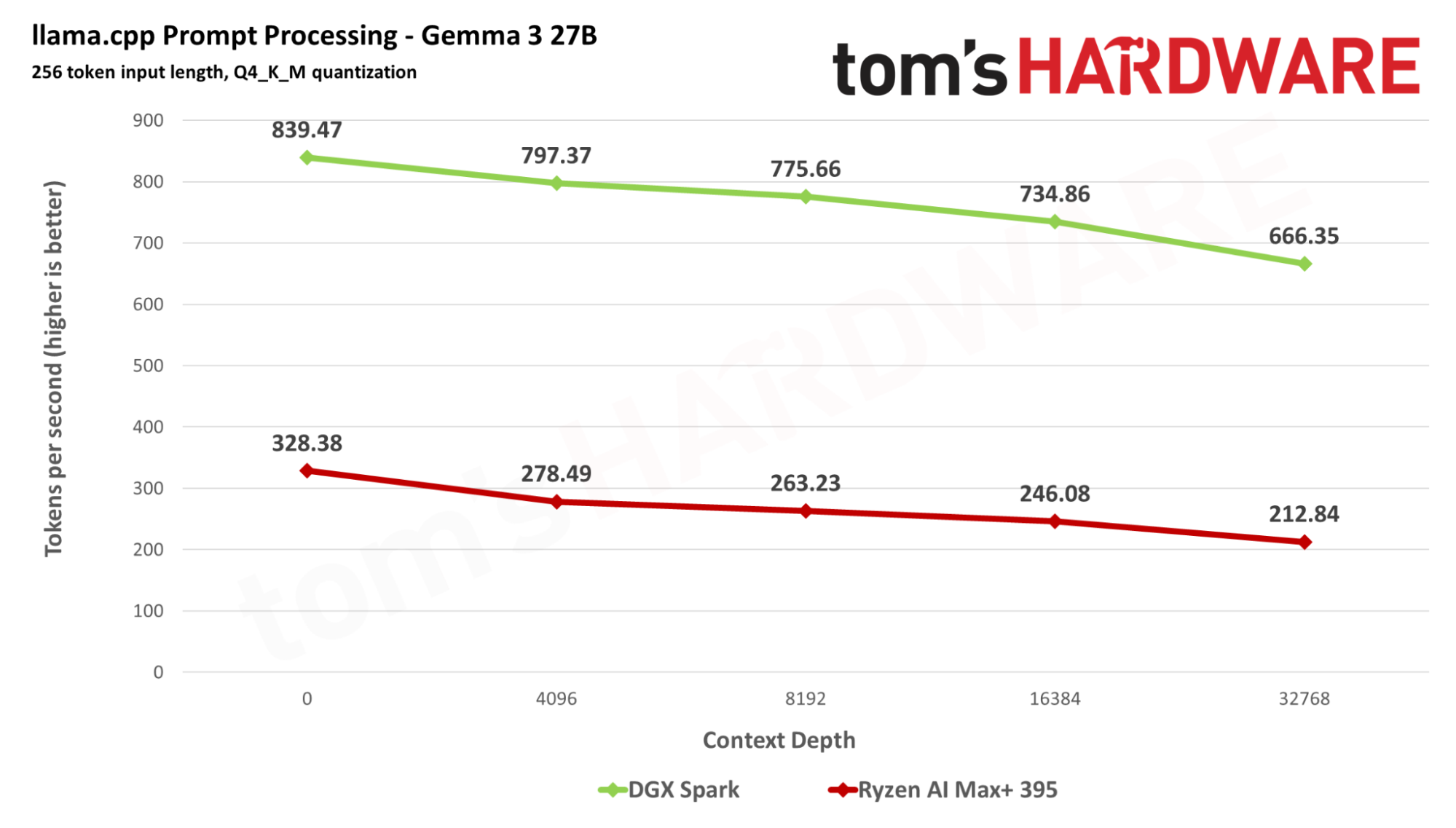

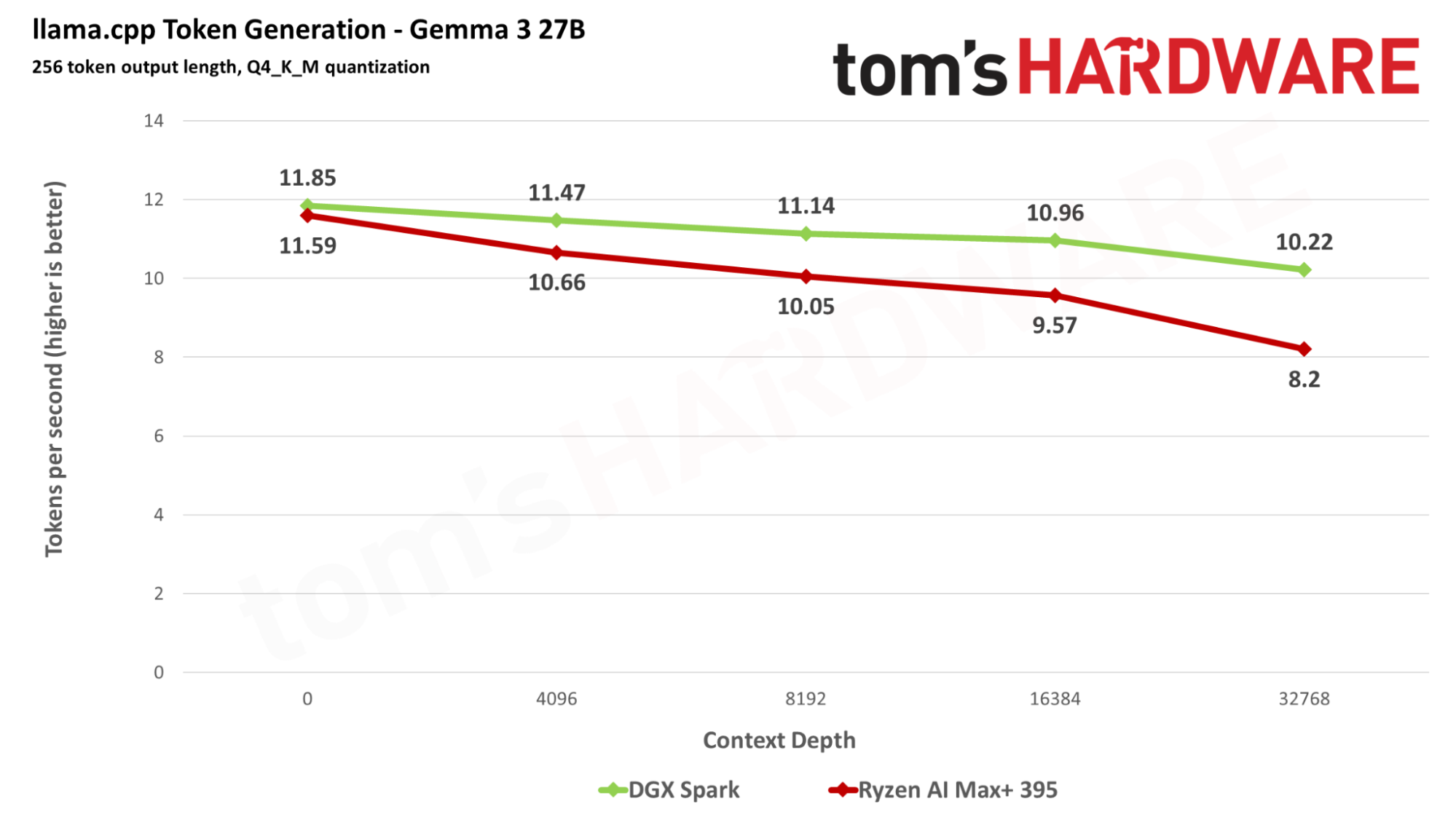

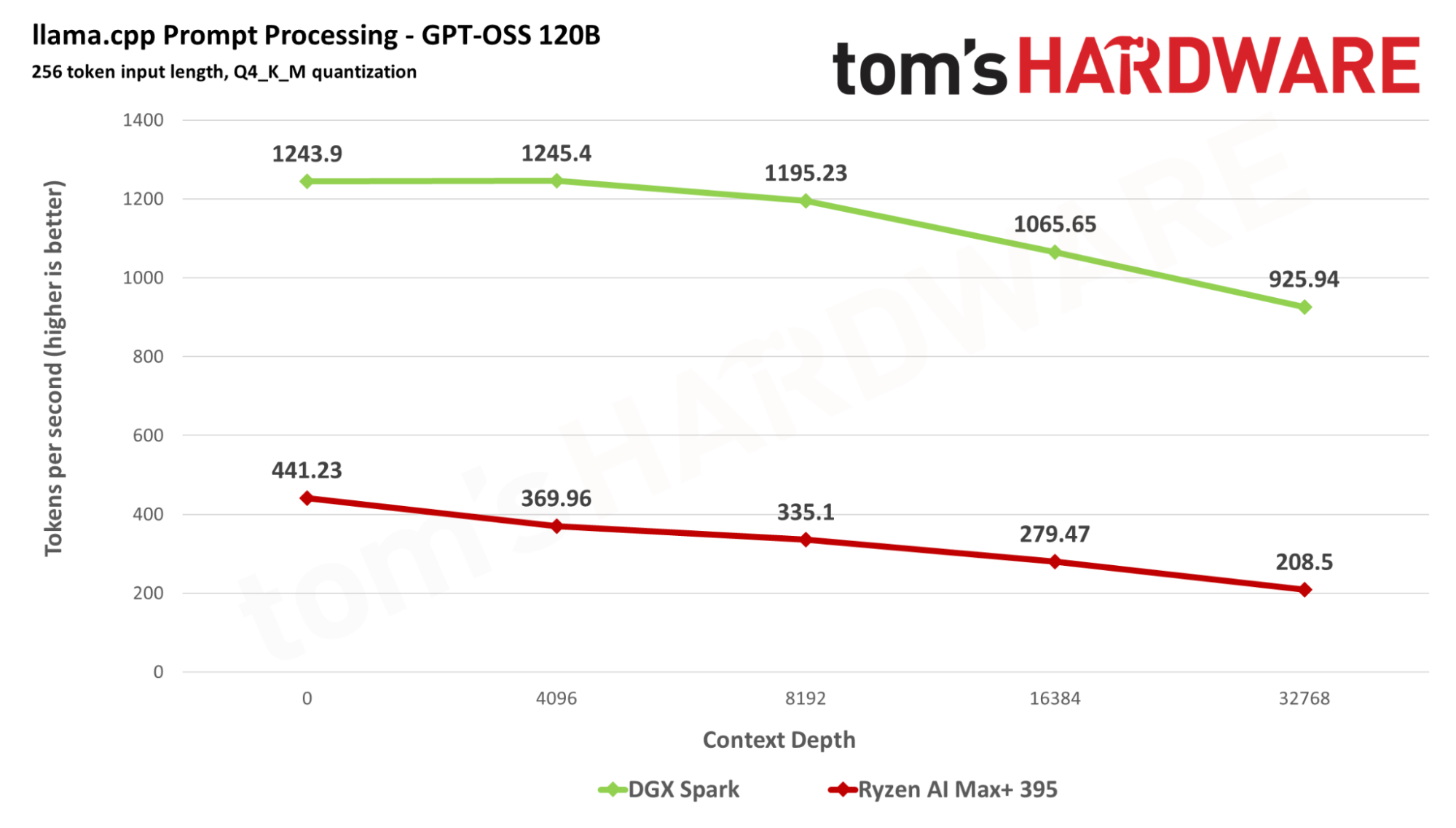

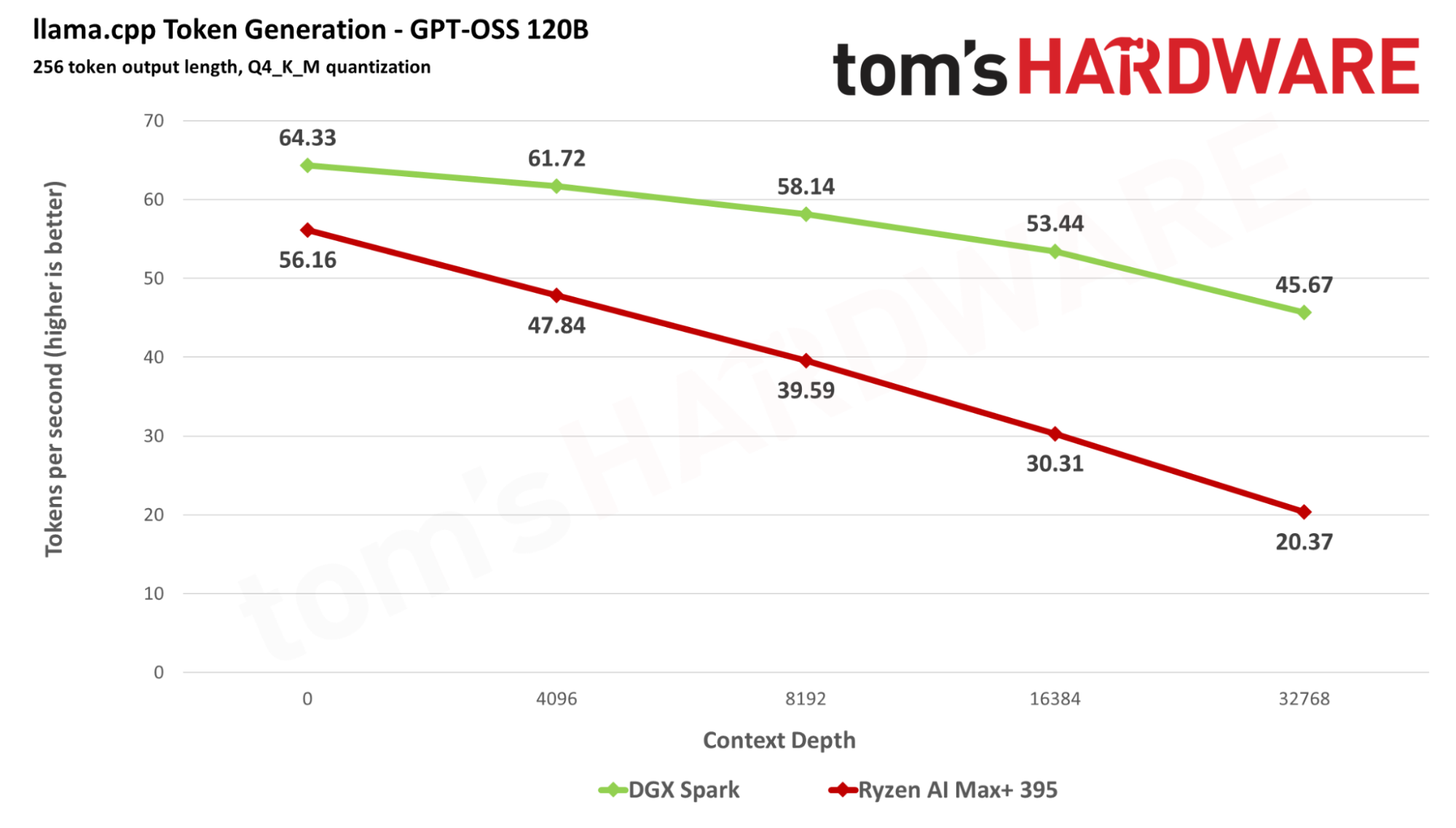

For comparison, we've benchmarked both the DGX Spark and a Ryzen AI Max+ 395 ("Strix Halo") platform in the form of Corsair's AI Workstation 300 mini-PC.

We’ll start with LLM inference performance, since that’s where most enthusiasts dipping their toes into local AI are focused nowadays. We chose llama.cpp as our inference engine because it runs on both of our test systems (and many others) and offers a well-documented, tunable benchmarking interface.

Other model runners, such as vLLM, SGLang, and Nvidia’s own Triton Inference Server, each have their own strengths, weaknesses, and performance characteristics, but we’re not comparing them against each other today.

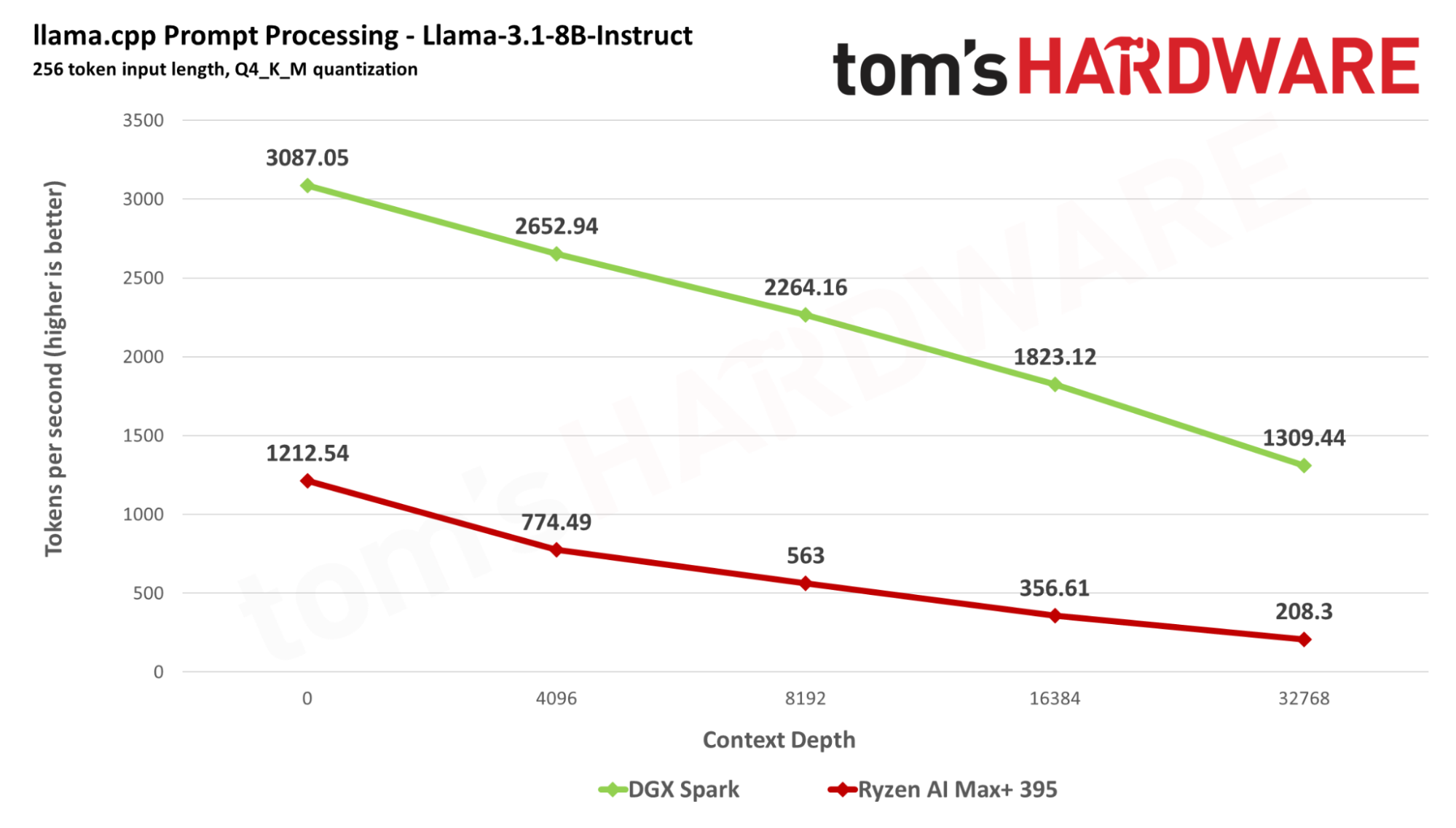

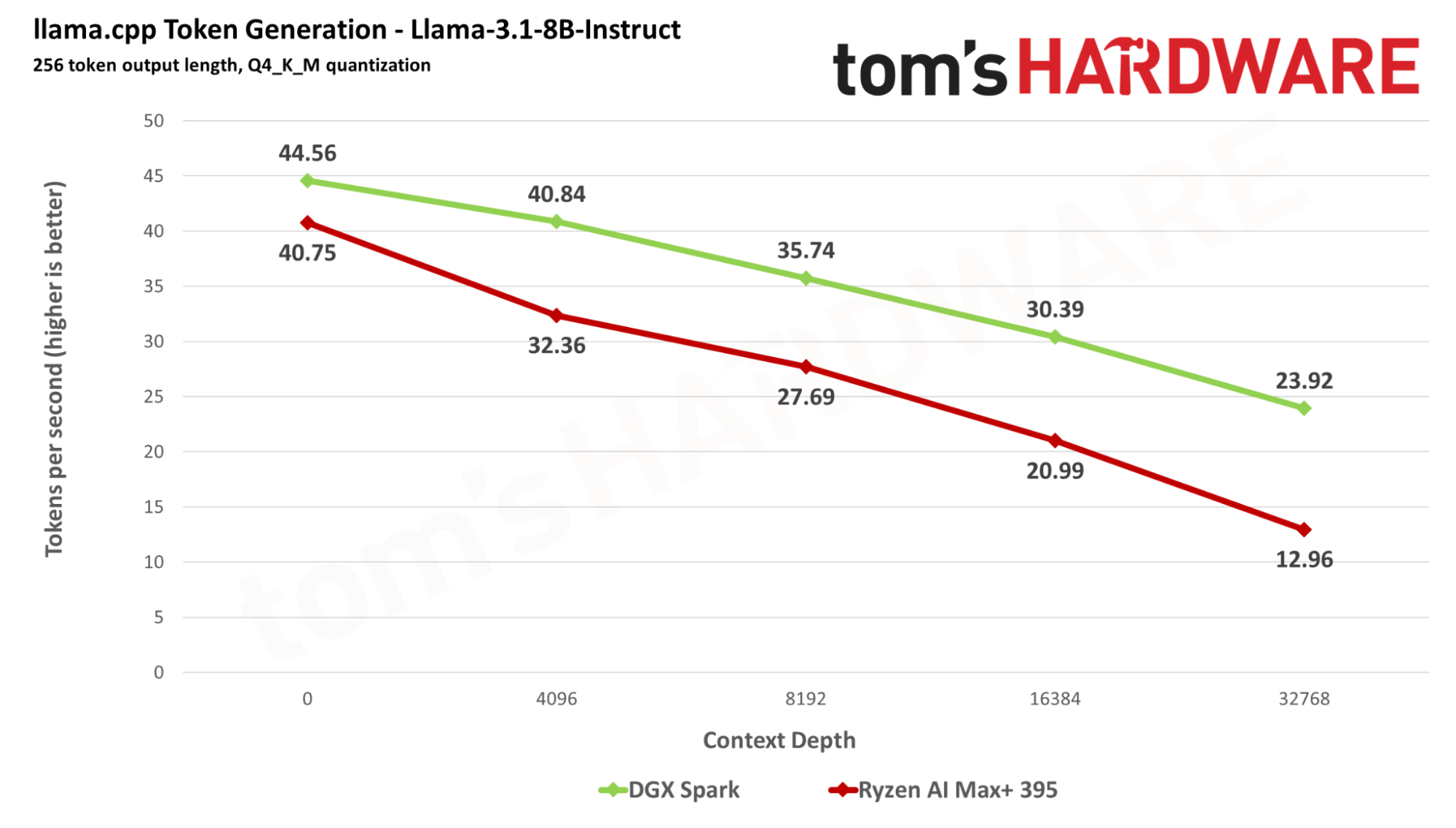

We benchmark two phases of LLM inference: prompt processing (also called prefill), in which the model ingests the input, and token generation (decode), in which it produces the output one token at a time.
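To see why both phases matter, here is a minimal sketch that treats each phase as running at a constant rate: prefill time determines the time to first token, and decode time is added on top. The function name `request_latency` and the throughput numbers are hypothetical placeholders, not our measured results, and real prefill and decode rates both fall as context grows.

```python
# Minimal two-phase latency model for a single LLM request.
# Assumption: prefill and decode each run at a constant rate. In practice both
# rates fall as the context grows. The rates below are hypothetical placeholders.


def request_latency(prompt_tokens: int, output_tokens: int,
                    prefill_tps: float, decode_tps: float) -> tuple[float, float]:
    """Return (time_to_first_token_s, total_time_s) for one request."""
    # Prefill: the whole prompt must be processed before the first output token.
    ttft = prompt_tokens / prefill_tps
    # Decode: output tokens are generated one after another.
    decode_time = output_tokens / decode_tps
    return ttft, ttft + decode_time


# Hypothetical example: an 8,192-token prompt and a 512-token answer,
# at 2,000 tok/s prefill and 40 tok/s decode.
ttft, total = request_latency(8192, 512, prefill_tps=2000.0, decode_tps=40.0)
print(f"time to first token: {ttft:.2f} s, total: {total:.2f} s")
```

With these made-up rates, the prompt costs about 4 seconds before anything appears on screen, and the 512-token answer adds almost 13 seconds more.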

We’ll start our benchmarks with Meta’s Llama 3.1 8B, a dense LLM (i.e., one in which every parameter is used for every token) whose behavior is well understood in the current AI landscape.


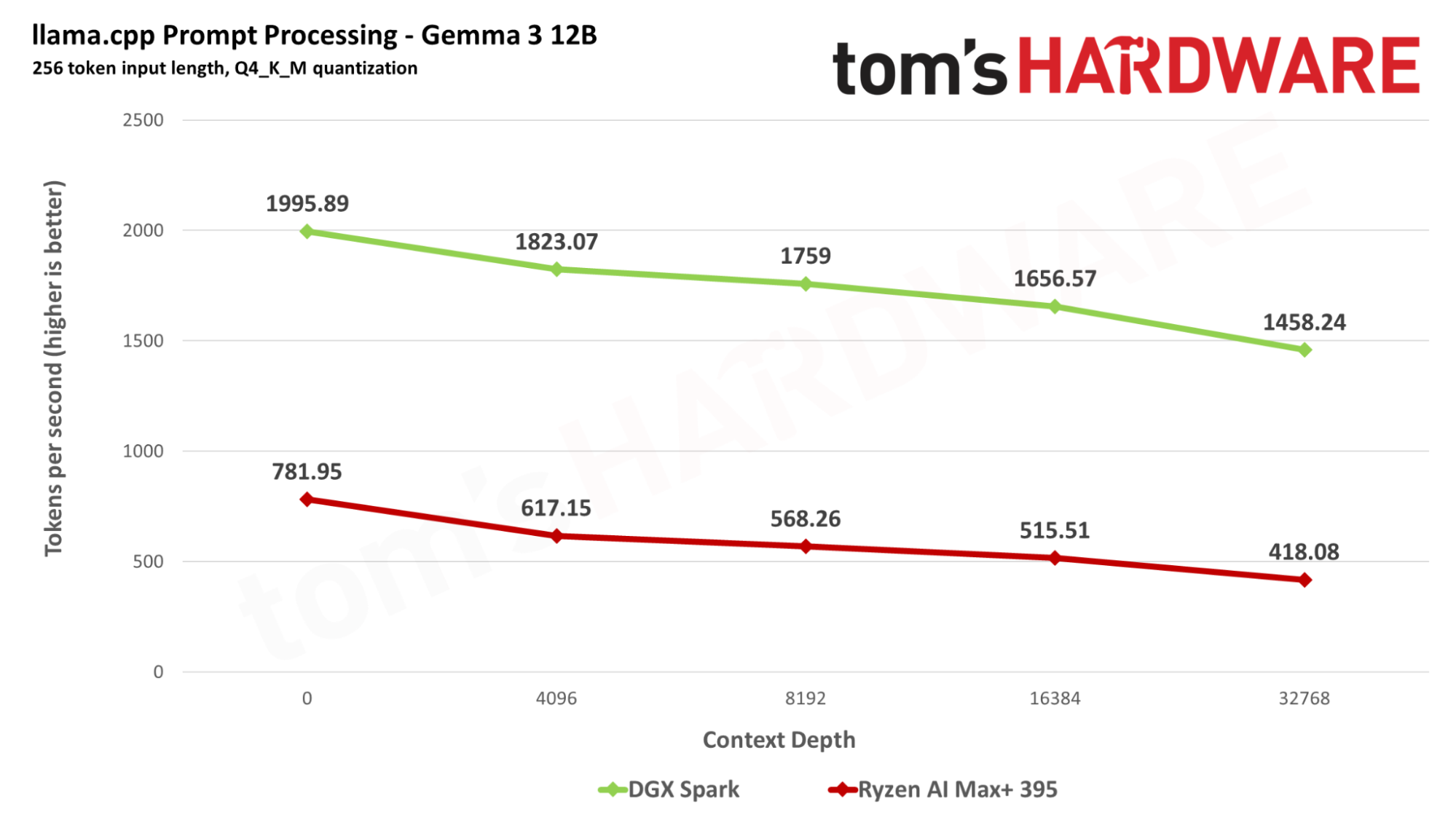

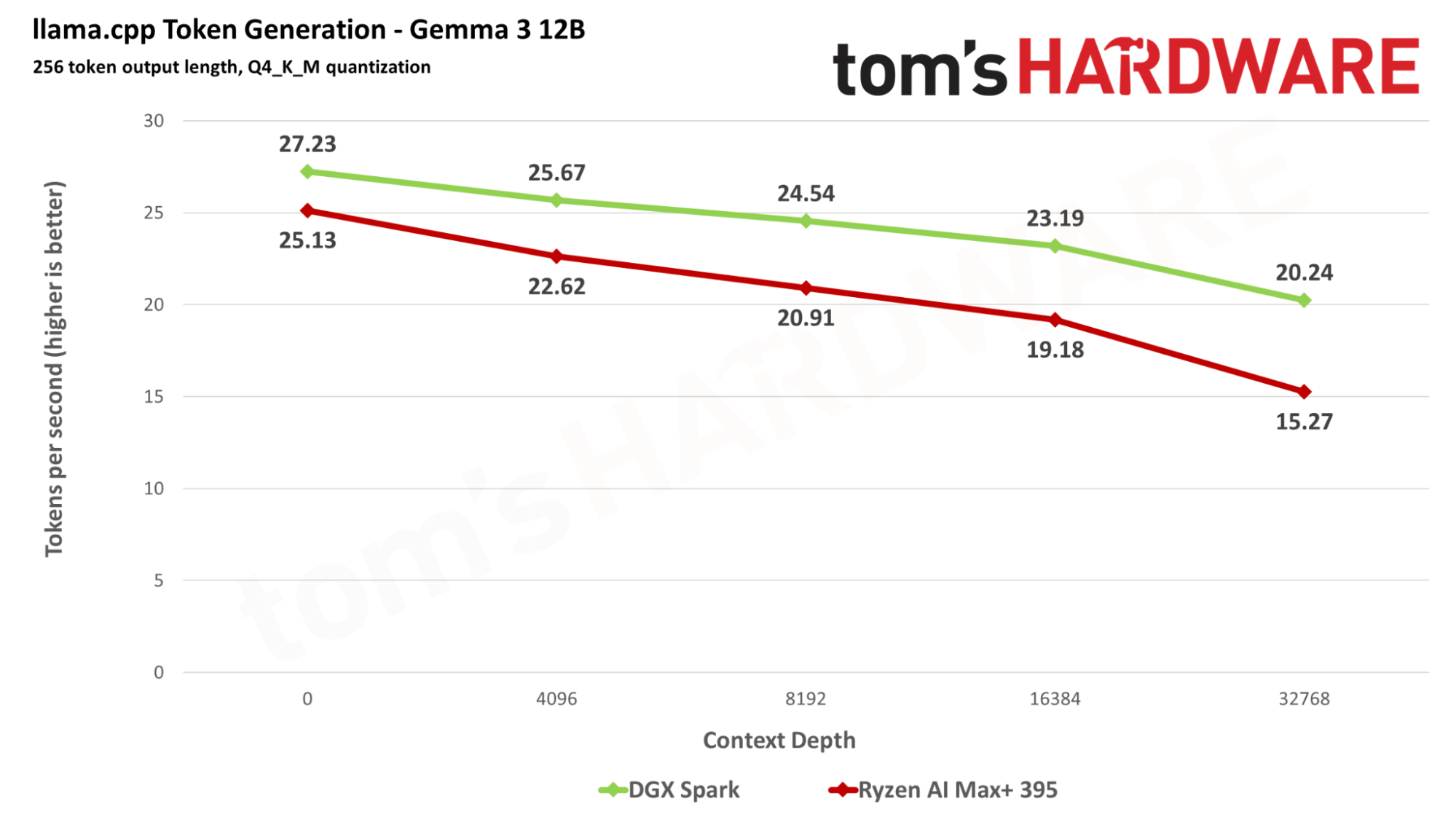

We extended our dense-model testing with Google’s Gemma 3 12B and 27B.

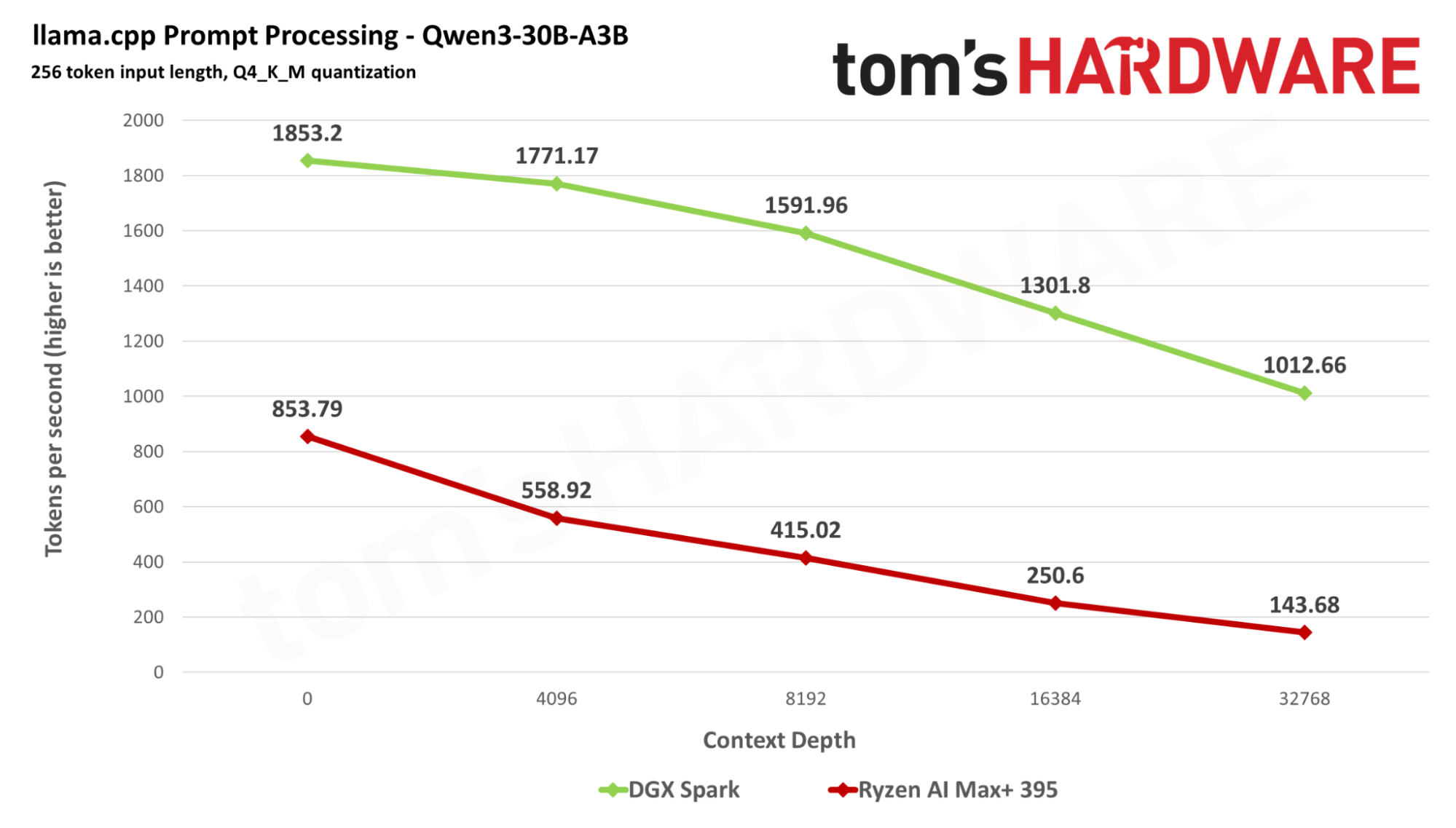

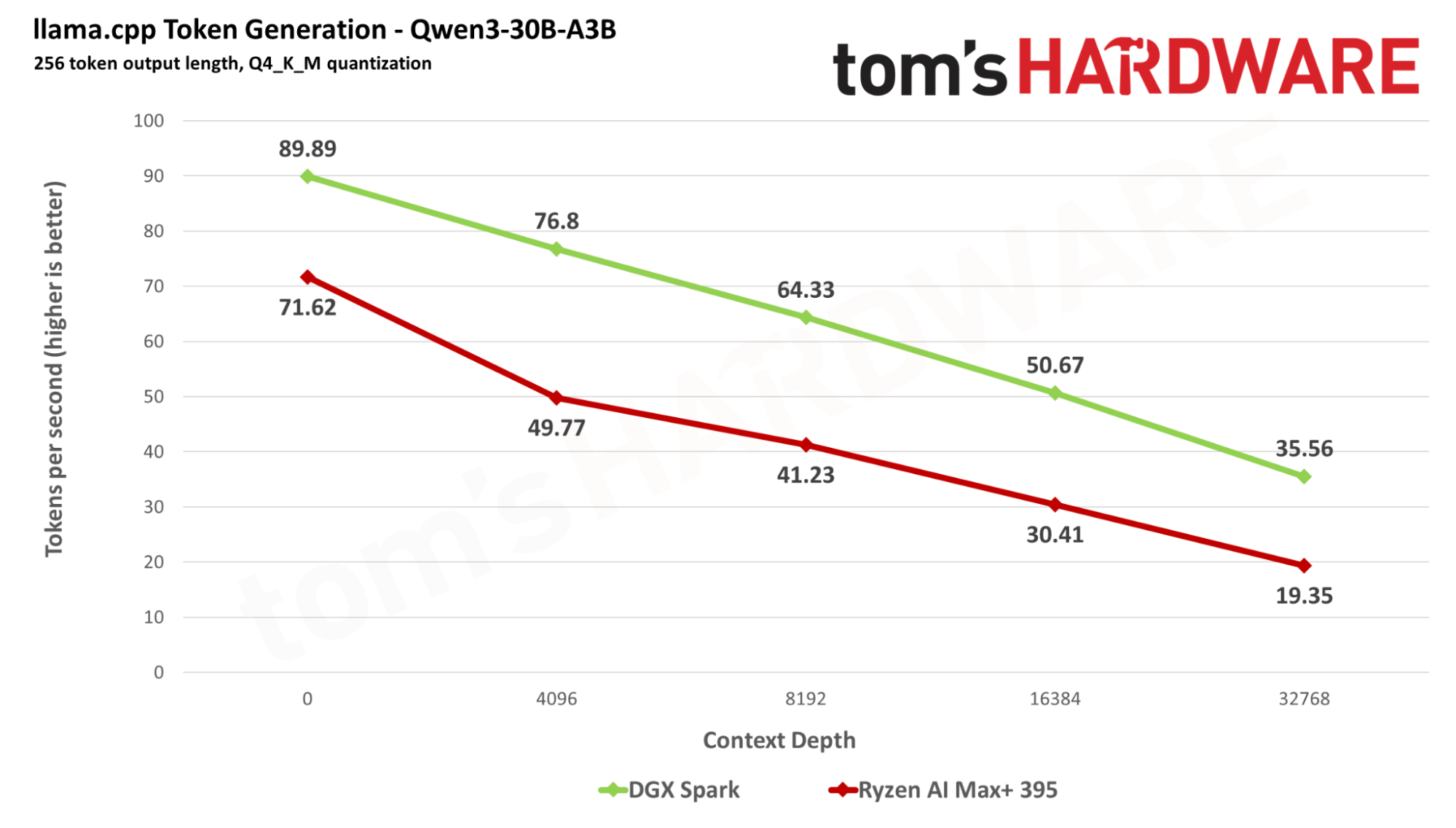

We’ll also look at performance with OpenAI’s gpt-oss-120b and Alibaba’s Qwen3-30B-A3B models, both of which use mixture-of-experts (MoE) architectures that are closer to the current state of the art in LLM design.

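MoE models can have massive total parameter counts, but only a portion of those parameters is activated for any given token, because a router sends each token to just a few “expert” subnetworks. That allows MoEs to balance capability and performance, although they can still require large amounts of memory, since every expert’s weights must stay loaded even though only a few run per token. gpt-oss-120b, for example, requires about 60 GB of RAM.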
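A back-of-the-envelope calculation shows the gap between what an MoE must hold in memory and what it actually reads per token. This sketch assumes approximate published parameter counts (about 117B total and 5.1B active for gpt-oss-120b, about 30.5B total and 3.3B active for Qwen3-30B-A3B) and a uniform ~4.25 bits per weight; it ignores the KV cache, activations, and runtime overhead, so real footprints are larger. The `Model` class and `weight_gb` helper are hypothetical names for illustration.

```python
# Back-of-the-envelope weight memory for MoE models.
# Assumptions: approximate published parameter counts, a uniform ~4.25 bits per
# weight (4-bit values plus per-block scales), and no KV cache or runtime overhead.
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    total_params_b: float   # every parameter that must sit in memory, in billions
    active_params_b: float  # parameters actually used for each token, in billions


def weight_gb(params_b: float, bits_per_weight: float) -> float:
    """Billions of parameters x bits per weight / 8 bits per byte = gigabytes."""
    return params_b * bits_per_weight / 8


BITS_PER_WEIGHT = 4.25

MODELS = [
    Model("gpt-oss-120b", total_params_b=117.0, active_params_b=5.1),
    Model("Qwen3-30B-A3B", total_params_b=30.5, active_params_b=3.3),
]

for m in MODELS:
    resident = weight_gb(m.total_params_b, BITS_PER_WEIGHT)
    per_token = weight_gb(m.active_params_b, BITS_PER_WEIGHT)
    print(f"{m.name}: ~{resident:.1f} GB of weights in memory, "
          f"~{per_token:.1f} GB touched per token")
```

Under these assumptions, gpt-oss-120b needs roughly 62 GB just for its weights, in the same ballpark as the ~60 GB figure above, yet each token touches less than 3 GB of them.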

Generally speaking, the Spark’s greater raw compute makes it much faster than Strix Halo at prompt processing, so your time to first token will usually be lower on the Spark, especially as context length grows. (For reference, a context length of 32,768 tokens is about 25,000 words.)
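The tokens-to-words figure follows from a common rule of thumb of roughly 0.75 English words per token. The helper below makes that conversion explicit; the 0.75 ratio is an assumption that varies with the tokenizer, the language, and the kind of text, and `tokens_to_words` is a hypothetical name.

```python
# Rule-of-thumb conversion from a token budget to English words.
# Assumption: ~0.75 words per token; the true ratio depends on the tokenizer,
# the language, and the kind of text.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in `tokens` tokens."""
    return round(tokens * words_per_token)


for context_length in (4096, 8192, 16384, 32768):
    print(f"{context_length:>6} tokens ~ {tokens_to_words(context_length):>6,} words")
```

At 32,768 tokens this gives 24,576 words, which rounds to the 25,000-word figure above.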

Once the tokens start flowing, the Spark is generally faster than Strix Halo at churning them out, too, especially at long context lengths.

The two platforms come closest with the dense Gemma 3 27B model, where both GB10 and Strix Halo seem to struggle with the sheer number of parameters active for each token. In every other test, though, the Spark holds the lead at every context depth.
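One plausible explanation for the close Gemma 3 27B results is memory bandwidth. If token generation is bandwidth-bound, every new token requires streaming all of the model’s active weights from memory, so decode speed is capped at roughly bandwidth divided by the bytes read per token. This sketch plugs in the commonly quoted peak bandwidths (273 GB/s for GB10, 256 GB/s for Strix Halo) and an assumed ~4.25 bits per weight. The results are theoretical upper bounds, not our measurements, and they ignore KV-cache traffic and compute limits; `decode_ceiling_tps` is a hypothetical helper.

```python
# Rough ceiling on decode speed if token generation is memory-bandwidth bound.
# Assumptions: each token streams every active weight from memory once; ~4.25 bits
# per weight; peak bandwidth figures are spec-sheet numbers, not measurements.
# KV-cache reads, compute limits, and kernel efficiency are ignored, so real
# throughput will be lower than these bounds.


def decode_ceiling_tps(bandwidth_gbs: float, active_params_b: float,
                       bits_per_weight: float) -> float:
    """Upper bound on tokens/s: bandwidth (GB/s) / weight bytes read per token (GB)."""
    gb_per_token = active_params_b * bits_per_weight / 8
    return bandwidth_gbs / gb_per_token


PEAK_BANDWIDTH_GBS = {"DGX Spark (GB10)": 273.0, "Strix Halo": 256.0}
ACTIVE_PARAMS_B = {"Gemma 3 27B (dense)": 27.0, "Qwen3-30B-A3B (MoE)": 3.3}
BITS_PER_WEIGHT = 4.25

for platform, bandwidth in PEAK_BANDWIDTH_GBS.items():
    for model, active in ACTIVE_PARAMS_B.items():
        ceiling = decode_ceiling_tps(bandwidth, active, BITS_PER_WEIGHT)
        print(f"{platform:>16} | {model:<19} | <= {ceiling:6.1f} tok/s")
```

Under these assumptions, the dense 27B model tops out at roughly 18 to 19 tokens per second on either machine, a difference of only about 7%, while the MoE model’s ceiling is well above 100 tokens per second on both.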

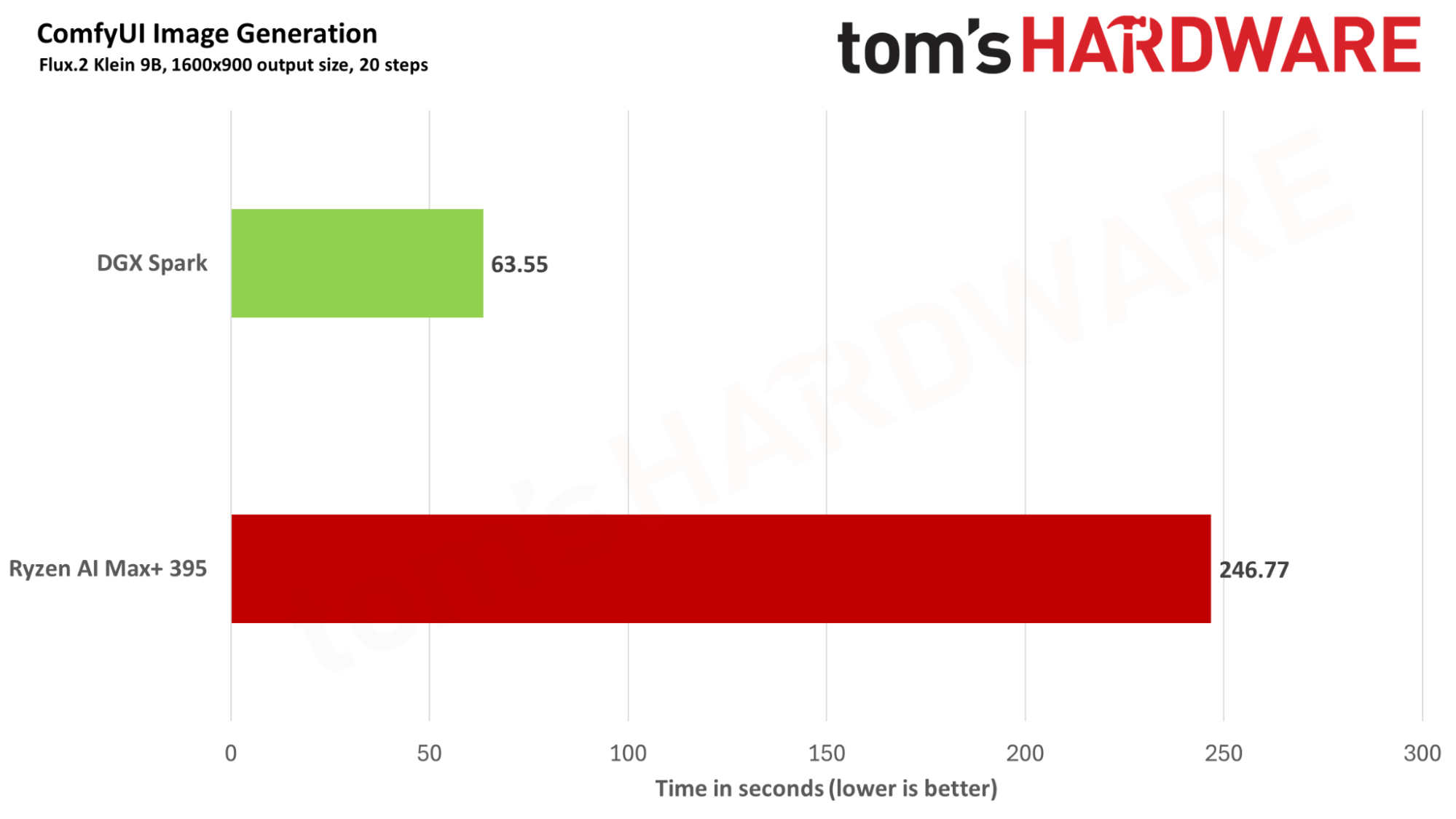

LLMs are just one use for AI compute, of course. Nvidia also positions the Spark as a companion accelerator for creative users who primarily work on laptops or Macs but need to offload tasks like image generation to a more powerful or versatile GPU without upgrading their entire system or migrating to an unfamiliar platform.

We tested the latest Flux.2 Klein 9B image generation workflow in ComfyUI, using the same random seed as a starting point on both systems. After the workflow’s first load, the Spark generated each iteration of our target image in just over 60 seconds, while the Strix Halo platform took a whopping four times as long.

If fast iteration on generated images matters to you, the Spark can handle practically any image model thanks to its generous VRAM pool. Discrete GPUs can be much faster still, but you have to match their available VRAM to your workload. And have you tried to buy anything more powerful than an RTX 5070 recently?

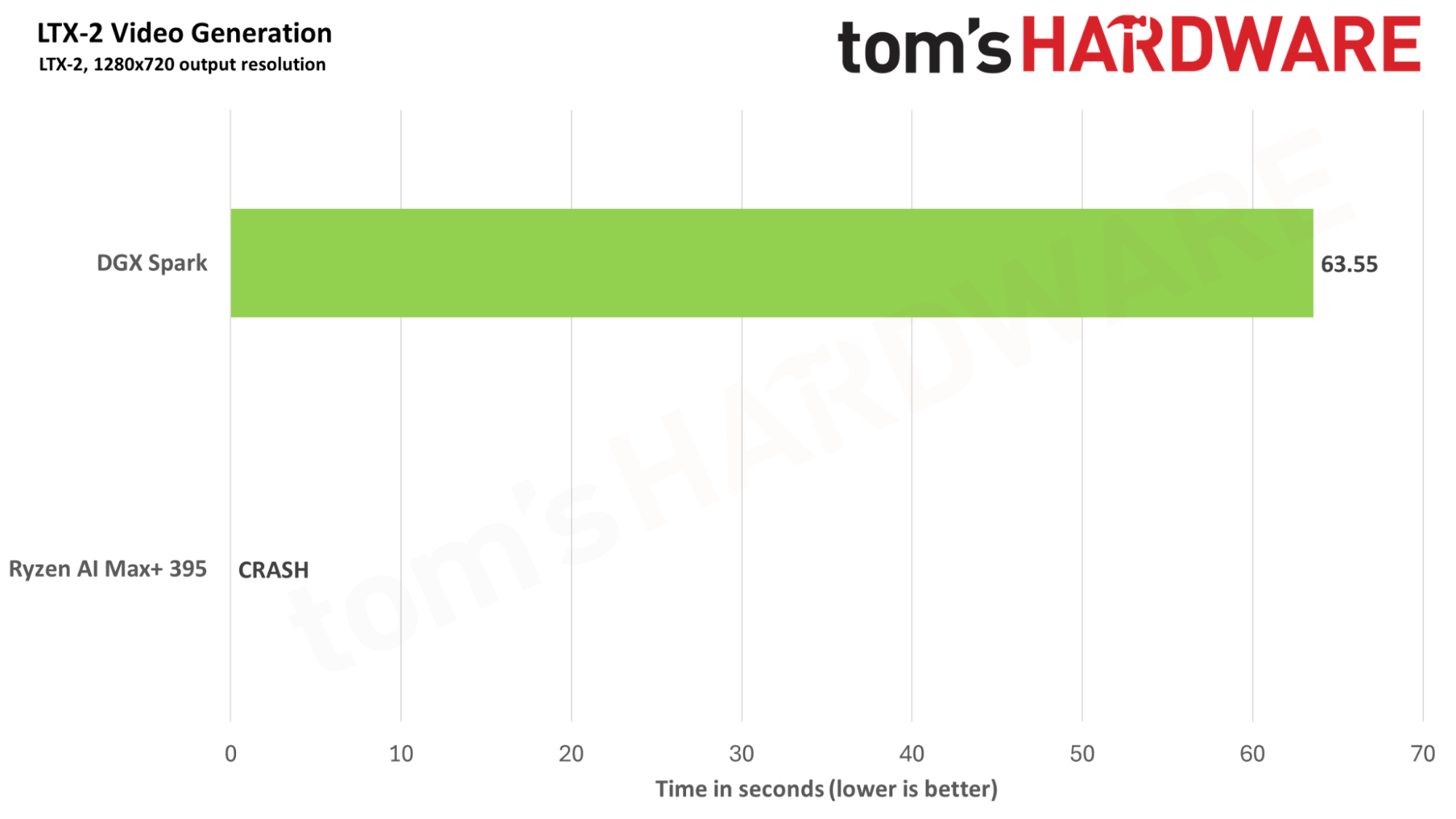

The brand-new LTX-2 video generation model also worked flawlessly on the Spark via ComfyUI as soon as I loaded it. After the first load, I was able to generate each iteration of a short 720p video locally in just over three minutes.

Even with the latest ROCm 7.1.1 stack, however, trying to run the same LTX-2 workflow on our Strix Halo system resulted in HIP errors that would hang ComfyUI or crash the entire GNOME desktop environment.

That experience emphasizes a major advantage of the Spark as of this writing: stuff just works. If you need to try a particular workflow, you can generally rest assured that the Spark will run it on day one.

AMD has repeatedly and publicly committed to making ROCm a better AI platform from the desktop to the data center, but that improvement will take time, and the company clearly has its work cut out for it in this department.


As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.


Gururu: UGLY. Should have wrapped it in snakeskin leather. I'm just going to say what everyone else is saying. Can you open it up and put a picture of the innards in the review?

Pierce2623: I noticed the headline mentions beating Strix Halo, but I’m not sure it’s much of an accomplishment for a $3000 mini pc to beat a $1500 mini pc. If it doesn’t nuke Strix Halo out of existence, then it’s pretty horrible value. Since it’s currently desktop only, it should be getting compared against ITX PCs of equivalent price. Personally, I’d be comparing it against a 9950x3d/5080 ITX system, since that’s equivalent pricing. Lastly, it’s a $3000 portable with no USB4/Thunderbolt? That’s next level greedy.

bit_user: I'm pleasantly surprised by the analysis on Page 2. I expected to have some notes, but I think that analysis hit all of the main points. Memory bandwidth is indeed its Achilles' heel. It's awesome for what was rumored to have been primarily a laptop chip, but it's got nothing on its true datacenter cousins.

The article said: "... the onboard ConnectX 7 NIC running at up to 200 Gbps. That exotic NIC ..."

Yeah, it's where a big chunk of the cost comes from. I've seen street prices for that card running around $1500.

The article said: "The preinstalled DGX OS is a lightly Nvidia-flavored version of Ubuntu 24.04 LTS."

Based on Nvidia's prior Jetson platforms, you're really stuck with this as the OS, whether you like it or not. I haven't heard how long Nvidia plans to support it, either. It's a pretty safe bet they'll move to 26.04, but who knows if they'll release anything beyond that for it?

The article said: "The Spark idles at about 35 W as a headless system"

Yes, and I think the ConnectX 7 NIC is a big part of that. Sad to see you didn't compare against the Ryzen AI Max+ 395 here. Elsewhere, I've seen idle power of the Framework Desktop w/ Ryzen AI Max+ 395 measured at a mere 12.5W.

It really is just a happy accident that AMD created Strix Halo (Ryzen AI Max) when they did. It wasn't designed for local LLM workloads, but rather as an answer to Apple's M-series Pro. The fact that it can hang so close to GB10 is mostly a testament to just how memory-bottlenecked both are, since the GB10 has way more AI compute horsepower.

kealii123: Pierce2623 said: "I noticed the headline mentions beating Strix Halo, but I’m not sure it’s much of an accomplishment for a $3000 mini pc to beat a $1500 mini pc. ..."

I'd recommend reading the entire article/review.

kealii123: Now I'm super curious how this chip is going to perform in the rumored consumer-oriented laptop that's supposedly shipping soon.

kealii123: I wish the review included the mentioned comparable Mac Studio in the benchmarks section.