AMD ROCm CES 2026 press Q&A roundtable transcript — 'ROCm from 2023 is completely unrecognizable to ROCm today' company details, as it seeks to break down barriers to AI development

ROCm and sock 'em.

At CES 2026, we had the opportunity to sit down in Las Vegas, Nevada, with key AMD representatives on the future of their hardware stack. One of the most pertinent topics was AMD's ROCm software stack for accelerated computing.

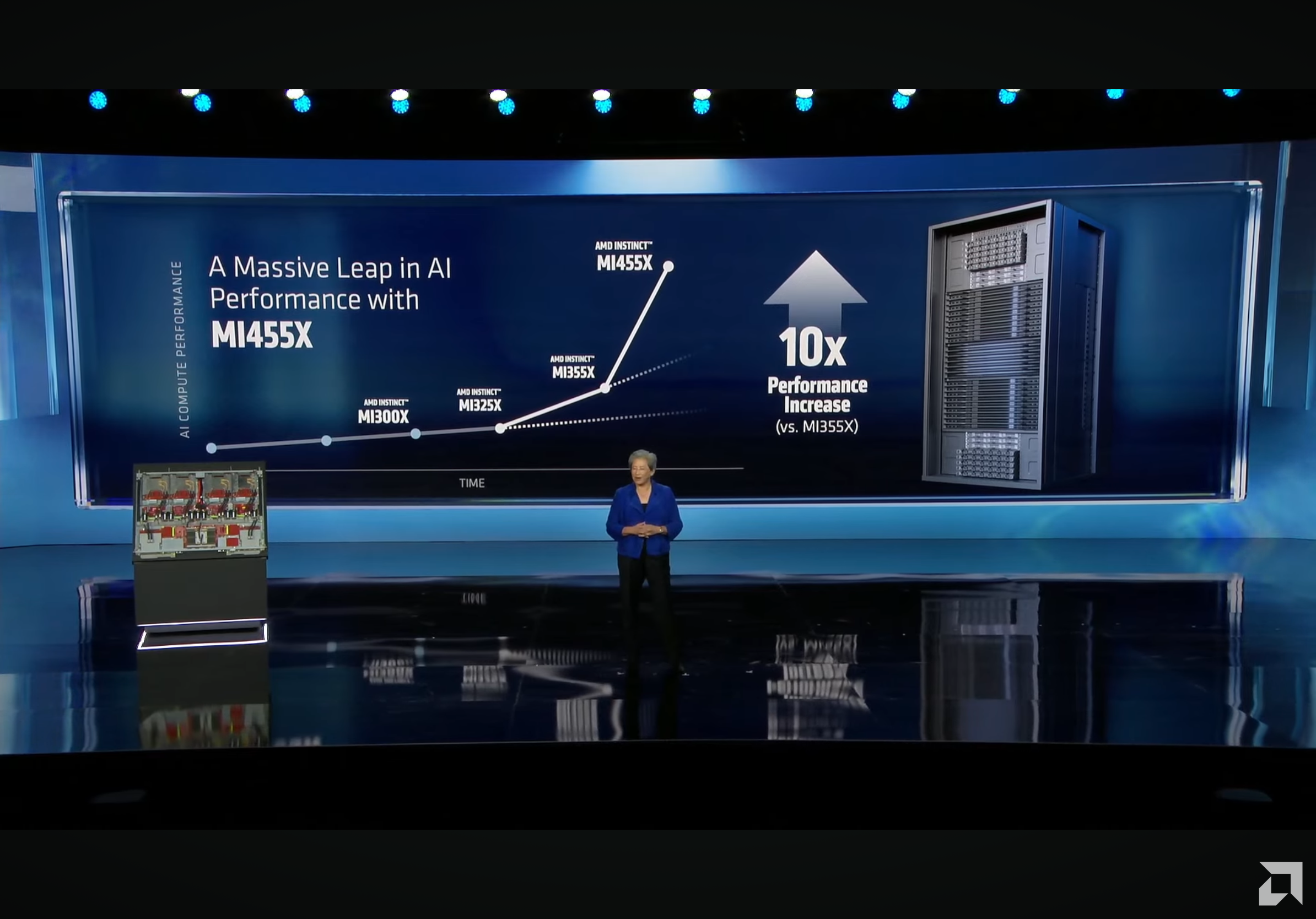

With all of the eyes of the world on boosting AI performance, this is a crucial avenue for AMD, as Radeon Open Compute is a part of the backbone for its Instinct AI accelerators and powers performance in key AI workloads such as inference. AMD also discusses FSR 4, among other subjects, in this roundtable interview we attended at CES 2026.

Before we begin, some elements of the transcript have been edited for flow and clarity. As some speakers did not name themselves, we have identified them as AMD representatives and Journalists in the following passages.

To catch up on our other transcripts, check out our Intel Panther Lake Q&A, our FSR Redstone Q&A, and our roundup of Jensen Huang's Q&A. Ahead of reading this transcript, be sure to catch up on AMD's keynote below.

AMD Representative: So just in terms of introduction, I think you might know already, but Andrej is the chief software officer, so the head software guy at AMD, and I'm the product manager, among other things, for the consumer side, the client side, so Radeon, and stuff like that. Like Adrenalin, that's kind of my baby in terms of roadmap and stuff like that.

And so we wanted the opportunity. We told Stacey, anyone wants to talk to us about software? We love software, obviously. The key thing, of course, is, as you noticed in the keynote, it's a lot about AI, and I was actually complaining to Andrej saying, "I'm the gaming, I'm the fun stuff!" We still do a lot on that side as well, but the key thing and why Andrej and I are here is because we're going to introduce something in Adrenalin in the next couple of weeks, and you guys will probably get the decks and all that kind of stuff.

Andrej Zdravkovic (AMD): I think it was released.

AMD Representative: The whole press deck? Just the high-level messaging; I think just the high-level messaging. Anyways, the point is, you'll get some information on this, what we call an "AI bundle." So, at a super high level — not to give away everything, but — at a super high level, we're going to make AI development easier on client machines, and the way we're going to do that is, within Adrenalin, we're going to have purely optional installation and configuration of a bunch of AI tools, and apps, and stuff like that, to let users get over the intimidation factor of the basic consumer saying, "No, I don't know what this stuff is. I'm intimidated." I'm not talking about the enthusiasts that you guys probably write to; I'm talking about the non-enthusiasts that are extremely intimidated and never click anything other than just "next."

So that's kind of the super high-level philosophy of what we're doing. It's the vision of AI everywhere on AMD, whether it's the most advanced data centers or the most basic client laptop there is, and it's a way of encouraging the world to — or users, at least, to understand, and not encourage but accommodate their journey if they want to explore that kind of stuff. So that's kind of the 30-second pitch.

Andrej Zdravkovic (AMD): That's a great pitch. The way we look at that is, of course, Terry and I, and you guys, we are techies. You end up playing with stuff, exploring, researching everything. There is a community out there that we want to kind of introduce to AI without introducing too much complexity, because I — part of my role as Chief Software Officer is also the role of making sure that we use AI in all aspects of AMD, and we do that quite a lot in software; we're probably right now improving our productivity maybe 25 to 30% with just the AI tools and stuff, and I do a lot of keynotes and various conferences about that.

So the standard question at some conferences, they're not completely technical, that I try to do is I ask them, "How many of the attendees use some AI tool five times a day?" Ten or fifteen people come up. Then I ask them, "How many times you actually start a browser, like Google Chrome or something like that?" And of course, everybody raises up. Did they use Gemini by doing that? But they don't know.

So the way to kind of introduce AI and get over this huge thing of, "I'm afraid that my data will be out there," "Big Brother is watching," or whatever — doesn't really matter whether it's true or not — is a huge barrier to entry, we believe. So I'm very much into — we have ROCm, we have the big MI systems, like 455, running phenomenal inference, phenomenal training, but you want to go to a little guy, and they're saying, "I'm afraid of all that, and I don't know what to do so," and they have their Windows notebook. So what we want to do is to get the Windows notebook guy to be able to start using LLMs, to start using [image generation] and video design in the privacy of their computer. And that's why we moved ROCm to basically Ryzen Max or any, actually, of the new Ryzen platforms on both Linux and Windows. I like this, making this happen, so — sorry; this is an interruption. Your question?

Journalist 1: So I remember the high-level slides, there was a comment about ROCm 7.2 becoming a "common platform." Does that mean that the binaries between Windows and Linux — is that the same binary between Windows and Linux, for ROCm?

Andrej Zdravkovic (AMD): Yes. Well, the same binary is — well, the operating systems are different, so the underlying kernel-level stuff needs to be different, since they're so different, but it is the same source, compiled for Windows and for Linux. It's kind of interesting — I don't know if that's us or you, but we tend to go into these numbers, like ROCm 7.2, and there was ROCm 7, and 6.5; there will be a ROCm 8. I don't think it really matters very much. I think what matters is which functionality that is offered, and which — each and every release is going to give you a little bit more functionality. What we are trying to do is to link that functionality on the big system to functionality on the PC to functionality on each individual platform.

Journalist 1: You actually just preempted one of my questions with the different GFX versions. So Strix Halo being 1150 versus RDNA 4 being GFX1200, versus MI350 being GFX950; these are all disparate, different IPs with different capabilities, and something that you guys don't have is a — at least, that's mainline/mainstream, at the moment, is a [NVIDIA] PTX equivalent. So you can't just write a program on Strix Halo and run it on MI350 because of stuff like different matrix instructions. What are you guys doing to improve that beyond just AMDGCN and SPIR-V?

Andrej Zdravkovic (AMD): Well, at this point in time, you're right; this is a recompile for the target product. When we devised ROCm as — the idea was, we want the fastest possible way from the application, from the user, to the hardware, and any introduction of the intermediate layer, like SPIR-V or PTX, to a certain extent, slows down that path. When we designed ROCm, it was originally designed for high-performance computing. We had in mind systems like El Capitan; so these huge research machines where, it doesn't really matter whether you're going to compile or not, it's — once you do it, you then solve the weather patterns for North America.

So, as it started from that and started expanding, we are looking at various options. SPIR-V is one of the options to actually get that portability. At this point in time, we're just not offering — I think we are looking at what the right options are. We found that at the user level. So what we're trying to do right now on Windows and Linux, with the AI bundle. The idea is you have your Ryzen Max notebook, or you have the new, what's the name?

AMD Representative: The Halo box.

Andrej Zdravkovic (AMD): The Halo box. Sorry, I'm an engineer; I don't make product names. So you will be able to have the system that is pre-configured for either Linux or for Windows, with the right version of ROCm already installed. So we will abstract the difference for somebody who is going to use it in the application layer. But yes, if you are programming at the ROCm layer, it will still require recompile for some time. Great question, it's just, I don't have a perfect answer. I wish I had, but it is always a race, and the decision: what is more important? Because the resources are limited, and our technology is moving fast, and the use of our technology moves fast. So somewhere in between, you have this layer of ROCm and everything, and you have to make the decisions. What is the most important at this point?

Journalist 1: If you want my opinion on it, it would be ease of development for developers to develop on AMD, and recompiling is a barrier to that, especially if you're developing on — let's say, you're a startup, and you're developing on an MI300 box, then deploying to Strix Halo users, that's — you're going to hit sort of a barrier there in terms of your deployment. So that's why I was bringing it up.

Andrej Zdravkovic (AMD): Yeah, at minimum, we have one step, and it does happen sometimes that you have to touch up the code. Well, you probably don't know, but I actually used to be an engineer. So nobody allows me to code anything anymore, but I tend to know how things work reasonably well. So yeah, I agree, and thank you for that. But again — that's the balance of providing the next level, maybe the next format, or the next something, versus pausing to enable that commodity, which we at this time haven't finished yet.
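
Ed. note: For readers unfamiliar with what "a recompile for the target product" means in practice, here is a minimal sketch. It assembles a single `hipcc` command line that requests code for more than one GPU target through repeated `--offload-arch` flags, using two of the GFX names mentioned in the question above. The helper name `hipcc_command` and the file names are hypothetical, and whether a given ROCm release can actually build for each target is an assumption to check against AMD's documentation. The script only builds and prints the command; it does not run the compiler.

```python
import shlex


def hipcc_command(source, output, targets):
    """Build a hipcc invocation that requests one code object per GPU target.

    `source` and `output` are hypothetical file names; `targets` is a list of
    gfx architecture names such as "gfx950".
    """
    if not targets:
        raise ValueError("at least one gfx target is required")
    cmd = ["hipcc"]
    for target in targets:
        # Each flag asks the compiler to emit device code for one architecture.
        cmd.append(f"--offload-arch={target}")
    cmd += ["-o", output, source]
    return cmd


# Illustrative only: two of the targets named in the roundtable.
command = hipcc_command("saxpy.hip", "saxpy", ["gfx950", "gfx1200"])
print(shlex.join(command))
```

Adding a new target means adding one more entry to the list and rebuilding, which is the extra step the journalist describes as a barrier when developing on one GPU family and deploying to another.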

Journalist 2: In the bundle, the AI bundle, is that focused more on developers or focused more on, like, an end user?

Andrej Zdravkovic (AMD): We're talking about a two-step approach. The first one right now, the one that we're releasing, is released on Windows, and on Windows, you basically have an option as you download the new Adrenalin driver for your notebook, to click there saying that [you want the optional AI bundle.] What you're going to get there is PyTorch with ComfyUI on top of it, you're going to get Amuse, you're going to get LM Studio, and you're going to get Ollama. The idea is that it kind of does a little bit of a balance. Because, of course, Amuse and ComfyUI are more creator or entertaining type of applications, and then you have LM Studio, a little bit more user-friendly, and then Ollama, a little bit more developer-friendly.

So, on top of the PyTorch, you can actually load the models and train some of the models; memory is quite high. As we move forward and as we are looking at the Ryzen Halo and the new box, there is the approach of Windows, where I think we need to go with the balance of user and developer, because that's — Windows is not the most-used developer platform in the AI world; we know that. I mean, there is a Windows and gaming guru in our team. But as you go on Linux, what we are going to do is going to be basically pre-installed. Most of the distributions right now already have ROCm in a way, like in the box or something, so it will be pre-installed, or you'll have the ability to go to the distribution and get ROCm on the system. So with that, we're enabling developers to go 'out of the box,' and it starts working, without worrying about the right version of ROCm for the system [...] and then there will be tools on top of that.

AMD Representative: I just want to add a little bit more, because you said "is it for developers or consumers?" For me, that's the traditional way I would look at the world. What I believe is happening, and I've seen it, is a new field called 'practitioners,' like people that are not developers, but — anyone can mess around with AI. You don't need coding, you don't need engineering, to mess around with AI. So we're trying to find a way to get these people that have the curiosity to get up and running with no barrier to entry, because what we've found is that some of these things that Andrej talked about would require — and we have it in our slides — it requires, like, 17 steps and configurations and pointing to GitHubs and this and that. So what we've done, essentially, our value-add, is we've taken all that away, and it's just like, optional: install or don't install, and everything's up and running.

In my mind, it's trying to — for developers, probably not so much; they don't — they wouldn't find this interesting, and for the moms and pops, probably not. But then the kind of enthusiasts that are like, "what can I do with AI?" Like everyone's done the image generation, that's kind of like a cheesy thing you do, and that's kind of the end of it, but what if you can develop your own Tetris game or something like that, with these tools, just with five or six commands? That's the kind of people that we want to show that Ryzen and Radeon are absolutely perfect to do that, and we're going to get you up and running in one click.

Andrej Zdravkovic (AMD): Well, and I'm sure there will be — sometimes in any innovation, you actually have the idea why you're doing that, but what ends up very interesting with all these different things is you actually — the use of it usually surprises you. Like the invention of electricity had various ideas why this is going to be very important, but one of the most important factors for the industry, you actually don't have to build your factory next to the water, where you actually have your running power. So it's a huge difference. What I think is going to happen is the applications like, I'm going to do my taxes. I don't want to do my taxes asking ChatGPT, because then all my tax information is in the cloud, and I don't know if [Revenue Canada or the IRS], they are watching that. I don't know. People are paranoid. If I know that I disconnected my computer from the Internet, and now I can ask for help, and how do I, whatever, "pay less tax," right? That's the cool thing. I think a lot of people will find these kinds of applications very, very interesting over time. All of us are trying to do something more, and what he said, we are trying to get across the barrier. So this is really the AI adoption, and the thing that's going to get people to understand AI and this revolution better.

Journalist 1: So on that — you said that PyTorch would be installed, or that's an option. Would — I assume that that would also install ROCm and all the prerequisites? [AMD representative nods.] Okay. Just double-checking that that would — okay.

Andrej Zdravkovic (AMD): The whole story, because [...] We would want all our systems to be linked to the PyTorch stable. They're not yet, but they are linked to the latest and greatest steps, but in order to do the installation, you have to go to PyTorch, and then you need to pick the right version of ROCm. So that's what we are abstracting from you.
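
Ed. note: The version matching described here can be inspected from Python. The sketch below is our own illustration, not part of AMD's bundle: it reports whether the installed PyTorch is a ROCm build by reading `torch.version.hip`, and it falls back to a plain message if PyTorch is missing. The function name `describe_pytorch_backend` is hypothetical.

```python
def describe_pytorch_backend():
    """Return a one-line description of the local PyTorch build, if any."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version is None:
        return f"PyTorch {torch.__version__} (not a ROCm build)"

    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda namespace.
    gpu_state = "GPU visible" if torch.cuda.is_available() else "no GPU visible"
    return f"PyTorch {torch.__version__} built against ROCm/HIP {hip_version}; {gpu_state}"


print(describe_pytorch_backend())
```

A mismatch between the PyTorch build and the installed ROCm version is the kind of problem the bundle is said to hide from the user.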

Journalist 1: Okay. I'm asking because I have — the laptop that I've been using is the HP ZBook. It's the G1A, so I've been playing with it and sort of seeing how the ROCm experience has developed in the past six months or so. It's come a lot further on Linux than it has on Windows, and for developers, I think that that's probably the correct move. But I guess, the largest part — my audience is much more technical, and — sort of, the developer community. Something that I see as lacking is documentation, and while this abstraction is good for the people that you're talking about, for the people that — yeah, need to go, like — that do AI daily, and develop for AMD, the documentation is sort of spread around 15 different places, and there's no [collated] source that I can just go, "bookmark this page," and I can just reference that page.

Andrej Zdravkovic (AMD): Great feedback. Well, the only thing I can say is that, about six months ago, it was 30 places.

Journalist 1: Yes!

Andrej Zdravkovic (AMD): Yes, so I think we are moving in the right direction.

Journalist 1: ROCm from 2023 is completely unrecognizable to ROCm today. Let me put it that way.

AMD Representative: And in that time, ROCm was truly not a very high priority for our consumer products, our client products; that's changed, so that's — you'll see the investment and the throughput and the roadmap, bringing that stuff that we do for the MI products to the client side, the Ryzens and the Radeons.

Journalist 1: So then on top of that, will all libraries available for MI also be available for client? Because I know that that's —

Andrej Zdravkovic (AMD): That's a part. As you look at the consumer products, we just need to make sure that the libraries are optimized for these products. So will they be available? Yeah, the simple answer, if I were unfair, is yes, they are. But are they optimized? Not all of them are optimized yet, so we are gradually working towards that. It's a journey, because the ROCm system is huge, and we again, have a number of products, and especially if you get into consumer space, there are slight differences from product to product; the version of graphics, the available memory, the throughput — you have to take all that into consideration.

So it takes a lot of time to make sure that we do it justice, but optimizations are going to come down, and we are going then to start focusing on the use cases more than — We're going to try to understand what are the more important use cases on the consumer type platforms, because they are different. Like on the big MI systems, there is just a very clear set of use cases for OpenAI, a clear use case for Microsoft, for Oracle, and so on.

Journalist 1: You have your dozen large customers, and that's sort of what everybody else is doing in that space, whereas in the consumer space, you have millions of single customers, all doing different things.

Andrej Zdravkovic (AMD): Exactly.

Journalist 1: Roughly similar workloads, but all different workloads in their own way.

Andrej Zdravkovic (AMD): Yes. So that's why we need software product management to tell the teams what to do.

[group laughing]

AMD Representative: Yeah, no, for sure, and I mean, speaking of that, what we definitely would like to do, like this bundle; give us feedback. Like, hopefully even before we launch. I don't know if we can change anything during launch, but as Andrej said, the software, the version doesn't matter. It's an ongoing train, and we can always add things and improve things. So I'd really value your opinion, both your opinion on this AI bundle, in terms of "is it useful? Is it easy? Is it useless? Is it interesting?"

I need experts to help me understand how [well] it's going to be perceived, because I think I know what's going to happen: it's going to be full of gamers pissed off and saying, "Why are you putting AI in my system?" And I have the response ready: "well, it's optional!" If you don't want it? Don't do it. It's options, and so I want to try and find the balance of pissed off gamers versus these 'practitioners' that are going to say, "yeah, this is cool. Now I can play around with it instead of trying to read 30 documentations on how to get it set up and running." Anyways, hopefully you guys can play with it before we launch it, and just let us know what you think.

Journalist 1: If you want — and it's — I probably shouldn't say this — a specific green company with DGX Spark did a very good job; if you look at their GitHub, the DGX Spark playbooks.

AMD Representative: Yeah.

Journalist 1: It was ... really good. If you do — if that is something that comes with that Halo box that was announced, that would be great.

Andrej Zdravkovic (AMD): Thank you, and, yes — we are looking at — so again, the Halo [box] is going to, again, have two options. So there will be a Windows option, which is more or less going to be the follow-up of the AI bundle. So it is more like a user-friendly type of thing, allowing — and there will be developer tools, examples and so on, but on the Linux side, again, it's going to be — okay, there's a set of distributions, so only one distribution will happen yet, we haven't decided exactly — which is going to have ROCm come in the box, so you just get that, but it's going to come pre-loaded with distribution, but you also can, of course, use the distribution to load it and with some play examples.

Journalist 2: Terry, you had mentioned that there was a big shift in investment for ROCm on the client side—

AMD Representative: Not a shift, an addition.

Journalist 2: An addition. I'm wondering why that addition came to be. Was it just a bet on these 'practitioners' that you're talking about, or is there —

AMD Representative: We firmly believe, as AMD, that AI is inevitable. It's the future of computing across the board. There's so many scenarios, whether it be Andrej talking about his developers within AMD being 20% more productive, or my kid creating a school project in a week, that we — since we're the enablers, we're the chip manufacturers of AI, we also have a lot of input into the experience and the software that goes to enable it.

So the great value that I believe my team has added is simplicity, simplifying things. Everything we did with Adrenalin, with our first Control Panel, even with Catalyst and stuff like that, was with the mentality of simplifying tasks and getting people over the intimidation barrier. So the reason why we're investing in it is we believe that a lot of people will be "doing AI," whatever that means to you, and you don't need a supercomputer to do it. You can do it on your Strix laptop, or your Radeon 7090, or whatever it is. We want to enable the use of these things for these people. It's as simple as that in my mind.

Andrej Zdravkovic (AMD): It's also a progression, because when I was talking about ROCm, our focus with ROCm and MI-type products was high-performance computing; that's where we came from. So you're coming then, enabling Lawrence Livermore or supercomputers like that, and that was what we really pushed for. As we started shifting to enabling more, to enabling either smaller enterprises doing high performance, or then getting into enabling hyperscalers using AI, obviously the spectrum of possible applications expands. We are really the only ones that can fairly easily switch from one type of graphics, like MI graphics, to our Radeon graphics, and the CPUs also, and port between two systems very easily. It was just an expansion of our fulcrum, I'd say.

AMD Representative: I think the key is, it's not a redistribution of resourcing; it's additional resourcing in an area that we didn't play in before — which nobody played in before, because it didn't exist a few years ago. But I want my gaming crowd, my enthusiast crowd, to not hate AI, and if they want to use it, I'm going to make it easy for them to do it. Not forcing it down their throat, of course — I'm not going to say "This is it! You got to do it! Forget gaming! Don't do this!"

Journalist 2: At this point, you guys are the villain. The OpenAI DRAM situation; that's the villain right now.

AMD Representative: But in our history, we're always afraid of being the villain.

Journalist 2: Sure. [chuckling]

AMD Representative: AMD has always been like — we don't want to piss off the market, we're open source, we're responsive — at least, that's my perception; I don't know what the market says, but we really believe in the open sourcing model of everything, as you've seen, versus the CUDA versus the ROCm kind of thing. So it's in our mentality to provide but not force.

Andrej Zdravkovic (AMD): Collaboration and listening to the voice of customers.

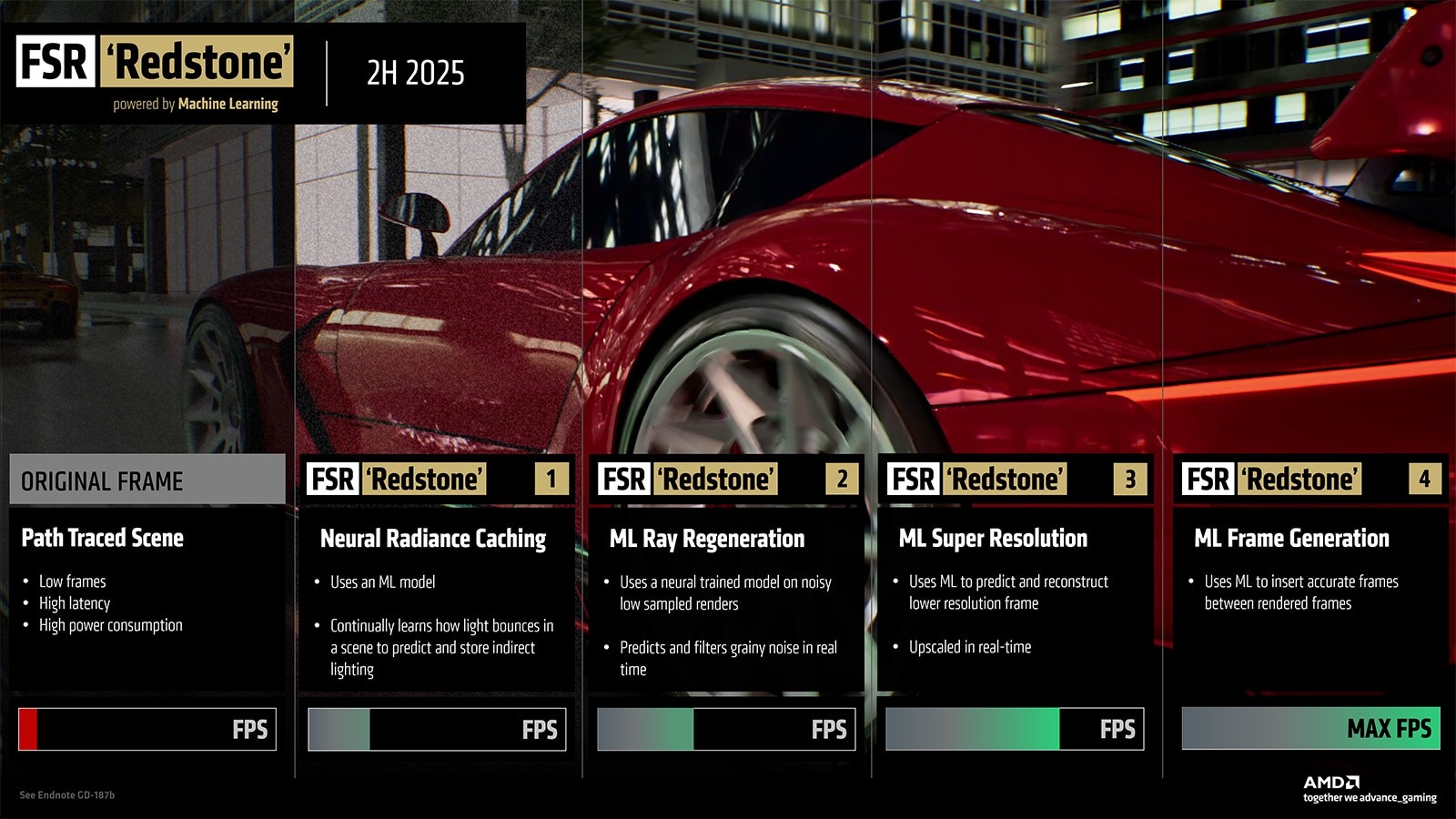

Journalist 1: So on the open source stuff, FSR 4; that — happened, with the whole release of the libraries, and then the sort-of "taking back." Are you guys still planning on open sourcing FSR 4?

AMD Representative: I don't — not that I know of...?

Andrej Zdravkovic (AMD): Well, when the accidental release happened, we were not ready for it. Our longer term plan is open sourcing, and you've seen that everything was on GPUOpen recently. At this point in time we are not open sourcing some of the core technology, but we are providing it in the form of a library for [...] of course, and we are finding the way to open source it in a way not to give advantage to our green friends.

Journalist 1: Fair, but also, I would say that they have a lot of manpower, and, in my opinion, at least, it is unlikely that they would need any code from you guys.

Andrej Zdravkovic (AMD): Well, you would be surprised —

Journalist 1: Oh, I know how much sharing of — how much open source is important. My point is more that it's sort of — I guess, in the mobile space — sort of this space that — and by mobile, I mean — right, because Arm's scaler, super resolution, is based on FSR — 1, I believe.

Andrej Zdravkovic (AMD): Right. [ed. Arm's Accuracy Super Resolution is actually based on FSR 2.]

Journalist 1: And that is a place where upscaling can be very useful, so it's more that side of it, in the more mobile space where — well, yes, you guys partnered with Samsung; that's not necessarily a major market for AMD in particular, and I would like to see those technologies sort of trickle down to super-power-constrained environments.

Andrej Zdravkovic (AMD): Let me just put it in very simple way: we are fully committed to work as openly as possible, and in some areas we are basically fully open, like the area of ROCm. In the areas of graphics, we started the GPUOpen with that idea, that we're going to share the technology, and we continue doing that. It's simply what happened with FSR 4, we were just not ready at that point in time; it was just a mistake, but open sourcing is on our path and in our mind.

Journalist 2: So just to clarify: that's the plan for FSR 4?

Andrej Zdravkovic (AMD): That's the long term plan. So it's — I cannot at this point in time commit to any timelines on that, because we were looking at that. But in general, we are — the whole technology, about FSR and Redstone, everything was based on the idea of, we are going to provide the industry with the best possible algorithms to use that.

Journalist 2: I appreciate you bringing up the question, because there's a dynamic right now, certainly with ROCm and CUDA that echoes a few years ago with — I mean, to a much different scale, but sort of echoes this openness approach with FSR and DLSS initially. So, yeah.

Andrej Zdravkovic (AMD): Well, FSR 4 is the first in the series of technology where we decided to hold it back a little bit, but the long-term plan is definitely being very open.

Journalist 1: So going back a few pieces, talking about client devices and getting software working on it. I think we both have this: What's the point of the NPU? [to Journalist 2] You probably get it from a different perspective than I hear —

Journalist 2: Sure, sure.

Journalist 1: — but I know my perspective is more on the "why NPU, because how do I program it?" Are there any plans for integrating NPU — being able to program the NPU via ROCm or via something like OpenCL? Or is it just going to be through something like DirectML or whatever Windows is doing right now?

AMD Representative: How much can I say? [laughing]

Andrej Zdravkovic (AMD): So you asked a few different questions, I get it. It's a fair set of questions. The way I'm looking at it is that I cannot tell you what are the long-term plans at this point. We just need to align within AMD when we are going to discuss the long-term plans. If you look at the NPU, the genesis of the NPU is the Windows experiences. I think it's a very interesting concept, having a device that allows Windows experiences to be better at the low power at a very — so, if you look at the limited number of use cases that are coming to Windows and accelerated with Windows, I think the device becomes very, very useful, especially because of the low power to achieve a whole lot. The programming of the NPU is a different construct, and that's a little bit of an issue. Everybody has their own idea how it should be programmed. We have our own test stack, used by some ISVs, through to Windows ML, and what Microsoft is trying to do right now is trying to provide the abstraction layer, and then basically a switch hidden somewhere in between, so you can go on a CPU, NPU, or a GPU.

I think that's going to bring the next level of usefulness, because with DirectML before, the idea was that you as a developer, gaming developer, if you want, you don't worry about — you just use that interface and everything works, and you have interoperability and everything. I don't think it's going to be like OpenCL or anything on the side. Microsoft has the right path right now of providing the high-level interface through which you're going to be able to access all these devices, and the actual acceleration platform software will probably not matter for the application developers. The question, if you look at developers who would like to write the code to accelerate these devices faster, I think the state of the art right now is that the three devices are different, and you will have to use different programming approaches for them, and that's, I mean, across the various manufacturers.
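
Ed. note: The "switch hidden somewhere in between" can be pictured as a preference list with a fallback. The sketch below is illustrative only and is not Windows ML's actual logic: it picks the first available backend from an ordered list. The strings are execution provider names used by ONNX Runtime; the function `pick_provider` and the preference order are assumptions made for this example.

```python
# Assumed preference order for this example: NPU first, then GPU, then CPU.
DEFAULT_PREFERENCE = (
    "VitisAIExecutionProvider",  # AMD NPU path in ONNX Runtime
    "DmlExecutionProvider",      # DirectML, typically the GPU path on Windows
    "CPUExecutionProvider",      # always-present fallback in ONNX Runtime
)


def pick_provider(available, preference=DEFAULT_PREFERENCE):
    """Return the first preferred provider that is present in `available`."""
    for name in preference:
        if name in available:
            return name
    raise RuntimeError("none of the preferred providers are available")


# Hypothetical machine with no NPU driver installed: falls through to DirectML.
print(pick_provider(["DmlExecutionProvider", "CPUExecutionProvider"]))
```

With ONNX Runtime installed, the `available` list could come from `onnxruntime.get_available_providers()`. The application code above the switch stays the same whichever device ends up running the model, which is the point being made about a high-level interface.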

Journalist 1: Yeah, I — the point is, so like — a CPU, you write C code, and it will run just about anywhere. A GPU, okay, you need to go more specialized: CUDA, ROCm, SYCL, OpenCL, whatever. And then you have the NPU, which is even more distinct — again, it sort of comes back to, like — currently, your NPU has a lot of TOPS, it has a lot of compute there, and I want — versus say, the GPU; for RDNA 3.5 specifically, which doesn't have a ton of compute, and I want to access that NPU compute. It's difficult to be able to do that, because there's no — like, I can't just write C code. I can't just go write ROCm on it; I need something else, and it's not very easy to use. So I guess my question is, are there any plans to improve that experience?

Andrej Zdravkovic (AMD): We are, at this point in time, focusing on enabling the Windows approach, so enabling the access to Windows ML, and of course, continuing to polish the Vitis libraries, which is a specific way of accessing the NPU. The problem is that the technology is moving very fast, and if you look at the architectures of our NPU versus, let's say, Qualcomm's NPU, it's completely different. That happens in the industry when things start and then things converge later over time. So I think we are at that point that these approaches haven't really converged yet. Microsoft is trying to put the layer in at the moment, but they don't have this year's answer for you.

Journalist 2: This is completely changing topics, but this is my own curiosity: when are we getting multi-frame generation and AFMF 2?

AMD Representative: Well, obviously I can't commit to when things are coming. All I can say is, you'll have to wait and see? We have a roadmap. We're not here to disclose upcoming stuff. I can't say it's coming three months, or six months, or two months, or 10 months, but — you know. Gaming is a focus. There's a lot of people with a lot of passion with AMD, and we've never stopped focusing on gaming or caring about what the community wants, and so, there's roadmaps. [...] Yeah, unfortunately, we can't. Upcoming things; I can't — primarily we're here to talk about either the AI bundle or just pick Andrej's brain about high-level software concepts, but I'll be happy to give you an update when we have it.

Journalist 2: Gotcha.

Journalist 1: So, I think one of the questions I have is with regards to, like — this may sound weird at first, but like, features within the software stack for gaming, specifically with regards to stuff like video encode? Are there any plans to speed up integration of FFmpeg and getting that stuff out quicker so that — because, like, RDNA 4 still doesn't have very good FFmpeg support.

AMD Representative: My simple answer is, I'm not seeing a huge demand or huge request for anything like that at this point. So if there is, and you're aware of it.

Journalist 1: Like, streaming, like, I know RDNA 3 has a fairly bad bug in it with the AV1 encoding. That caused a lot of headache, and while RDNA 4 fixes that, there's still — it feels like it's been — what, about, nine months since the launch? And it still feels like the software support isn't there for what I would argue are key fundamental features of the GPU in a gaming context. Because lots of people stream, lots of people. They record videos, they encode videos, and it feels like while for gaming, Radeon is great; if you want to do more than just that —

Andrej Zdravkovic (AMD): You know, that's very good feedback. So I have to admit, I don't know all the details, but that's something that would be interesting to discuss. [...]

AMD Representative: I stand by my initial answer. Like, I personally haven't seen a huge demand or upheaval or request for it, and so if you're saying that there is, I'd like to very much follow up.

Journalist 1: There was a fairly public backlash to the poor state of AMD encoding about fifteen, eighteen months ago. Remember that streamer?

Andrej Zdravkovic (AMD): Yeah, 15-18 months ago, I would — I think, after RDNA 4 I haven't seen anything.

Journalist 1: It still feels like — again, I don't know how much of that is inertia, but I've been trying to use FFmpeg, and it's not been the greatest experience.

Andrej Zdravkovic (AMD): Okay, well, we'd love your maybe even more detailed feedback, and Stacey, if you can help us, maybe coordinate that.

AMD Representative: Yeah, talk to me directly, or through Stacey, and let me just get your concerns; let me figure it out. If it's something we can fix, and if there is a feasible path to delivering it, I can tell you that we'll do it.

Journalist 1: Okay.

AMD Representative: It's as simple as that. It may take three months, six months, nine months, but if it's realistic, we will make it happen. There's no reason for us not to, right? Other than the fact that Andrej and I didn't — like, this is new information.

Andrej Zdravkovic (AMD): It's a lot of new information for me as well, and that's why I was not ready. We have deep video experts on the team, of course, and I'm sure they would want to get the feedback. [...] We need to give the right resources.

AMD Representative: Just go through Stacey and give me any type of feedback on the driver, not just the video, not just whatever things we're talking about. I've never really — I've been doing this for 25 years, and I know a lot of the journalists very, very well, and they ping me and they say, "you got to do this, or this, and that." So I'd like to encourage you guys to just — don't hesitate. You know, go through Stacey, and it'll come to me, and you'll see stuff showing up on the roadmap just because you suggested it, or recommended it. That's the way we work.

Journalist 2: I did have a clarification on the AI bundle; so, you said it'll show up when you go to install the latest driver; will it live somewhere in Adrenalin where you can go and get it later? If you don't choose to install it.

AMD Representative: We have — so we introduced, I guess we call it internally DIME; it's the Download and Install Manager. It's a new little icon in the task tray, which we launched [a few months ago] that keeps your system up to date. So in there, it'll have options to select things, deselect things, keep things up to date, don't keep things up to date. So we have this download manager that would have all that stuff in there. So, you don't only have one chance to do it; you can do it later. You can not ever do it, or you can do it and then get rid of it.

Journalist 2: So you won't manage anything inside Adrenalin? It's just inside that download manager?

AMD Representative: It's — for me, it's part of Adrenalin, but when you say Adrenalin, for me, it's the whole package. Are you talking about the UI?

Journalist 2: Yes.

AMD Representative: The UI, yeah — there's a link to the download manager within Adrenalin, and that takes you to that, but everything's going to be tied in together as much as possible. But yeah, the simple answer is yes, you'll be able to install, uninstall, update anything at any point.

Andrej Zdravkovic (AMD): Good thing you were here; I didn't know the answer to that.

AMD Representative: That's my area.

Journalist 2: Just wanted to make sure you don't have to DDU if you want to change your selection. [chuckling]

AMD Representative: So, we don't — I mean, the thing is, we have a pretty good uninstaller that eliminated the need for DDU, at least in my opinion.

Journalist 2: I have a lot of emails with Matthew and various other people that suggest otherwise. You still need to DDU.

AMD Representative: Well they tell you to use DDU, right?

Journalist 2: Yeah! Oh yeah.

AMD Representative: Yeah, I know — it's an internal thing. But anyways, it doesn't matter; if it works, it works. We did spend time to do everything DDU does within Adrenalin itself, so you don't have to go searching for a third-party tool, but enthusiasts are enthusiasts, and if that's the path they want to take I'm cool with that. Sure. I have no, no —

Journalist 2: I'm also swapping a bunch of components in; not a realistic scenario.

AMD Representative: Sure! I mean, you're a reviewer, right? So at some point you do want a clean system.

Journalist 1: You mean the average person isn't swapping their GPU three times a week?

[group laughing]

Andrej Zdravkovic (AMD): I think I mentioned that already; everybody, maybe short of Stacey, is a geek here; a complete geek. And you don't do things that the normal people do with their systems, and we need to think about that sometimes in the —

AMD Representative: Especially, this is a true story — so Andrej told my development team "I want to try this AI bundle," and they're like, babying him and saying make sure you do this and that, and he's like, "no, don't give me instructions. Just give it to me and let me play with it like a real person would."

Andrej Zdravkovic (AMD): We tend to do that. There are these 25 different switches that are going to do this different and better. Well, I'm interested in these 25 switches, and you probably aren't. 95% of the users just want to clean the damn thing. Terry and I are spending a lot of time discussing, like, how to actually make it [...] It's two different types of users: the everyday user who's just going to occasionally look at some feature and readjust it so the game plays better, or maybe get automatic suggestions and stuff, and somebody else who wants to go with a page with all the possible options and try them all. These are two different personas, and we need to cater to both.

[off-topic discussion]

Journalist 2: Okay, I do have a question for the handhelds and the experience of Adrenalin on handhelds, which isn't optimal, I would say — it's rough. Intel yesterday, when they were launching Panther Lake, they announced they're doing this whole handheld initiative, and I'm wondering if you were working with partners like Asus or Lenovo to optimize that experience of Adrenalin on handhelds.

AMD Representative: What part? What part of optimized; like the day zero, driver delivery?

Journalist 2: No, no; driver delivery is good. I'm more so talking about the additional features within Adrenalin, getting those things — maybe not through the GUI itself, but into — you know, Asus does it with Armoury Crate — into the actual software, and, more importantly, getting those features working with SteamOS, because we've seen a — especially from Lenovo —

AMD Representative: Totally separate. So let me park SteamOS, because that's Linux. The other stuff; we have something called ADLX. ADLX is our API for any OEM to take our software features and enable it through their UI, so there's no feature that's in Adrenalin that cannot be enabled in a third-party UI through ADLX. The OEMs can turn on any feature they want; any feature they don't want, they can hide, but that's the key, is the ADLX. With that API, any feature we have on the Windows graphics side is available. SteamOS, totally different beast. That's their own — they control their own destiny, they can turn on whatever features they want, but we would have to do some hand-holding or partnership, because it's not—

Andrej Zdravkovic (AMD): We do help them. RADV and all of this development is always a partnership; all of these open source projects, sometimes it looks like the community is doing everything, but in reality, they're usually doing 80% of the work.

AMD Representative: Can I deep dive on what you said, though? What do you think — what do you feel is not there for the handhelds that should be there? Is there something specific?

Journalist 2: On Linux? Or on—

AMD Representative: No, no, on the ASUS and the Lenovos.

Journalist 2: I think it's just a cleaner — I mean, ASUS has done a good job of it, actually integrating the most prominent features between RSR and AFMF, those being the key ones in that form factor. Lenovo hasn't. I haven't checked up on MSI lately, but when I originally checked up on MSI, it was bad. So just a cleaner unification of these features, because if I'm going to Best Buy or wherever to pick up one of these handhelds, and I hear that it has this AMD chip in it, I have a certain expectation of features that I've heard about that are very relevant in those form factors. Namely, RSR and AFMF.

Andrej Zdravkovic (AMD): That is probably more a question for OEMs than for us. The way you've just said it is — it tells you a little bit more about the DNA of the OEM itself. Lenovo is more of the production, commercial type of a system, so their handheld platform represents that DNA. The intention of Asus is much more consumer-oriented. So it's just something that — we can't help what we cannot control.

Journalist 2: Sure. I did want to follow up on the Linux thing, really, really quickly. Again, those features are still relevant on the Linux platform, and I would say they're — from my experience, that's been a —

Andrej Zdravkovic (AMD): I tend to agree with that. But again, that's the — we can provide the feedback to developers, well, let's put it that way. It's not us blocking implementation of any features. It is more of, again, that type of OEM using our stuff. But on both of these, do send us your feedback. Within my team, there is a group that does the OEM development and support, so picks up the stuff that Terry designs, and takes these ADLX features, again, 100% open. Everything that we do is open to any OEM, so they can pick it up; we just enable them as much as we can. So the more you give us, the more I can, at the next meeting, say, "okay, this is the feedback that we received."

AMD Representative: And not only that, we can also share with Microsoft, because Microsoft is extremely, extremely interested in working with us on beating Steam.

Journalist 2: They're doing not the best job with it right now.

AMD Representative: They're not, and so a lot of it is that they're looking for our expertise.

[Session ends]

Zak is a freelance contributor to Tom's Hardware with decades of PC benchmarking experience who has also written for HotHardware and The Tech Report. A modern-day Renaissance man, he may not be an expert on anything, but he knows just a little about nearly everything.

- Jake Roach, Senior Analyst, CPUs