AMD's CES 2026 Keynote Liveblog: Gorgon Point, Helios racks, and AI

Dr. Lisa Su is unveiling AMD's latest chips and tech in Las Vegas.

We're here at CES 2026, where AMD CEO Lisa Su is set to take the stage for the conference's opening keynote. The event will start at 6:30 PM PT / 9:30 PM ET and we'll be covering it live here with all of the latest news and updates.

We'll have to wait for the keynote to see what's next for AMD, though there has been plenty of speculation about products that could be announced. That includes "Gorgon Point" laptop processors and new X3D desktop processors. There aren't any rumors regarding consumer GPUs, which matches up with what we saw from Nvidia.

Of course, we're also expecting to hear about AMD's AI initiatives, both in consumer and enterprise markets, so perhaps there will be Instinct news? Stick with us and check back often, we'll be updating frequently.

Our team is seated both in the Palazzo ballroom at the Venetian Hotel in Las Vegas and virtually at home. Thanks for joining us, we are ready to go. The event is set to start at 6:30 PM PT / 9:30 PM ET.

AMD's keynote follows presentations from both Intel and Nvidia. Intel announced its Core Ultra Series 3 processors for mobile, so we're expecting to see how AMD fights back. But AMD has promised that this keynote will be about "the AMD vision for delivering future AI solutions," so we're sure to see plenty of enterprise talk as well, or even heavy technical talk like Nvidia's AI-focused conference earlier today.

We're almost there.

But first, Gary Shapiro, the head of the Consumer Technology Association, which runs CES, is introducing Lisa Su. She's no stranger to CES, having given this keynote before.

We've got an AMD video, narrated by a robot, it seems? It's showcasing how gaming, industrialization, and education are being affected by AI.

We're seeing AI-mapped genomes and self-driving cars. We're hearing about faster travel and better energy sources. The video also implied an AI-piloted plane.

"Video conceived by humans. Made possible by AI," it reads.

Dr. Lisa Su has taken the stage.

"It will come as no surprise that tonight is all about AI," she said. The theme is that "you ain't seen nothing yet."

AMD technology "touches the lives" of billions of people every day, says Lisa Su. I always appreciate that AMD includes "gaming" when looking at broad strokes because it is a large, legitimate segment that's so often ignored. — Jake Roach

Su sees 5 billion people using AI daily within five years. That's more than half of the world's population.

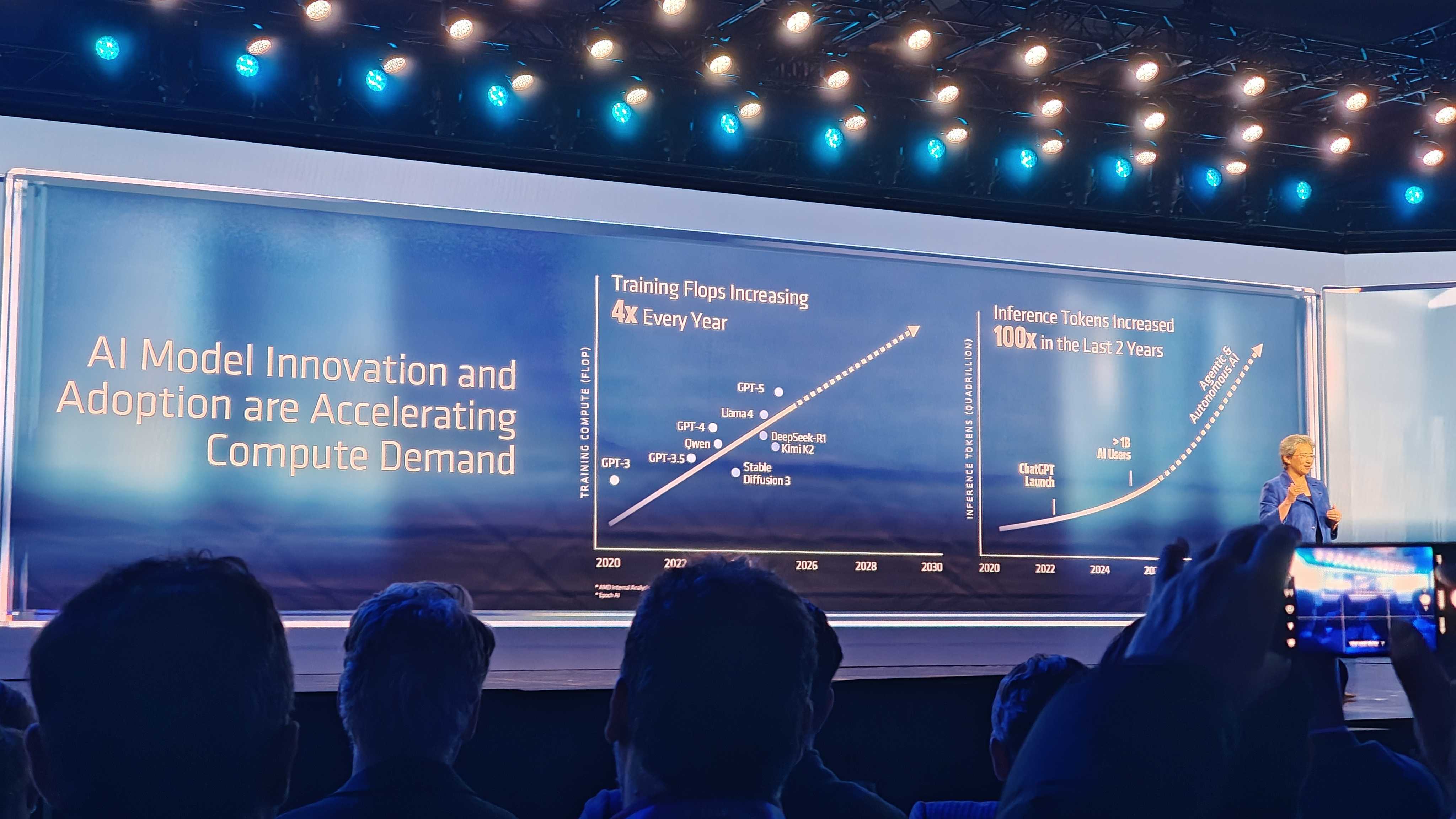

AMD says it "doesn't have nearly enough compute" for the rate of innovation, echoing Nvidia's claims earlier today.

Su wants to increase global AI compute to 10+ yottaflops in the next five years. A yottaflop is 10^24 floating-point operations per second, or a 1 followed by 24 zeroes. "There's never been a technology like AI," she says.

She says that only AMD can deliver AI across CPUs, GPUs, NPUs, and custom accelerators. We're starting with the cloud.

Su points out that most people experience AI in the cloud today.

"Every major cloud provider runs on AMD EPYC CPUs," she said. But they need more scaling for compute, and the increase in training and inference requires more AI hardware.

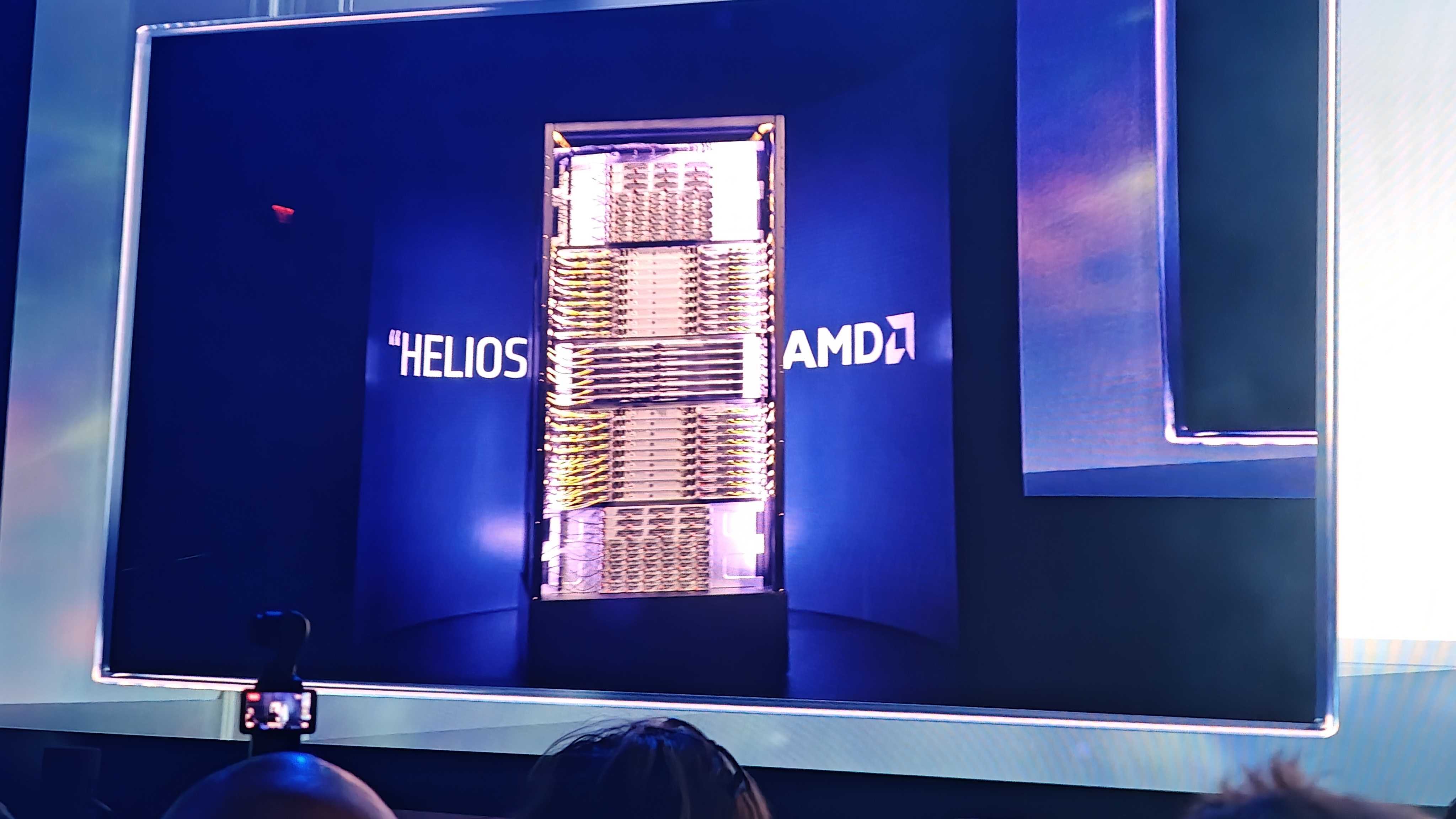

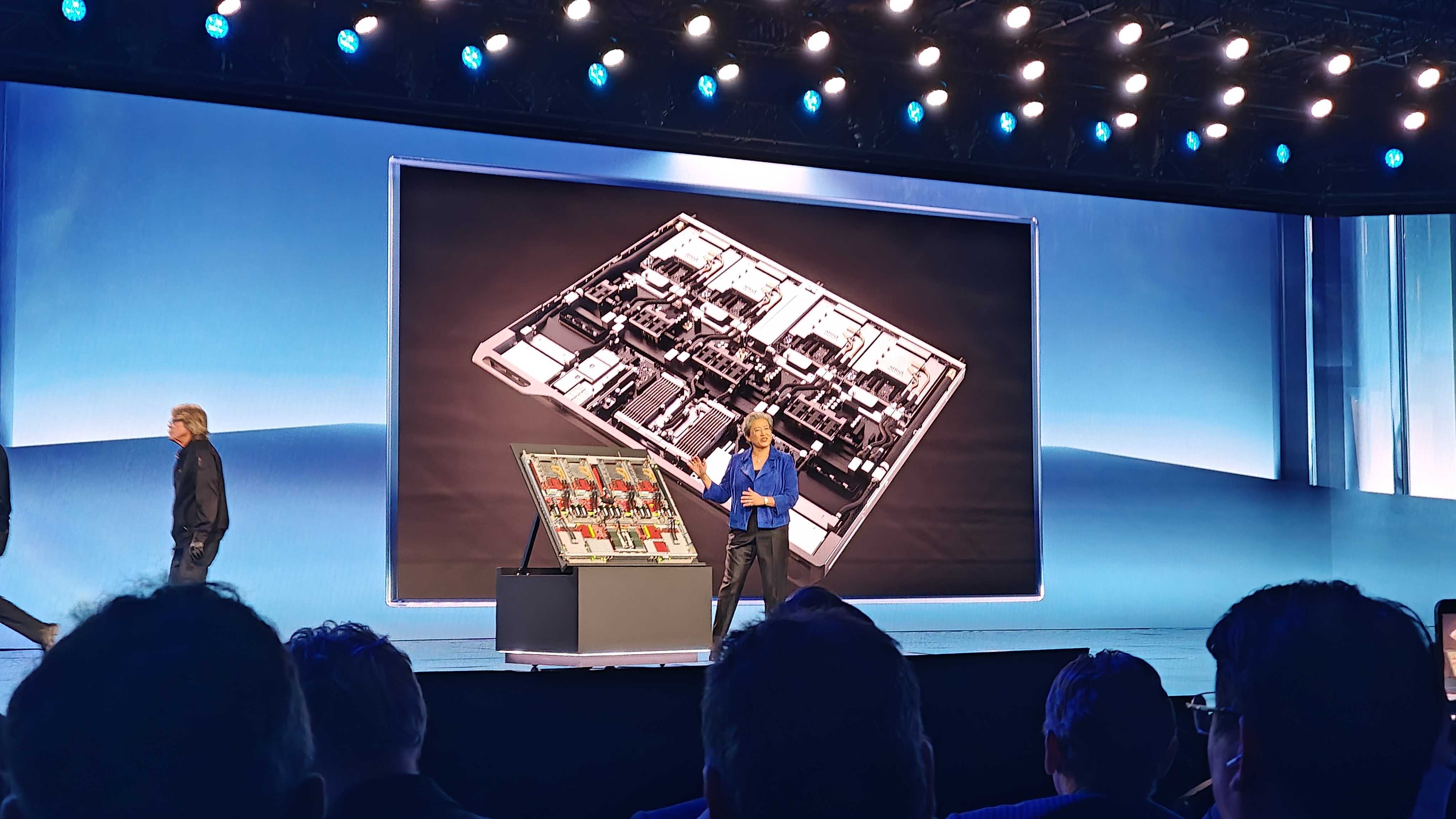

That is why AMD built Helios, a rack-scale platform "for the AI era." This was introduced in the middle of 2025.

That Helios platform includes HBM4 and up to 72 GPUs in the rack, built on 2nm and 3nm processes.

And there's one on stage!

It's a double-wide design that weighs nearly 7,000 pounds.

Here's the Helios compute tray. It includes four MI455X GPUs and EPYC CPUs, all of which are liquid cooled.

Each MI455X has 320 billion transistors and 432GB of HBM4. Four of those GPUs are in a compute tray, driven by a Venice EPYC CPU.

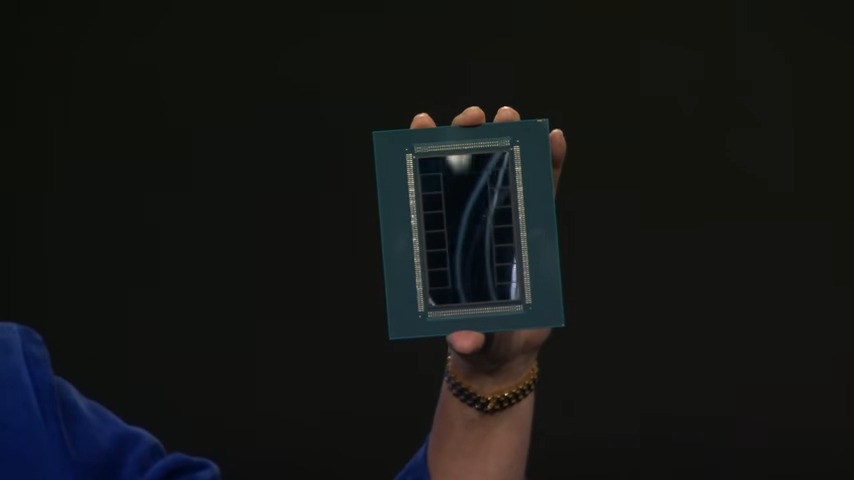

Here's the MI455X and Venice:

Venice is built on a 2nm process, with up to 256 Zen 6 cores. A full Helios rack totals 4,600 Zen 6 cores, 31TB of HBM4 memory, and 18,000 GPU compute units.
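As a rough sanity check, the totals quoted above line up with the per-GPU and per-tray figures if they are read as numbers for one full 72-GPU Helios rack. The sketch below assumes one Venice CPU per compute tray and decimal terabytes. Neither was stated on stage, and the constant names are only for illustration.

```python
# Figures quoted during the keynote
GPUS_PER_RACK = 72          # "up to 72 GPUs in the rack"
GPUS_PER_TRAY = 4           # four MI455X GPUs per compute tray
HBM4_PER_GPU_GB = 432       # HBM4 capacity per MI455X
CORES_PER_CPU = 256         # "up to 256 Zen 6 cores" per Venice CPU
RACK_COMPUTE_UNITS = 18_000 # quoted GPU compute-unit total

# Assumption (not stated on stage): one Venice CPU drives each tray
CPUS_PER_TRAY = 1

trays = GPUS_PER_RACK // GPUS_PER_TRAY
total_cores = trays * CPUS_PER_TRAY * CORES_PER_CPU
total_hbm_gb = GPUS_PER_RACK * HBM4_PER_GPU_GB
total_hbm_tb = total_hbm_gb / 1000  # decimal terabytes, an assumption
cus_per_gpu = RACK_COMPUTE_UNITS / GPUS_PER_RACK

print(f"Compute trays per rack: {trays}")
print(f"Zen 6 cores per rack:   {total_cores}")
print(f"HBM4 per rack:          {total_hbm_tb:.1f} TB")
print(f"Compute units per GPU:  {cus_per_gpu:.0f} (implied)")
```

Under those assumptions the rack works out to 18 trays, 4,608 cores, and about 31.1TB of HBM4, which is consistent with the rounded 4,600-core and 31TB figures.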

Helios is on track to launch later this year, Su says.

AMD claims a 10x performance increase with MI455X compared to MI355X.

Greg Brockman, president and co-founder of OpenAI, is on stage. He calls OpenAI an "overnight success seven years in the making," reminding everyone of the technical work behind AI.

He asked how many people use ChatGPT. Lots of hands raised.

Brockman says now we're seeing enterprise agents and scientific discovery "take off."

Su: "Every single time I see you, you tell me you need more compute."

It's great to hear from OpenAI if that's your game, but I don't want to gloss over that we just saw Zen 6 in the flesh for the first time, which likely sets up a consumer Zen 6 for later this year. — Jake Roach

ChatGPT made this slide that Greg Brockman is presenting, which is apparently good enough to show in the opening CES keynote.

Brockman says there are fights over compute at OpenAI.

"Is the demand really there?" Lisa Su asked Brockman, which is a fair question. OpenAI has been tripling compute over the past few years, and it sees that continuing going forward. He thinks that GDP growth will be driven by the amount of compute available.

He also claims that data centers can be "beneficial" to local communities, which is a tough claim to make.

Back to the hardware. In addition to the massive Helios, there's the drop-in ready MI440X platform and the MI430X paired with "Venice-X." — Jake Roach

Su has made the pivot to ROCm. We're onto software.

"We have day zero support" for the top models, Su adds.

Luma AI CEO and co-founder Amit Jain is on stage.

Jain says that there's a need for multi-modal models that can generate accurate physics, lighting, etc.

He says they are generating worlds and that this model, Ray3, can generate in 4K and HDR. The sizzle reel, at least, is pretty impressive.

This is extremely cinematic in the room, at least. But the music and cuts are driving a lot of that. AI weirdness becomes apparent when you have clips that are longer than five seconds. — Jake Roach

Jain says there's a new model on top of Ray3, called Ray3 Modify, which can take any real or AI footage and change it how you want. We're seeing subtle and massive changes to footage.

He calls it a human/AI hybrid production, where the "human becomes the prompt." You can use humans without sets, and then generate the film around that.

Jain is showing how an individual creative or small team could use agents to help build productions. Somewhere, someone is getting goosebumps at the Director's Guild.

"We bet on AMD very early on," Jain says, because most AI software runs "out of the box" on AMD.

He says most workloads run out of the box on AMD, which is certainly not what I've heard about ROCm. It's improved massively over the past several months, but it needed a lot of work when Luma's partnership began with AMD in 2024. — Jake Roach

Jain says multimodal AI will be the "backbone" for robotics.

These video models will help us with larger simulations. This sounds a lot like Nvidia's idea of AI factories, and the massive amount of resources it has put toward applying AI in healthcare, robotics, and manufacturing. — Jake Roach

Su says the AMD MI500 series is on track for 2027. It's based on the CDNA 6 architecture, uses HBM4E memory, and is built on a 2-nanometer process. AMD says that it will have delivered a 1000x increase in AI performance over the past four years.

We're moving on to PCs.

Su says AI PCs are delivering value across everyday tasks. That's arguable. She's showing demos, but not saying which software is being used for the generative stuff.

Su is reminding us that AMD had the first x86 NPU and the first x86 Copilot+ PC (never mind that Qualcomm stuff).

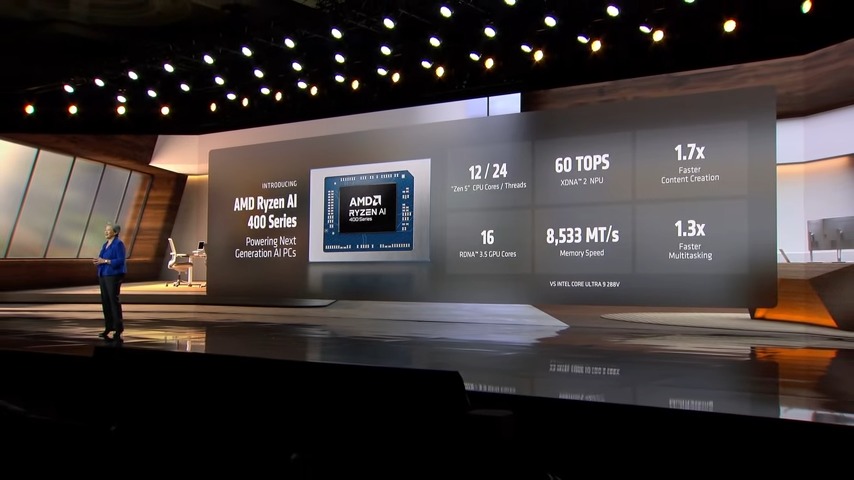

Now, the Ryzen AI 400 series is here. Here are the stats:

Ryzen AI 400 is official. It's a name bump, but the architecture hasn't changed. It's still Zen 5 and RDNA 3.5, just like in Ryzen AI 300, but with support for faster memory speeds.

The first Ryzen AI 400 PCs are launching later this month, and there are over 120 designs rolling out over the year. That's not far off Intel's 200+ for Panther Lake. — Jake Roach

Liquid AI's co-founder and CEO Ramin Hasani is on stage with Lisa Su. He's talking about quantizing models for local use without sacrificing quality.

We got another call out for AI on airplanes, which is making me scared to board my flight home after this week.

Hasani is now announcing LFM3.0, for real-time audiovisual interaction. That will come later in the year.

He says that it's always on, and can work on tasks proactively in the background. We're seeing a demo where a salesperson gets a calendar invite while working in Excel and lets an AI agent attend the meeting for them.

"Are you sure we can trust this agent? I'm a little worried there, Ramin," Su jokes. I think.

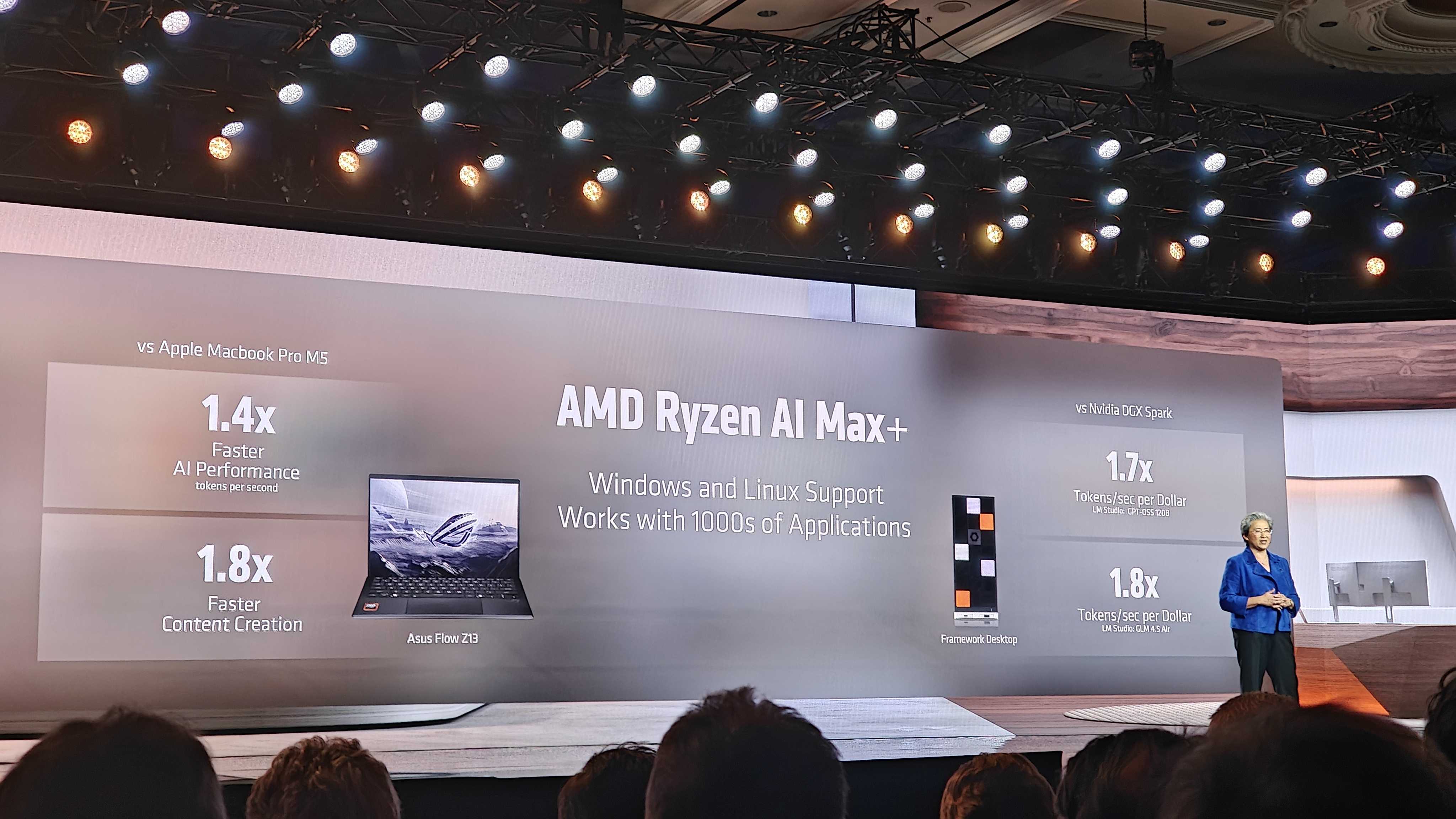

On to Ryzen AI Max.

AMD says that it delivers higher value than the DGX Spark, but it's measuring in tokens per dollar per second, and the DGX Spark is very expensive by comparison.

Speaking of, the Ryzen AI Halo. It looks like AMD's take on the DGX Spark, powered by the flagship Ryzen AI Max and 128GB of memory. It even comes with preloaded models, and it's coming in the second quarter of this year. You'll get GPT-OSS, FLUX.2, SDXL, and more out of the box. — Jake Roach

We're finally on to AMD's take on AI and gaming. Dr. Fei-Fei Li, co-founder and CEO of World Labs, joins Su on stage.

Li is discussing spatial intelligence, and that a "new wave" of AI technology can give machines "something closer to human-level spatial intelligence" to create 3D "or even 4D worlds."

World Labs wants to use gen AI technology to learn the 3D structure of the world, not just pixels on a screen. It might show up as pixels later, but it's informed by the 3D world. There's an example here that looks like it could be a video game, but it's created by an AI model. — Jake Roach

World Labs went to AMD's office and scanned it with phone cameras and no special equipment, Li says. Then, using their model, the company remade the Silicon Valley office.

Li says that what used to take months now takes minutes. She is showing the model's take on the Venetian Hotel, which is where the event is taking place. Li says this was made yesterday.

Li is bringing this back to AMD. Using ROCm and Instinct, World Labs' team was able to increase performance.

"I don't like to hype," says Dr. Li, and you can tell. There's been a lot of AI hype in this keynote and across CES, and you can tell the audience appreciates something more grounded and inspired. — Jake Roach

Li gets some applause when she says that we need to "develop AI that reflects true human values."

We're making a pivot to healthcare. Three AI CEOs are joining Lisa Su on stage.

Sean McClain, CEO of Absci, calls the way we currently develop drugs "archaic."

"We're able to start engineering biology," he says, comparing drugs to AMD's GPUs and Apple's iPhones.

McClain says they want "AI curing baldness." He is sitting next to a bald man, Jacob Thaysen, CEO of Illumina.

More importantly, he also wants to focus on women's healthcare. Absci is moving to MI355X, and McClain says that extra memory will really improve the rate of drug discovery. — Jake Roach

Jacob Thaysen, CEO of Illumina, says that the human genome is like a book with 200,000 pages, and it's contained in each of our cells. Genome sequencing is very data-intensive, he says, and EPYC CPUs are the "only way" for the company to function with the amount of data it needs to process. — Jake Roach

Ola Engkvist, executive director and head of molecular AI at AstraZeneca, is discussing using AI to judge candidate drugs, and then they can be modified in the lab. Using the AI reduces the number of experiments they have to do, which should increase speed.

He says they need hyperscaling to optimize their datasets.

Dr. Su is moving us to physical AI with a focus on automotive. She calls physical AI one of the "toughest challenges in technology."

You need high-performance CPUs, accelerators, and sensors in embedded applications.

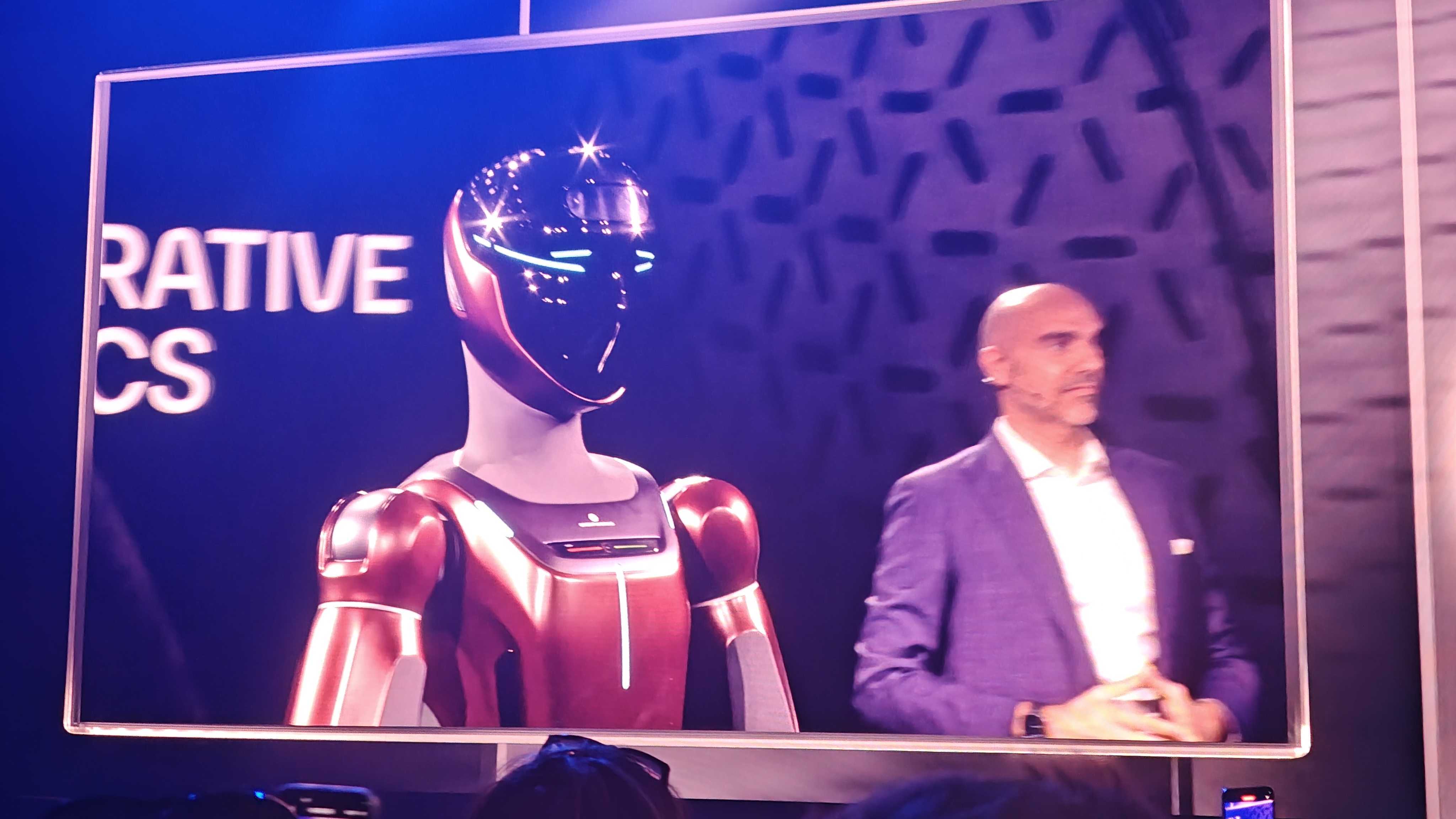

Generative Bionics CEO Daniele Pucci is on stage with Lisa Su. His work can't wait for the cloud, he says, so they need AMD's fastest chips for robotics.

"Our approach to physical AI is to build a platform around the humanoid." Pucci is getting into some interesting weeds about robotics, such as the fact that humans walk by falling forward. I guess that's true, but I don't like thinking about it. — Jake Roach

Pucci says robots also need a sense of touch. They also built shoes for the robot, which act as sensors. Lisa Su says this is "super cool," and yeah, robot shoes are indeed super cool.

So of course there's a robot on stage. Pucci says it's "Italian by design," and it does have a sports car look to it. It's called Gene 1.

Like The Fast and the Furious 9, AMD is taking this keynote into space.

AMD says its compute is used by many leading space agencies. John Couluris of Blue Origin is joining Lisa Su on stage.

"Space is the ultimate edge environment." Couluris points to mass constraints, radiation in space, and other factors that aren't relevant in other embedded applications. — Jake Roach

We're up to what Lisa Su says is the last chapter of the night: Science.

She is detailing supercomputers running global models to predict weather forecasts, create cleaner fuels, and model how viruses mutate and evolve.

Michael Kratsios, White House science advisor, is on stage with Lisa Su. He's discussing the Genesis Mission, which brings together supercomputers, laboratories, and scientists to apply AI to science.

What must the US do to lead in AI, Lisa Su asks. He points to President Trump's AI action plan and its three points: removing regulations, expanding energy, and exporting American technologies. — Jake Roach

The top three teams of Hack Club, a hackathon event, were brought out to CES by AMD to experience it for the first time. Now, the project leaders are joining Lisa Su and Kratsios on stage. One of the projects was for a robot barista, and it runs entirely on an AMD laptop. — Jake Roach

Su is wrapping up. She's summing up AMD's technology, ties with industry leaders, and public-private partnerships.

There's no mention of the Ryzen 7 9850X3D, which the company announced via press release during the conference. You can learn more about that here.

That's a wrap! Thanks for sticking with us!
